[HAIP] Transparency Without Teeth: Making the Hiroshima AI Process a Practical Bridge

- HAIP increases transparency but does not yet change behavior.
- Voluntary reporting without incentives becomes symbolic.
- Real impact requires linking HAIP to audits and procurement.

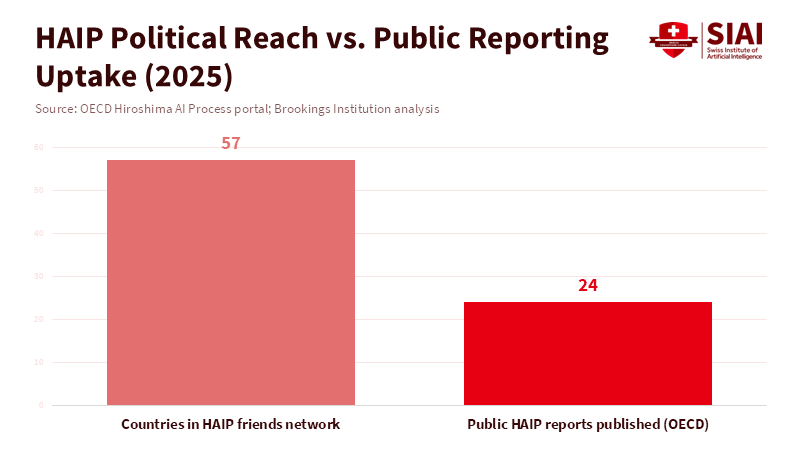

By June 2025, the OECD reported that twenty organizations from various sectors and regions had voluntarily joined the HAIP reporting process, while many more countries had joined the HAIP friends network. The contrast highlights a gap between broad diplomatic support and the small number of organizations actually reporting: political momentum often outpaces real-world adoption. Reporting is useful, but it is not a complete governance plan. For HAIP to reduce real harms, it needs three things: a small, machine-readable set of comparable fields; affordable, modular checks for higher-risk systems; and clear procurement or accreditation rewards that change developers' incentives. Without these steps, HAIP risks becoming a ritual of openness that reassures the public but does not change developer behavior at scale.

Introducing HAIP and its promise

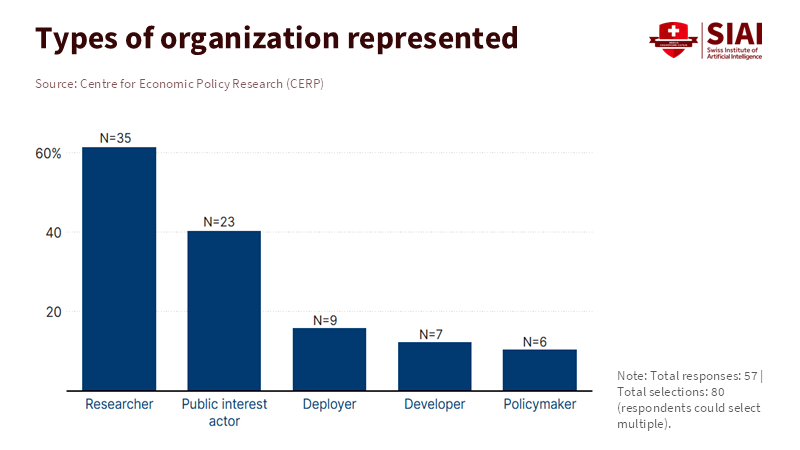

The Hiroshima AI Process started with a simple idea: rapid advances in AI called for a shared starting point and a way for organizations to show how they manage risk. The G7 created a code of conduct and guiding principles, and the OECD developed a reporting framework so organizations could share their governance and testing practices. The mechanics are straightforward. Developers explain how they identify risks, what tests they run, who approves them, and how they handle incidents. These accounts are published so that peers and buyers can learn from them. The design suits a fragmented global system because it uses openness and peer learning as the first step in governance.

HAIP also offers immediate value for teaching. For teachers and policy trainers, the portal provides real, up-to-date case documents for students to analyze. These reports turn abstract ideas like “governance” and “risk” into concrete evidence that can be read, checked, and compared. However, narrative reporting leads to learning and better practice only if it is usable. If reports are too long, inconsistent, or hard to interpret, they will help researchers more than buyers. That is why HAIP should be used both as a living teaching resource and as a testbed for new methods. Students can extract key fields, compare reported tests, and design follow-up audit plans. This approach turns HAIP from a collection of essays into a set of practical governance tools.

Functionality and mechanics of HAIP reporting

HAIP is meant to show how institutions work, not just to rate one system. The OECD reporting framework covers governance, risk identification, information security, content authentication, safety research, and actions that support people and global interests. In 2024, the pilot phase included companies, labs, and compliance firms from ten countries. In 2025, the OECD opened a public portal for submissions. Both steps favor depth and method over quick comparisons. The form asks who conducted the red-teaming, how extensive the tests were, whether third-party evaluators were involved, and how incidents were handled. These are specific points that can be taught, checked, and improved.

This design has three main effects. First, it creates a useful record of what organizations actually do, giving teachers and researchers real data to use and compare. Second, it supports context-sensitive learning, since a test that works in one setting may not work in another. Third, it imposes a cost barrier: writing a public, institution-level report takes time for legal review, test documentation, and senior approval. The OECD and G7 noticed this during their pilot and mapping stages and have made supporting tools and mapping a priority. Broader participation, however, still depends on lowering these costs. The policy challenge is clear: keep the parts of the form that teach and reveal information, and add a small, standard set of machine-readable fields that buyers and researchers can compare at scale.
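To make the idea of a small, machine-readable core concrete, here is a minimal Python sketch of what one comparable record might look like. The class `HaipCoreRecord`, its field names, and the allowed values are hypothetical illustrations, not the OECD's actual reporting schema. The point is only that a handful of typed, validated fields can be compared across many reports in a way long narrative answers cannot.

```python
import json
from dataclasses import asdict, dataclass
from datetime import date
from typing import List

# Hypothetical controlled vocabularies; the real HAIP framework defines no such codes.
ALLOWED_TIERS = {"low", "high"}
ALLOWED_RED_TEAM = {"none", "internal", "external", "both"}


@dataclass
class HaipCoreRecord:
    """Illustrative machine-readable core attached to a narrative HAIP-style report."""
    organization: str
    system_name: str
    risk_tier: str                     # one of ALLOWED_TIERS
    red_team_by: str                   # one of ALLOWED_RED_TEAM
    third_party_evaluation: bool
    last_test_date: str                # ISO 8601 date, e.g. "2025-03-31"
    incident_process_documented: bool

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the record is well formed."""
        problems = []
        if not self.organization.strip():
            problems.append("organization is empty")
        if self.risk_tier not in ALLOWED_TIERS:
            problems.append(f"unknown risk_tier: {self.risk_tier!r}")
        if self.red_team_by not in ALLOWED_RED_TEAM:
            problems.append(f"unknown red_team_by: {self.red_team_by!r}")
        try:
            date.fromisoformat(self.last_test_date)
        except ValueError:
            problems.append(f"last_test_date is not an ISO date: {self.last_test_date!r}")
        return problems

    def to_json(self) -> str:
        """Serialize with sorted keys so records diff and compare cleanly."""
        return json.dumps(asdict(self), sort_keys=True)


record = HaipCoreRecord(
    organization="Example Lab",
    system_name="example-model",
    risk_tier="high",
    red_team_by="external",
    third_party_evaluation=True,
    last_test_date="2025-03-31",
    incident_process_documented=True,
)
print(record.validate())  # []
print(record.to_json())
```

Because every record shares the same fields, a buyer could filter many reports for, say, high-tier systems without third-party evaluation, while the narrative sections remain available for context.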

Limits: divergence, incentives, and checks

HAIP works in a world with many national rules. Europe uses strict, risk-based laws. The United States uses voluntary frameworks, market incentives, and security measures. China takes a directive, pilot-led approach focused on state goals and industry growth. HAIP’s strength is its ability to work across these different systems. Its weakness is that it is voluntary and not enforced. When countries have different priorities, like rapid growth or strategic independence, a voluntary report is unlikely to change behavior. In practice, agreeing on words does not mean agreeing on incentives.

The next point is practical. Voluntary reports matter only when there are economic rewards or penalties. If buyers, funders, or procurement teams treat a verified HAIP report as a fast track to contracts, developers have a reason to disclose. If not, many will choose not to take part. The early record of submissions, with few reports despite strong political support, shows the gap between public endorsement and real market incentives. In short, transparency needs to be rewarded to make a difference.
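The incentive argument can be stated as a simple break-even check. The sketch below assumes, purely for illustration, that a verified report's only benefit is a higher chance of winning a contract; the function `disclosure_pays` and all figures are hypothetical, not estimates drawn from HAIP data.

```python
def disclosure_pays(contract_value: float, win_prob_uplift: float, reporting_cost: float) -> bool:
    """Return True if the expected procurement gain from a verified report covers its cost.

    Simplifying assumption: disclosure changes nothing except the probability
    of winning one contract, raising it by `win_prob_uplift` (0 to 1).
    """
    if not 0.0 <= win_prob_uplift <= 1.0:
        raise ValueError("win_prob_uplift must be between 0 and 1")
    expected_gain = win_prob_uplift * contract_value
    return expected_gain >= reporting_cost


# Hypothetical figures: a 500,000 contract and a 30,000 reporting cost.
print(disclosure_pays(500_000, 0.0, 30_000))   # False: buyers give reports no weight
print(disclosure_pays(500_000, 0.10, 30_000))  # True: 50,000 expected gain
print(disclosure_pays(500_000, 0.05, 30_000))  # False: 25,000 expected gain
```

When buyers give verified reports no weight, the uplift is zero and no positive reporting cost is ever recovered. Procurement rewards raise the uplift; funded tooling lowers the cost. Both move developers toward disclosure.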

Verification is the hardest part. Narrative reports can be vague. An organization might describe its tests in a way that sounds strong but lacks real evidence. The solution is a modular approach. For low-risk systems, self-attested reports with a small machine-readable core may be enough. For high-risk areas like healthcare, critical infrastructure, or justice, there should be accredited technical checks and a short audit statement. This tiered model keeps costs manageable and focuses the most thorough checks where they are needed. The policy tool here is not force, but targeted standards and public funding to help small teams take part without high costs. Results from pilots and procurement trials will show which approach works best.
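The tiered model can be sketched as a mapping from deployment domain to required checks. The high-risk domains below are the examples named in the paragraph; the enum, function names, and check labels are hypothetical, and a real scheme would define tiers through accredited standards rather than a hard-coded set.

```python
from enum import Enum
from typing import List


class Tier(Enum):
    LOW = "low"
    HIGH = "high"


# Hypothetical high-risk domains, taken from the examples in the text.
HIGH_RISK_DOMAINS = {"healthcare", "critical_infrastructure", "justice"}

# Every report carries these, regardless of tier (hypothetical labels).
BASE_CHECKS = ["self_attested_report", "machine_readable_core"]
# Extra checks reserved for high-risk deployments (hypothetical labels).
HIGH_RISK_CHECKS = ["accredited_technical_check", "short_audit_statement"]


def assign_tier(domain: str) -> Tier:
    """Classify a deployment domain; anything not listed defaults to LOW."""
    return Tier.HIGH if domain in HIGH_RISK_DOMAINS else Tier.LOW


def required_checks(domain: str) -> List[str]:
    """Return the checks a report needs; the high tier adds to the base set."""
    checks = list(BASE_CHECKS)  # copy so the shared base list is never mutated
    if assign_tier(domain) is Tier.HIGH:
        checks.extend(HIGH_RISK_CHECKS)
    return checks


print(required_checks("marketing"))
print(required_checks("healthcare"))
```

Defaulting unknown domains to the low tier keeps costs down, but it is a policy choice: a more cautious scheme might default to high and let organizations argue their way down.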

What teachers and institutions should do now

For teachers, HAIP is a hands-on lab. Assign an HAIP submission as the main document. Have students extract key data, such as test dates, model types, red-teaming details, whether third-party testing was conducted, and the incident-handling timeline. Then ask them to write a short audit plan to check the claims. This exercise builds two important governance skills: turning narrative claims into verifiable checks, and designing simple evidence requests that protect trade secrets.
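For a class that wants to automate the second half of this exercise, the sketch below turns a student's extracted fields into a draft audit plan: each stated claim becomes an evidence request, and each missing field becomes a gap to query. The field keys, the evidence wording, and the sample values are hypothetical teaching choices, not part of the HAIP form.

```python
from typing import Dict, List, Optional

# Fields students extract from one HAIP submission (hypothetical keys).
KEY_FIELDS = [
    "test_dates",
    "model_types",
    "red_team_details",
    "third_party_testing",
    "incident_timeline",
]

# Evidence requests chosen to verify claims without demanding weights or source code.
EVIDENCE_REQUESTS = {
    "test_dates": "a dated, redacted summary of the test log",
    "model_types": "a model card or system description",
    "red_team_details": "a scope memo naming who tested, how many testers, and for how long",
    "third_party_testing": "a signed statement from the external evaluator",
    "incident_timeline": "an anonymized incident record with detection and response times",
}


def audit_plan(extracted: Dict[str, Optional[str]]) -> List[str]:
    """Map each key field to either a verification step or a gap to raise."""
    plan = []
    for field in KEY_FIELDS:
        claim = extracted.get(field)
        if claim:
            plan.append(f"VERIFY {field} ('{claim}'): request {EVIDENCE_REQUESTS[field]}")
        else:
            plan.append(f"GAP {field}: not stated in the report; ask the organization")
    return plan


# Hypothetical student extraction from a single report.
student_notes = {
    "test_dates": "Q1 2025",
    "model_types": "large language model",
    "red_team_details": "internal team, two weeks",
    "third_party_testing": None,
    "incident_timeline": "reported within 72 hours",
}
for step in audit_plan(student_notes):
    print(step)
```

Keeping the evidence requests to summaries, memos, and signed statements mirrors the second skill in the exercise: asking for enough to verify a claim without asking for trade secrets.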

For institutions like universities, hospitals, and research labs, HAIP should serve two purposes. First, build internal records that align with HAIP’s main areas so that decisions can be audited. Second, link disclosure to procurement and contracting: ask outside vendors for HAIP-style reports on sensitive projects, and make governance maturity part of grant and vendor selection. To support both, invest in a small legal and technical team that can prepare solid, redacted reports. The goal is clear: use HAIP to improve institutional practices and make verified reporting a real advantage in procurement and partnerships.

From transparency to policy design: small, testable moves

What is a practical way forward? Start with a pilot in one sector, such as healthcare or education. The OECD suggests that any AI system used for decisions in this sector should require verified HAIP attestation for a year, with funding to help small providers produce accurate reports. Track three outcomes: whether verified reporting reduces duplicate checks, catches real harms or near misses, and speeds up safe procurement. If the pilot works, expand it through mutual recognition and simple procurement rules. At the same time, fund open-source tools that turn internal logs into safe, redacted attachments and fill in the basic machine-readable fields. Together, these steps make reporting useful in practice. The approach is modest and experimental, matched to the scale of the challenge: not sweeping global law, but tested, repeatable local policy that builds trust and evidence.
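As a rough illustration of the open-source tooling such a pilot might fund, the sketch below redacts email and IPv4 addresses from internal log lines and counts entries to fill a few core fields. It assumes a hypothetical log format of `YYYY-MM-DD KIND message` with kinds such as `REDTEAM`, `EVAL`, and `INCIDENT`; real logs, redaction rules, and field names would differ, and pattern-based redaction is unlikely to be sufficient on its own.

```python
import re
from collections import Counter
from typing import Dict, List, Optional, Union

# Simple patterns for two kinds of personal data; a real tool would need many more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")

TEST_KINDS = {"REDTEAM", "EVAL"}  # hypothetical entry types that count as tests


def redact(line: str) -> str:
    """Replace email and IPv4 addresses with placeholders."""
    return IPV4.sub("[IP]", EMAIL.sub("[EMAIL]", line))


def fill_core_fields(log_lines: List[str]) -> Dict[str, Union[int, Optional[str]]]:
    """Derive a few hypothetical core fields from lines shaped 'YYYY-MM-DD KIND message'."""
    kinds: Counter = Counter()
    test_dates = []
    for line in log_lines:
        parts = line.split(maxsplit=2)
        if len(parts) < 2:
            continue  # skip malformed lines
        day, kind = parts[0], parts[1]
        kinds[kind] += 1
        if kind in TEST_KINDS:
            test_dates.append(day)
    return {
        "red_team_sessions": kinds["REDTEAM"],
        "incidents_logged": kinds["INCIDENT"],
        # ISO dates sort correctly as strings, so max() gives the latest test.
        "last_test_date": max(test_dates) if test_dates else None,
    }


logs = [
    "2025-01-10 REDTEAM session with alice@example.com from 10.0.0.5",
    "2025-02-03 EVAL external benchmark run",
    "2025-02-20 INCIDENT output flagged by bob@example.org",
]
attachment = [redact(line) for line in logs]
print(attachment[0])  # 2025-01-10 REDTEAM session with [EMAIL] from [IP]
print(fill_core_fields(logs))
```

Computing the counts from the raw logs and publishing only the counts plus the redacted lines keeps the summary accurate while limiting what leaves the organization.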

The Hiroshima AI Process did what it set out to do diplomatically: carve out a shared space for principles and a voluntary channel for public reporting. According to Brookings, the HAIP Reporting Framework's early history highlights both its achievements in international diplomacy and the practical challenges it faces. The answer is neither to replace HAIP with stricter laws nor to discard it, but to build on its foundation as an innovative global AI governance tool. The work is to pair it with small, practical policy tools: a short, machine-readable core, a tiered regime of checks for higher-risk systems, procurement incentives that reward verified reports, and funded tools that lower the cost of participation. For teachers, HAIP is already a classroom-ready corpus. For policymakers, it is scaffolding that can support tested, sectoral pilots. If governments and institutions take these steps now, reporting will stop being a ritual and start changing behavior.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Brookings Institution. 2026. “The HAIP Reporting Framework: Its value in global AI governance and recommendations for the future.” Brookings.

East Asia Forum. 2025. “China resets the path to comprehensive AI governance.” East Asia Forum, December 25, 2025.

Ema, A.; Kudo, F.; Iida, Y.; Jitsuzumi, T. 2025. “Japan’s Hiroshima AI Process: A Third Way in Global AI Governance.” Handbook on the Global Governance of AI (forthcoming).

OECD. 2025. “Launch of the Hiroshima AI Process Reporting Framework.” OECD.

World Economic Forum. 2025. “Diverging paths to AI governance: how the Hiroshima AI Process offers common ground.” World Economic Forum, December 10, 2025.
