The Tools Will Get Easier. Directing Won’t: AI Video Streaming’s Real Disruption

AI video streaming is mainstream; the tools are getting easier, but directing still matters.

Without rights, provenance, and quality control (QC), slop scales and trust falls.

Train hybrid storytellers and set standards to gain speed without losing the story.

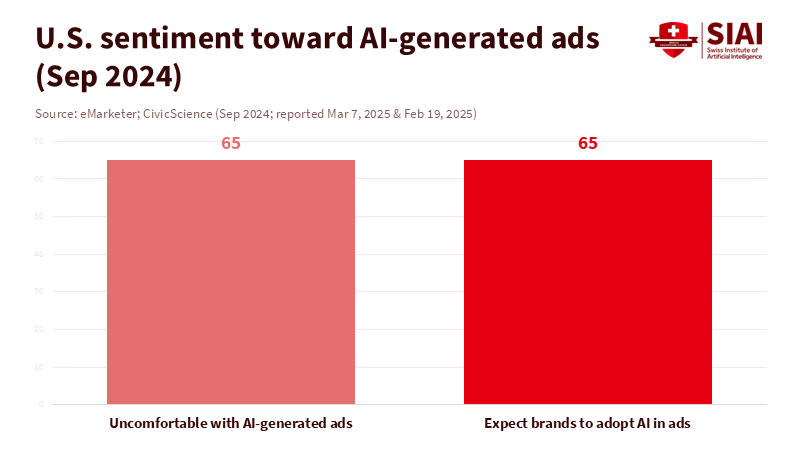

In December 2025, the industry took notice when Disney and OpenAI announced a deal: Disney agreed to invest $1 billion in OpenAI and to license more than 200 characters from Disney, Marvel, Pixar, and Star Wars for use in OpenAI's Sora under a three-year agreement. The deal shows two things. First, AI video is no longer just an experiment; it is a real distribution channel, complete with familiar characters and a direct route into people's homes. Second, making videos more easily does not make them better. We are going to see far more videos that look like content, behave like content, and please algorithms like content, yet miss the mark on story, ethics, and craft. This isn't meant to bash AI video. It's a reminder to treat it as a new kind of camera, not a replacement for the director. Audiences are already wary: surveys from 2024 and 2025 found that most people are uneasy about AI-generated media. If AI video takes off without rules, quantity will beat quality. But if we design the system well, we can lower costs and risks while actually improving stories.

AI video is a revolution in tools, not in talent

The technology has clearly improved: models can now generate short, decent-quality clips (Sora launched with 1080p output of up to 20 seconds), remix footage, and keep shots visually consistent. Studios and streamers are no longer just experimenting; they are writing rules for when and how these tools may be used in production. Adoption is moving fast. By mid-2024, most organizations surveyed said they were regularly using generative AI, and 2025 surveys showed media companies increasing their AI investment across the board, from story planning to visual effects and translation. This is a fundamental change in tooling that speeds up production and makes experimentation cheap. But it is still a change in tools. The important work, such as taste, structure, pacing, performance, and ethics, still needs a human. You can't solve those problems with a simple prompt. It takes the same judgment that has always made movies watchable.

This trend will resemble what happened in data analysis when powerful statistical software reached people who could run it but did not know enough to design good studies. They got results fast, but the results weren't always accurate. With video, we'll have people who can assemble scenes but know little about directing. That doesn't make their work useless. It means the industry needs to define what success looks like. Think of these tools as power steering, not autopilot: they make the wheel easier to turn, but they don't choose the destination or take the curves for you. If we assume easier means better, we'll end up with a flood of almost-good movies that feel empty. And because AI video streaming delivers this output straight to viewers, mistakes are magnified.

AI video needs some professional help to be good

We've already seen examples of this approach. Netflix has used AI for some time, for example to generate anime backgrounds, and has tested generative AI for lip-sync and visual effects. These are narrow tasks within a larger pipeline, not a robotic takeover. The gains come from the small stuff: image cleanup, translation, and timing. Streamers and studios have also published guidelines for using AI in production. This is how it should work: humans direct, machines assist, and everything is documented. Within those limits, AI video can work well for short fan clips or controlled story experiments that don't mislead viewers.

The risks are just as clear. People don't like sloppy AI content: a 2025 survey found that most consumers are uncomfortable with AI-generated ads, and audiences are already used to scrolling past low-quality content. The problem will likely get worse as AI-generated video attempts longer stories. Viewers will forgive glitches in a short clip, but not 45 minutes of stiff performances, an incoherent plot, or mismatched lip-sync. A defined process lets teams set standards: quality checks that confirm everything looks right and that the performances serve the story. AI can create a room, but it can't tell you whether it feels real.

AI video will reshape money, rights, and distribution

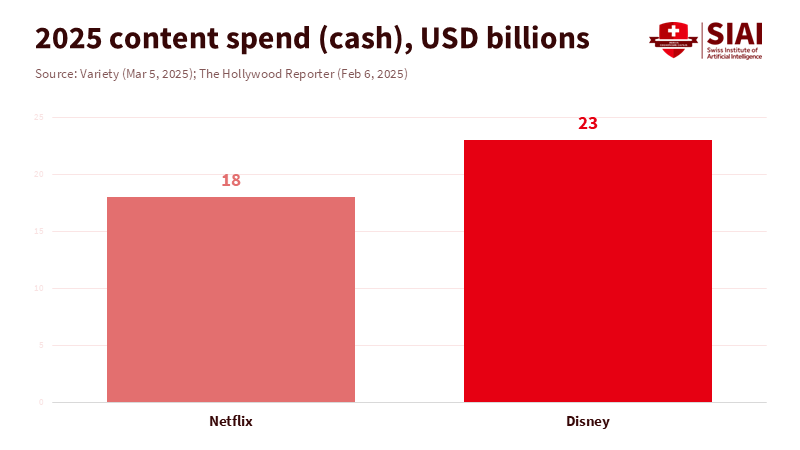

Follow the money. The largest companies spend heavily on content: the top six global content providers spent around $126 billion in 2024, more than half of all content spend. Individual budgets remain large. Netflix planned about $18 billion for 2025, and Disney projected around $24 billion for fiscal 2025. AI tools can shorten post-production, reduce the need for reshoots, and translate content into other languages without adding staff. They also open new advertising opportunities. PwC projects that global entertainment and media revenue will reach around $3.5 trillion by 2029, with more ads running on streaming services and targeted with AI. AI-generated video fits here: short clips to build interest, dynamic ads, and quick tests of new ideas.

Rights protection will be key. The Disney–OpenAI deal sketches the model: license characters but not actors' likenesses or voices, control distribution through an approved channel, and build in safety controls. This is likely how large companies will manage AI media: by letting fans play within defined limits. For policy, this means stronger watermarks, clear provenance information, and standards that work across tools. For unions, it means contracts that guarantee actors' consent and compensation when AI uses their faces or voices. It also means labels that viewers actually understand. If AI video is going to catch on, it needs to be both cheap and clearly licensed.

How educators and politicians should govern AI video

Education needs to change now, and film schools need three tracks. The first is a directing track that treats AI tools as cameras: students still learn the fundamentals of storytelling, along with how to use AI to plan shots. The second is a technical track covering how AI models work, data ethics, and automation. Graduates should know as much about color correction as they do about lenses. The third is a policy track that treats AI video as a social issue: meaningful disclosure, robust watermarking, and fair boundaries that can be taught to everyone. None of this replaces writing, acting, or camera work. It prepares students for the machines that are now part of how stories are told.

For administrators, the choices are immediate. Adopt tools that record where content came from. Require that any use of AI be disclosed in production paperwork. Audit AI-generated faces and voices, and get consent from the people they depict. Run pilot projects that pair students with industry mentors to test a hybrid workflow: human writing, AI-assisted planning, human filming, AI-assisted editing, and human mixing. Measure the results: time saved, differences in quality, and audience response.

For politicians, the focus should be practical. Require clear labels on AI media, especially on content for children. Update labor contracts so that AI supports professionals rather than serving as a way to cut jobs. Support research into measuring video quality so we aren't judging work by clicks alone. Make AI video boring: safe and reliable.

The rules we make now will decide what we watch later

The deal I opened with, $1 billion and 200 characters, shows that licensed AI video is on its way to becoming a popular template. The technology will only get easier. That doesn't mean anyone can be a director. It means a first draft is easier to reach, which makes what happens next even more important. If we put quantity over quality, audiences will lose interest. If we set standards for craft, consent, and provenance, we can widen access while protecting good storytelling. Schools can train hybrid storytellers. Administrators can adopt safe tools. Politicians can set strong guidelines. What we watch in the future depends on the choices we make today. Treat AI video as a new instrument. Keep a human in charge. And judge success the way we always have: by whether the story stays with us when the screen goes black.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Ampere Analysis. (2024, October 29). Top six global content providers account for more than half of all spend in 2024.

Autodesk. (2025, August 15). Spotlight on AI in Media & Entertainment (State of Design & Make, M&E spotlight).

Barron’s. (2025, December). Disney and OpenAI are bringing Disney characters to the Sora app.

Bloomberg. (2025, December 11). Disney licenses characters to OpenAI, takes $1 billion stake.

Bloomberg. (2025, December 19). Inside Disney and OpenAI’s billion-dollar deal (The Big Take).

Deloitte. (2025, March 25). Digital Media Trends 2025.

Disney (The Walt Disney Company). (2025, December 11). The Walt Disney Company and OpenAI reach agreement to bring Disney characters to Sora (press release).

eMarketer. (2025, March 7). Most consumers are uncomfortable with AI-generated ads.

McKinsey & Company. (2024, May 30). The state of AI in early 2024.

Netflix Studios. (2025). Using Generative AI in Content Production (production partner guidance).

OpenAI. (2024, December 9). Sora is here (launch announcement; 1080p, 20-second generation).

PwC. (2025, July 24). Global Entertainment & Media Outlook 2025–2029 (press release and outlook highlights).

Reuters. (2025, July 30). Voice actors push back as AI threatens dubbing industry; Netflix tests GenAI lip-sync and VFX.

Reuters. (2025, December 11). Disney to invest $1 billion in OpenAI; Sora to use licensed characters in early 2026.

Variety. (2025, March 5). Netflix content spending to reach $18 billion in 2025.

The Hollywood Reporter. (2024, November 14). Disney expects to spend $24 billion on content in fiscal 2025.

The Hollywood Reporter. (2025, December). How Disney’s OpenAI deal changes everything.

The Verge. (2024, December 9). OpenAI releases Sora; short-form text-to-video at launch.