The Case for Continuing DOGE’s Disruption — Reframing Federal AI Adoption

Federal AI adoption depends on tools and training, not elite titles. DOGE proved rapid automation can work but exposed skill gaps. Lasting reform requires institutionalized AI, not rollback.

Getting AI into the federal government isn't about finding rare AI geniuses. It's about giving regular people the right tools and raising basic skills across a huge system. When tools that write drafts, summarize documents, and clean up data become available to workers, what matters most isn't how impressive the underlying AI models are. It's whether people can actually use these tools well in their daily jobs. The last year showed both the good and bad sides of this idea. Tools were rolled out to agencies quickly, but the public workforce also went through major changes. This worried some people, but the big lesson is clear: trying to fit people into specific job titles misses the point. What really matters is setting up these tools, helping everyone learn to use them, and ensuring workers have a reason to adopt the new, more productive ways of doing things. That's why it's important to keep pushing forward with DOGE's efforts to modernize, even if we need to fix some mistakes along the way.

Why federal AI adoption must be driven by tools, not titles

When it comes to AI in the federal government, success should be measured by how many useful systems are in use, not by the prestige of the people who used to hold senior offices. The government has been putting AI assistants to work in offices, and this is having a real impact. Rather than staying confined to research papers, these tools are being tested by pilot groups and early users. This shows that AI is more likely to be accepted when workers see how it helps them every day, whether by writing an email, summarizing a document, or automating a form. It's not just about adding a research scientist to the organization chart. This means changing the way we think, from hiring people for their knowledge alone to putting AI capabilities directly into how people work. It can be messy and reveal where skills are lacking, but it's the only way to ensure the public gets the most out of AI.

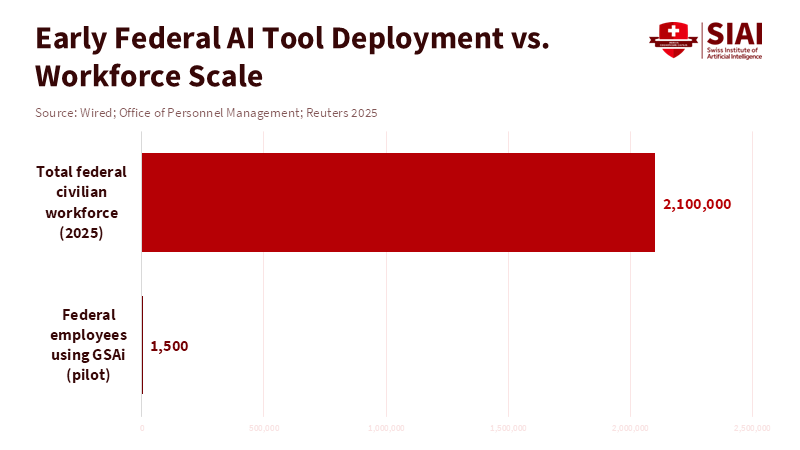

Using automated tools changes the number of people needed for different jobs. While some routine jobs become less necessary, there's a greater need for new roles, such as trainers, prompt engineers, data managers, support specialists, and reviewers who check that everything is done correctly. These jobs aren't the same as doing academic AI research, and it's a mistake to mix the two. If they're seen as the same, agencies might hire too many people with rare skills or fail to spend enough on the support needed to turn AI models into services people can rely on. According to WIRED, DOGE has taken steps to support regular federal workers in using AI tools by deploying its proprietary GSAi chatbot to 1,500 employees at the General Services Administration. None of this means we can ignore ethics, safety, and oversight. Instead, it means that the people who create the rules and the people who understand the technology need to work together. They need to turn safety rules into practical guidelines that staff can follow. Having clear rules for handling data, simple checklists, and automatic logging makes people feel less afraid and makes it easier to try new things. Focusing on practical guidelines rather than creating fancy job titles will make AI adoption in the federal government something that lasts, rather than something done just for show.

How DOGE's Actions Moved AI Forward

DOGE's focus on quick changes had two clear results: AI assistants were rolled out into everyday work, and the number of employees fell sharply, which made news. According to the Office of Evaluation Sciences, about 35 percent of GSA employees used the agency's internal GenAI chat tool at least once within the first five weeks after it launched. This suggests that while these systems can assist with daily tasks, a significant portion of workers may not adopt them immediately. According to a report from Federal News Network, automating certain tasks removed the need for people to handle time-consuming manual work, allowing staff to concentrate on more strategic responsibilities. This improved efficiency but also brought significant changes to how people worked.

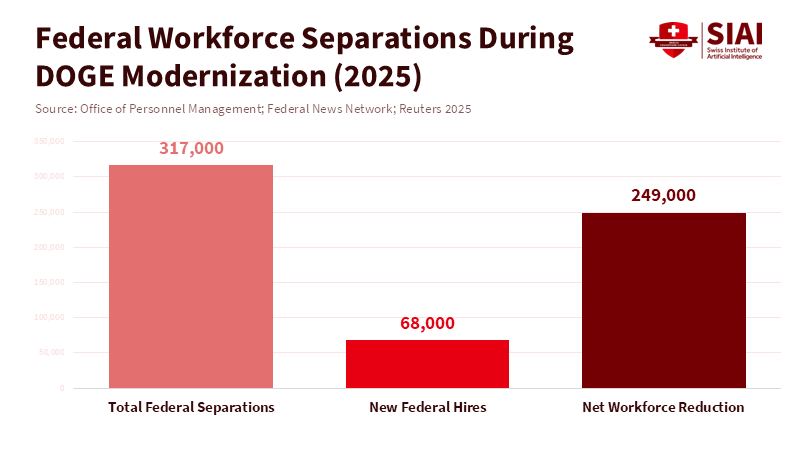

At the same time, the number of workers changed a lot. Reports showed that many people left their jobs during this period. This explains why adopting AI felt difficult: the people who knew the old ways of doing things and could help make the transition were often the ones leaving. This doesn't mean trying to modernize was a mistake. It means we need a clearer, slower plan to keep important knowledge while introducing new tools. We need to recognize that automation reduces some work, but knowledge is still needed to make sure technology is used safely and effectively.

Finally, public arguments about who was fired and why mixed politics with misunderstandings about technology. Some people left because they were still in a trial period or because their jobs were really software engineering roles labeled as research. According to a recent article, there remains confusion in public administration over the distinction between AI researchers and those skilled in statistical modeling or software implementation. The same article emphasizes that modernizing with AI is necessary, provided that related vulnerabilities are carefully managed. The fix is to make job definitions clearer and invest in retraining workers. This way, people with implementation skills can move into jobs that support and monitor automated systems, rather than just being replaced.

Training for the Future

If the goal is to have AI widely and safely used in the federal government, the first step is to improve basic skills across agencies. This means funding affordable training for many employees on how to use AI assistants daily, write effective prompts, spot errors, and keep data clean. Training should be hands-on, short, and focused on specific tasks, not just abstract workshops on how models work. When training is practical, people are more likely to use AI because they see the direct benefits. This approach treats tools and users as equally important: neither can succeed without the other. Investing in training is much cheaper than replacing entire teams and prevents the loss of knowledge that happens when experienced people leave.

In practice, agencies should provide support when rolling out new tools. Every test should include specialists who can fix prompts, document problems, and create simple reports that show where the assistant is helpful and where it isn't. These specialists don't need to be experts; they just need to understand how things work, relate to people, and have some technical skills. By creating these middle-skill positions, the government makes the transition smoother and creates career paths for existing staff. This makes adopting AI more inclusive.

Another good idea is to introduce automation step by step. Instead of sudden layoffs and full-scale replacements, agencies should automate specific tasks, check them against human review, and then expand. This reduces the chance of errors and keeps human judgment where it's important. It also creates a series of successes that build support. The lesson is simple: speed without support creates problems, but speed with support leads to lasting change.

Making it Last

To make AI adoption in the federal government successful long-term, policymakers should focus on three things: tools, training, and governance. Procurement and certification should favor usable solutions that come with strong support and clear plans for how to fit them into existing systems. This means looking beyond the AI model itself and requiring user training, built-in security, and post-deployment monitoring. Workforce policy should fund quick re-skilling programs and create clear career paths for the middle-skill jobs that keep automation running smoothly. This prevents confusion between researchers and engineers from leading to long-term talent shortages. Governance should be practical, with required logging, regular audits, and easy-to-use systems for reporting incidents that nontechnical staff can use. These three pillars will make AI adoption in the federal government sustainable, no matter who is in charge.

Some might worry about fairness, accuracy, and how fast things are changing. They might say that quick automation leads to mistakes and hurts public services. They are right to ask for safeguards. The answer isn't to slow everything down, but to demand clear safety measures before expanding: error rates under human review, the number of sensitive data incidents, and how confident users are. These measures can be achieved and enforced when tests are designed to produce them. Taking a “measures-first” approach turns abstract ethical concerns into practical “pass/fail” checks that protect the public while allowing useful systems to grow.
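As a rough illustration of what a “measures-first” gate could look like, the sketch below turns the three measures named above (error rate under human review, sensitive data incidents, and user confidence) into pass/fail checks. The class names, field names, and threshold values are all assumptions made for this example; they are not drawn from any agency's actual policy, and a real program would set its own limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PilotMetrics:
    """Measurements from one pilot. All field names are hypothetical."""
    reviewed_outputs: int          # assistant outputs checked by a human reviewer
    outputs_with_errors: int       # of those, how many the reviewer flagged
    sensitive_data_incidents: int  # logged incidents involving sensitive data
    user_confidence: float         # mean survey score on a 0.0 to 1.0 scale


@dataclass(frozen=True)
class GateThresholds:
    """Illustrative limits only (assumed values, not real policy)."""
    max_error_rate: float = 0.05
    max_incidents: int = 0
    min_user_confidence: float = 0.70


def evaluate_gate(m: PilotMetrics, t: GateThresholds = GateThresholds()) -> dict[str, bool]:
    """Return a pass/fail result for each measure."""
    if m.reviewed_outputs <= 0:
        # Without human-reviewed samples there is no error rate to judge.
        raise ValueError("no human-reviewed outputs; the gate cannot be evaluated")
    error_rate = m.outputs_with_errors / m.reviewed_outputs
    return {
        "error_rate": error_rate <= t.max_error_rate,
        "sensitive_data_incidents": m.sensitive_data_incidents <= t.max_incidents,
        "user_confidence": m.user_confidence >= t.min_user_confidence,
    }


def may_expand(m: PilotMetrics, t: GateThresholds = GateThresholds()) -> bool:
    """A pilot may expand only if every individual check passes."""
    return all(evaluate_gate(m, t).values())


if __name__ == "__main__":
    pilot = PilotMetrics(
        reviewed_outputs=400,
        outputs_with_errors=12,
        sensitive_data_incidents=0,
        user_confidence=0.78,
    )
    for check, passed in evaluate_gate(pilot).items():
        print(f"{check}: {'pass' if passed else 'fail'}")
    print("expand:", may_expand(pilot))
```

Requiring every check to pass, rather than averaging the measures into one score, keeps a single serious failure such as a sensitive data incident from being masked by good results elsewhere.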

Finally, the question of people leaving their jobs needs to be addressed clearly. If people left mostly on their own or were still in a trial period, steps can be taken to preserve knowledge: creating written guides, recording walkthroughs, and offering short periods for departing staff to transfer what they know to those who remain. If people were fired unfairly, legal and HR solutions should be used. But neither situation is a reason to reject modernization. The goal is to balance speed with care, so AI adoption improves services.

AI adoption in the federal government is most successful when it is practical, responsible, and builds skills. DOGE's actions put pressure on agencies to put tools in workers' hands and show what automation can do. The mistakes made, such as cutting staff too quickly, not defining jobs clearly, and not providing enough training, can be fixed. By combining deployments with training, rolling out automation step by step, and creating clear rules, we can secure gains while preserving important knowledge. If the aim is to improve services, policymakers should keep pushing for modernization: put usable tools in people's hands, teach them to use those tools safely, and build a system that lasts. That is how AI adoption becomes a real improvement that citizens can see, measure, and trust.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Brookings Institution. “How DOGE’s modernization mission may have inadvertently undermined federal AI adoption.” January 21, 2026.

Politico. “Pentagon’s ‘SWAT team of nerds’ resigns en masse.” April 15, 2025.

Reuters. “DOGE-led software revamp to speed US job cuts even as Musk steps back.” May 8, 2025.

WIRED. “DOGE Has Deployed Its GSAi Custom Chatbot for 1,500 Federal Workers.” March 2025.

U.S. Office of Personnel Management. Federal workforce data, personnel actions and separations (2025 updates). 2025–2026 dataset.

ZDNet. “Tech leaders sound alarm over DOGE’s AI firings, impact on American talent pipeline.” March 2025.
