The Case for World Models: The Necessity of a Stable, Shared Map of Reality in Artificial Intelligence

World models let AI build an internal map of reality to simulate and plan.
They move systems beyond prediction toward reasoning and transfer.
Grasping world models is now essential for future AI education and policy.

When agents can learn internal representations of their environments, it changes how we approach education, governance, and AI design. Such agents can predict outcomes and plan better than systems that only guess the next token or pixel. The difference matters in practice, not just in theory: studies show that agents with explicit world models can master many control tasks with less human help and lower computing costs than older methods. An internal map, a compact and flexible representation of space, objects, and time, helps them learn faster and act more reliably. For educators and leaders, this means rethinking what we teach machines and how we prepare people to work with advanced systems. For policymakers, it shifts how we balance safety, transparency, and procurement decisions. World models also enable kinds of audits that black-box predictive systems do not permit. The central concept is the world model: a structured, persistent map of the environment that an agent can inspect, update, and use to run predictions.

What a world model is, and why the name matters

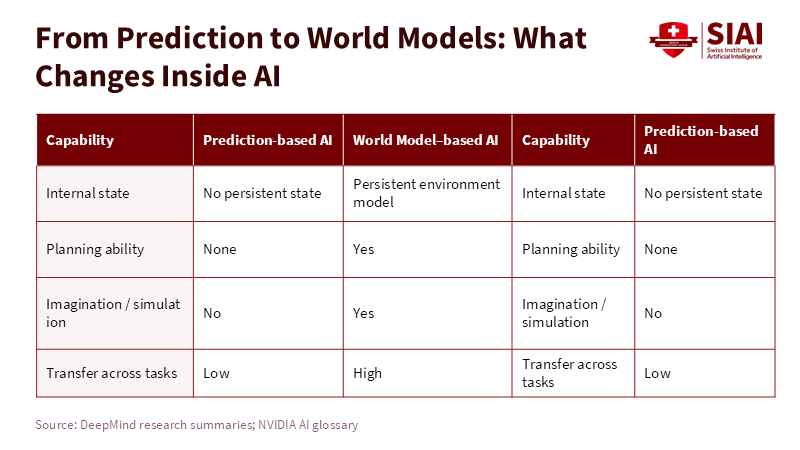

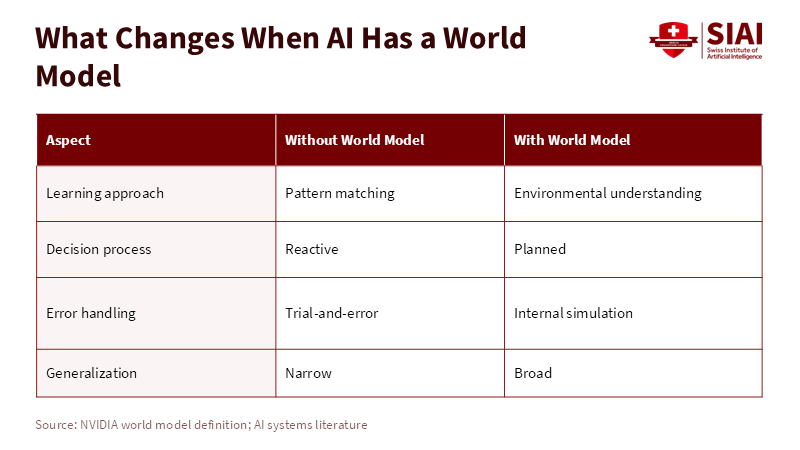

A world model is a compact representation of objects, agents, and the rules governing their interactions over space and time. Unlike basic prediction systems that merely match patterns to guess what comes next, world models maintain something like a map held in memory. This map lets the system run quick simulations, test different actions, and choose the best ones based on the imagined results. The move from simple prediction to this kind of planning shows why the term world model matters: it describes something internal, flexible, and persistent, not a short-lived process.
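To make the contrast with pattern-matching concrete, here is a minimal Python sketch of planning with an internal model. It assumes a toy one-dimensional corridor whose rules the agent already knows; `WorldModel`, `simulate`, and `plan` are hypothetical names, not the API of any published system. The agent never experiments in the real corridor: it runs candidate action sequences through its model and picks the one whose imagined outcome is best.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class WorldModel:
    """Toy internal model of a 1-D corridor (hypothetical example).

    The state is the agent's position; each action moves the agent one
    cell left (-1) or right (+1), clipped at the corridor walls.
    """

    length: int
    goal: int

    def step(self, position: int, action: int) -> int:
        # Transition rule: apply the move, then clip to the walls.
        return min(max(position + action, 0), self.length - 1)

    def simulate(self, position: int, actions: tuple[int, ...]) -> list[int]:
        # Roll the model forward in imagination; the real world is never touched.
        trajectory = [position]
        for action in actions:
            trajectory.append(self.step(trajectory[-1], action))
        return trajectory


def plan(model: WorldModel, position: int, horizon: int) -> tuple[int, ...]:
    """Imagine every action sequence of length `horizon` and keep the best.

    Sequences are ranked by final distance to the goal, then by how early
    the imagined trajectory first reaches the goal.
    """

    def score(actions: tuple[int, ...]) -> tuple[int, int]:
        trajectory = model.simulate(position, actions)
        final_distance = abs(trajectory[-1] - model.goal)
        first_hit = next(
            (t for t, p in enumerate(trajectory) if p == model.goal), len(trajectory)
        )
        return (-final_distance, -first_hit)

    return max(product((-1, 1), repeat=horizon), key=score)


model = WorldModel(length=10, goal=7)
print(plan(model, position=4, horizon=3))  # (1, 1, 1)
```

Exhaustive search grows exponentially with the horizon, so practical systems would replace it with learned dynamics and a sampling- or gradient-based planner; the imagine, score, choose loop is what the sketch is meant to show.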

The term "world model" matters because it shapes architectural decisions. Designing for a world model leads to systems that prioritize explicit state representations, temporal consistency, and mechanisms for integrating new data without full retraining. Current research reflects this approach: teams build 3D and 4D scene representations for visual coherence, train agents to simulate trajectories for planning, and add memory systems that preserve beliefs across sessions. The same shift affects academic curricula. Beyond statistical pattern recognition, students need skills in representation engineering: building and analyzing models of space, time, and causality. When procuring AI solutions, decision-makers should ask whether a system maintains an internal world model or is solely a large-scale predictor that generates plausible outputs. The distinction affects how failures propagate, how the system can be audited, and how it can be reset.
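The idea of integrating new data without full retraining, and of preserving beliefs across sessions, can be illustrated with a count-based transition model. This is a deliberately simple assumption, not how the research systems mentioned above store their state; `CountModel` and its JSON format are hypothetical. The beliefs live in an explicit, inspectable table, each new observation changes a single counter, and the table can be saved and reloaded between sessions.

```python
from __future__ import annotations

import json
from collections import defaultdict


class CountModel:
    """Explicit transition beliefs: counts of (state, action) -> next state."""

    def __init__(self) -> None:
        self.counts: dict[str, dict[str, int]] = defaultdict(dict)

    @staticmethod
    def _key(state: str, action: str) -> str:
        return f"{state}|{action}"

    def update(self, state: str, action: str, next_state: str) -> None:
        # Incremental update: one observation changes one counter, no retraining.
        row = self.counts[self._key(state, action)]
        row[next_state] = row.get(next_state, 0) + 1

    def predict(self, state: str, action: str) -> dict[str, float]:
        # Current belief as an empirical distribution over next states.
        row = self.counts.get(self._key(state, action), {})
        total = sum(row.values())
        return {s: n / total for s, n in row.items()} if total else {}

    def to_json(self) -> str:
        # Persist beliefs so a later session can resume from them.
        return json.dumps(self.counts, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> CountModel:
        restored = cls()
        for key, row in json.loads(text).items():
            restored.counts[key] = dict(row)
        return restored


beliefs = CountModel()
beliefs.update("door_closed", "push", "door_open")
beliefs.update("door_closed", "push", "door_open")
beliefs.update("door_closed", "push", "door_closed")  # the door stuck once

restored = CountModel.from_json(beliefs.to_json())     # a new session begins
restored.update("door_closed", "push", "door_open")    # fold in new data
print(restored.predict("door_closed", "push"))  # {'door_closed': 0.25, 'door_open': 0.75}
```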

How world models impact instruction, assessment, and education

World models make training more efficient by using data more effectively and making transfer learning easier. Because a world model can simulate experience, it often needs fewer real-world interactions to learn how to act in new situations. Recent research shows that a single world-model agent with one fixed configuration can master more than 150 diverse tasks (Hafner et al., 2025). It does so by imagining possible outcomes rather than relying on large labeled datasets or many human demonstrations. For schools teaching AI, this means less emphasis on collecting massive datasets and more on courses that teach how to build and work with representations. For teachers, hands-on activities should shift from gathering more data to building, testing, and refining internal simulations.
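The claim that simulated experience can replace some real interactions is the core of Dyna-Q, a classic model-based reinforcement learning scheme due to Sutton. It is swapped in here as an illustration; it is not the architecture of the recent systems discussed in this article. Each real step updates both a value table and a learned world model, and the agent then performs `planning_steps` extra updates on transitions replayed from that model. The corridor, parameter values, and function names are assumptions chosen for brevity.

```python
from __future__ import annotations

import random

N_STATES, GOAL = 6, 5  # hypothetical corridor: states 0..5, reward on reaching 5
ACTIONS = (-1, 1)


def env_step(state: int, action: int) -> tuple[int, float]:
    """The 'real' environment: deterministic move, reward 1.0 at the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    return nxt, (1.0 if nxt == GOAL else 0.0)


def dyna_q(episodes: int, planning_steps: int, seed: int = 0,
           alpha: float = 0.5, gamma: float = 0.9, epsilon: float = 0.1):
    """Tabular Dyna-Q. Returns (real environment steps used, learned Q-table)."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    model: dict[tuple[int, int], tuple[int, float]] = {}  # learned world model
    real_steps = 0

    def greedy(s: int) -> int:
        best = max(q[(s, a)] for a in ACTIONS)
        return rng.choice([a for a in ACTIONS if q[(s, a)] == best])

    def td_update(s: int, a: int, r: float, s2: int) -> None:
        future = 0.0 if s2 == GOAL else gamma * max(q[(s2, b)] for b in ACTIONS)
        q[(s, a)] += alpha * (r + future - q[(s, a)])

    for _ in range(episodes):
        s = 0
        while s != GOAL:
            a = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(s)
            s2, r = env_step(s, a)            # one costly real interaction
            real_steps += 1
            td_update(s, a, r, s2)            # learn from real experience
            model[(s, a)] = (s2, r)           # refine the world model
            for _ in range(planning_steps):   # cheap imagined experience
                ps, pa = rng.choice(list(model))
                ps2, pr = model[(ps, pa)]
                td_update(ps, pa, pr, ps2)
            s = s2
    return real_steps, q


for n in (0, 20):
    steps, _ = dyna_q(episodes=20, planning_steps=n)
    print(f"planning_steps={n}: {steps} real steps")
```

Running both settings over several seeds lets you compare how many real interactions each needs; exact counts vary with the seed, so a single run is anecdotal.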

Assessment must evolve to match what world models can do. Evaluations that measure only short-term prediction accuracy miss their main strengths. The primary benchmark should be whether the model supports coherent, goal-directed action over extended horizons. Both educational institutions and industry should adopt assessments that measure how well an agent plans through internal simulation. For example, the ability to transfer from a simulated warehouse to a new real-world setting with minimal retraining is a key indicator. Policymakers should ensure that certification programs include stress tests built on simulated scenarios rather than relying exclusively on held-out test data. Systems built on world models need regulatory audits that examine representational accuracy and update procedures, not merely output performance.
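Why short-term accuracy is not enough can be shown with a tiny experiment: compare one-step error, where the model is corrected against reality at every step, with open-loop error, where it feeds on its own predictions over a long horizon. The sketch assumes hypothetical scalar dynamics and a model whose growth rate is slightly off.

```python
from __future__ import annotations


def true_step(x: float) -> float:
    # Hypothetical real dynamics: 2% growth per step.
    return 1.02 * x


def model_step(x: float) -> float:
    # Learned model with a slightly wrong growth rate (0% instead of 2%).
    return 1.00 * x


def one_step_error(states: list[float]) -> float:
    """Worst error when the model is re-anchored to the true state every step."""
    return max(abs(model_step(x) - true_step(x)) for x in states)


def rollout_error(x0: float, horizon: int) -> float:
    """Open-loop error: the model feeds on its own predictions for `horizon` steps."""
    x_true, x_model = x0, x0
    for _ in range(horizon):
        x_true, x_model = true_step(x_true), model_step(x_model)
    return abs(x_model - x_true)


visited = [1.02 ** k for k in range(50)]
print(f"one-step error: {one_step_error(visited):.3f}")  # about 0.053
print(f"50-step error:  {rollout_error(1.0, 50):.3f}")   # about 1.692
```

A one-step benchmark would score this model as nearly perfect, while a 50-step rollout, the kind a planner actually relies on, drifts far from reality.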

Practical recommendations for leaders and policymakers

When choosing systems, it is important to consider how the model is built. A system based on a world model fails in different ways and needs different monitoring than a simple predictor. If a world model gets something wrong about reality, its internal simulations can lead to bad decisions, but these mistakes can often be found by checking the model’s state. This lets auditors see what the system believes and what it has simulated, giving more clarity than black-box predictors. Leaders should ask for the ability to inspect states and review imagined scenarios as part of any purchase agreement.
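Inspecting state and reviewing imagined scenarios can be sketched as a planner that returns a record of what it believed and what it imagined, not just an action. `PlanRecord`, `plan_with_record`, `audit`, and the one-dimensional model are hypothetical illustrations; the point is that an auditor with access to ground truth can compare it against the logged belief and trace which imagined rollout justified the decision.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PlanRecord:
    """What the agent believed, what it imagined, and what it chose."""

    belief_position: int
    imagined: dict[int, list[int]] = field(default_factory=dict)  # action -> rollout
    chosen_action: int = 0


def plan_with_record(belief_position: int, goal: int, horizon: int = 3) -> PlanRecord:
    """Toy 1-D planner that logs every imagined rollout it considered."""
    record = PlanRecord(belief_position=belief_position)
    for action in (-1, 1):
        # Imagine repeating the same action for `horizon` steps from the believed state.
        record.imagined[action] = [belief_position + action * t for t in range(horizon + 1)]
    # Choose the action whose imagined end point lands closest to the goal.
    record.chosen_action = min(
        record.imagined, key=lambda a: abs(record.imagined[a][-1] - goal)
    )
    return record


def audit(record: PlanRecord, true_position: int) -> list[str]:
    """Compare the logged belief with ground truth available to the auditor."""
    issues = []
    if record.belief_position != true_position:
        issues.append(f"belief {record.belief_position} != true state {true_position}")
    return issues


record = plan_with_record(belief_position=2, goal=5)
print(record.chosen_action, record.imagined[1])  # 1 [2, 3, 4, 5]
print(audit(record, true_position=6))            # ['belief 2 != true state 6']
```

Here the true position is 6 and the goal is 5, so the correct move was -1. The log shows the planner chose +1 because its belief was wrong, which is the kind of error that state inspection surfaces.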

Safety protocols have to adapt to what world models can do. Because a world model can simulate unusual or dangerous events cheaply offline, regulators should require organizations to conduct systematic simulation-based safety testing before deployment. For example, robotics systems trained in synthetic warehouse environments can be stress-tested on unusual situations that would be costly or dangerous to stage in the real world. This raises policy questions about standards for simulation fidelity and the chain of custody for simulation data. Policymakers must determine which simulation standards actually support real-world transfer and which are marketing-driven. Public procurement criteria should favor reproducible simulation benchmarks and documentation of how internal models change in production.
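Reproducibility is what makes a simulation benchmark auditable: the same seed must regenerate the same scenarios and the same results. The following sketch assumes a hypothetical warehouse-robot braking test with randomized speed, floor friction, and obstacle distance, uses the simplified stopping-distance formula v²/(2μg), and summarizes the outcomes with a SHA-256 fingerprint that a regulator could compare against a vendor's report. The names, ranges, and report format are illustrative and not drawn from any existing standard.

```python
from __future__ import annotations

import hashlib
import json
import random


def generate_scenarios(seed: int, n: int) -> list[dict[str, float]]:
    """Deterministically sample rare-but-plausible conditions (hypothetical ranges)."""
    rng = random.Random(seed)
    return [
        {
            "speed": rng.uniform(0.5, 2.0),        # robot speed, m/s
            "friction": rng.uniform(0.05, 0.8),    # includes very slippery floors
            "obstacle_at": rng.uniform(0.2, 3.0),  # distance to obstacle, m
        }
        for _ in range(n)
    ]


def stops_in_time(s: dict[str, float], g: float = 9.81) -> bool:
    # Simplified braking physics: stopping distance = v^2 / (2 * mu * g).
    return s["speed"] ** 2 / (2 * s["friction"] * g) < s["obstacle_at"]


def run_stress_test(seed: int, n: int = 1000) -> dict:
    """Run all scenarios and fingerprint the outcomes so others can reproduce them."""
    outcomes = [stops_in_time(s) for s in generate_scenarios(seed, n)]
    fingerprint = hashlib.sha256(json.dumps(outcomes).encode()).hexdigest()
    return {"seed": seed, "n": n, "failures": outcomes.count(False),
            "fingerprint": fingerprint}


report = run_stress_test(seed=42)
print(report)
print(report == run_stress_test(seed=42))  # True: same seed, same result
```

A real certification scheme would also need to pin the random-number generator and software versions, since a seed alone only guarantees reproducibility within one implementation.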

Common criticisms and evidence-based rebuttals

Some worry that world models are just another overhyped idea that works in the lab but fails in real life. The evidence so far suggests otherwise. Recent studies across different domains show that agents using world models can handle many tasks within a single system and perform well across different settings. A major reason for this progress is the focus on keeping training stable and robust, so that models cope with changing conditions rather than working only in theory. World models are not yet a perfect solution, but these results show that the research is on the right track and has real promise.
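One concrete example of the stability work behind this progress is the symlog transform described in the DreamerV3 paper (Hafner et al., 2025): it compresses large positive and negative values into a comparable range, behaves almost like the identity near zero, and is exactly invertible through symexp. The sketch shows only this transform, not the full training recipe.

```python
import math


def symlog(x: float) -> float:
    """sign(x) * ln(|x| + 1): squashes large magnitudes, near-identity around 0."""
    return math.copysign(math.log1p(abs(x)), x)


def symexp(x: float) -> float:
    """Inverse of symlog: sign(x) * (exp(|x|) - 1)."""
    return math.copysign(math.expm1(abs(x)), x)


# Targets spanning many orders of magnitude end up on a similar scale.
for raw in (-1000.0, -1.0, 0.0, 0.5, 1e6):
    squashed = symlog(raw)
    print(f"{raw:>12} -> {squashed:8.3f} -> {symexp(squashed):.3f}")
```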

Another concern is computing resources: do world models demand more? Counterintuitively, the reverse may hold. Because they can learn from simulated experience, world models often reduce the need for extensive real-world datasets or repeated trial and error in the field. Current proofs of concept indicate that agents that plan through internal simulation can succeed on more modest compute budgets than some simpler, data-hungry baselines. Building high-accuracy 4D scenes and simulating over long horizons still has costs, so procurement must weigh the value of accuracy against the suitability of a lighter-weight model. The right balance depends on task complexity and downstream risk.
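The trade-off between simulation accuracy and cost is ultimately arithmetic. The sketch below uses entirely hypothetical unit costs (a real-world trial, an imagined step, and a one-off model-building cost) to show the break-even logic a procurement team might apply; the numbers are placeholders, not measurements from any study.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Budget:
    real_trials: int         # interactions with the physical world
    imagined_steps: int      # rollouts inside the world model
    model_fixed_cost: float  # one-off cost of building and training the model


def total_cost(b: Budget, cost_real: float, cost_imagined: float) -> float:
    return b.real_trials * cost_real + b.imagined_steps * cost_imagined + b.model_fixed_cost


def break_even_imagined_cost(baseline: Budget, world_model: Budget, cost_real: float) -> float:
    """Per-step simulation cost at which both approaches cost the same."""
    baseline_cost = total_cost(baseline, cost_real, cost_imagined=0.0)
    fixed_part = world_model.real_trials * cost_real + world_model.model_fixed_cost
    return (baseline_cost - fixed_part) / world_model.imagined_steps


# Placeholder numbers in arbitrary cost units, not measurements.
COST_REAL, COST_IMAGINED = 5.0, 0.001
data_hungry = Budget(real_trials=100_000, imagined_steps=0, model_fixed_cost=0.0)
model_based = Budget(real_trials=10_000, imagined_steps=5_000_000, model_fixed_cost=50_000.0)

print(total_cost(data_hungry, COST_REAL, COST_IMAGINED))            # 500000.0
print(total_cost(model_based, COST_REAL, COST_IMAGINED))            # 105000.0
print(break_even_imagined_cost(data_hungry, model_based, COST_REAL))  # 0.08
```

Under these placeholder numbers, the world-model approach stays cheaper only while each imagined step costs less than 0.08 units; a high-fidelity 4D simulator that pushes the per-step cost above that line would flip the decision toward a lighter model.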

Actionable steps for educators and decision-makers

For educators: prioritize teaching how to evaluate representations. Assignments should challenge students to construct compact world models, inspect their internal states, and verify that simulated outcomes match actual results under controlled disturbances. This builds a troubleshooting habit: when something goes wrong, students fix the representation rather than patching the policy. Curriculum designers should include modules on simulation fidelity, on integrating multisensory inputs into a shared model, and on causal reasoning within models. These modules are compact but high-impact, preparing students to reason about the systems likely to underpin robotics, AR/VR, and sophisticated decision-support tools.
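A classroom version of checking simulated outcomes against actual results under controlled disturbances might look like the following. It is a hypothetical exercise: the student's world model of a pendulum assumes a length of 1.0 m, the "real" rig is disturbed by changing that length, and the check reports which disturbances keep the predicted period within a 10% error budget.

```python
from __future__ import annotations

import math


def pendulum_period(length_m: float, g: float = 9.81) -> float:
    """Small-angle period of a simple pendulum: 2 * pi * sqrt(L / g)."""
    return 2 * math.pi * math.sqrt(length_m / g)


def student_model() -> float:
    # Hypothetical student world model: assumes the rig is exactly 1.0 m long.
    return pendulum_period(1.0)


def validate(disturbed_lengths: list[float], tolerance: float = 0.10) -> dict[float, bool]:
    """Disturb the 'real' rig and check whether the prediction stays within tolerance."""
    report = {}
    for length in disturbed_lengths:
        actual = pendulum_period(length)
        rel_error = abs(student_model() - actual) / actual
        report[length] = rel_error <= tolerance
    return report


print(validate([1.0, 0.9, 1.1, 1.5]))  # {1.0: True, 0.9: True, 1.1: True, 1.5: False}
```

A failing case points the student at the assumption inside the representation (the hard-coded length) rather than at any downstream control policy.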

Decision-makers and policymakers should require certification through simulation, along with rules for state transparency. Contracts for high-risk AI systems should require vendors to provide reproducible simulation tests and to grant access to the model's internal state under specified test conditions. Regulatory frameworks should set standards for documenting simulated stress tests, recording imagined trajectories, and versioning updates to world models. These requirements support effective audits and reduce the risk that failures under unexpected data distributions go unnoticed. It is advisable to pilot these provisions in procurements for non-critical systems and then scale the requirements to each system's risk.
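Versioning world-model updates can be as simple as an append-only log in which each entry records the model version, a hash of its parameters, the stress-test results, and the hash of the previous entry, so that any retroactive edit is detectable. The sketch is a hypothetical format, not an existing regulatory standard; `UpdateLog` and its field names are illustrative.

```python
from __future__ import annotations

import hashlib
import json


def _sha256(obj) -> str:
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


class UpdateLog:
    """Append-only, hash-chained record of world-model versions."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, version: str, params: dict, test_results: dict) -> dict:
        entry = {
            "version": version,
            "params_hash": _sha256(params),         # fingerprint of model parameters
            "test_results": dict(test_results),     # e.g. stress-test summary
            "prev_hash": self.entries[-1]["entry_hash"] if self.entries else None,
        }
        entry["entry_hash"] = _sha256(entry)        # covers every field above
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; False if any entry was altered or reordered."""
        prev = None
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "entry_hash"}
            if e["prev_hash"] != prev or _sha256(body) != e["entry_hash"]:
                return False
            prev = e["entry_hash"]
        return True


log = UpdateLog()
log.append("1.0.0", {"weights": [0.1, 0.2]}, {"failures": 3, "n": 1000})
log.append("1.1.0", {"weights": [0.1, 0.3]}, {"failures": 1, "n": 1000})
print(log.verify())                               # True
log.entries[0]["test_results"]["failures"] = 0    # tamper with history
print(log.verify())                               # False
```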

In summary, enabling machines to build and manipulate an understanding of their environment fundamentally changes how we approach AI robustness, auditability, and transferability. The shift can reduce data requirements, opens new opportunities for policy-driven auditing, and redirects educational priorities toward representation and simulation skills. Educators, leaders, and policymakers should prioritize model-based instruction, mandate stress testing, and adopt procurement policies that reward transparent, inspectable simulations. These measures will help determine whether world models yield safer, more effective systems or become another generation of opaque black boxes. The goal should be systems that can envision better outcomes, together with transparency about the internal models that guide their decisions.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Hafner, D., et al. (2025). Mastering diverse control tasks through world models. Nature. (Published April 2025).

DeepMind. (2025). Genie 3: A new frontier for world models. DeepMind Research Blog.

NVIDIA. (n.d.). What is a world model? NVIDIA Glossary.

Reuters. (2024). 'AI godmother' Fei-Fei Li raises $230 million to launch AI startup. Reuters.

r/MachineLearning. (2013). Discussion: What exactly are world models in AI? Reddit thread.
