When the Children Replace the Parent: How LLMs Replace Wikipedia and What Educators Must Do

LLMs replace Wikipedia by absorbing its knowledge while diverting human attention. AI convenience erodes verification and shared correction. Public knowledge now needs active policy protection.

Large language models (LLMs) are rapidly supplanting Wikipedia as the go-to resource for students seeking fast, authoritative answers. This shift is not just about convenience; it represents a significant transformation in how public knowledge is accessed, evaluated, and contributed to. While students and educators may benefit from instant, seemingly well-cited essays generated by LLMs, the growing reliance on these tools threatens the core mechanisms that preserve, verify, and update public knowledge. As LLMs make it easier than ever to bypass original sources like Wikipedia, educators must reconsider their role in fostering information literacy and protecting the integrity of open knowledge systems.

LLMs Replacing Wikipedia: Evidence and Trends

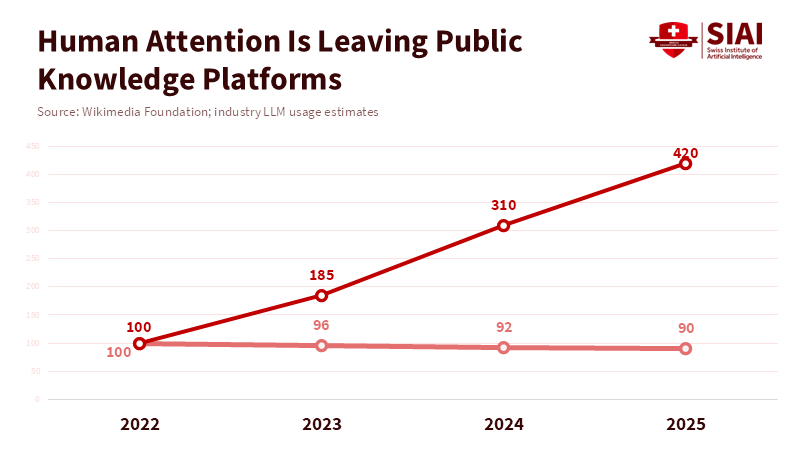

Metrics such as page views, unique visitors, and edit counts are not exhaustive, yet they provide valuable insights. Fluctuations in these figures influence contributor recruitment, correction processes, and site funding. A recent study examined these metrics across various language editions of Wikipedia, controlling for seasonal variation. The study identified persistent declines in certain user activities following the widespread adoption of popular chatbots. While this trend does not indicate the imminent disappearance of Wikipedia, it demonstrates that the essential cycle of readers becoming editors—which sustains the accuracy and vitality of many pages—is threatened by evolving user behaviors.

LLMs are trained on publicly available texts, including encyclopedias and documents curated by volunteers. These models synthesize information into unified responses that users often accept without consulting the original sources, which reduces traffic to those pages. Lower traffic weakens motivation among volunteer editors and donors and makes it less likely that readers discover new content, a pattern noted on technology platforms and editor forums since chatbots became popular.

The timing of these changes supports this idea. The sharpest drops in user activity seem to happen right after new LLM products are released. Data from platforms and community reports agree: Questions and discussions that used to drive many page views now often lead to an AI summary, with no one clicking back to the source. This shift in behavior changes what we value, moving away from a living page—with its discussions, revision history, and visible corrections—to a quick, temporary answer. If public knowledge is judged by these short-lived answers, the system we have for maintaining, questioning, and documenting knowledge will weaken over time.

LLMs Replacing Wikipedia: Broader Implications Beyond Technology

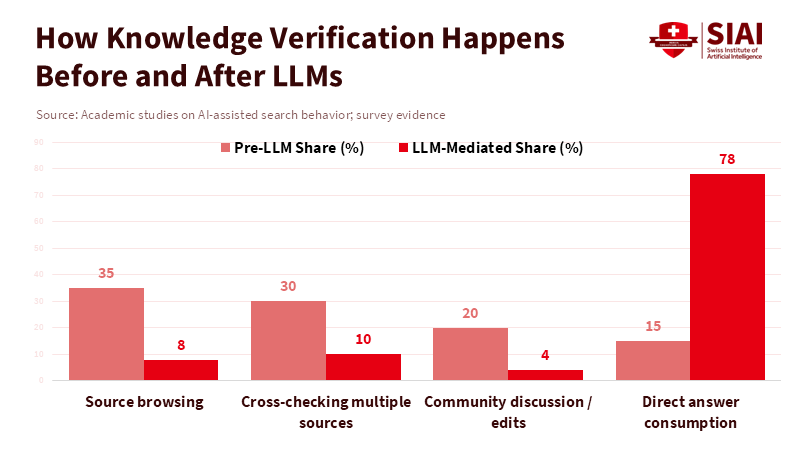

The impact on education is one of the first places we see the human cost. The old way of doing research—searching, clicking, reading, evaluating evidence, and citing sources—taught students to see information as something that could be questioned and debated. A Wikipedia page and its discussion history show how verification works in public. Chat interfaces, on the other hand, give a neat story and usually stop there. Students turn in well-written papers that are often hard to check for accuracy. Teachers now face a choice: Ban these tools and force the problem underground, or change assignments to reward the research process rather than just the final product. Banning the tools usually doesn't work. It hides the behavior and wastes opportunities to teach important skills. The better option is to teach students how to verify information actively and to require evidence of their thinking in their work.

The economics of public knowledge also suffer. Many public knowledge projects depend on being visible to attract new contributors and donations. If AI systems give answers directly and don't send readers back to the source, fewer people will accidentally come across these projects. Wikimedia has tried to make deals with companies and has updated its methods to filter out bot traffic and get value from how third parties use its content. These actions might recover some money. They don't automatically rebuild the community relationships and incentives that keep content accurate, up to date, and well-explained. Money can help with maintenance, but it can't bring back a culture of public editing on its own.

There is an inherent risk to the integrity of public knowledge. When AI systems generate well-written yet inaccurate or incomplete responses, these errors can disseminate rapidly. In platforms such as Wikipedia, mistakes are traceable and subject to public discussion and correction. However, AI-generated answers often obscure or omit source information. As more individuals consume summaries without source attribution, the capacity to question and rectify public claims diminishes. This issue is particularly significant for contentious subjects, including policy debates, health guidance, and historical interpretations, where public understanding is shaped by the transparency and accessibility of corrective mechanisms.

LLMs Replacing Wikipedia: Strategies for Educators, Administrators, and Policymakers

Start in the classroom with simple, easy-to-enforce habits. Require students to include a short record of their research for any assignment that uses AI: the exact question they asked the AI, the sources they checked, and a brief explanation of why they thought those sources were trustworthy. Ask students to include the specific revision or paragraph they used. Grade this record as part of the assignment. These steps turn a closed answer into a research project. They teach students how to verify information and bring back the habit of checking sources. Small assignments that focus on the research process instead of just the final writing will do more to protect public knowledge than simply banning the tools.

Administrators should regard knowledge resources as essential infrastructure. This entails ensuring consistent funding for editorial roles in less prominent subject areas and underrepresented languages, supporting initiatives that facilitate editing, and promoting tools that enhance source visibility in both human- and machine-readable formats. For example, an AI-generated answer could include a 'view source' feature that links directly to the relevant public page and highlights the specific content used. Facilitating access to original revisions restores the public page's function in discovery and correction, a capability that private AI interfaces alone cannot replicate.
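Both the student's research record and a 'view source' link depend on pointing at one fixed revision of a page rather than at a page that keeps changing. The following is a minimal sketch in Python of how such a record might be stored and checked. It assumes the MediaWiki permalink form that identifies a revision by its `oldid` parameter. The names `ResearchLogEntry`, `SourceCheck`, and `is_complete`, the example prompt, and the revision number are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List
from urllib.parse import urlencode


def revision_permalink(title: str, rev_id: int, lang: str = "en") -> str:
    """Build a link to one fixed revision of a Wikipedia page."""
    query = urlencode({"title": title.replace(" ", "_"), "oldid": rev_id})
    return f"https://{lang}.wikipedia.org/w/index.php?{query}"


@dataclass
class SourceCheck:
    """One source the student actually opened and evaluated."""
    title: str
    rev_id: int        # the specific revision that was read
    why_trusted: str   # the student's short justification

    @property
    def link(self) -> str:
        return revision_permalink(self.title, self.rev_id)


@dataclass
class ResearchLogEntry:
    """The research record handed in alongside an assignment."""
    prompt: str                                   # exact question asked of the AI
    sources: List[SourceCheck] = field(default_factory=list)

    def is_complete(self) -> bool:
        # Gradable only with a prompt, at least one source,
        # and a stated reason for trusting every source.
        return (
            bool(self.prompt.strip())
            and bool(self.sources)
            and all(s.why_trusted.strip() for s in self.sources)
        )


# Hypothetical example values, for illustration only.
entry = ResearchLogEntry(prompt="What is information literacy?")
entry.sources.append(
    SourceCheck("Information literacy", 123456789, "Cites library-science literature")
)
print(entry.is_complete(), entry.sources[0].link)
```

Storing the revision number rather than only the page title is the design choice that matters here: a grader or a reader can reopen exactly what the student saw, even after the page has been edited.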

For policymakers: require that public AI systems disclose information sources. Set standards so that each AI answer includes a machine-readable provenance bundle listing its main sources and the exact versions cited. This does not require the models themselves to be open, but it does enable verification and changes incentives: crediting sources encourages reliable citation and motivates companies to connect answers to original public content. Launch pilot programs and targeted regulations to test and refine these measures, starting with major consumer AI systems.
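One possible shape for such a provenance bundle is sketched below in Python. The article does not name a standard format, so the class names, the field names, and the `missing_versions` check are assumptions made for illustration, not an existing specification. The sketch shows only the two properties the proposal asks for: the bundle serializes to a machine-readable form (JSON here), and every cited source carries an exact version.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class CitedSource:
    url: str        # public page the answer drew on
    revision: str   # exact version identifier of that page
    excerpt: str    # passage the answer relied on


@dataclass
class ProvenanceBundle:
    answer_id: str
    sources: List[CitedSource]

    def to_json(self) -> str:
        # asdict() converts nested dataclasses, so the output is plain JSON.
        return json.dumps(asdict(self), indent=2, sort_keys=True)


def missing_versions(bundle: ProvenanceBundle) -> List[str]:
    """Return the URLs of cited sources that lack an exact version."""
    return [s.url for s in bundle.sources if not s.revision.strip()]


# Hypothetical example values, for illustration only.
bundle = ProvenanceBundle(
    answer_id="answer-001",
    sources=[
        CitedSource("https://example.org/page-a", "rev-1001", "First passage used."),
        CitedSource("https://example.org/page-b", "", "Second passage used."),
    ],
)
print(bundle.to_json())
print(missing_versions(bundle))
```

A check like `missing_versions` is the kind of test a pilot program could run automatically: a bundle that names a page but not a version cannot be verified against what the system actually used.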

Expect resistance and be ready for it. Tech advocates correctly point out that AI can identify contradictions, expand expert judgment, and suggest useful edits faster than human teams alone. These benefits are real, but the way incentives are set up determines whether they reach the public. A platform designed to maximize user participation won't automatically prioritize ensuring information is traceable and correct. Design and policy must align the platform's incentives with the public good. If correcting information is a social priority, product design and regulations should reward it, rather than relying on companies to act out of goodwill.

Remember: convenience is not completeness. LLMs are popular for speed but lack public traceability. To preserve reliable knowledge, educators should require clear source documentation; institutions must support and fund public knowledge projects; policymakers should ensure AI systems credit original information sources. These steps guide AI's benefits toward maintaining open, verifiable knowledge. Sustaining public knowledge requires deliberate choices in teaching, funding, and rule-making. Without this, public knowledge risks becoming private, uncheckable answers.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Bartels, M. (2026). At 25, Wikipedia Now Faces Its Most Existential Threat—Generative A.I. Scientific American.

OpenAI Community. (2025). What will be the fate of StackOverflow? Community Forum.

Reeves, N., Yin, W., & Simperl, E. (2024). Exploring the Impact of ChatGPT on Wikipedia Engagement. arXiv preprint.

Stack Overflow Meta. (2025). Do you agree with Gergely that 'Stack Overflow is almost dead'? Meta StackOverflow.

Wikimedia Foundation. (2024). Wikipedia:Statistics.
