Pay for the Light: Why AI transparency funding must be the price of openness

- AI transparency is a public good that cannot survive without explicit funding.

- Unfunded openness will weaken Western firms against state-subsidized competitors.

- Paying for transparency is the only way to keep AI markets both open and competitive.

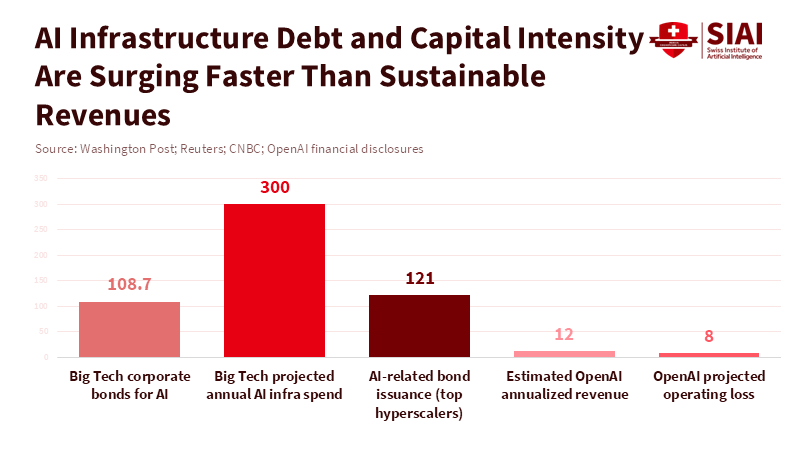

In the last three months of 2025, tech companies borrowed around $109 billion through corporate bonds to finance the intense competition in AI. Big AI companies shifted from small research budgets to huge data centers and computing power, costing them tens of billions more. This shows something important: open AI systems will only stay open if there is money to cover the electricity, staff, checks, and responsible management that make openness real. Saying that AI transparency is a good thing, legally or morally, is important, but it is not the whole story. California's Transparency in Frontier Artificial Intelligence Act requires companies developing frontier AI to increase transparency, which could put them at a disadvantage against international competitors, especially those backed by governments. To protect both our values and fair competition, we have to connect transparency with a way to pay for it. AI transparency funding would put a fair price on the benefits to everyone and make funding an explicit part of policy.

The Reality of Paying for AI Transparency

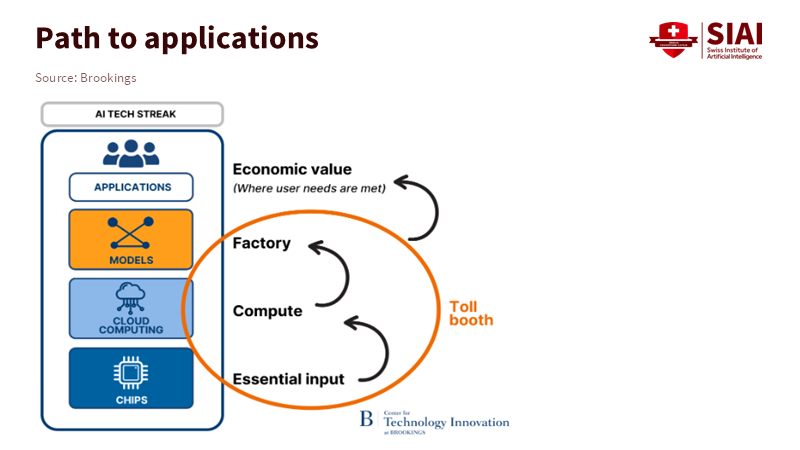

First, a lot of money is being spent on infrastructure. Top companies are building massive data centers and borrowing heavily to do it. Training and running the biggest AI models now requires a constant flow of money for chips, power, cooling systems, and fast network connections. Companies get this money from investors, partners, and by selling bonds. This creates a pattern: investors are paying for growth now, hoping to make money later. But making money later isn't a sure thing. Competition, efficiency gains, and global politics can lower the expected profits. Open AI models and shared training data add costs for checks, removal of sensitive information, documentation, and compliance. These costs don't create new income on their own unless the openness is tied to something customers pay for. This creates a familiar public-good problem: companies are asked to provide something good for everyone, real transparency, but their own profits depend on keeping their technology secret.

Second, there's a big difference in government support around the world. Many AI projects in China get direct and indirect support from the government. This includes money, tax breaks, shared computing networks, and support from local governments, all of which lower the cost for companies to grow. According to the Foreign Policy Research Institute, local governments in China, such as those in Beijing, Shanghai, and Shenzhen, subsidize the cost of computing power for AI startups through computing power vouchers. In contrast, companies in the United States generally do not receive similar government support. This matters because if transparency rules are the same for everyone, without considering where the money comes from, they could favor companies that are supported by subsidies. If transparency is a requirement for everyone, it needs to come with ways to pay for it that reflect these differences, or it will unfairly hurt companies that don't get subsidies.

That's why AI transparency funding is important: we need to pay for transparency directly. This could be done in different ways, like:

- Direct payments from the government to help companies check their AI.

- A fee paid by big AI companies that goes into a fund for public transparency.

- Tax credits for independent checks of AI models.

- Payments to smaller companies to help them follow the rules.

The specific plan is important, but the main idea is simple. If transparency creates benefits for everyone – like less misinformation, better safety, ways for regulators to check AI, and more trust – then it makes sense to use public money or have the industry work together to pay for it. Asking companies to pay for it on their own puts a strain on the market and encourages them to cut corners.

AI Transparency Funding: How to Compete with Rivals Supported by Governments

The central strategic question for Western firms is not whether transparency is desirable — it is — but how to keep their commercial engines running while meeting it. Open models and published datasets can create ecosystem value: researchers reuse, startups build, regulators inspect. But monetising that value is nontrivial. Advertising, enterprise subscriptions, vertical services and device ecosystems are plausible revenue routes for companies steeped in platform economics. Yet the numbers show a gap between headline valuations and durable cash flow in many frontier players, and the capital intensity of data centres and chips keeps growing. That gap is why some investors speak of an “AI bubble”: valuations price in sustained, very large margins that hinge on monopoly positions or unmatched scale. When rivals are state-backed, the contest is not just about product quality; it is about who can afford to subsidise a long investment horizon.

A practical response is to design transparency rules that recognise financing realities. One element is transitional relief calibrated by firm size, revenue, or access to strategic capital. A second is co-funding arrangements that link transparency to commercial advantage: firms that open crucial model documentation could receive preferential access to public procurement, tax credits for AI R&D, or co-funded compute credits. That makes transparency a serviceable investment: companies will open up because it buys market access and lowers other costs. A third element is mandatory contribution models. If the public gains from independent audits, a small industry levy earmarked for public-interest auditing bodies can scale as the market grows; that prevents free-riding and keeps audit capacity well-funded.

A final element is targeted risk sharing for the costliest pieces of transparency: independent red teams, third-party compute replication for validation, and long-running model documentation. These are expensive, uncertain, and not easily amortised into a subscription fee. Pooling those costs, or underwriting them through public guarantees, reduces the chance that a firm will avoid transparency because it cannot afford the verification.

AI Transparency Funding: Principles for Design and Policy

Policy should be based on four connected ideas. First, transparency requirements should be clear and specific. If they're vague, companies will do the minimum. Specify what information to share (model cards, training data, compute budgets, audit logs) and how to make results reproducible. Second, fund the standards. Create a public audit body, funded by user fees, industry fees, and government money, so that it cannot be captured by the companies it checks. Third, match requirements to capabilities. Very large, general-purpose models should face stronger transparency and cost-sharing requirements than small, specialized models. This leaves room for new ideas while targeting the biggest risks.

Fourth, make funding depend on performance. Payments, credits, and contracts should go to companies that meet strict transparency goals, checked by independent auditors. This sends a message that transparency isn't just for show, but a performance measure that improves competitiveness. It also avoids punishing companies that are transparent but underperform. Funds should reward real openness and verifiability. For small companies, the costs of auditing and paperwork make it harder to enter the market, so support should help cover them.

A good plan could combine several ways to support transparency. A basic transparency standard would apply to all widely used systems. According to a study by Theresa Züger, Laura State, and Lena Winter, evaluating AI projects with a public-interest and sustainability focus can involve funding mechanisms such as a fee that increases with revenue to support public audits and measures to monitor computing power. Additional payments could be allocated to shared resources that benefit everyone, including safety test datasets, open benchmarks, and independent studies that assess how AI models perform. International cooperation would help create a level playing field. Where foreign subsidies are clearly documented, trade remedies or matching funding could be considered to prevent unfair advantages. The policy should combine laws, funding, and international agreements.
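As a rough illustration of "a fee that increases with revenue", the sketch below computes a levy using marginal brackets, so the effective rate rises as a company grows. The bracket thresholds, the rates, and the function name `transparency_levy` are hypothetical assumptions chosen only to show the arithmetic; they are not figures proposed in this article or in the study cited above.

```python
# Hypothetical marginal brackets: (lower revenue bound in USD, rate in basis points).
# All thresholds and rates are illustrative assumptions, not policy proposals.
BRACKETS = [
    (0, 0),                # revenue below $100M: exempt
    (100_000_000, 10),     # 0.10% on revenue between $100M and $1B
    (1_000_000_000, 20),   # 0.20% on revenue above $1B
]


def transparency_levy(revenue_usd: int) -> int:
    """Return the levy in whole USD owed on annual revenue under BRACKETS."""
    if revenue_usd < 0:
        raise ValueError("revenue_usd must be non-negative")
    levy = 0
    for i, (lower, rate_bps) in enumerate(BRACKETS):
        # The top bracket has no upper bound, so cap it at the revenue itself.
        upper = BRACKETS[i + 1][0] if i + 1 < len(BRACKETS) else revenue_usd
        # Only the slice of revenue that falls inside this bracket is charged.
        taxable = max(0, min(revenue_usd, upper) - lower)
        levy += taxable * rate_bps // 10_000
    return levy


if __name__ == "__main__":
    for revenue in (50_000_000, 500_000_000, 2_000_000_000):
        print(revenue, transparency_levy(revenue))
```

Under these assumed brackets, a company with $50 million in revenue pays nothing, one with $500 million pays $400,000, and one with $2 billion pays $2,900,000. Marginal brackets avoid a cliff at each threshold, which is one way to keep the fee from discouraging growth.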

AI Transparency Funding: What It Means for Educators, Administrators, and Policymakers

Educators and administrators need to see AI transparency funding as both something to manage and something to teach. For universities and research labs, transparency funding can support steady collaboration. Government or industry payments that require open model documentation create predictable income for staff and data management. Administrators should build long-term capacity to manage data and audit models. These skills will be valuable in both education and public service. When buying AI systems, education systems should prefer companies that commit to real transparency and accept cost-sharing or payment plans tied to audit performance.

For policymakers, the challenge is to create the right system. Short, simple rules will either be evaded or create too many burdens. Instead, create a policy that combines basic requirements, shared funding, and targeted rewards, using many tools. Create clear ways to report information and to obtain third-party certification to reduce friction. Most importantly, build public audit groups with technical knowledge and shared governance so they can't be easily controlled by companies. Internationally, coordinate standards with other countries so that transparency requirements don't unfairly hurt the companies that comply.

Expect some criticism. Some will say that public funding of transparency is helping companies too much. The response is that the public already pays when private systems create problems like misinformation, privacy issues, and systemic risk. Funding transparency is an investment that reduces those problems and creates a public asset: models and datasets that can be audited and checked. Others will argue that fees and requirements will stop new ideas. But history shows the opposite when rules are paired with predictable funding and market rewards. Clarity lowers uncertainty and can grow markets by building trust. Finally, some will say that supporting domestic companies is protectionist. That's why the plan is important: link support to auditability and provide access to shared public resources, and allow qualified international companies to participate under similar rules.

Transparency without funding is just talk. The huge sums being spent on AI development show why funding is important. If openness is going to be real, it has to be priced and funded in a way that protects competition, encourages verification, and protects the public interest. Policymakers should create AI transparency funding now. They should fund public audit groups, allow fees tied to company size, and create benefits for verified openness. Educators and administrators should demand suppliers that can be audited and build the ability to manage models. Investors should prefer companies that see transparency as an asset. If we do this, openness will become a competitive advantage. If we don't, transparency will either be fake or cause companies to leave the market. We have a choice to make, and time is running out.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Bloomberg. (2026). A guide to the circular deals underpinning the AI boom. Bloomberg Business.

Epoch AI. (2025). Most of OpenAI’s 2024 compute went to experiments. Epoch AI Data Insights.

Forbes. (2025). AI data centers have paid $6B+ in tariffs in 2025 — a cost to U.S. AI competitiveness. Forbes.

Financial Times. (2025). How OpenAI put itself at the centre of a $1tn network of deals. Financial Times.

RAND Corporation. (2025). Full Stack: China's evolving industrial policy for AI. RAND Perspectives.

Reuters. (2026). OpenAI CFO says annualized revenue crosses $20 billion in 2025. Reuters.

Statista. (2024). Estimated cost of training selected AI models. Statista.

Visual Capitalist. (2025). Charted: The surging cost of training AI models. Visual Capitalist.

Washington Post. (2026). Big Tech is taking on more debt than ever to fund its AI aspirations. Washington Post.

CNBC. (2025). Dust to data centers: The year AI tech giants, and billions in debt, began remaking the American landscape. CNBC.
