Wearable AI: How Our Bodies Are Becoming the Next Tech Hub

Wearable AI is moving computing from screens to body-level devices. Education policy must balance personalization with privacy and trust. Early rules will decide whether wearable AI helps learning or harms it.

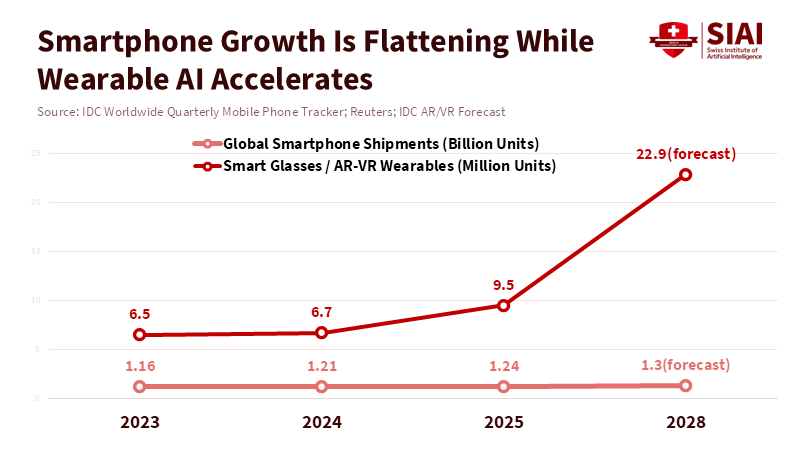

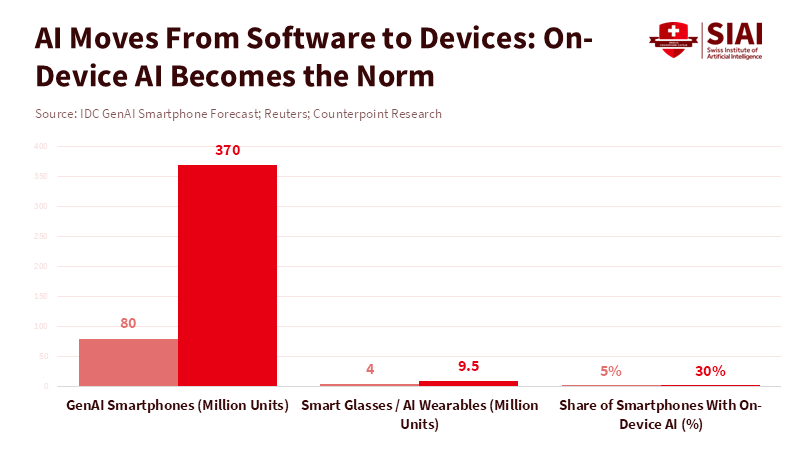

Initially, artificial intelligence (AI) focused primarily on processing text and images. By 2025, advances in AI enabled it to interpret video content, including scene analysis, action recognition, and temporal changes. Now major technology companies are investing heavily in wearable devices, marking a shift toward the physical integration of AI. This transition suggests that the smartphone, long the primary personal device, is giving way to ubiquitous systems embedded in clothing, eyewear, and domestic environments. Wearable AI isn't just one new product. It's a whole shift in how we use technology, and it calls for new rules about privacy, how schools procure technology, and even what we teach in classrooms. The question isn't whether wearable AI is coming, but how we can ensure it helps students learn rather than taking over the learning process.

Wearable AI: A New Way of Doing Things

The advent of smartphones fundamentally altered attention, work, and learning patterns through a single, portable interface. Wearable AI has the potential to drive another paradigm shift by integrating pervasive sensors, on-device AI processing, and continuous connectivity. Companies are developing compact devices, such as pins, glasses, and earbuds, equipped with cameras, microphones, and AI chips that integrate with cloud systems to anticipate user needs, summarize ongoing activities, and manage complex tasks.

This technical pivot has direct educational consequences. Unlike a laptop or phone, a wearable is designed to occupy the same physical space as a learner and to move with them through school, home and fieldwork. That means continuous contextual data — gaze, location, ambient audio, short video clips, and biometric readings — may become available for adaptive tutoring, classroom management, and formative assessment. The promise is personalized in situ feedback: a wearable that detects a student’s confusion during a lab and provides a hint, or that captures a teacher’s explanation and produces a searchable summary. But the presence of persistent sensing also creates new structural risks. Surveillance-style deployments can chill discussion, undermine equitable access, and transform informal peer interactions into recorded artifacts. The policy imperative is to design norms and procurement rules that preserve pedagogical gains while preventing data capture that corrodes trust.

What This Means for Schools

The transition to wearable AI has significant implications for educational settings. Unlike laptops or smartphones, wearable devices are designed for continuous use, accompanying students throughout various environments. Consequently, extensive data—including gaze tracking, location, audio, video, and health metrics—may be leveraged to support learning, classroom management, and student assessment.

Imagine a wearable device that detects when a student is confused in a lab and provides a hint, or one that records a teacher's explanation and generates a summary that students can search later. But there are risks, too. Constantly monitoring students could make them feel like they're always being watched, make it harder for everyone to have equal access to learning, and turn casual conversations with friends into recorded events. We need rules to ensure we get the benefits of wearable AI without losing trust.

Wearable AI in the Classroom: What's Possible, What's Not

Schools will want to experiment with wearable AI to help students learn individually. For example, in English class, a wearable could help with pronunciation, explain new words, and track how much students participate. In a chemistry lab, it could demonstrate safe procedures and record how well students follow them. These aren't just ideas; some schools are already trying out sensors and feedback to help students practice skills and learn languages.

When implemented correctly, wearable AI can accelerate feedback and show how students learn. But we need to be realistic about what it can do. AI can make mistakes, sensors can misread situations, and summaries can leave out important details. The best systems will be simple, keep teachers in charge, explain how decisions are made, and allow students to opt out easily. Data from these systems should be seen as clues, not as final judgments.

Fairness, Tech Support, and Buying Decisions

Whether wearable AI helps everyone or just makes things worse for some depends on fairness, tech support, and how schools make buying decisions. Many schools lack reliable Wi-Fi, effective device management, or sufficient staff to monitor how the AI is performing. Buying an AI pin or smart glasses is just the beginning. Schools also need to budget for security, student login management, AI monitoring, and teacher training.

Cost is a significant factor. Projections indicate that the wearable AI market will soon reach a multi-billion-dollar valuation, attracting considerable corporate interest while also driving rapid product turnover. Schools should avoid vendor lock-in by advocating for interoperability standards. It is essential to require transparent performance metrics, robust privacy assurances, and opportunities for independent evaluation of AI systems.

Regulatory Considerations for Wearable AI

Wearable AI is prompting us to rethink rules on student privacy, safety, what we teach, and how schools procure technology. Old privacy rules focus on data stored on servers. Wearables complicate matters because they process data on the device, continuously collect sensor data, and make context-based decisions. Rules need to cover not just how data is stored, but also how it's collected and how decisions are made in real time.

Schools should ensure that any device used for learning has clear controls that let users turn off the camera or see what the AI is processing during a lesson. Contracts should include explanations of how the AI works, transparent updates, and guarantees that student data can be deleted. Without these safeguards, schools could end up using AI systems that make critical decisions without clear explanations.

Rules need to be practical. Too many restrictions can hinder effective learning and create inequality when only wealthy schools can afford custom solutions. On the other hand, no rules at all could lead to companies exploiting students and schools constantly monitoring them. A good approach is to have specific rules, like limits on recording video during private moments, clear notices to parents and students about what's being recorded, requirements that AI used for evaluation be checked by independent labs, and expiration dates for data permissions so that data doesn't stay around forever. Policies should also clearly define the role of teachers: wearables should help teachers, not replace them.
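To make two of these rules concrete, here is a minimal sketch in Python of how a school system might check a recording request against an expiring permission and a list of private settings. Everything in it is an assumption made for illustration: the `Consent` record, the `may_record` function, the context labels, and the 90-day window do not come from any real product or regulation.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical labels for settings where video should never be recorded.
PRIVATE_CONTEXTS = {"restroom", "counseling", "nurse_office"}


@dataclass(frozen=True)
class Consent:
    """A permission granted by a family for one sensor (illustrative only)."""
    student_id: str
    sensor: str        # e.g. "video" or "audio"
    granted_on: date
    valid_days: int    # the permission lapses after this many days

    def expires_on(self) -> date:
        return self.granted_on + timedelta(days=self.valid_days)

    def is_active(self, today: date) -> bool:
        # Active from the grant date up to, but not including, the expiry date.
        return self.granted_on <= today < self.expires_on()


def may_record(consent: Consent, sensor: str, context: str, today: date) -> bool:
    """Allow recording only with matching, unexpired consent outside private settings."""
    if sensor == "video" and context in PRIVATE_CONTEXTS:
        return False
    return consent.sensor == sensor and consent.is_active(today)


# Example: a 90-day video permission granted on 10 January 2026.
consent = Consent("s-001", "video", date(2026, 1, 10), valid_days=90)
in_lab = may_record(consent, "video", "chemistry_lab", date(2026, 2, 1))
in_counseling = may_record(consent, "video", "counseling", date(2026, 2, 1))
after_expiry = may_record(consent, "video", "chemistry_lab", date(2026, 6, 1))
```

In this sketch the lab request is allowed, the counseling request is refused regardless of consent, and the June request is refused because the permission has lapsed. A real deployment would also need the parent notices and independent checks described above, which code alone cannot provide.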

Addressing Key Concerns

Some people will say that wearable AI is just another gadget that will fail like past experiments. They'll point to failed hardware and how hard it is to change people's habits. These are valid points, but they don't tell the whole story. Past failures often occurred when the tech didn't really meet users' needs or fit well with existing workflows. Today's wearables are different. They have more powerful AI, better batteries, and a more coherent software ecosystem that connects to cloud services. Companies are also tying hardware to platform strategies to create ecosystems that keep people locked in, as smartphones already do. That's why we need to set rules now, before it's too late.

Concerns regarding accuracy and bias are also prominent. AI systems trained on generalized internet data may misinterpret speech or cultural cues, posing significant risks in educational contexts. Mitigation strategies include mandating transparency in training data, evaluating AI performance with diverse student populations, and instituting regular re-assessments. Automated assessments should be considered preliminary and supplemented by teacher judgment. Contractual requirements for these practices will incentivize companies to adapt, enabling the development of assessment tools that support, rather than supplant, educators.

How to Get Started

Start small and be ready to change course if needed. Schools should test wearable AI in limited programs with clear goals: accurate tutoring, satisfied teachers, and measurable student progress. Data plans should be in place from the start, with limits on how long data is retained and how it's used, plus independent audits. According to a study by Alzghaibi, the successful use of AI-powered wearable devices depends on factors like user education, technical reliability, and professional oversight. Schools should select wearables that prioritize these elements, protect privacy, and allow a switch between brands without disrupting learning. These steps may cost more upfront, but they reduce risk and protect trust.

To encourage responsible use of wearable AI, policymakers can reward companies that minimize data retention, have strong on-device AI, and provide transparent information about how their AI works. Grant programs can help schools pay for audits and teacher training. Teacher training programs should include AI content to help teachers evaluate and use wearables safely. Without these measures, schools might either reject useful tools out of fear or adopt them unsafely.

Wearable AI is the next step in tech. It's not just a futuristic idea. The AI that used to be in the cloud will increasingly be on our bodies, in our pockets, and on our walls. This is both a promise and a threat. It promises immediate learning support, but it threatens constant surveillance and unfair assessments. Schools must not let companies make decisions for them. Instead, administrators, policymakers, and teachers should work together to set standards that prioritize privacy and explainability, invest in trials with clear learning objectives, and require independent checks of AI behavior. If we make wearable AI a tool that helps teachers rather than replacing them, we can gain its benefits while protecting people's rights. Now is the time to set rules that future students will appreciate.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Fortune Business Insights. (2025). Wearable AI Market Size, Share & Trends: Forecast to 2034. Fortune Business Insights.

Grand View Research. (2025). Wearable AI Market Report: Industry Analysis and Forecast to 2033. Grand View Research.

IDC. (2024). Worldwide wearable device shipments forecast and market analysis. IDC Press Release.

MacRumors Staff. (2026, January 21). Apple Developing AirTag-Sized AI Pin With Dual Cameras. MacRumors.

Reuters. (2026, January 21). Apple to revamp Siri as a built-in chatbot (Bloomberg/Reuters reporting). Reuters.

Scientific American. (2026). AI’s next battleground is your body. Scientific American.

The Verge. (2026). Apple is reportedly working on an AirTag-sized AI pin. The Verge.

Wired. (2026). Gear News of the Week: Apple’s AI Wearable and a Phone That Can Boot Android, Linux, and Windows. Wired.
