The Age of the Reality Notary: How Schools and Institutions Must Learn to Certify Truth

Digital truth can no longer be judged by human sight or sound alone. Institutions must certify reality, not just detect fakes after harm occurs. Education systems now play a central role in rebuilding trust in evidence.

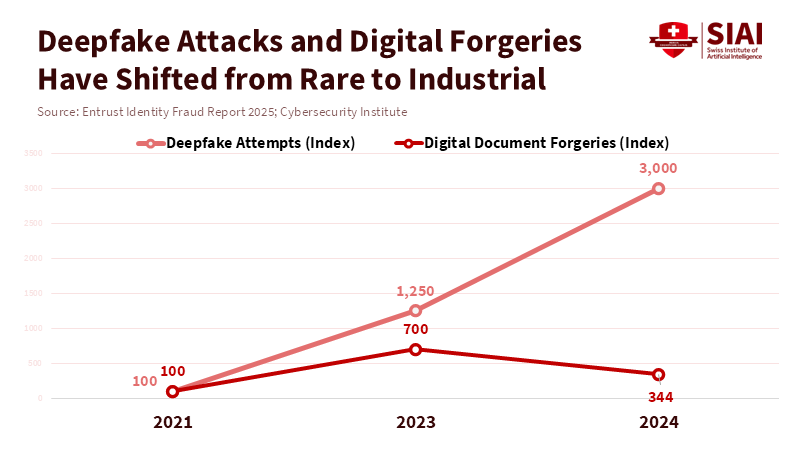

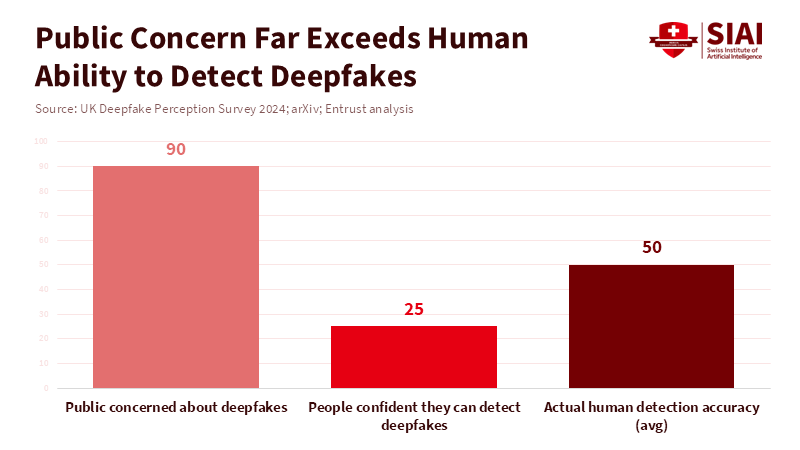

In 2024, there was a deepfake attempt about every five minutes. Surveys suggest that most people worry about being fooled by fake audio or video. Together, these two facts are a warning: fake sights and sounds have quickly become a serious problem, and they mark a real shift in how we establish public truth. Schools, leaders, and lawmakers need to do more than teach people how to spot fakes. They need to rebuild the ways we trust images, recordings, and documents. We need to create a system of reality keepers: professionals and organizations that can certify trustworthy digital proof. If we don't, we risk losing control over public truth to those who can easily weaponize fake media.

Reality Keepers: Changing the Role of Verification in Education and Organizations

Usually, we think of verification as something experts do after content has been created. An expert examines the details of a film, its metadata, or a physical object, then makes a decision. This approach is becoming outdated. Fake media is now generated by computer programs trained on large datasets of images and audio. These programs can replicate lighting, facial expressions, and even unique speaking styles. Verification is no longer just about finding a single-pixel error. It's about proving where an image or recording came from and when it was made. We need to start verifying information earlier, closer to where images and recordings first appear. Schools and organizations need to teach and use methods that verify content sources at the moment of creation and sharing, rather than trying to identify fakes later. Detection tools are improving, but they still have limits. Detectors work well on techniques they have already seen, but they struggle with new methods and variations. This means we can't rely on detection alone. Organizations need processes in place to support it.

This is important because it changes who is responsible for the truth. Before, newspapers, courts, and labs were the ones who decided what was real, even though they weren't perfect. Now, schools, online platforms, and service providers need to help certify content as real enough for specific uses. The idea of a reality keeper includes being a technician, an auditor, and a teacher. A reality keeper might create a digital record that includes information about where something came from, when it was created, which device was used, and any changes made. This record would stay with the media. For everyday tasks such as online notarizations, rental agreements, and school assignments, this record could be the difference between trust and fraud. This focus on origin is important because, while detectors are improving, fake media methods are improving even faster. We need to focus on protecting the truth from the outset, not after the damage is done.
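The record described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a standard: the field names, the `ProvenanceRecord` class, and the helper functions are all hypothetical, and a shared-secret HMAC stands in for the public-key signatures and trusted timestamps that a real provenance system would more likely use.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass, field, asdict


@dataclass
class ProvenanceRecord:
    # All field names are illustrative assumptions, not an established schema.
    content_sha256: str   # fingerprint of the media bytes
    source: str           # who or what produced the media
    device_id: str        # capturing device
    created_at: str       # capture time, ISO 8601
    edits: list = field(default_factory=list)  # descriptions of later changes
    signature: str = ""   # HMAC over every other field


def _payload(record):
    # Serialize every field except the signature in a stable key order.
    data = asdict(record)
    data.pop("signature")
    return json.dumps(data, sort_keys=True).encode("utf-8")


def sign(record, key):
    # Assumption: a shared secret key; real systems would use key pairs.
    record.signature = hmac.new(key, _payload(record), hashlib.sha256).hexdigest()
    return record


def make_record(media, source, device_id, created_at, key):
    digest = hashlib.sha256(media).hexdigest()
    return sign(ProvenanceRecord(digest, source, device_id, created_at), key)


def log_edit(record, new_media, description, key):
    # Note the change, re-fingerprint the edited media, and re-sign.
    record.edits.append(description)
    record.content_sha256 = hashlib.sha256(new_media).hexdigest()
    return sign(record, key)


def verify(record, media, key):
    # The record must be unaltered and must match the media it travels with.
    expected = hmac.new(key, _payload(record), hashlib.sha256).hexdigest()
    return (hmac.compare_digest(expected, record.signature)
            and hashlib.sha256(media).hexdigest() == record.content_sha256)
```

In this sketch, `verify` fails if either the media bytes or any field of the record is changed without re-signing, which is the property that lets the record "stay with the media" in a meaningful way.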

The Evidence: The Problem, the Cost, and the Limits of Human Judgment

The numbers show why we need to act quickly. Fake content and fraud have increased sharply. Companies that track identity fraud report a deepfake attempt about every five minutes. Surveys say that people are apprehensive about being tricked. Analysts project that, unless we take action, generative AI tools will drive fraud up by billions of dollars across finance, real estate, and business between now and the middle of the decade. These problems are not imaginary. Title insurers, escrow agents, and banks have already reported cases in which fake voices or videos were used to steal funds. The finance sector is an early warning. When fraud becomes too costly, the response is swift: transactions are frozen, rules are tightened, and lawsuits are filed.

How well people can spot fakes tells another part of the story. Studies show that people are poor at detecting high-quality forgeries, so we can no longer rely on human accuracy. Automated detectors can perform well, but mainly in controlled settings. In real-world settings, where attackers continually adapt their methods, detectors perform less effectively, particularly as fake media techniques evolve. Organizations that rely on people alone will miss more forgeries. Those that rely only on detectors will face false positives and policies that fail against new attacks. We need a mix of human and computer verification, along with clear steps for verifying information and accountability for those who don't follow them. This is where the role of the reality keeper comes in.

What Schools, Leaders, and Lawmakers Must Do Now to Build Reality Keeper Systems

First, schools need to do more than teach a single lesson on media literacy. They need to build skills that develop over time, from kindergarten through college and professional training. Younger students should learn simple habits, like noting who took a picture, when, and why. Older students should learn to read metadata, understand how information is stored, and practice tracking sources. Professionals such as journalists, notaries, and title agents should verify information, maintain audit logs, and require proof of origin from others. These skills are essential for everyone. We can teach them in practical ways, such as pairing classroom activities with foundational tools, running simulations in which students create and identify fakes, and offering short professional certificates that require ongoing training. The goal is not to create many image verification experts, but to ensure that organizations have simple, repeatable ways to make it harder to create fakes.

Second, we should require proof of origin for essential transactions. This proof would include information about the device used to create the content, a secure timestamp, a record of any changes made, and, when needed, a verified identity. For matters such as remote closings, loan approvals, and election materials, organizations should not accept media that doesn't meet a basic standard of proof. The point is not to make small things complicated, but to create easy, automated checks that don't inconvenience legitimate users while making it more complex and more expensive to commit fraud.
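An automated check of this kind could be as simple as the following sketch. Everything specific here is an assumption made for illustration: the field names, the list of high-risk transaction types, and the 30-day freshness window are hypothetical, and the function only checks that the required information is present and plausible, not that it is cryptographically authentic.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical minimum standard: every proof needs these fields.
REQUIRED_FIELDS = ("device_id", "timestamp", "edit_log")
# Hypothetical list of transactions that also need a verified identity.
HIGH_RISK = {"remote_closing", "loan_approval", "election_material"}


def check_proof(proof, transaction_type, now, max_age=timedelta(days=30)):
    """Return a list of problems; an empty list means the proof is accepted."""
    problems = [f"missing {name}" for name in REQUIRED_FIELDS if name not in proof]
    if transaction_type in HIGH_RISK and not proof.get("verified_identity"):
        problems.append("missing verified_identity")
    if "timestamp" in proof:
        # Timestamps are assumed to be ISO 8601 strings with a UTC offset,
        # and `now` is assumed to be a timezone-aware datetime.
        captured = datetime.fromisoformat(proof["timestamp"])
        if captured > now:
            problems.append("timestamp is in the future")
        elif now - captured > max_age:
            problems.append("timestamp is too old")
    return problems


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
proof = {
    "device_id": "cam-01",
    "timestamp": "2025-05-20T10:00:00+00:00",
    "edit_log": [],
}
print(check_proof(proof, "school_assignment", now))
print(check_proof(proof, "remote_closing", now))
```

The same proof passes for a low-stakes school assignment but is rejected for a remote closing because no verified identity is attached, which reflects the idea of keeping small things simple while raising the bar for essential transactions.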

Third, we need to create official reality keeper roles and give organizations a reason to use them. Reality keepers would be licensed professionals who work in organizations such as schools, banks, and courts, or who are provided by trusted third parties. Their license would require them to comply with standards for verification, audit practices, and legal responsibilities. Accreditation wouldn't require advanced technical skills, but it would require following standard procedures: capturing proof of origin, tracking the source of information, maintaining basic security knowledge, and handling disputes. Training can be lightweight: according to EasyLearn ING, professionals can strengthen defenses against digital fraud, including deepfakes, by participating in short, practical courses focused on verification skills. Organizations also need an incentive to adopt these roles. Insurance policies and regulations could reward those who consistently use reality-keeper techniques. Lawmakers should see these programs as essential and fund pilot programs in schools and high-risk areas.

Addressing the Main Concerns

Some might worry that this will be expensive and complicated. But the cost of doing nothing is rising faster. The cost of adding proof of origin to a video or document is small compared to the value of transactions in finance and real estate. Studies show that the extra work is manageable and can be offset by lower fraud losses and insurance savings. Others might say that attackers will adapt, and that verification will never be perfect. That's true, but we don't need perfection. We just need to make it harder for fraud to be worth it. Even a single additional verification step for wire transfers can prevent much of the crime. Some may be concerned about surveillance and privacy. But we can design the proof of origin to include only the necessary information and to use privacy-protecting methods. We need rules and oversight to minimize the use of data. The alternative—allowing fake content to spread unchecked—creates much bigger problems for privacy and democracy by weakening our trust in facts.

A deepfake every five minutes is not just a statistic; it's a call to action. If we let the ways we trust images and recordings weaken, the future will be one where truth is controlled by whoever can create the best fakes. The answer is to teach about origin, require proof of origin for essential exchanges, and create accredited reality keepers who are legally and operationally responsible. Schools should teach the skills, leaders should establish standards, and lawmakers should require basic verification and fund training programs. This won't solve everything, but it will rebuild trust where it has been lost. According to a recent article, a recommended first step is launching phased pilot programs in selected educational settings that use synthetic personas, along with systems to verify the origin of essential materials and dedicated training for roles focused on maintaining digital authenticity. If these initiatives are not implemented, there is a risk that others may create deceptive content and shape public opinion. That would be a loss we can't afford.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Deloitte. (2024). Generative AI and Fraud: Projected economic impacts. Deloitte Advisory Report.

Entrust. (2024). Identity Fraud Report 2025: Deepfake attempts and digital document forgeries. Entrust, November 19, 2024.

Jumio. (2024). Global Identity Survey 2024 (consumer fear of deepfakes). Jumio Press Release, May 14, 2024.

Alliant National Title Insurance Co. (2026). Deepfake Dangers: How AI Trickery Is Targeting Real Estate Transactions. Blog post, Jan 20, 2026.

Tasnim, N., et al. (2025). AI-Generated Image Detection: An Empirical Study and Benchmarking. arXiv preprint.

Group-IB. (2025). The Anatomy of a Deepfake Voice Phishing Attack. Group-IB Blog.

Stewart Title. (2025). Deepfake Fraud in Real Estate: What Every Buyer, Seller, and Agent Needs to Know. Stewart Insights, Oct 30, 2025.
