Take It Down, Keep Moving: How to Take Down Deepfake Images Without Stalling U.S. AI Leadership

- 48-hour takedowns for non-consensual deepfakes
- Narrow guardrails curb abuse, not innovation
- Schools and platforms: simple, fast reporting workflows

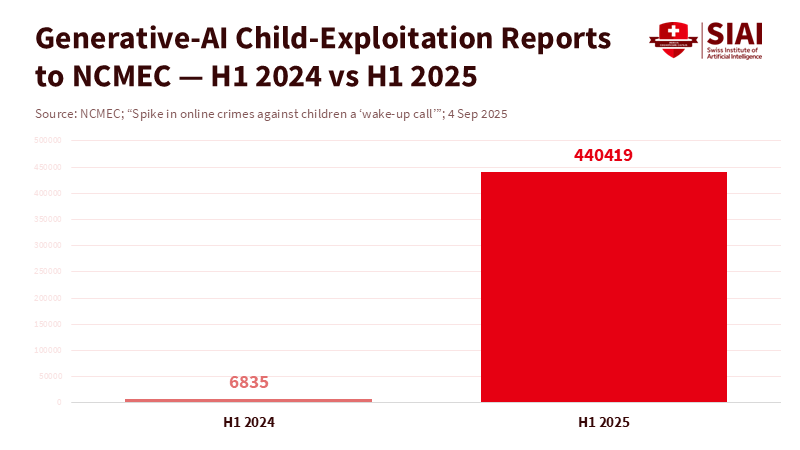

Deepfake abuse is a large and fast-growing problem. In the first half of 2025, the CyberTipline run by the National Center for Missing & Exploited Children received 440,419 reports alleging that generative AI was used to exploit children, a 64-fold increase from 2024. That harm accrues every day, so protections need to be in place quickly. The Take It Down Act aims to remove deepfake images fast without disrupting America's AI progress. Some worry that new rules will slow development and let China pull ahead. But technology can keep improving while people stay safe, and the Act shows we can protect victims and sustain AI leadership at the same time.

What the Act Does to Get Rid of Deepfake Images

The law is built around what works. It makes it a crime to publish intimate images online without consent, whether they are real photos or AI-generated. Covered platforms must set up a simple process for people to report these images and request their removal. When a victim submits a valid request, the platform must remove the content within 48 hours and make reasonable efforts to remove any known identical copies. The Federal Trade Commission enforces these obligations against platforms that fail to comply. Penalties are harsher when minors are involved, and threatening to publish such images is also a crime. The core idea is prompt removal once a victim speaks up. Platforms have until May 19, 2026, to get the reporting and removal process running, but the criminal provisions are already in effect.
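
To make the 48-hour clock concrete, here is a minimal sketch of how a platform might compute the removal deadline for a valid request. The names `REMOVAL_WINDOW`, `removal_deadline`, and `is_overdue` are hypothetical, and the sketch assumes the clock starts when the valid request is received; exactly when the clock starts is a legal question this code does not settle.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the 48-hour window starts when a valid removal request is received.
REMOVAL_WINDOW = timedelta(hours=48)


def removal_deadline(received_at: datetime) -> datetime:
    """Return the latest time by which the reported content should be removed."""
    if received_at.tzinfo is None:
        # Naive timestamps invite time-zone and daylight-saving mistakes.
        raise ValueError("use timezone-aware timestamps")
    return received_at + REMOVAL_WINDOW


def is_overdue(received_at: datetime, now: datetime) -> bool:
    """True once the 48-hour window has passed."""
    return now > removal_deadline(received_at)


if __name__ == "__main__":
    received = datetime(2026, 5, 19, 9, 30, tzinfo=timezone.utc)
    print(removal_deadline(received).isoformat())  # 2026-05-21T09:30:00+00:00
    print(is_overdue(received, received + timedelta(hours=47)))  # False
```

Storing timestamps in UTC keeps the deadline unambiguous when reviewers work across time zones.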

The details matter. Covered platforms are public-facing websites and apps that primarily host user-generated content; internet service providers and email are excluded. A good-faith provision shields platforms that promptly remove material based on apparent evidence from liability for over-removal. The Act does not displace state laws; it adds a federal floor of protection. In effect, Congress is borrowing the notice-and-takedown model familiar from copyright law and applying it where delay does serious damage, especially to children. The law targets material with no plausible free-speech defense: non-consensual sexual images that cause real harm. That narrow focus is why, unlike many other AI proposals in Washington, this one became law.

What the Law Can and Can't Do to Remove Deepfake Images

The Act responds to a sharp rise in AI-enabled exploitation. Speed matters because a single file can spread quickly across the internet, and the 48-hour rule is meant to limit lasting damage. The Act's urgency reflects how much harm delayed removal causes victims.

But a law focused on what we can see may miss what happens behind the scenes. Sexual deepfakes grab headlines; discriminatory algorithmic decisions often do not. If an automated system turns you down for credit, housing, or a job, you may not even know a computer made the decision, let alone why. Recent analysis, including a December 2025 Brookings paper, argues that lawmakers should treat these less visible AI harms as seriously as the visible ones, through impact assessments, documentation requirements, and remedies as strong as those this Act gives deepfake victims. Without such tools, regulation favors what shocks us most over what quietly limits opportunity. We should aim for parity: remove deepfake images quickly, and be able to detect and correct algorithmic bias as it happens.

Takedowns also have limits, because content on the open internet routes around them. The best-known non-consensual deepfake sites have shut down or relocated under pressure, but the material resurfaces on lesser-known sites and in private channels, and new copies appear constantly. That is why the Act requires platforms to remove known copies, not just the original link. Schools and businesses need a clear path from the first report, to platform action, to law enforcement when children are involved. The Act gives victims a federal process, but these institutions need systems that support it.

Innovation, Competition, and the U.S.–China Dynamic

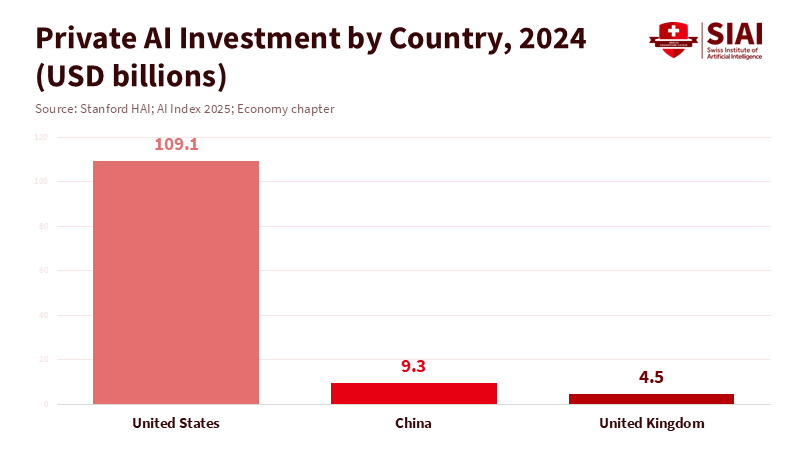

Will this takedown rule slow American innovation? The data suggest not. In 2024, U.S. private AI investment reached \$109.1 billion, nearly twelve times China's \$9.3 billion. The U.S. also leads in producing notable AI models and in funding AI projects. A clear rule on non-consensual intimate images will not erode that lead. Well-designed rules actually lower the risk for institutions that must adopt AI under strict legal and ethical obligations. Safeguards are not a drag on innovation; they encourage responsible adoption.

U.S. AI legislation is moving quickly. In 2025, states considered many AI-related bills; many addressed deepfakes, and some became law. Many are tailored to narrow situations, some conflict with one another, and a few overreach. The risk is a patchwork of shifting compliance targets. Federal officials have floated single-rulebook proposals that would limit what states can do, but businesses disagree on whether that would help. The better course is coordination: keep the Take It Down Act as the foundation, add risk-based requirements on top, and preempt state measures only where they genuinely conflict. Favor clarity over confusion.

What Schools and Platforms Should Do Now to Remove Deepfake Images

Schools can start by publishing a simple guide on how to report incidents and by adding the relevant legal basics to digital citizenship programs. Speed and guidance are key, as is connecting victims to counseling and, when needed, to law enforcement.

Platforms should use the coming months for dry runs: simulate receiving a report, verify it, remove the file, search for and delete identical copies, and document every step. Extend the takedown process to every surface where content spreads, including web, mobile, and apps. If your platform accepts third-party uploads, make sure a single victim report reaches every location where the content is stored under an internal ID. As May 19, 2026, approaches, platforms should publish brief reports on their response times. An hour saved in the workflow now could prevent real harm later. Smaller platforms can lower legal risk and engineering costs by adapting their existing DMCA notice-and-takedown workflows.
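
The dry run described above can be sketched in a few lines. This is a hypothetical illustration, not a compliance tool: `Platform`, `handle_report`, and the in-memory `files` store are invented names, the request is assumed to have already passed human review, and duplicate detection uses exact SHA-256 matching, which only finds byte-identical copies. The Act refers to known identical copies; many platforms also use perceptual hashing to catch resized or re-encoded versions, which this sketch does not attempt.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Platform:
    """Hypothetical store mapping internal content IDs to file bytes."""
    files: dict[str, bytes] = field(default_factory=dict)
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def _log(self, action: str, content_id: str) -> None:
        # Timestamp every step in UTC so response times can be reported later.
        self.audit_log.append((datetime.now(timezone.utc).isoformat(), action, content_id))

    def handle_report(self, reported_id: str) -> list[str]:
        """Remove the reported item and every byte-identical copy; return removed IDs.

        Assumes the victim's request was already verified as valid.
        """
        if reported_id not in self.files:
            self._log("report_rejected_unknown_id", reported_id)
            return []
        self._log("report_received", reported_id)
        target = hashlib.sha256(self.files[reported_id]).hexdigest()
        # Exact-match search: finds copies stored under other internal IDs,
        # but only if they are byte-for-byte identical.
        matches = [cid for cid, data in self.files.items()
                   if hashlib.sha256(data).hexdigest() == target]
        for cid in matches:
            del self.files[cid]
            self._log("removed", cid)
        return matches


if __name__ == "__main__":
    p = Platform(files={"a1": b"img", "b2": b"img", "c3": b"other"})
    print(p.handle_report("a1"))  # ['a1', 'b2']
    print(sorted(p.files))        # ['c3']
```

The audit log is what makes brief response-time reports possible, and it may also help a platform document that it acted promptly and in good faith.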

Beyond Takedown: Building More Trust

The Act is an opportunity to raise standards. First, invest in image authentication where it matters most. Schools that use AI should choose tools that support content provenance, that is, tracking where an image came from. This will not catch everything, but it ties suspicions to evidence. Second, make sure people can see when an automated tool was used and can challenge it where the stakes are high. This mirrors what the Act grants deepfake victims: a way to say, "That's wrong; fix it." Third, treat trust work as a driver of growth. America does not fall behind by making places safer. It gains adoption and lowers risk while keeping the pipeline of students and nurses open.

The volume of deepfakes will grow. That should not stop research. Institutions should put clear notice forms in place and uphold a duty of care toward children. The Act does not solve every AI problem, but by prioritizing fast response and transparency, we can build trust in AI's potential. Take these steps now to show your commitment to innovation.

We started with an alarming trend, and it underlines the need for action. The Take It Down Act requires platforms to remove reported content, and the law is clear. It will not fix everything; other harms still need to be addressed, and it will not end the battle between creators and censors. But it gives victims back some control, and it gives us a reliable way to remove deepfake images. That is what we should seek: a cycle of trust.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Brookings Institution. (2025, December 11). Addressing overlooked AI harms beyond the TAKE IT DOWN Act.

Congress.gov. (2025, May 19). S.146, TAKE IT DOWN Act. Public Law 119-12, 139 Stat. 55.

MultiState. (2025, August 8). Which AI bills were signed into law in 2025?

National Center for Missing & Exploited Children. (2025, September 4). Spike in online crimes against children a “wake-up call”.

National Conference of State Legislatures. (2025). Artificial Intelligence 2025 Legislation.

Skadden, Arps, Slate, Meagher & Flom LLP. (2025, June 10). ‘Take It Down Act’ requires online platforms to remove unauthorized intimate images and deepfakes when notified.

Stanford Human-Centered AI (HAI). (2025). The 2025 AI Index Report (Key Findings).
