The Missing Middle of Teen Chatbot Safety

Teen chatbot safety is a public-health issue now that most teens use AI chatbots, many of them daily. Adopt layered age assurance, parental controls, and a school “teen mode” with crisis routing. Set a regulatory floor and publish safety metrics so safe use becomes the default.

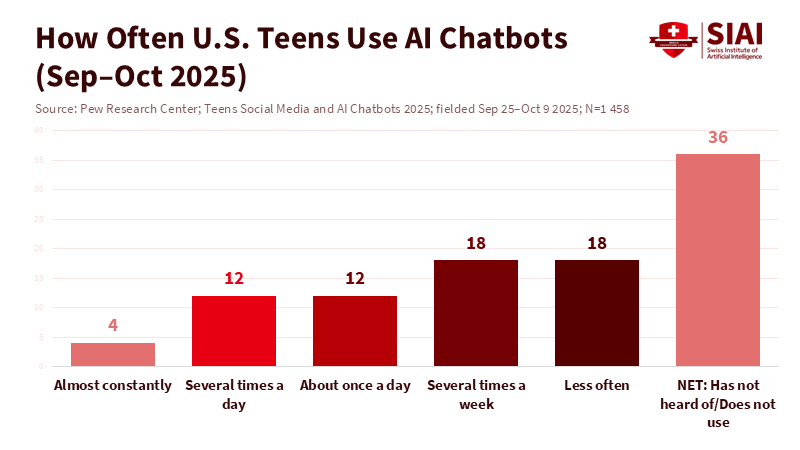

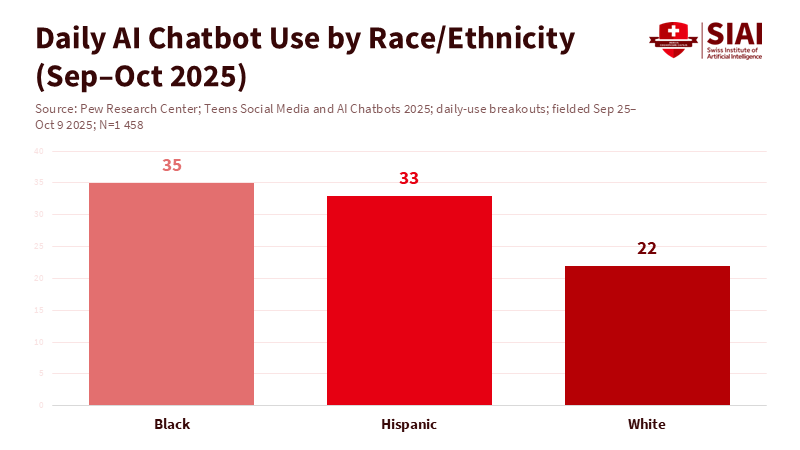

Here’s a stat that should grab your attention: around 64% of U.S. teens now use AI chatbots, and about a third use them every day. Teen chatbot safety is no longer a niche concern; it is part of teens’ daily online lives. When something becomes a habit, small failures can turn into big problems: bad information about self-harm, wrong facts about health, or a late-night so-called friend that distorts ideas about consent.

The real question isn’t whether teens are using chatbots (they clearly are) but whether schools, families, and the platforms themselves can come up with rules that keep pace with how fast and how intensely these tools are being used. We need to move from open access with no rules to a more protective approach focused on preventing problems before they start. “Good enough for adults” is not the standard. These tools need to be safe enough for kids. That bar is higher, and it should have been raised already.

Why Teen Chatbot Safety Is a Health Concern

Teens aren’t just using these tools for homework anymore; they’re using them for emotional support. In the UK, about one in four teens asked AI for help with their mental health in the last year, usually because getting help from real people felt slow, judgmental, or harsh. A U.S. survey shows most teens have tried chatbots, and many use them daily. This combination of heavy use and private conversations is risky when the chatbot answers wrongly, vaguely, or with too much confidence. It also normalizes having a friend who is always available. That isn’t harmless; it changes how teens think about advice, independence, and asking for help.

If something has this much influence, it needs safety measures built in. The evidence is there now: teens use chatbots often and for serious matters. Policy has to treat this as ongoing use, not as an app you download once. A serious event prompted demands for action. In September 2025, a family said their teen’s suicide came after months of talking to a chatbot. After that, OpenAI said it would check ages and give answers tailored to younger users, particularly around thoughts of suicide. This followed earlier talk of possibly alerting the authorities when there were signs of self-harm. That was a sensitive proposal, but it showed the risks are real. Heavy, unsupervised use at an age when you’re young and unsure is what makes persuasive but sometimes wrong systems dangerous. If the main user is a kid, the people in charge need to do what they can to keep harmful material away from them.

From Asking Your Age to Actually Knowing It: Making Teen Chatbot Safety Work

There are some basic rules already. Major platforms require users to be at least 13, and users under 18 need a parent’s permission. In 2025, OpenAI introduced parental controls so parents and teens could link accounts, set limits, block features, and add extra safeguards. The company also discussed using age prediction and ID checks so users get the correct settings and less risky content. These are must-haves; they are better than relying on users to self-report their age. Of course, consent has to mean something. Controls have to be accurate and hard to get around. Above all, protecting kids should be the priority, especially at night, when risky chats tend to happen.

Knowing a user’s age should be a system, not a one-time check. Schools and families need different layers of protection, including account settings, device rules, and safety features for younger users. Policies should require real risk management: identify likely failures (for example, incorrect information), put controls in place, measure how those controls perform, and make changes when needed.

In other words, safety for teens on chatbots should mean stricter rules for sensitive subjects, set quiet hours when the tools can’t be used, blocks on romantic or sexual content, and an easy path to a real person when there is a serious problem, all while keeping teens’ information private and avoiding unnecessary alarms. The standard should be a high level of assurance, and providers should have to prove they meet it. These rules are what turn promises into real action.
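To make the idea concrete, here is a minimal sketch of how the rules in this section might be ordered inside a teen mode. Everything in it is an assumption for illustration: the names `TeenModePolicy` and `decide`, the topic labels, and the 22:00 to 06:00 quiet hours are hypothetical and do not describe any platform’s actual behavior.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class TeenModePolicy:
    # All values below are illustrative assumptions, not real platform settings.
    quiet_start: time = time(22, 0)
    quiet_end: time = time(6, 0)
    blocked_topics: frozenset = frozenset({"romantic", "sexual"})
    crisis_topics: frozenset = frozenset({"self_harm"})


def in_quiet_hours(policy: TeenModePolicy, now: time) -> bool:
    """Return True if `now` falls inside the quiet window (which may cross midnight)."""
    if policy.quiet_start <= policy.quiet_end:
        return policy.quiet_start <= now < policy.quiet_end
    return now >= policy.quiet_start or now < policy.quiet_end


def decide(policy: TeenModePolicy, topic: str, now: time) -> str:
    """Pick one action for a message that has already been labeled with a topic."""
    # Crisis routing is checked first so quiet hours never block a path to help.
    if topic in policy.crisis_topics:
        return "route_to_human"
    if topic in policy.blocked_topics:
        return "block"
    if in_quiet_hours(policy, now):
        return "quiet_hours"
    return "allow"
```

The ordering is the design choice worth noticing: a teen in crisis at 11:30 p.m. should reach a person, not a “come back tomorrow” screen.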

A Schools-First Idea for Teen Chatbot Safety

Schools are right in the middle of all this, but policies often treat them like they don’t matter. Surveys from 2024 and 2025 found that many teens are using AI to help with schoolwork, often without teachers even knowing. A lot of parents think schools haven’t said much about what’s okay and what’s not. We can fix this gap. School districts can put a simple, doable plan in place: have different levels of access based on what you’re doing, have people check things over, and have clear rules about data.

What I mean is, there should be one mode for checking facts and writing with sources, and another, stricter mode for deep or emotional questions. A teacher should be able to see which mode the student is using, what they’re asking about, and a short explanation of why that use is allowed on the device. Data rules need to be clear: don’t train the AI on student chats, keep logging to a minimum, and have set rules for deleting data. Schools already do this for search engines and video, so they should do it for chatbots too.

School safety for teen chatbots should also include social skills, not just technical barriers. Health teachers should show how to look for evidence and spot wrong answers. Counselors should handle the risks flagged by the tools. School leaders should set rules for nighttime use that are tied to attendance and well-being. Companion chatbots need extra thought. Studies show that teens can become emotionally attached to artificial friends, which can blur boundaries and lead to advice that encourages controlling behavior. Schools can ban romantic companions on devices they control while still allowing educational tools, and they can explain why: consent, empathy, and real-world skills are learned with other people, not alone. It’s not about scaring kids; it’s about guiding them toward learning and keeping them away from the parts of adolescence that are easiest to exploit.

Thinking About the Criticisms—and How to Answer Them

Criticism one: Checking ages might invade privacy and misclassify users. That’s true, so the best designs should start with age prediction on the device, use rules that can be adjusted, and only check IDs when there is a serious risk and the user has given permission. There are clear guides on matching methods to risk: reduce risks using the least intrusive methods that still get the job done, keep track of mistakes, and write down the trade-offs. Schools can further reduce risk by keeping the checks out of a student’s school record and by not saving raw images when estimating age from faces. Transparency is key: share the error rates, tell teens and parents how they can contest a decision, and allow people to report misuse confidentially. If we do these things, teen chatbot safety can move forward without turning every laptop into a spy tool.

Criticism two: Strict safety measures might make inequality worse, since students with their own devices might get around the school rules and end up doing better. The answer is to make safe access the easiest path everywhere. Link school accounts to home devices using settings that move with them, apply the same modes and quiet hours across the board, and don’t train the AI on student data so families can trust the tools. When the simplest thing is also the safest, fewer students will try to get around the system.

And the idea that safety measures stop learning ignores what’s happening now: teens are already using chatbots for quick answers. A better design can raise the bar by asking for sources, showing different points of view, and moderating the tone on sensitive subjects involving kids. Everyone does better when every student has access under the same settings, rather than when some people can buy their way around the safety measures.
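The “least intrusive first” logic can be sketched in a few lines. This is only an illustration under stated assumptions: the `Step` names, the `choose_step` function, the 0.9 confidence threshold, and the choice to fall back to teen settings when the estimate is uncertain are all hypothetical, not a description of any vendor’s system.

```python
from enum import Enum


class Step(Enum):
    USE_ESTIMATE = "use_on_device_estimate"
    REQUEST_ID = "request_id_check"
    DEFAULT_TO_TEEN = "apply_teen_settings_by_default"


def choose_step(confidence: float, high_risk: bool, has_consent: bool,
                threshold: float = 0.9) -> Step:
    """Pick the least intrusive age-assurance step that still fits the risk.

    `confidence` is how sure the on-device age estimate is (0.0 to 1.0).
    The threshold is an assumed value that a real deployment would have to
    calibrate against measured error rates.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0.0 and 1.0")
    # Step 1: a confident on-device estimate is enough; nothing leaves the device.
    if confidence >= threshold:
        return Step.USE_ESTIMATE
    # Step 2: escalate to an ID check only for serious risk and with permission.
    if high_risk and has_consent:
        return Step.REQUEST_ID
    # Otherwise stay protective without collecting anything more (assumed fallback).
    return Step.DEFAULT_TO_TEEN
```

For example, `choose_step(0.5, high_risk=True, has_consent=False)` returns `Step.DEFAULT_TO_TEEN`: without permission, the sketch never asks for an ID, it just keeps the stricter settings.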

What the People in Charge Should Do Next

To policymakers, the message is: do it now. Make sure any chatbot teens use meets three rules. First, make age checks real: start with the least intrusive method possible, escalate only as needed, and require independent audits to find biases and mistakes. Second, make sure there’s a teen mode that blocks certain features, uses a calmer tone in emotional moments, strictly blocks sexual content and companions, and makes it easy to get help from a real person in an emergency. Third, demand tools that are ready for schools: admin controls, limited access based on the task, minimal data, and a public safety card that outlines the protections for teens.

These protections should still let older teens access more advanced content with permission, while guaranteeing that the tools fit what teens need. Rules should reference established risk management guides so agencies know how to monitor compliance. Acting now protects teens in their online lives.

Platforms should share their youth safety numbers regularly. How often do the teen-mode models block things they shouldn’t? How often do crises come up, and how fast do real people step in? What’s the rate of getting ages wrong? What are the top three areas where teens are getting wrong information, and what fixes have been made? Without these numbers, safety is just a way to sell something. If we share these numbers, safety becomes real. Independent researchers and youth safety groups should be part of the process.
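Those questions translate into a small set of numbers. As a rough sketch, assuming a platform already counts the underlying events, a periodic report could be computed like this. The function name `safety_report`, its parameters, and the output keys are hypothetical; the hard part in practice is collecting trustworthy counts, not the arithmetic.

```python
from statistics import median


def safety_report(blocked_total, blocked_wrongly, age_checks, age_errors,
                  crisis_response_seconds):
    """Summarize youth-safety counts for one reporting period.

    All inputs are assumed to come from the platform's own logs and reviews:
    - blocked_total / blocked_wrongly: teen-mode blocks, and those judged wrong
    - age_checks / age_errors: age decisions, and those later found incorrect
    - crisis_response_seconds: time until a human responded, one value per crisis
    """
    def rate(numerator, denominator):
        # Return None rather than a misleading 0.0 when there is no data.
        return numerator / denominator if denominator else None

    return {
        "overblock_rate": rate(blocked_wrongly, blocked_total),
        "age_error_rate": rate(age_errors, age_checks),
        "crisis_count": len(crisis_response_seconds),
        "median_crisis_response_s": (
            median(crisis_response_seconds) if crisis_response_seconds else None
        ),
    }
```

Publishing the same few fields every period is what lets outside researchers compare platforms and spot trends.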

Current work from cyberbullying researchers documents the new risks of companion interactions and lays out a plan for schools to study them. Policymakers can provide funding to connect school districts, researchers, and companies to test teen modes in the real world and compare outcomes across different safety plans. The standard should be something everyone can see, not something a single company owns: teen chatbot safety gets better when problems are out in the open.

The truth is, chatbots are now part of the daily lives of millions of teens. That’s not going to change. The only choice we have is whether we create systems that admit teens are using these things and do a good job of dealing with that. We already have everything we need: age checks that are better than just clicking a box, parental controls that actually link accounts, school systems that block risky stuff while pushing good habits, and audits to make sure the guardrails are doing their job. This isn’t about attacking technology. It’s just about being responsible and making sure things are safe in a world where advice can come instantly and sound super sure. Set the bar. Measure what matters. Share what you find. If we do all this, teen chatbot safety can move from being just a catchy phrase to being something real. And the odds of seeing news stories about problems we could have stopped will go way down.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Common Sense Media. (2024, June 3). Teen and Young Adult Perspectives on Generative AI: Patterns of Use, Excitements, and Concerns.

Common Sense Media. (2024, September 18). New report shows students are embracing artificial intelligence despite lack of parent awareness and…

Common Sense Media. (2024). The Dawn of the AI Era: Teens, Parents, and the Adoption of Generative AI at Home and School.

Cyberbullying Research Center. (2024, March 13). Teens and AI: Virtual Girlfriend and Virtual Boyfriend Bots.

Cyberbullying Research Center. (2025, January 14). How Platforms Should Build AI Chatbots to Prioritize Youth Safety.

NIST. (2024). AI Risk Management Framework and Generative AI Profile. U.S. Department of Commerce.

OpenAI. (2024, Dec. 11). Terms of Use (RoW). “Minimum age: 13; under 18 requires parental permission.”

OpenAI. (2024, Oct. 23). Terms of Use (EU). “Minimum age: 13; under 18 requires parental permission.”

OpenAI. (2025, Sep. 29). Introducing parental controls.

OpenAI Help. Is ChatGPT safe for all ages? “Not for under-13; 13–17 need parental consent.”

Pew Research Center. (2025, Dec. 9). Teens, Social Media and AI Chatbots 2025.

Scientific American. (2025). Teen AI Chatbot Usage Sparks Mental Health and Regulation Concerns.

The Guardian. (2025, Sep. 11). ChatGPT may start alerting authorities about youngsters considering suicide, says Altman.

The Guardian. (2025, Sep. 17). ChatGPT developing age-verification system to identify under-18 users after teen death.

TechRadar. (2025, Sep. 2 & Sep. 29). ChatGPT parental controls—what they do and how to set them up.
