California’s AI Safety Bet — and the China Test

SB 53 is AI safety policy that also shapes U.S. competitiveness. If it slows California labs, China’s open-weight ecosystem gains ground. Make it work by pairing clear safety templates with fast evaluation and public compute.

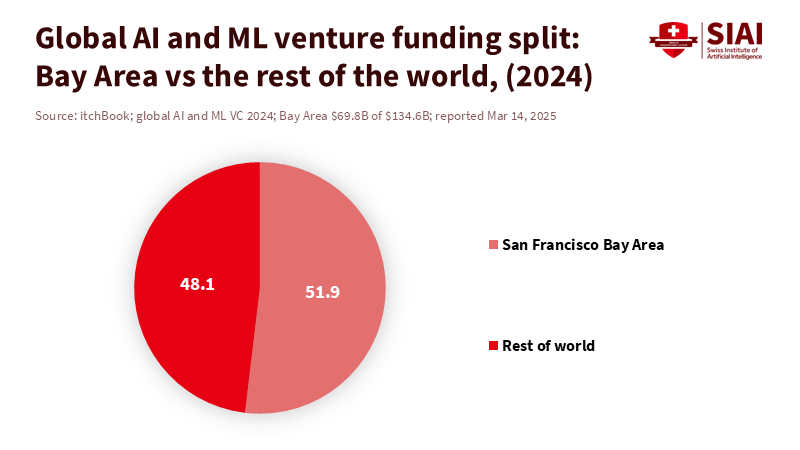

In 2024, over 50% of global venture capital for AI and machine-learning startups went to Bay Area companies. This figure shows a dependency: a small area now shapes tools for tutoring bots, writing helpers, learning analytics, and campus support. When rules in California change, the effects quickly spread through supply chains. These changes impact labs that train advanced models, startups that build on them, public schools that depend on vendor plans, and universities that design curricula around rapidly changing tools. The California AI safety law is both a safety measure and a competition decision, made in a place that still sets the global standard.

Senate Bill 53, the California AI safety law, signed on September 29, 2025, aims to reduce catastrophic risks posed by advanced foundation models through transparency, reporting, and protections for workers who raise concerns. The goal is serious, but the situation has changed. The U.S. now competes on speed, cost, and spread, as well as model quality. China is pushing open-source models that can be downloaded, fine-tuned, and used by many people at once. If California creates a complex process that mainly affects U.S. labs, the U.S. may pay for compliance while competitors profit. For education, this risk is real. Slower model cycles can mean slower safety improvements, fewer features, and higher prices for school tools.

What SB 53 does, in plain terms

SB 53, the Transparency in Frontier Artificial Intelligence Act, focuses on “large frontier developers.” A “frontier model” is defined by training compute: more than 10^26 floating-point operations. A “large” developer must also have had more than $500 million in revenue in the prior year. These criteria target a small group of well-resourced firms, not the typical edtech vendor. The compute threshold is forward-looking, which matters because policy shapes investment plans early. The California AI safety law also blocks local AI rules that conflict with the state framework, which centralizes expectations. Finally, SB 53 authorizes CalCompute, a public computing resource meant to broaden access and support safe innovation, although its development depends on later state action.
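The two-part test described above can be sketched in a few lines of Python. This is an illustration only, assuming the thresholds as summarized in this article; the statute's exact definitions are more detailed, and the `Developer` type, field names, and example firms are hypothetical.

```python
from dataclasses import dataclass

# Thresholds as summarized in this article (assumption: the statute's
# exact wording and edge cases are not modeled here).
FRONTIER_FLOP_THRESHOLD = 1e26             # training compute, in FLOPs
LARGE_REVENUE_THRESHOLD_USD = 500_000_000  # prior-year revenue, in USD


@dataclass(frozen=True)
class Developer:
    """Hypothetical record for a model developer."""
    name: str
    max_training_flops: float      # largest training run, in FLOPs
    prior_year_revenue_usd: float  # revenue in the prior year, in USD


def trains_frontier_model(dev: Developer) -> bool:
    # "Over 10^26" is read as a strict inequality.
    return dev.max_training_flops > FRONTIER_FLOP_THRESHOLD


def is_large_frontier_developer(dev: Developer) -> bool:
    # Both conditions must hold: frontier-scale compute AND large revenue.
    return (
        trains_frontier_model(dev)
        and dev.prior_year_revenue_usd > LARGE_REVENUE_THRESHOLD_USD
    )


# Hypothetical examples: a well-funded lab and a small edtech vendor.
lab = Developer("HypotheticalLab", 3e26, 2_000_000_000)
edtech = Developer("HypotheticalTutorCo", 0.0, 12_000_000)

print(is_large_frontier_developer(lab))     # True
print(is_large_frontier_developer(edtech))  # False
```

The edtech vendor fails both conditions, which is the point of the paragraph above: most education startups fall outside the law's direct scope and feel it only through their model providers.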

The law's main requirement is a published “frontier AI framework.” Firms must explain how they identify, test, and reduce catastrophic risk during development and use, and how they apply standards and best practices. The law also establishes confidential reporting to the California Office of Emergency Services, along with a channel for employees and the public to report “critical safety incidents.” It defines catastrophic risk by thresholds, such as incidents that could cause more than 50 deaths or more than $1 billion in damage, not by everyday errors. The California Attorney General handles enforcement, with penalties of up to $1 million per violation. Reporting is confidential, to encourage disclosure without creating a guide to misuse. This is good for safety, but it raises a tradeoff that education buyers will notice: they cannot inspect the reports themselves.
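As a rough illustration of how these thresholds separate catastrophic risk from everyday errors, the sketch below encodes the figures given above. It is a simplification under stated assumptions: the function names are hypothetical, the thresholds are read as strict inequalities, and the penalty figure is treated as a ceiling per violation, not a predicted fine.

```python
# Figures as summarized in this article (assumption: simplified reading).
DEATHS_THRESHOLD = 50
DAMAGE_THRESHOLD_USD = 1_000_000_000
MAX_PENALTY_PER_VIOLATION_USD = 1_000_000


def meets_catastrophic_threshold(potential_deaths: int,
                                 potential_damage_usd: float) -> bool:
    """Return True if either threshold described in the article is exceeded."""
    return (
        potential_deaths > DEATHS_THRESHOLD
        or potential_damage_usd > DAMAGE_THRESHOLD_USD
    )


def max_penalty_exposure_usd(violations: int) -> int:
    """Upper bound on penalties: up to $1 million for each violation."""
    if violations < 0:
        raise ValueError("violations must be non-negative")
    return violations * MAX_PENALTY_PER_VIOLATION_USD


# A grading bug that miscredits essays is harmful but far below the bar.
print(meets_catastrophic_threshold(0, 50_000))         # False
# A hypothetical incident with $2 billion in potential damage is above it.
print(meets_catastrophic_threshold(0, 2_000_000_000))  # True
print(max_penalty_exposure_usd(3))                     # 3000000
```

The first example shows why the daily failures schools care about sit outside the law's definition, even though the same vendors and governance processes are involved.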

How the California AI safety law can tilt competitiveness

The first competitive issue is indirect. Most education startups will not train a 10^26-FLOP model. They rent model access and build tools on top of it: tutoring, lesson planning, language support, grading help, and student services. If providers add review steps or slow releases to lower legal risk, downstream products are delayed. The Stanford AI Index says that U.S. job postings requiring generative-AI skills rose from 15,741 in 2023 to 66,635 in 2024, more than a fourfold increase. Large user bases also adopt defaults quickly; Reuters says that OpenAI reached 400 million weekly active users in early 2025. Even minor slowdowns in release cycles can change which tools become standard in classrooms.

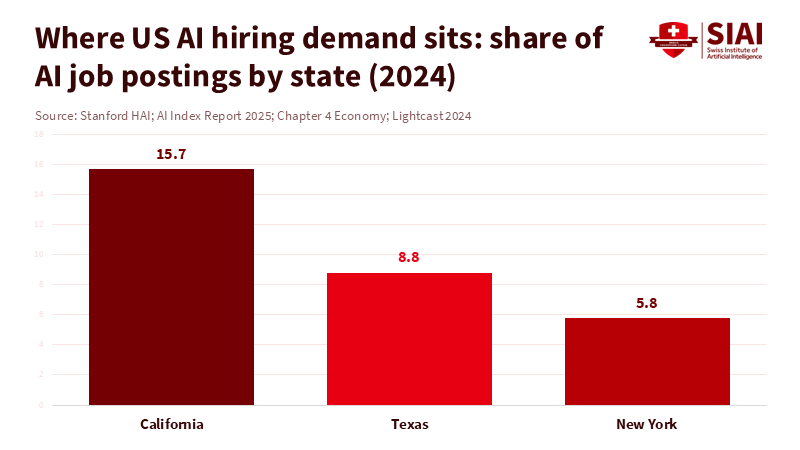

The second issue is geographic. California has the highest demand for AI talent, accounting for 15.7% of U.S. AI job postings in 2024, ahead of Texas at 8.8% and New York at 5.8%. This does not prove that firms will leave. SB 53 targets large developers, and many startups will not be directly affected. However, ecosystems shift. The question is where the next training team is based, where the next compliance staff is hired, and where the next round of capital is invested. If the California AI safety law introduces legal uncertainty into model development while other states are more relaxed, the path of least resistance can shift. Over time, “relocation” can look less like a sudden exit and more like a steady drift.

The third issue is the market structure that schools experience. Districts and universities rarely have the staff to check model safety. They depend on vendor promises and shared standards. A good law can improve the market by making safety claims verifiable. A bad one can do the reverse, reducing competition and choice while everyday harms continue. Those harms include student data leaks, feedback biased by race or disability, incorrect citations in assignments, and risks of manipulation. Critics say SB 53 concerns catastrophic risk, not daily failures. Yet the same governance choices shape both. If major providers limit features or change terms for education buyers, districts will have fewer options just as demand rises.

China’s open-source push changes the baseline

China’s open-source strategy changes what “disadvantage” means. Stanford HAI says that Chinese open-source language models have caught up and may have surpassed others in capability and adoption. The pattern is breadth. Many people are building efficient models for flexible use rather than relying on a single platform. These models travel well, enabling local fine-tuning, private use, and quick adaptation to specific areas, such as education tools that must be private. The ecosystem is not just “one model,” but a system of reuse in which weights and tools spread quickly across firms. A state law that mainly slows a few U.S. labs can still reshape the global field.

Market signals support this, and they reach into education. Reuters reports that DeepSeek became China’s top chatbot, with 22.2 million daily active users, and expanded its open-source reach by releasing code. Reuters also says that Chinese universities launched DeepSeek-based courses in early 2025 to build AI skills. Governance is adapting to keep adoption moving. East Asia Forum says that China removed an AI law from its 2025 agenda, leaning more on pilots. In late December 2025, Reuters reported that China issued draft rules for AI services with human-like interaction, a sign of quick, targeted oversight. California uses a single compliance method, while China can adjust controls as adoption changes.

Make safety a strength, not a speed bump

The solution is not to drop the California AI safety law, but to make safety a competitive advantage. Start with transparency for buyers. Districts need disclosures about testing that can be checked. California should develop its “frontier AI framework” requirement into a standard template that aligns with established risk guidance, such as the NIST AI Risk Management Framework. It should also create a safe harbor for education pilots that follow privacy rules, so providers are not punished for sharing evidence with schools. The federal tone also matters. In January 2025, the White House issued an executive order to remove barriers to American AI leadership. If Washington signals speed and Sacramento signals difficulty, firms will exploit the split. A common template and alignment with existing norms can reduce duplication without lowering standards.

California should also treat compute access as part of safety. SB 53 authorizes CalCompute to expand access and support innovation. But much of this depends on a report due by January 1, 2027, and on funding. If the state wants to keep research local, speed is essential. A public cloud can help universities run checks and stress-test models without relying on vendors. It can also support sharing findings without exposing student data. This shared evidence bridges “catastrophic risk” and the everyday risks that harm learners.

The opening statistic is a warning, not a boast. When AI startup capital is concentrated in one region, that region’s rules become de facto policy for everyone downstream. California can lead on safety and speed, but only if the California AI safety law rewards good practice and lowers uncertainty for users. Education shows this tradeoff. Schools will adopt what works, at a price they can afford. If U.S. providers slow down and costs rise, open-source options will spread. The task is to make SB 53 a system for trusted adoption: templates, tests, incident learning, and compute access. That is how a safety law becomes a strategy, not a handicap.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

California State Legislature. (2025). Senate Bill No. 53: Artificial intelligence models: large developers (Chapter 138, Transparency in Frontier Artificial Intelligence Act). Sacramento, CA.

Hu, B., & Au, A. (2025, December 25). China resets the path to comprehensive AI governance. East Asia Forum.

Meinhardt, C., Nong, S., Webster, G., Hashimoto, T., & Manning, C. D. (2025, December 16). Beyond DeepSeek: China’s diverse open-weight AI ecosystem and its policy implications. Stanford Institute for Human-Centered Artificial Intelligence.

National Institute of Standards and Technology. (2023). Artificial Intelligence Risk Management Framework (AI RMF 1.0) (NIST AI 100-1). U.S. Department of Commerce.

Reuters. (2025a, September 29). California governor signs bill on AI safety.

Reuters. (2025b, February 21). DeepSeek to share some AI model code, doubling down on open source.

Reuters. (2025c, February 21). Chinese universities launch DeepSeek courses to capitalise on AI boom.

Reuters. (2025d, December 27). China issues draft rules to regulate AI with human-like interaction.

Reuters. (2025e, February 20). OpenAI’s weekly active users surpass 400 million.

Stanford Institute for Human-Centered Artificial Intelligence. (2025). AI Index Report 2025: Work and employment (chapter). Stanford University.

State of California, Office of Governor Gavin Newsom. (2025, September 29). Governor Newsom signs SB 53, advancing California’s world-leading artificial intelligence industry. Sacramento, CA.

White House. (2025, January 23). Removing barriers to American leadership in artificial intelligence. Washington, DC.
