LLM-Powered Tutoring and the Discreet Reordering of Teaching

- LLM-powered tutoring is already automating routine teaching at scale.
- The core challenge is redesigning education labor and governance around AI.
- Without reinvestment in human expertise, automation will widen inequality.

Picture classrooms where AI tutors help students learn. These are not just high-tech gadgets; they are changing how teaching works. An AI tutor can answer many student questions in the time it takes a teacher to grade a single paper. Since 2023, studies have reported that students using AI tutors improve on practice tasks and in analytical reasoning. With AI handling quick answers and explanations, schools can free teachers for more demanding work, such as understanding each student's needs and designing better lessons. The big question is not whether AI can teach, but how schools will deploy experienced teachers once AI takes over some of the routine work. Without careful planning, AI could make education more unequal and reduce demand for the skilled teachers who create engaging lessons. AI-powered tutoring is already changing who does the teaching.

Rethinking Teaching

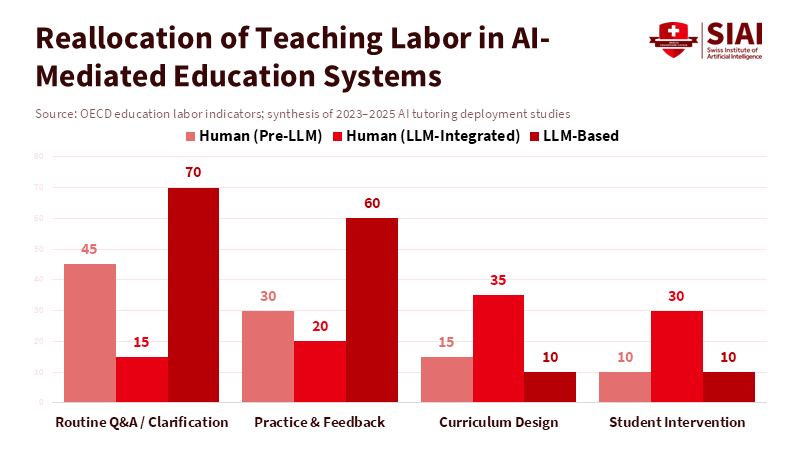

We need to rethink teaching. Instead of seeing it as a single job, we should view it as a team effort. AI tutors can handle simple, repetitive tasks, such as giving examples, answering basic questions, and providing quick feedback. Human teachers can then focus on designing the curriculum, determining each student's needs, and supporting students with social and emotional challenges. Many large educational programs already divide tasks among different roles. So, instead of asking whether AI will replace teachers, we should ask which tasks are better suited to machines and which require human involvement.

Two considerations matter most: teacher pay and the pace of AI adoption. In many developed countries, teachers earn less than other professionals with similar levels of education. A Forbes report notes that although schools adopted AI tools at an increasing rate between 2024 and 2025, many teachers feel unprepared: 76% of teachers in the UK and 69% in the US report receiving little or no formal AI training from their schools. Because adoption is outpacing preparation, the choices we make now will shape how students learn for years to come.

Changing Incentives

Thinking about teaching in this new way changes how everyone in a school system behaves. If administrators can use AI to deliver basic instruction, they will be tempted to reduce the number of human teachers. This is more likely where teachers are poorly paid and oversight is weak. We have already seen schools turn to pre-made lesson plans when money is tight; AI is simply a faster and cheaper way to do the same thing.

But if we see teaching as a team effort, we can see where human teachers add the most value: designing lessons and supporting students. Expert teachers should focus on designing the AI tutoring system, selecting the best materials, and helping students who are struggling. These tasks demand more skill and have a bigger impact than following a script, so the teachers who perform them should be paid more and given more support. If we do not reward these roles, AI will create a two-tier workforce, with a few highly skilled teachers and many who are not, widening the gap between rich and poor schools.

In places where school districts already set the curriculum centrally, adopting AI will be easier because everyone can share and review the same materials. In the classroom, teachers can then spend more time mentoring students, giving feedback, and supporting their emotional needs, which are things machines do not do well. For education authorities, this means changing how teachers are licensed and evaluated so that lesson design and mentoring are recognized. Sharing knowledge and materials also lowers the cost of maintaining high-quality education across all schools and allows smaller, highly skilled teams to manage the AI tutoring system.

What the Evidence Shows

The evidence from 2023 to 2025 shows both the benefits and the limits of AI tutoring. Studies have found that AI tutors can improve practice performance, understanding, and time spent on learning when they are paired with an appropriate curriculum. A 2025 randomized controlled trial published in Scientific Reports (Kestin et al.) found that an AI tutor outperformed in-class active learning, particularly for practice-based tasks. Reviews of intelligent tutoring systems show mixed results overall, but the results are more positive when the AI aligns closely with the curriculum, provides clear feedback, and offers structured support. This may help explain why elementary and high schools have adopted AI tutoring more quickly than colleges: their curricula are often more structured, which makes the AI's performance easier to evaluate.

There are two essential things to keep in mind. First, AI tutoring can work well if schools invest in ensuring it aligns with the curriculum. This means creating shared materials, practice questions, and regular checks to ensure the AI helps students meet learning goals. Second, the best results happen when AI tutors work with human teachers. Humans set the goals, track progress, and step in when needed. This raises a question: if this mixed approach is most effective, how should we pay and promote teachers who contribute to it? If we don't address this, the blended approach could become a justification for cost cuts. AI would take over routine work, leaving the remaining human teachers underpaid and unsupported. We need to invest in both technology and people, not treat them as substitutes for each other.

The benefits of AI tutoring vary by subject and grade level. Mathematics and other repetition-heavy tasks show greater improvement than writing or history. This does not mean AI cannot help with writing or historical thinking, but doing so requires clear guidelines, scoring rubrics, and human review to ensure the feedback is substantive rather than superficial. Schools should focus AI use where it aligns easily with the curriculum, while testing it in other areas under careful human oversight.

The Risks of AI Tutors

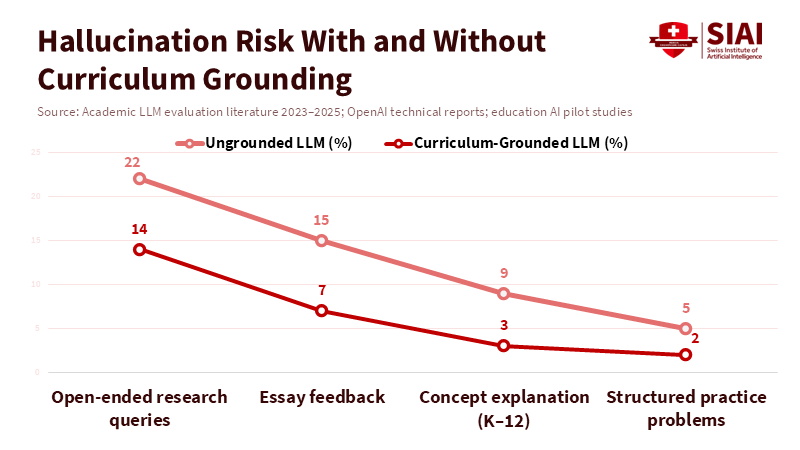

One of the most significant risks of AI tutors is that they make mistakes: they can give answers that sound correct but are wrong, a failure often called hallucination. Research from 2020 to 2025 has examined these errors, their causes, and ways to mitigate them, but no single technique eliminates the problem. The risk depends on context: AI is less likely to err on structured tasks backed by reliable sources than on open-ended research questions. Even a small number of confident errors, however, can damage trust, teach students incorrect material, and corrupt the learning data schools use for analysis. Schools need to plan for errors as a routine part of AI use.

To reduce errors, we need to use several safeguards:

- Grounding: Make sure the AI uses only approved, up-to-date sources.

- Human escalation: Route uncertain or high-stakes questions to trained staff.

- Transparent audit trails: Keep records of every answer, linking it to the curriculum and source.
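The three safeguards above can be combined into a single gate that every draft answer passes through before a student sees it. The Python sketch below is illustrative only: the `Draft` and `TutorGate` names, the 0.8 confidence threshold, and the assumption that the tutor reports which sources it used along with a confidence score are all hypothetical, not features of any particular product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Draft:
    """A tutor's draft answer (hypothetical structure, for illustration)."""
    question: str
    answer: str
    source_ids: tuple[str, ...]  # sources the tutor says it used
    confidence: float            # assumed model-reported confidence, 0..1
    curriculum_code: str         # learning objective the question maps to


@dataclass
class TutorGate:
    approved_sources: set[str]   # grounding: the vetted knowledge base
    high_stakes_codes: set[str]  # objectives that always go to staff
    min_confidence: float = 0.8  # hypothetical escalation threshold
    audit_log: list[dict] = field(default_factory=list)

    def route(self, draft: Draft) -> str:
        # Grounding: every cited source must be on the approved list,
        # and an answer that cites nothing counts as ungrounded.
        grounded = bool(draft.source_ids) and all(
            s in self.approved_sources for s in draft.source_ids
        )
        # Human escalation: ungrounded, high-stakes, or low-confidence
        # answers are sent to trained staff instead of the student.
        if not grounded:
            decision = "escalate: ungrounded"
        elif draft.curriculum_code in self.high_stakes_codes:
            decision = "escalate: high-stakes"
        elif draft.confidence < self.min_confidence:
            decision = "escalate: low confidence"
        else:
            decision = "deliver"
        # Audit trail: record every decision with its curriculum link and sources.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "question": draft.question,
            "curriculum_code": draft.curriculum_code,
            "sources": list(draft.source_ids),
            "decision": decision,
        })
        return decision


gate = TutorGate(approved_sources={"alg1-textbook-ch3"}, high_stakes_codes={"ALG1-FINAL"})
ok = Draft("Solve 2x + 3 = 7", "x = 2", ("alg1-textbook-ch3",), 0.95, "ALG1.3")
web = Draft("Who first proved this?", "Euler", ("unvetted-web-page",), 0.99, "ALG1.3")
print(gate.route(ok))       # deliver
print(gate.route(web))      # escalate: ungrounded
print(len(gate.audit_log))  # 2
```

The order of checks is a design choice: grounding comes first, so a confidently phrased answer drawn from an unapproved source is still escalated, which targets exactly the "confident error" problem described above.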

Better prompt design and retrieval strategies can reduce errors further, but they require ongoing work: maintaining prompt libraries, vetting information sources, and updating knowledge bases. In practice, this means fewer routine instructors and more people who can design curricula, write prompts, and monitor the AI's performance. A small, skilled team can keep an AI tutoring system accurate across many tasks, provided it has the authority and funding to fix problems when they arise.

For example, if an AI tutor invents a formula or makes a false historical claim, it can lead a student down a wrong path that is hard to correct. Once trust is lost in a classroom, it is hard to regain, because parents and administrators expect accountability. That is why schools need transparency about errors, clear procedures for correcting them, and a system for sending uncertain outputs to humans. These requirements should also change how schools procure AI: they should choose modular, auditable systems built on shared knowledge repositories rather than opaque systems whose answers are difficult to trace.

The Future of Teaching

Economic pressures help explain why AI adoption will accelerate. According to a memo from Commissioner Anastasios Kamoutsas, some teachers have not received pay increases because of what he described as "unnecessary and prolonged contract negotiations" by unions. Under such budget and labor pressures, automation lets districts maintain basic instruction at lower cost. But this has consequences. According to Keith Lee, AI can help teachers save time on tasks like creating rubrics or outlining lessons, but these productivity gains will benefit students and teachers only if schools change their processes so that the regained time goes into better feedback, stronger curricula, and fairer outcomes rather than being diverted elsewhere. Deliberately tying automation to reinvestment is essential if these benefits are to serve the public good.

A practical plan rests on three things. First, set standards for how AI should be used. These standards should include clear learning targets, permissible error rates, human escalation procedures, and public reporting of outcomes. Second, fund regional centers that can provide vetted knowledge bases and compliance services. This will help smaller districts access high-quality AI systems without taking on too much risk. These centers can also provide shared evaluation and benchmarks for comparing results across districts. Third, change teachers' career paths. Create and appropriately compensate roles for curriculum design, system management, and student support. There should be clear paths for teachers to move from the classroom into roles such as curriculum engineer or intervention specialist, with corresponding pay increases. Policymakers should test reinvestment clauses linked to AI pilots, so that savings are used to support people and programs.
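A "permissible error rate" is only enforceable if districts can test audited samples of tutor answers against it. One way to do that, sketched below in Python under stated assumptions, is to compute the upper end of a Wilson score confidence interval for the error proportion in a random sample of reviewed answers, and to count the standard as met only if that upper bound falls at or below the limit. The 2% limit and the sample sizes are hypothetical, and the Wilson interval is one common method among several, not something the standards above prescribe.

```python
import math


def wilson_upper_bound(errors: int, n: int, z: float = 1.96) -> float:
    """Upper end of the Wilson score interval for an error proportion.

    z = 1.96 corresponds to a two-sided 95% interval.
    """
    if n <= 0:
        raise ValueError("need at least one audited answer")
    if not 0 <= errors <= n:
        raise ValueError("errors must be between 0 and n")
    p = errors / n
    denom = 1 + z * z / n
    centre = p + z * z / (2 * n)
    margin = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return (centre + margin) / denom


def meets_standard(errors: int, n: int, permissible_rate: float) -> bool:
    """True only if the audit supports a true error rate at or below the limit."""
    return wilson_upper_bound(errors, n) <= permissible_rate


# Hypothetical audits against a hypothetical 2% permissible error rate.
print(round(wilson_upper_bound(0, 100), 3))  # 0.037
print(meets_standard(0, 100, 0.02))          # False
print(round(wilson_upper_bound(3, 500), 3))  # 0.017
print(meets_standard(3, 500, 0.02))          # True
```

Using the upper bound rather than the raw error rate means a small audit cannot certify a tool: even zero errors in 100 reviewed answers leaves an upper bound of about 3.7%, while 3 errors in 500 answers brings it to about 1.75%. Publishing the sample size alongside the rate makes this visible to the public.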

There are lessons from the past. When standardized testing and pre-packaged curricula became common, teaching became more scripted and less creative. Automation could make this worse unless we link productivity gains to reinvestment in professional roles. To prevent this, districts should report how they allocate savings from automation and monitor the impact on disadvantaged students. This kind of conditional funding, along with transparency, will be controversial, but it's necessary if automation is to expand opportunity rather than limit it.

Remember, AI tutoring is already automating a lot of routine instructional work. This isn't necessarily a bad thing. It only becomes bad if schools use it to cut investments in human expertise and social support. If we instead design a system where AI handles routine tasks, expert humans handle design, and savings are reinvested in higher-skill roles and support, we can expand access and advance learning. The choice is ours to make.

Policymakers should fund the teams that manage and oversee AI tutoring systems, set clear timelines for pilots, require open audits and public reporting of error rates, reinvest automation savings in staff development and teacher career upgrades, and support regional hubs that pool expertise. Education leaders must require both technical audits and human-centered data that capture mentorship, trust, and access. Together, these measures will determine whether AI tutoring expands opportunity or deepens existing divides.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Microsoft. (2025). AI in Education: A Microsoft Special Report.

Kestin, G., Miller, K., Klales, A., Milbourne, T., & Ponti, G. (2025). AI tutoring outperforms in-class active learning: An RCT introducing a novel research-based design in an authentic educational setting. Scientific Reports.

OECD. (2023). What do OECD data on teachers’ salaries tell us? (Education Indicators in Focus).

OECD. (2025). AI adoption in the education system. (Report).

OpenAI. (2025). Why language models hallucinate. Research blog.

Springer / academic review. (2025). The rise of hallucination in large language models: systematic review and mitigation strategies.

U.S. Department of Education, Office of Educational Technology. (2023). Artificial Intelligence and the Future of Teaching and Learning: Insights and Recommendations.