Agent AI Reliability in Education: Build Trust Before Scale

Published

Modified

Agent AI is uneven—pilot before student use Start with internal, reversible staff workflows, add human-in-the-loop and logs Follow EU AI Act/NIST; publish metrics; scale only after proof

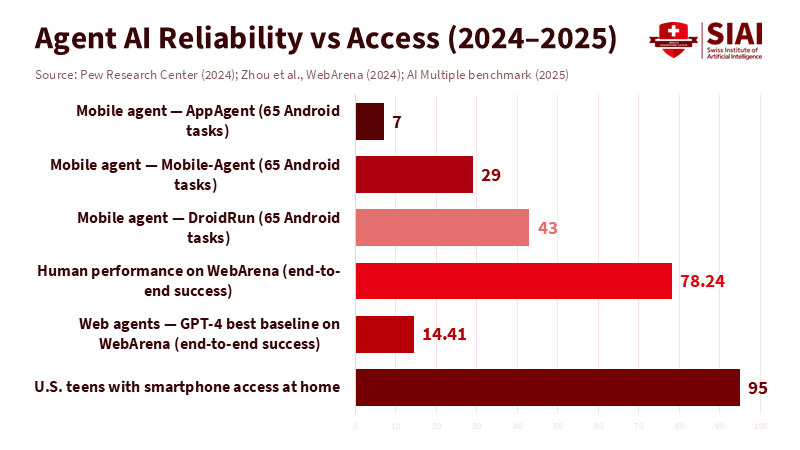

School leaders should pay attention to a clear contrast: nearly all teens have a smartphone, but agent AI still struggles with multi-step, real-world tasks. In 2024 surveys, 95% of U.S. teens reported having access to a smartphone at home. This infrastructure far outstrips any other learning technology. Meanwhile, cutting-edge web agents based on GPT-4 only completed about 14% of complex tasks in realistic website environments. Recent mobile benchmarks show some improvement, but even the most powerful phone agents completed fewer than half of 65 fundamental functions. This gap between universal access and uneven performance highlights a policy issue in education. Schools feel pressure to provide constant, on-device help, but agent AI can’t be counted on for unsupervised student use. The solution isn’t to ban or to buy these tools unthinkingly. Schools should require proof of reliability in controlled environments, publish the results, and expand use only when solid evidence exists.

Agent AI Reliability: What the Evidence Actually Says

The notable improvements in conversational models mask a stubborn reality: agent AI reliability decreases when tasks involve multiple steps, screens, and tools. For example, errors in SIS lookups or gradebook updates can lead to misrecorded student data. In WebArena, which replicates real sites for forums, e-commerce, code, and content management, the best initial GPT-4 agent achieved a success rate of only 14.41%. In contrast, humans performed above 78%. The failure points may seem mundane, but they are significant—misreading a label, selecting the wrong element, or losing context between steps. For schools, these errors matter. Tasks like gradebook updates, SIS lookups, purchase orders, and accommodation settings require long-term planning. The key measure is not eloquence; it is the reliability of agent AI.

Results on phones tell a similar story, with considerable variation. In November 2025, AI Multiple conducted a field test evaluating four mobile agents across 65 Android tasks in a standardized emulator. The top agent, “DroidRun,” completed 43% of tasks. The mid-tier agent completed 29%, while the weakest managed only 7%. They also showed notable differences in cost and response time. Method note: tasks included calendar edits, contact creation, photo management, recording, and file operations. The test used a Pixel-class emulator with a shared task list under the AndroidWorld framework. While not perfect proxies for schools, these tests reflect reality more than staged demonstrations do. The lesson is clear: reliability improves when tasks are narrow, the user interface is stable, and the required actions are limited. This is the design space that schools can control.

Consumer rollouts illustrate this caution. ByteDance’s Doubao voice agent is launching first on a single device in limited quantities, despite the widespread adoption of the underlying chatbot. Scientific American reports that the system can book tickets, open tabs, and interact at the operating system level, but it remains in beta. Reuters notes its debut on ZTE’s Nubia M153, with plans for future expansion. This approach is not a failure; it is a thoughtful way to build trust. Education should be even stricter, as errors can significantly affect students and staff.

Where Agent AI Works Today

Agent AI reliability shines in internal processes where clear guidelines are in place. This is where education should begin. Business evidence supports this idea. Harvard Business Review’s November 2025 guidance is clear: agents are not suited to unsupervised, consumer-facing work, but they can perform well in internal workflows. Schools have many such tasks. A library acquisitions agent prepares shopping lists based on usage data. A dispatcher agent suggests bus route changes after schedule updates. A facilities agent reorders supplies within a set budget. Each task is reversible, well-defined, and trackable. The agent acts as a junior assistant, not a primary advisor to a student. This distinction is why reliability can improve faster in internal settings than in more public contexts.

Device architecture also plays a significant role. Many school tasks involve sensitive information. Apple’s Private Cloud Compute showcases one effective model: perform as much as possible on the device, then offload more complex reasoning to a secure cloud with strict protections and avenues for public scrutiny. In education, this approach allows tasks such as captioning, live translation, and note organization to run locally. In contrast, more complex planning can run in a secure cloud. The goal is not to endorse one vendor; it is to minimize risk through thoughtful design. By combining limited tools, private execution when possible, and secure logs, agent AI reliability can improve without increasing vulnerability or risking student data.

For student-facing jobs, start with low-risk tasks. Reading support, language aids, captioning, and focused prompts are reasonable initial steps. These tasks provide clear off-ramps and visible indicators when the agent is uncertain. Keep grading, counseling, and financial-aid navigation under human supervision for now. The smartphone's widespread availability—95% of teens have access—is hard to overlook, but access does not equal reliability. A careful rollout that prioritizes staff workflows can still free up time for adults and promote a culture of measuring success before opening up tools for students.

Governance That Makes Reliability Measurable

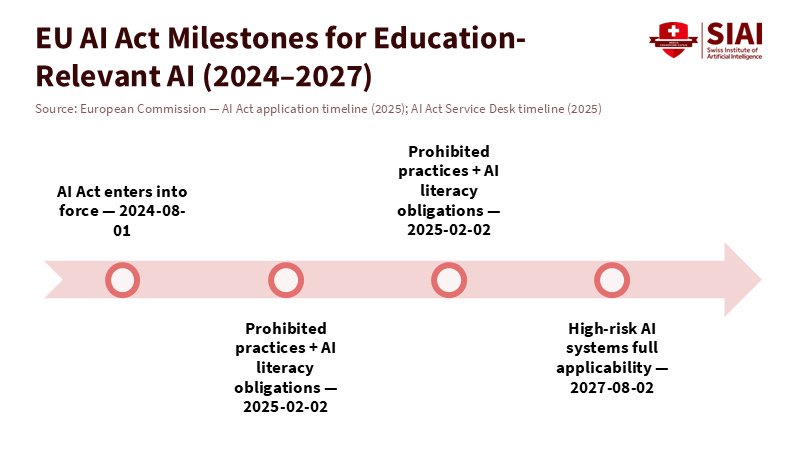

Reliability should be a monitored characteristic, not just a marketing claim. Schools need clear benchmarks, such as success rates above 80%, to evaluate AI systems effectively. The policy landscape is moving in that direction. The EU AI Act came into effect on August 1, 2024, with rules being phased in through 2025 and beyond. Systems that affect access to services or evaluations are high-risk and must demonstrate risk management and monitoring. Additionally, NIST’s AI Risk Management Framework (2023) and its 2024 Generative AI Profile offer a practical four-step process—govern, map, measure, manage—that schools can apply to agents. These frameworks do not impede progress; they require transparent processes and evidence. This aligns perfectly with the reliability standards we need.

Guidance from the education sector supports this approach. UNESCO’s 2025 guidance on generative AI in education emphasizes targeted deployment, teacher involvement, and transparency. The OECD’s 2025 policy survey shows countries establishing procurement guidelines, training, and evaluation for digital tools. Education leaders can adopt two practical strategies from these recommendations. First, include reliability metrics in requests for proposals and pilot programs: target task success rates, average handling times, human hand-off rates, and error rates per 1,000 actions. Second, publish quarterly reports so staff and families can track whether agents meet the standards. Reliability improves when everyone knows what “good” means and where issues arise.

We also need to safeguard limited attention. Even “good” agents can overwhelm students with notifications. Set limits on alerts for student devices and establish default time windows. If financial policies permit, direct higher-risk agent functions to staff computers on managed networks rather than student smartphones. These decisions are not anti-innovation. They help make agent AI reliability a system-wide property rather than just a model characteristic. The benefits are considerable: fewer mistakes, better monitoring, and increased public trust over time.

A Roadmap to Prove Agent AI Reliability Before Scale

Start with a three-month pilot focused on internal workflows where mistakes are less costly and easier to correct. Good candidates are library purchases, routine HR ticket sorting, and cafeteria stock management. Connect the agent only to the necessary APIs. Equip it with a planner, executor, and short-term memory with strict rules for data retention. Each action should create a human-readable record. From the beginning, measure four key metrics: end-to-end task success rate, average handling time, human hand-off rate, and incident rate per 1,000 actions. At the midpoint and end of the pilot, review the logs. If the agent outperforms the human baseline with equal or fewer issues, broaden its use; if not, retrain or discontinue it. This approach reflects the inside-first logic that business analysts now recommend for agent projects. It respects the strengths and limitations of the technology.

Next, prepare for the reality of smartphones without overcommitting. Devices are already in students’ hands, and integrations like Doubao show how quickly embedded agents can operate when vendors manage the entire stack. However, staged rollouts—including in consumer markets—exist for a reason: to protect users while reliability continues to improve. Schools should adopt the same cautious approach. Keep student-facing agents in “assist only” mode, with clear pathways to human intervention. Route sensitive actions—those that affect grades, attendance, or funding—to staff desktops or managed laptops first. As reliability data grows, gradually expand the toolset for students.

Finally, ensure transparency in every vendor contract. Vendors should provide benchmark performance data on publicly available platforms (e.g., WebArena for web tasks and AndroidWorld-based tests for mobile), the model types used, and any security measures intended to protect legitimate operations. Request independent red-team evaluations and a plan to quickly address performance regressions. Connect payment milestones to observed reliability in your specific environment, not to promises made in demonstrations. In 12 months, the reliability landscape will look different; the right contracts will allow schools to upgrade without starting over. The goal is straightforward: by the time agents interact with students, we need to know they can perform reliably.

We began by highlighting a stark contrast: nearly every teen has a smartphone, yet agent AI reliability in multi-step tasks still falls short. Web agents struggle with long-term tasks; mobile agents perform better but still exhibit inconsistencies; and consumer launches proceed in stages. This reality should not stifle innovation in schools; instead, it should guide it. Focus on where the tools are practical: internal workflows that are reversible and come with clear guidelines. Measure what’s essential: task success, time spent, hand-offs, and incidents. Use existing frameworks. Opt for device patterns that prioritize privacy and focus. Publish the results. Only when data prove that agents perform reliably should we allow students to use them. The future of AI in education isn’t about having a new assistant in every pocket. It’s about having reliable tools that give adults more time and provide students with efficient services. Because we have demonstrated, transparently, that agent AI reliability meets the necessary standards.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Apple Security Engineering and Architecture. (2024, June 10). Private Cloud Compute: A new frontier for AI privacy in the cloud.

Apple Security Engineering and Architecture. (2024, October 24). Security research on Private Cloud Compute.

European Commission. (2024, August 1). AI Act enters into force.

Harvard Business Review. (2025, November 25). AI Agents Aren’t Ready for Consumer-Facing Work—But They Can Excel at Internal Processes.

OECD. (2025, Mar). Policies for the digital transformation of school education: Results of the Policy Survey on School Education in the Digital Age.

Pew Research Center. (2025, Jul 10). Teens and Internet, Device Access Fact Sheet.

Reuters. (2025, December 1). ByteDance rolls out AI voice assistant for Chinese smartphones.

Scientific American. (2025, December 1). ByteDance launches Doubao real-time AI voice assistant for phones.

UNESCO. (2025, April 14). Guidance for generative AI in education and research.

Zhou, S., Xu, F. F., Zhu, H., et al. (2023). WebArena: A Realistic Web Environment for Building Autonomous Agents. arXiv.

AI Multiple (Dilmegani, C.). (2025, November 6). We tested mobile AI agents across 65 real-world tasks.

Comment