AI Industrial Policy Is National Security

Treat compute and clean power as strategic infrastructure for national security. Crowd in private capital with compute purchase agreements, capacity credits, and loan guarantees. Tie support to open access, safety standards, and allied coordination as China accelerates.

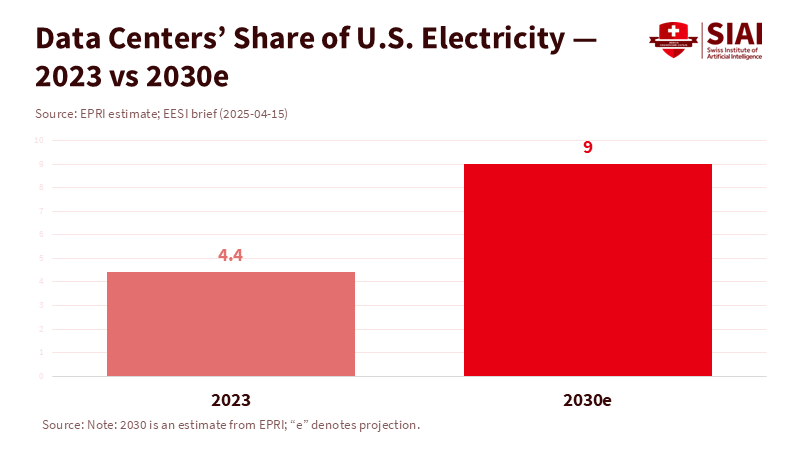

The number to pay attention to is nine. By 2030, U.S. data centers might use up to 9% of all U.S. electricity, mainly due to AI workloads. This estimate from the Electric Power Research Institute reshapes the current discussion. It’s not just about private companies securing enough funding for chips and clusters anymore. This challenge affects energy, manufacturing, and national defense. An AI industrial policy that overlooks power supply, production capacity, and fair access will not succeed. Meanwhile, China’s AI stack is advancing rapidly, from local accelerators to chatbots reaching a global audience. Although markets are thriving now, skeptical headlines could deter investment tomorrow. If we view computing as a private luxury, we invite instability. If we see it as strategic infrastructure, we can build capacity, maintain predictable prices, and set standards that benefit the public and the technological advantage of free nations.

AI industrial policy as a national security initiative

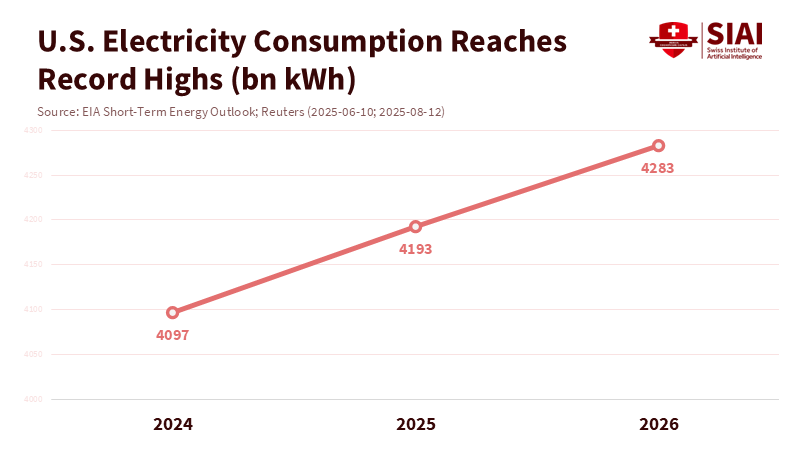

The United States must view advanced computing and its power supply as strategic resources essential for national security. The analogy is not glamorous, but it is relevant: in the 19th century, railroads determined great-power contests; in the 21st century, computing and energy will do the same. Recent policies hint at this change. Washington imposed stricter export controls on high-end AI chips and tools in 2024 and 2025 to limit military transfers and safeguard its lead in training-class semiconductors. At the same time, federal energy forecasts predict record-high electricity demand in 2025 and 2026, with AI-heavy data centers as a significant driver. These signs indicate national security is at stake. The competition is not only about model performance; it’s about supply chains, price stability, and access during crises. In this environment, AI industrial policy becomes the backbone that coordinates interests across computing, energy, and financial markets.

The next step is to ensure that security and openness work together through large-scale planning. Public funding should come with public responsibilities: fair access to critical computing resources, reasonable prices, and resilience. This idea is already reflected in the National AI Research Resource pilot, which provides researchers with computing power and data that they would not otherwise have access to. It is also reflected in the industrial grants meant to restore advanced chip production capacity. However, the current approach lacks the necessary scale and scope. A few grants and a research pilot will not be sufficient as workloads and energy requirements increase. AI industrial policy needs to function at the level of a comprehensive industrial expansion. This requires planning for gigawatts of stable, clean power, petaflops to exaflops of easily accessible computing, and financing that can withstand market fluctuations.

China’s rapid progress makes delay a costly option. Huawei is shipping new Ascend accelerators that domestic buyers consider credible alternatives. At the same time, DeepSeek has demonstrated how an affordable, rapidly evolving model can disrupt markets and perceptions. Western officials have raised alarms about national security threats posed by model use and the risk of chips slipping through control measures. The goal is not to incite panic but to strive for parity. Without a careful, security-focused AI industrial policy, the U.S. risks losing ground as competitors quickly integrate models across government and industry.

AI industrial policy must fund power and computing together

Policy cannot separate computing from the power that supports it. The International Energy Agency projects that global electricity demand from data centers could more than double, reaching around 945 TWh by 2030, with AI being the key factor. In the U.S., federal analysis indicates that total power consumption will hit new highs in 2025 and 2026, with data centers as a major contributor. If computing power expands but energy supply does not, prices will rise, installations will slow, and projects will become concentrated in a few locations with weak transmission capabilities. This presents a national risk. A serious AI industrial policy must connect three key elements: grid capacity, long-term procurement of clean power, and the placement of computing resources close to reliable transmission lines.

A financing model is already visible. The Loan Programs Office has used federal credit to restart large-scale, emission-free energy generation, such as the Palisades nuclear plant, through an infrastructure reinvestment program. That same approach can support grid-improving investments, nuclear restarts, upgrades, and long-gestation clean energy projects that can help AI facilities. By combining this with standardized, government-backed power purchase agreements for “AI-capable” megawatts, developers can secure lower-cost financing. On the computing side, we should increase open-access resources through a Strategic Compute Reserve that buys training-class time the same way the government purchases satellite bandwidth. This reserved capacity would be allocated to defense-critical, safety-critical, and open-research needs through clear, competitive rules. The U.S. does not need to own the clusters. Still, it requires reliable, reasonably priced access to them, especially in emergencies.

The numbers support this approach. McKinsey estimates that AI-ready data centers will require around $5.2 trillion in global investment by 2030, with over 40% of that likely to occur in the U.S. The government cannot and should not cover that entire amount. However, it can encourage private investment and address coordination challenges that markets struggle with, like long transmission timelines, uneven connection queues, and volatile power prices. Some federal guarantees, standardized contracts, and risk-sharing strategies will lower borrowing costs, stabilize construction cycles, and keep capacity operational when credit tightens.
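The scale implied by those two figures can be made explicit with a short calculation. This is a minimal sketch that uses only the numbers quoted above; because the text says "over 40%", the result is a lower bound rather than a point estimate, and the function and variable names are illustrative.

```python
# Figures quoted in the text (McKinsey estimate).
GLOBAL_AI_DATACENTER_CAPEX_USD_TN = 5.2  # trillions of dollars by 2030
US_SHARE_LOWER_BOUND = 0.40              # "over 40%", so treat as a floor


def us_investment_floor(global_capex_tn: float, us_share: float) -> float:
    """Return the implied U.S. investment, in trillions of dollars."""
    return global_capex_tn * us_share


us_floor_tn = us_investment_floor(
    GLOBAL_AI_DATACENTER_CAPEX_USD_TN, US_SHARE_LOWER_BOUND
)
print(f"Implied U.S. investment by 2030: more than ${us_floor_tn:.2f} trillion")
```

On these inputs the U.S. portion alone exceeds $2 trillion, which is why the argument centers on crowding in private capital rather than on direct public spending.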

AI industrial policy that encourages private investment—even amidst bubble fears

Today, the markets are enthusiastic. Hyperscalers plan to invest tens of billions annually, and chip manufacturers are facing multi-year backlogs. Yet, the forward trend is not guaranteed. Prominent warnings about an “AI bubble” highlight a simple reality: while the long-term narrative may hold, short-term funding cycles can create issues. A few setbacks or economic shocks could slow equity issuance and prompt lenders to withdraw. AI industrial policy must account for this potential. The solution isn’t to issue blank checks; it is to create smart, conditional, counter-cyclical safeguards that prevent key projects from stalling at critical moments.

Three strategies could help. First, compute purchase agreements: long-term federal contracts for training hours and inference capacity with qualified suppliers that meet strict security, privacy, and openness standards. These agreements would secure revenue, reduce financing costs, and prevent favoritism by focusing on delivered capacity and compliance instead of company identities. Second, capacity credits for reliable, clean energy serving certified data centers could be modeled after clean-energy tax credits but linked to reliability and location value. Third, loss-sharing loan guarantees for infrastructure near the grid—such as substations, transmission lines, and high-voltage connections—that private companies under-invest in due to the broad benefits they provide to many users.
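To see why lower financing costs matter for long-lived infrastructure, consider a rough sketch of the annual debt service on a level-payment loan, computed with the standard capital recovery factor. Every input below (project cost, loan term, and both interest rates) is a hypothetical value chosen for illustration and does not come from the article or from any actual program.

```python
def capital_recovery_factor(rate: float, years: int) -> float:
    """Annual payment per dollar borrowed on a level-payment loan."""
    if rate == 0:
        return 1.0 / years
    growth = (1.0 + rate) ** years
    return rate * growth / (growth - 1.0)


# Hypothetical inputs, chosen only for illustration (not from the article).
PROJECT_COST_USD = 1_000_000_000  # a $1 billion grid or compute project
TERM_YEARS = 20
RATE_WITHOUT_SUPPORT = 0.08       # assumed borrowing rate with no guarantee
RATE_WITH_SUPPORT = 0.06          # assumed rate with a guarantee or contract

annual_without = PROJECT_COST_USD * capital_recovery_factor(
    RATE_WITHOUT_SUPPORT, TERM_YEARS
)
annual_with = PROJECT_COST_USD * capital_recovery_factor(
    RATE_WITH_SUPPORT, TERM_YEARS
)
annual_saving = annual_without - annual_with

print(f"Annual debt service without support: ${annual_without:,.0f}")
print(f"Annual debt service with support:    ${annual_with:,.0f}")
print(f"Annual saving:                       ${annual_saving:,.0f}")
```

The point of the sketch is directional: under these assumed rates, a reduction in the borrowing rate lowers the yearly payment for the full life of the loan, which is the mechanism that purchase agreements and guarantees are meant to exploit.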

We can see how public financing can stimulate private investment without complete nationalization. When Washington announced a mix of grants and loans for Intel under the CHIPS and Science Act in 2024, the package as announced involved no equity stake. It reduced the risks associated with U.S. manufacturing. In its later earnings reporting, the company pointed to expedited U.S. funding alongside private contributions from global partners. That’s the model: public investment for public benefit, executed by the private sector under enforceable terms. If equity markets fluctuate, the policy cushion keeps progress steady. If they rise, federal exposure remains minimal. In every scenario, AI industrial policy maintains strategic momentum.

Concerns about market excesses must be weighed against real demand. The IEA forecasts that AI-optimized data center energy consumption could quadruple by 2030. U.S. electricity usage is already nearing record highs. Microsoft alone anticipates around $80 billion in AI-driven data center investments for fiscal 2025, with other companies indicating similar trends. Bubble discussions will come and go, but the underlying forces—shifting workflows towards AI assistants, code generation, scientific simulations, and AI-intensive services—are persistent. Policy should lean into this reality by facilitating financing and linking support to openness and security.

AI industrial policy for open access, collaborative deterrence, and standards

Security goes beyond just keeping adversaries away from our technology. It also involves ensuring that crucial computing resources are accessible to innovators, universities, and small businesses that drive the ecosystem. The NAIRR pilot has validated the concept at a research level; now we should expand this idea into a long-term, well-supported facility. An enhanced NAIRR could serve as the public access point to the Strategic Compute Reserve, with strict eligibility criteria, audit processes, and privacy guidelines. Access would come with responsibilities: publishing safety findings, supporting red-team evaluations, and contributing to guidelines for responsible use.

International policy should follow that approach. The U.S. has tightened export restrictions on training-class accelerators and tools, while also rallying allies to close loopholes. Simultaneously, China’s rapid development highlights how quickly cost-effective models can proliferate and how difficult it is to regulate their downstream applications. Concerns have been raised about censorship, data management, and possible military associations. The best response isn’t isolation; it’s forming a trusted-compute network among allies that establishes shared procurement standards, security benchmarks, and auditing requirements for technology and models. If standards are met, access to allied markets, interconnections, and public contracts is granted. If not, it is denied. This strategy turns openness into an asset and positions standards as a form of deterrence.

Finally, openness must encompass markets, not just research labs. Public funding should ensure fair access to essential infrastructure. Contracts for publicly funded technology and its energy should contain favorable access conditions, set aside capacity for research and startups, and include clear rules against self-preferencing. History shows us that when public funds create private infrastructure, the public must receive more than just ceremonial gestures. It must have access that fosters competition.

Return to the number nine. If data centers are expected to account for up to 9% of U.S. electricity by 2030, computing is already national infrastructure, not a niche sector. We cannot support this infrastructure based on short-term trends or leave it at the mercy of energy constraints and inconsistent standards. A clear AI industrial policy offers a balanced solution. It supports robust, clean power and accessible computing together. It encourages private investment through purchase agreements, credits, and guarantees that might be modest in budget size but significant in impact. It links support to openness, security, and cooperation among allies. Finally, it prepares for funding shifts without interrupting strategic growth. China’s swift advances will not wait. Markets will not always be favorable. The grid will not self-correct. The United States needs to act now, before shortages define the conditions, to ensure computing is reliable, affordable, and accessible. Because in this competition, capacity shapes policy, and policy ensures security.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Associated Press. (2024, May 27). Elon Musk’s xAI says it has raised $6 billion to develop artificial intelligence.

Biden White House. (2024, March 20). Fact sheet: President Biden announces up to $8.5 billion preliminary agreement with Intel under the CHIPS & Science Act.

Wheeler, T. (2025, November 18). OpenAI floats federal support for AI infrastructure: what should the public expect? Brookings Institution.

U.S. Department of Energy, Grid Deployment Office. (n.d.). Clean energy resources to meet data center electricity demand. Energy.gov.

U.S. Department of Energy, Loan Programs Office. (n.d.). Holtec Palisades. Energy.gov.

Holtec International. (2024, September 30). Holtec closes $1.52B DOE loan to restart Palisades.

International Energy Agency. (2025, April 10). AI is set to drive surging electricity demand from data centres…

International Energy Agency. (2024). Energy & AI: Energy demand from AI.

Investing.com. (2025, October 23). Earnings call transcript: Intel Q3 2025 beats expectations.

Le Monde. (2025, February 24). Artificial intelligence: China’s ‘DeepSeek moment’.

McKinsey & Company. (2025, April 28). The cost of computing: A $7 trillion race to scale data centers.

McKinsey & Company. (2025, October 7). The data center dividend.

National Science Foundation. (2024). National AI Research Resource (NAIRR) pilot overview.

National Science Foundation / White House OSTP. (2024, May 6). Readout: OSTP-NSF event on opportunities at the AI research frontier.

Reuters. (2024, March 29). U.S. updates export curbs on AI chips and tools to China.

Reuters. (2025, January 13). U.S. tightens its grip on global AI chip flows.

Reuters. (2025, April 22). Huawei readies new AI chip for mass shipment as China seeks Nvidia alternatives.

Reuters. (2025, June 10). Data center demand to push U.S. power use to record highs in 2025, ’26, EIA says.

Reuters. (2025, September 9). U.S. power use is expected to reach record highs in 2025 and 2026, EIA says.

Reuters. (2025, November 18). No firm is immune if the AI bubble bursts, Google CEO tells BBC.

TIME. (2024, January 26). The U.S. just took a crucial step toward democratizing AI access (NAIRR pilot).

U.S. Department of Energy. (2025, September 16). DOE approves sixth loan disbursement to restart Palisades nuclear plant [Press release].

Utility Dive. (2024, March 27). DOE makes $1.5B conditional loan commitment to help Holtec restart Palisades.

Yahoo Finance. (2025, October 24). Intel’s Q3 earnings surge following U.S. government investment.
