AI Capital and the Future of Work in Education

AI capital cheapens routine thinking and shifts work toward physical, contact-rich tasks. Gains are strong on simple tasks but stall without investment in real-world capacity. Schools should buy AI smartly, redesign assessments, and fund high-touch learning.

Nearly 40% of jobs worldwide are exposed to artificial intelligence. This estimate from the International Monetary Fund highlights a simple fact: the cost of intelligence has fallen so far that software can now handle a growing share of routine thinking. We can think of this software as AI capital, an input that works alongside machines and people. Intelligence tasks are the first to be automated, while human work shifts toward tasks that require physical presence. The cost of advanced AI models has dropped sharply since 2023, and each year hardware delivers more computing power per euro spent. This trend lowers the effective cost of AI capital, while classroom, lab, and building expenses remain relatively stable. In this environment, shifts in wages and hiring occur not because work is vanishing, but because the mix of production is changing. If educational institutions continue teaching as if intelligence were scarce and physical resources were flexible, graduates will be prepared for a labor market that no longer exists. Equitable access to AI and deliberate resource reallocation are crucial to keep this shift from widening disparities.

Reframing AI Capital in the Production Function

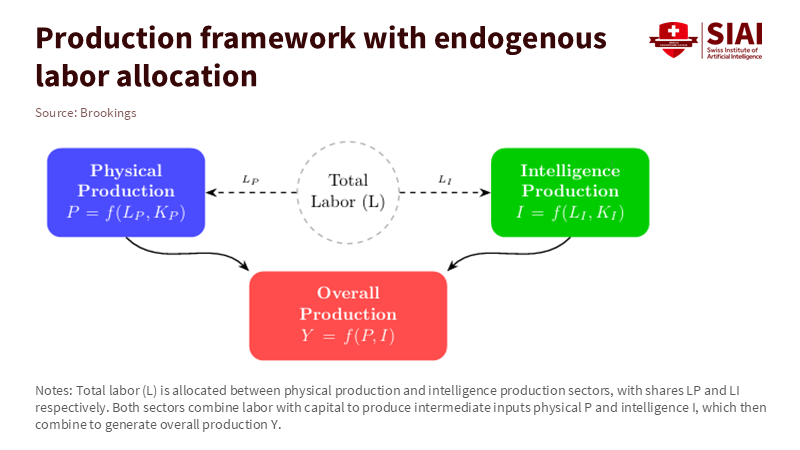

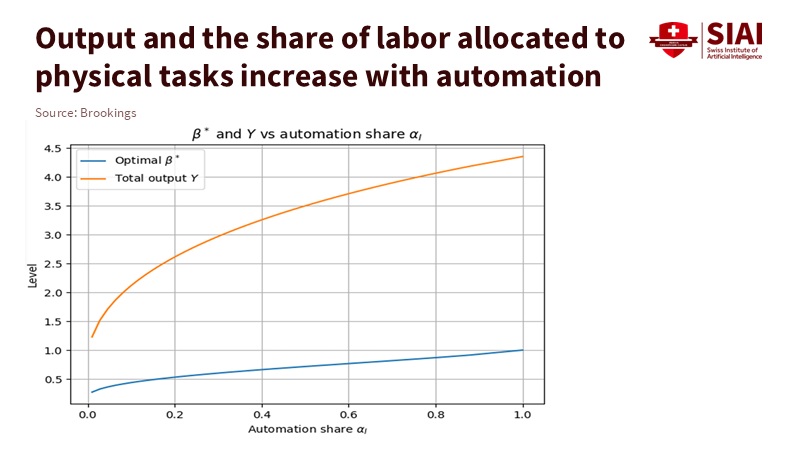

The usual story of production, a combination of labor and physical capital, overlooks a third input that we now need to recognize. Let's call it A, or AI capital. This refers to disembodied, scalable intelligence that can perform tasks previously handled by clerks, analysts, and junior professionals. In a production function with three inputs, written as Y = f(L, K, A), intelligence tasks are the first to be automated because the price of A is dropping faster than that of K. Many cognitive tasks can also be broken down into modules, making them easier to automate. A recent framework formalizes this idea in a two-sector model: intelligence output and physical output combine to produce goods and services. When A becomes inexpensive, intelligence tasks approach saturation, and further gains depend on having complementary physical capacity. This leads to a reallocation of human labor toward physical tasks, with non-monotonic effects on wages: they may rise initially, then fall as automation deepens. Policies that assume a simple decline in wages miss this pattern.
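
The saturation logic can be illustrated with a toy version of a two-sector model. This is a minimal sketch under stated assumptions, not the specification of the framework cited above: the additive intelligence sector, the Cobb-Douglas physical sector, the CES aggregator, and all parameter values are hypothetical choices made only to show the shape of the effect.

```python
# Toy two-sector production function. Every functional form and parameter
# value is an illustrative assumption, not the cited framework's model.

def intelligence_output(ai_capital: float, cognitive_labor: float) -> float:
    """Intelligence sector: AI capital A substitutes one-for-one for cognitive labor."""
    return ai_capital + cognitive_labor


def physical_output(physical_capital: float, physical_labor: float, alpha: float = 0.4) -> float:
    """Physical sector: Cobb-Douglas in physical capital K and physical labor."""
    return physical_capital ** alpha * physical_labor ** (1 - alpha)


def total_output(intel: float, phys: float, rho: float = -1.0, weight: float = 0.5) -> float:
    """CES aggregate of the two sectors; rho < 0 makes them complements.

    Both inputs must be strictly positive when rho is negative.
    """
    return (weight * intel ** rho + (1 - weight) * phys ** rho) ** (1 / rho)


def output_path(ai_levels, physical_capital=100.0, physical_labor=100.0, cognitive_labor=10.0):
    """Total output Y for each level of A, holding K and both kinds of labor fixed."""
    phys = physical_output(physical_capital, physical_labor)
    return [
        total_output(intelligence_output(a, cognitive_labor), phys)
        for a in ai_levels
    ]


levels = [0.0, 10.0, 100.0, 1_000.0, 10_000.0]
for a, y in zip(levels, output_path(levels)):
    print(f"A = {a:>8.0f}  ->  Y = {y:7.2f}")
```

With these assumed weights, output keeps rising as A grows but levels off near twice the fixed physical output: once intelligence is abundant, only more physical capacity raises Y further.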

The real question is not whether AI “replaces jobs” but whether adding another unit of AI capital increases output more than hiring one additional person. For tasks that are clearly defined, the answer is already yes. Studies show significant productivity boosts: mid-level writers completed work about 40% faster using a general-purpose AI assistant, and developers finished coding tasks approximately 56% faster with an AI partner. However, these gains shrink on more complex tasks, where AI struggles with nuance. This is the “jagged frontier” many teams are encountering. The pattern supports the intelligence-first argument: straightforward cognitive tasks will be automated first, while complex judgment tasks will remain human-dominated for now. We define “productivity” as time to completion and quality as measured by standardized criteria, noting that effect sizes vary with task complexity and user expertise.
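
To compare these speed-ups with the output of an additional hire, it helps to convert them into throughput. The sketch below assumes that "X% faster" means an X% cut in completion time, which is an interpretation adopted here for illustration; the function name is hypothetical.

```python
def throughput_multiplier(time_reduction: float) -> float:
    """Tasks completed per hour relative to baseline, given a fractional cut in task time."""
    if not 0 <= time_reduction < 1:
        raise ValueError("time_reduction must be in [0, 1)")
    return 1.0 / (1.0 - time_reduction)


# Figures from the text, read as reductions in completion time (an assumption).
for label, cut in [("writing, ~40% less time", 0.40), ("coding, ~56% less time", 0.56)]:
    print(f"{label}: {throughput_multiplier(cut):.2f}x tasks per hour")
```

Under that reading, a 40% time cut is roughly a 1.67x throughput gain and a 56% cut is roughly 2.27x, before counting any time spent checking the output.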

When A expands while K and L do not, the share of labor can decline even when overall output stays constant. In simple terms, the same amount of production can require fewer workers. But this isn't an inevitable outcome. If physical and intellectual outputs complement rather than replace one another, investments in labs, clinics, logistics, and classrooms can help stabilize wages. This points to a critical shift for education systems: focusing on environments and approaches where physical presence enhances what AI alone cannot provide—care, hands-on skill, safety, and community.

Evidence: Falling AI Capital Prices, Mixed Productivity, Shifting Wages

The price indicators for AI capital are clear. By late 2023, API prices for popular models had dropped significantly, and hardware performance per dollar was improving by about 30% each year. Prices won’t decline uniformly, and newer models might be more expensive, but the overall trend is enough to change how businesses operate. Companies that previously paid junior analysts to consolidate memos are now using prompts and templates instead. Policymakers should read these signals as they would energy or shipping prices: as active factors influencing wages and hiring. We estimate the “price of A” from published per-token API rates and hardware cost-effectiveness; we do not assume uniform access across all institutions.
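
A quick way to see what the hardware trend implies is to compound it. The sketch below assumes the roughly 30% annual improvement holds at a constant rate, which is a simplification, and it covers only the hardware component, not per-token API pricing; the function names are hypothetical.

```python
import math


def relative_cost(years: float, annual_improvement: float = 0.30) -> float:
    """Cost of a fixed amount of compute relative to year 0, with compounding gains."""
    return 1.0 / (1.0 + annual_improvement) ** years


def halving_time(annual_improvement: float = 0.30) -> float:
    """Years until the same compute costs half as much."""
    return math.log(2) / math.log(1.0 + annual_improvement)


for year in range(6):
    print(f"year {year}: {relative_cost(year):.3f} of the original cost")
print(f"cost halves about every {halving_time():.1f} years")
```

At that assumed rate, a fixed compute budget costs a little under half as much after three years, while classroom and lab costs stay roughly where they were.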

The productivity evidence is generally positive but varies widely. Controlled experiments show significant improvements in routine content creation and coding. At the same time, observational studies and workforce surveys show that integrating AI can be challenging and that the benefits are often not immediate. Some teams lose time fixing AI-generated text or adjusting to new workflows, while others achieve notable speed improvements. The result is an increase in task-level performance coupled with friction at the system level. Sector-specific data supports this: the OECD reports that a considerable number of job vacancies are in roles heavily exposed to AI, and that skill demands are changing even where workers lack specialized AI skills. Labor-market rewards have also begun to shift: studies show wage premiums for AI-related skills, typically ranging from 15% to 25%, depending on the market and methodology.

The impact is not evenly distributed. The IMF predicts high exposure to AI in advanced economies where cognitive work predominates. The International Labour Organization (ILO) finds that women are more affected because clerical roles—highly automatable cognitive work—are often filled by women in wealthier countries. There are also new constraints in energy and infrastructure: data center demand could more than double by the end of the decade under specific scenarios, while power grid limitations are already delaying some projects. These issues further reinforce the trend toward prioritizing intelligence, which can outpace the physical capacities needed to support it. As AI capital expands, the potential returns begin to decrease unless physical capacity and skill training keep up. We draw on macroeconomic projections (IMF, IEA) and occupational exposure data (OECD, ILO); however, the uncertainty ranges can be vast and depend on various scenarios.

Managing AI Capital in Schools and Colleges

Education is at the center of this transition because it produces both types of inputs: intelligence and physical capacity. We should treat AI capital as a means to enhance routine thinking and free up human time for more personal work. Early evidence looks promising. A recent controlled trial found that an AI tutor helped students learn more efficiently than in-class active-learning lessons led by experts. Yet adoption is lagging. Surveys show low classroom AI use among teachers, gaps in available guidance, and limited training within institutions. Systems that close these gaps can more effectively translate AI capital into improved student learning while keeping core assessments rigorous. The controlled trial evaluated learning outcomes on aligned topics using standardized measures; survey findings are weighted to reflect national populations.

Three policy directions emerge from the focus on AI capital. First, rebalance the investment mix. If intelligence-based content is becoming cheaper and more effective, allocate limited funds to places where human interaction adds significant value, such as clinical placements, maker spaces, science labs, apprenticeships, and supervised practice. Second, raise professional standards for AI use. Train educators to integrate AI capital with meaningful feedback rather than letting the technology replace their discretion. The objective should not be to apply “AI everywhere,” but to focus on “AI where it enhances learning.” Third, promote equity. Given that clerical and low-status cognitive jobs are more vulnerable and tend to involve a higher percentage of women, schools relying too much on AI for basic tasks risk perpetuating gender inequalities. Track access, outcomes, and time used across demographic groups; leverage this data to direct support—coaching, capstone projects, internship placements—toward students who may be disadvantaged by the very tools that benefit others.

Administrators should approach their planning with a production mindset rather than simply relying on app lists. Consider where AI capital takes over, where it complements human effort, and where it may cause distractions. Use straightforward metrics. If a chatbot can produce decent lab reports, it can free up grading time for face-to-face feedback. If a scheduler can create timetables in seconds, invest staff time in mentorship. If a coding assistant helps beginners work faster, redesign tasks to emphasize design decisions, documentation, and debugging under pressure. In each case, the goal is to direct human labor toward the areas, both physical and relational, where value is amplified.

Policy: Steering AI Capital Toward Shared Benefits

A clear policy framework is developing. Start with transparent procurement that treats AI capital as a utility, establishing clear terms for data use, uptime, and backup plans. Tie contracts to measurable learning outcomes or service results rather than just counting seat licenses. Next, create aligned incentives. Provide time-limited tax breaks or targeted grants for AI implementations that free up staff hours for high-impact learning experiences (like clinical supervision, laboratory work, and hands-on training). Pair these incentives with wage protection or transition stipends for administrative staff who upgrade their skills for student-facing jobs. This approach channels savings from AI capital back into the human interactions that are more difficult to automate.

Regulators should anticipate the obstacles. Growth in data centers and rising electricity needs present real logistical challenges. Education ministries and local governments can collaborate to pool their demand and negotiate favorable computing terms for schools and colleges. They can also publish disclosures regarding the use of AI in curricula and assessments, helping students and employers understand where AI was applied and how. Finally, implement metrics that account for exposure. Track what portion of each program’s assessments comes from physical or supervised activities. Determine how many contact hours each student receives and measure the administrative time freed up by implementing AI. Institutions that manage these ratios will enhance both productivity and the value of education.
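
The three ratios named above can be tracked with very little machinery. The following is a minimal sketch; the class name, field names, and sample figures are all hypothetical and stand in for whatever an institution's own records provide.

```python
from dataclasses import dataclass


@dataclass
class ProgramMetrics:
    """Per-program inputs for one term (all fields are illustrative assumptions)."""
    supervised_assessment_weight: float  # assessment weight from physical or supervised activities
    total_assessment_weight: float       # total assessment weight in the program
    contact_hours: float                 # staffed contact hours delivered
    students: int                        # enrolled students
    admin_hours_before: float            # administrative hours before AI adoption
    admin_hours_after: float             # administrative hours after AI adoption

    def supervised_share(self) -> float:
        """Portion of assessment that comes from physical or supervised activities."""
        return self.supervised_assessment_weight / self.total_assessment_weight

    def contact_hours_per_student(self) -> float:
        """Average contact hours each student receives."""
        return self.contact_hours / self.students

    def admin_hours_freed(self) -> float:
        """Administrative time released by the AI implementation."""
        return self.admin_hours_before - self.admin_hours_after


# Hypothetical program, for illustration only.
nursing = ProgramMetrics(45.0, 100.0, 3600.0, 120, 800.0, 620.0)
print(f"supervised share of assessment: {nursing.supervised_share():.0%}")
print(f"contact hours per student: {nursing.contact_hours_per_student():.1f}")
print(f"admin hours freed: {nursing.admin_hours_freed():.0f}")
```

Reporting these figures side by side makes it visible whether the administrative hours freed by AI are actually reappearing as contact hours.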

Skeptics might question whether the productivity gains are exaggerated and whether new expenses, such as errors, monitoring, and training, cancel them out. They sometimes do. Research and news reports highlight teams whose workloads increased because they needed to verify AI outputs or learn new tools. Others point to mental health issues arising from excessive tool usage. The solution is not to dismiss these concerns, but to focus on design: limit AI capital to tasks with low error risk and affordable verification; adjust assessments to prioritize real-time performance; measure the time saved and reallocate it to more personal work. Where integration is poorly executed, gains diminish. Where it is effectively managed, early successes are more likely to persist.
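
The design rule of low error risk and affordable verification can be turned into a simple screening calculation. This is a rough sketch, assuming that verification and rework times can be estimated per task; the function name and all example numbers are hypothetical.

```python
def net_minutes_saved(
    baseline_min: float,
    ai_draft_min: float,
    verify_min: float,
    error_rate: float,
    rework_min: float,
) -> float:
    """Expected minutes saved per task once checking and rework are counted."""
    expected_ai_cost = ai_draft_min + verify_min + error_rate * rework_min
    return baseline_min - expected_ai_cost


# Hypothetical tasks: (baseline, AI draft, verification, error rate, rework), in minutes.
tasks = {
    "timetable draft": (60.0, 2.0, 10.0, 0.05, 30.0),
    "clinical feedback note": (20.0, 2.0, 15.0, 0.30, 20.0),
}
for name, params in tasks.items():
    saved = net_minutes_saved(*params)
    verdict = "use AI" if saved > 0 else "keep manual"
    print(f"{name}: {saved:+.1f} min per task -> {verdict}")
```

With these made-up numbers the timetable task shows a clear net saving, while the feedback note comes out negative because checking and rework absorb more time than the draft saves.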

Today, one of the most significant labor indicators might be this: intelligence is no longer scarce. The IMF’s figure of 40% exposure reflects the macro reality that AI capital has reached a competitive price-performance level for many cognitive tasks. The risk for education isn’t becoming obsolete; it’s misallocating resources: spending limited funds on teaching thinking skills as if they were still scarce and AI capital were still expensive, while overlooking the physical and interpersonal work where value is now concentrated. The path forward is clear. Treat AI capital as a standard resource. Use it wisely. Implement it where it enhances routine tasks. Shift human labor to areas where it is still needed most: labs, clinics, workshops, and seminars where people connect and collaborate. Track the ratios; evaluate the trade-offs; protect those who are most at risk. If we follow this route, wages need not simply fall with automation; they can rise alongside complementary investment. Schools will fulfill their mission: preparing individuals for the reality of today's world, not an idealized version of it.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Bruegel. 2023. Skills or a degree? The rise of skill-based hiring for AI and beyond (Working Paper 20/2023).

Brookings Institution (Kording, K.; Marinescu, I.). 2025. (Artificial) Intelligence Saturation and the Future of Work (Working paper).

Carbon Brief. 2025. “AI: Five charts that put data-centre energy use and emissions into context.”

GitHub. 2022. “Research: Quantifying GitHub Copilot’s impact on developer productivity and happiness.”

IEA. 2024. Electricity 2024: Analysis and Forecast to 2026.

IEA. 2025. Electricity mid-year update 2025: Demand outlook.

IFR (International Federation of Robotics). 2024. World Robotics 2024 Press Conference Slides.

ILO. 2023. Generative AI and Jobs: A global analysis of potential effects on job quantity and quality.

ILO. 2025. Generative AI and Jobs: A Refined Global Index of Occupational Exposure.

IMF (Georgieva, K.). 2024. “AI will transform the global economy. Let’s make sure it benefits humanity.” IMF Blog.

MIT (Noy, S.; Zhang, W.). 2023. Experimental Evidence on the Productivity Effects of Generative Artificial Intelligence (working paper).

OECD. 2024. Artificial intelligence and the changing demand for skills in the labour market.

OECD. 2024. How is AI changing the way workers perform their jobs and the skills they require? Policy brief.

OECD. 2024. The impact of artificial intelligence on productivity, distribution and growth.

OpenAI. 2023. “New models and developer products announced at DevDay.” (Pricing update).

RAND. 2025. AI Use in Schools Is Quickly Increasing but Guidance Lags Behind.

RAND. 2024. Uneven Adoption of Artificial Intelligence Tools Among U.S. Teachers and Principals.

Scientific Reports (Kestin, G., et al.). 2025. “AI tutoring outperforms in-class active learning.”

Epoch AI. 2024. “Performance per dollar improves around 30% each year.” Data Insight.

University of Melbourne / ADM+S. 2025. “Does AI really boost productivity at work? Research shows gains don’t come cheap or easy.”
