AI Resume Verification and the End of Blind Trust in CVs

AI resume verification is now essential for hiring. Verified records make algorithmic screening fair and transparent. Without them, AI quietly decides who gets work.

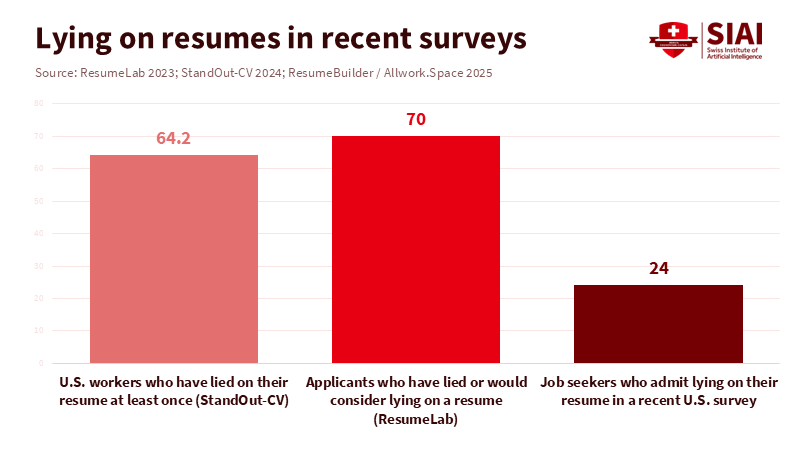

Recent surveys show that about two-thirds of U.S. workers admit they have lied on a resume at least once or would consider doing so. At the same time, AI is becoming the primary filter for job applications. One recent study suggests that nearly half of hiring managers already use AI to screen resumes, a share expected to rise to 83% by the end of 2025. Another survey found that 96% of hiring experts use AI at some point in the recruiting process, mainly for resume screening and review. This creates a trust problem: self-reported claims, often overstated or false, are evaluated by algorithms that offer no transparency. AI resume verification matters because the old assumption, that an experienced human would catch the lies, no longer holds for most job applicants. If AI decides who gets seen by a human, the information it assesses must be testable and verifiable. Verification should therefore be treated as essential labor-market infrastructure, not just an add-on in applicant tracking software. Demonstrating that it can make screening fairer will also help build trust among stakeholders.

Why AI Resume Verification Has Become Unavoidable

The push to automate screening is not just a trend. Many employers in white-collar roles now receive hundreds of applications for each position. Some applicant tracking systems report more than 200 applications per job, about three times the pre-2017 level. With this flood of applications, human screeners cannot read every CV, much less investigate every claim. AI tools are used to filter, score, and often reject candidates before a human ever sees their profile. One research review projects that by the end of 2025, 83% of companies will use AI to review resumes, up from 48% today. A growing number of businesses are becoming comfortable allowing AI to reject applicants at various stages. AI resume verification is now essential because the primary hiring process has shifted to rely on algorithmic gatekeepers, even when organizations recognize that these systems can be biased or inaccurate.

The problem is that the data entering these systems is often unreliable. One study estimates that around 64% of U.S. workers have lied about personal details, skills, experience, or references on a resume at least once. Another survey indicates that about 70% of applicants have lied or would consider lying to improve their chances. References, once seen as a safeguard, are also questionable. One poll found that a quarter of workers admit to lying about their references, with some using friends, family, or even paid services to impersonate former supervisors. Recruiters also report a rise in AI-generated resumes and deepfake candidates in remote interviews. Vendors now promote AI fraud-detection tools that claim to reduce hiring-related fraud by up to 85%. However, these tools are layered on top of systems that still rely on unverified self-reports. AI resume verification is therefore not just another feature; it is the essential backbone of a hiring system that is already automated but not yet reliable. Making clear how verification improves transparency can also reassure policymakers and educators about the integrity of that system.

Designing AI Resume Verification Around Verified Data

A typical response to this problem is to call for smarter algorithms. The idea is that current AI screening tools can be retrained to spot inconsistencies and statistical red flags in resumes. However, recent research indicates that more complex models are not always more trustworthy. A study highlighted by a leading employment law firm found that popular AI tools favored resumes with names commonly associated with white individuals 85% of the time, with a slight bias toward male-associated names. Analyses of AI hiring systems also reveal clear age and gender biases, even when protected characteristics are not explicitly included as inputs. If AI learns from historical hiring data and text patterns that already contain discrimination and exaggeration, simply adding more parameters won’t address the underlying issues. AI resume verification cannot rely solely on clever pattern recognition or self-reported narratives; it must be based on data verified at the source.

This leads to a second approach: obtaining better data, not just making the model more complex. AI resume verification means connecting algorithms to verified employment and education records that can be checked automatically. The rapid growth of the background-screening industry shows both the demand and the existing challenges. One market analysis estimates that the global background-screening market was about USD 3.2 billion in 2023 and is expected to more than double to USD 7.4 billion by 2030. Currently, these checks are slow, manual, and conducted late in the hiring process. In an AI resume verification system, verified information would come first. Employment histories, degrees, major certifications, and key licenses would exist as portable, cryptographically signed records that candidates control but cannot change, and platforms would query them via standard APIs. AI would then rank candidates primarily on these verifiable signals (skills, tenure, and, where appropriate, performance ratings) while treating unverified claims and free-text narratives as secondary. A full return to human-only screening is becoming impractical at the current volume of applicants. The more realistic path is to establish standards for verified data and to hold industry leaders and AI developers to building on them responsibly.
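
The idea of signed records feeding a ranking step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the record fields, and the weights are hypothetical, and an HMAC with a shared demo key stands in for the public-key signatures a real credential system would use, in which the verifier would hold only the issuer's public key.

```python
import hashlib
import hmac
import json

# Hypothetical issuer key for the demo. Assumption: a real system would use
# public-key signatures instead of a shared secret; HMAC is a stand-in.
ISSUER_KEY = b"demo-issuer-secret"


def sign_record(record, key=ISSUER_KEY):
    """Return a hex signature over a canonical JSON encoding of the record."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def is_verified(record, signature, key=ISSUER_KEY):
    """Check that the record is unchanged since the issuer signed it."""
    return hmac.compare_digest(sign_record(record, key), signature)


def score_candidate(claims, verified_weight=1.0, unverified_weight=0.2):
    """Sum claim values, weighting verified claims above unverified ones.

    Each claim is a dict with "record", "signature" (or None), and "value".
    The weights are illustrative assumptions, not recommended settings.
    """
    total = 0.0
    for claim in claims:
        signature = claim.get("signature")
        ok = signature is not None and is_verified(claim["record"], signature)
        total += claim["value"] * (verified_weight if ok else unverified_weight)
    return total
```

A candidate who alters a signed employment record after it was issued would fail `is_verified`, so the altered claim would fall back to the lower, unverified weight instead of being trusted at face value.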

AI Resume Verification and the Future of Education Records

Once hiring moves in this direction, education systems become part of the equation. Degrees, short courses, micro-credentials, and workplace learning all contribute to resumes. If AI resume verification becomes standard in hiring, the formats and standards of educational records will influence who stands out to employers. However, current educational data is often fragmented and unclear. The OECD’s Skills Outlook 2023 notes that the digital and green transitions are outpacing the capacity of education and skills policies, and that too few adults engage in the formal or informal learning needed to keep up. When learners take short courses or stackable credentials, the results are typically recorded as PDFs or informal badges that many systems cannot reliably interpret. This creates another trust gap: AI may see a degree title and a course name, but it does not capture the skills or performance behind them.

AI resume verification can help fix this, but only if educators take action. Universities, colleges, and training providers can issue digital credentials that are both easy to read and machine-verifiable. These would be structured statements of skills linked to assessments, signed by the institution, and revocable if found to be fraudulent later. Large learning platforms and professional organizations can do the same for bootcamps, MOOCs, and continuing education. Labor-market institutions are already concerned that workers in non-standard or platform jobs lack clear records of their experience. Recent work by the OECD and ILO on measuring platform work shows how some of this labor remains invisible. If these workers could maintain verified records of hours worked, roles held, and ratings received, AI resume verification could highlight their histories rather than overlook them. For education leaders, the challenge is clear: transcripts and certificates must evolve from static documents into living, portable data assets that support both human decisions and AI-driven screening.
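
A structured, revocable credential of the kind described above might look like the following minimal sketch. All class and field names are hypothetical, the revocation registry is a simple in-memory stand-in for whatever status service an issuer would actually run, and signature checking is assumed to happen separately.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SkillStatement:
    skill: str        # the skill being attested
    assessment: str   # the assessment that evidences the skill
    result: str       # grade or pass/fail as recorded by the issuer


@dataclass(frozen=True)
class Credential:
    credential_id: str
    issuer: str
    holder: str
    skills: tuple     # tuple of SkillStatement; frozen so it cannot be edited


class RevocationRegistry:
    """Hypothetical issuer-side list of revoked credential IDs."""

    def __init__(self):
        self._revoked = {}

    def revoke(self, credential_id, reason):
        self._revoked[credential_id] = reason

    def is_revoked(self, credential_id):
        return credential_id in self._revoked


def usable_skills(credential, registry):
    """Return the skills a screener may rely on; none if revoked."""
    if registry.is_revoked(credential.credential_id):
        return []
    return [statement.skill for statement in credential.skills]
```

The design choice worth noting is that revocation lives with the issuer, not in the credential itself: the holder carries the record, but a screener checks its current status before relying on it.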

Governing AI Resume Verification Before It Governs Us

Trustworthy data alone will not make AI resume verification fair. People have good reasons to be cautious about AI in hiring. A 2023 Pew Research Center survey found that most Americans expect AI to have a major impact on workers, and that more believe it will harm workers than help them. Recent research in Australia illustrates this concern: an independent study showed that AI interview tools mis-transcribe and misinterpret candidates with strong accents or speech-affecting disabilities, with error rates reaching 22% for some non-native speakers. OECD surveys also indicate that outcomes are better when AI adoption is combined with consultation and training for workers, not just technology purchases. AI resume verification therefore needs governance guidelines alongside technical standards. Candidates should know when AI is used, what verified data is being evaluated, and how to challenge mistakes or outdated information. Regulators can mandate regular bias audits of AI systems, especially when those systems draw on shared employment and education records that act as public infrastructure.

For educators and labor-market policymakers, the goal is to shape AI resume verification before it quietly determines opportunity for a whole generation. Some immediate priorities are clear. Employers can ensure that automated decisions to reject candidates are never based solely on unverifiable claims. Any negative flags from AI resume verification, such as suspected fraud or inconsistencies, should lead to human review rather than automatic exclusion. Vendors can be required to keep the verification layer separate from the scoring layer, so that bias in ranking models cannot affect the integrity of shared records. Education authorities can support the development of open, interoperable standards for verifiable credentials and employment records, and make their use a condition for public funding. Over time, AI resume verification can support fairer hiring, more precisely targeted financial aid, better matching of workers to retraining, and improved measurement of the benefits of different types of learning.
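
These priorities can be read as a simple routing rule. The sketch below is one possible encoding, not a prescribed policy: the function name, the threshold, and the inputs are assumptions made for illustration. The verification flags and the ranking score arrive as separate inputs, which mirrors the separation of the verification layer from the scoring layer.

```python
from enum import Enum


class Outcome(Enum):
    ADVANCE = "advance"
    HUMAN_REVIEW = "human_review"
    REJECT = "reject"


def route(score, threshold, verification_flags, verified_claim_count):
    """Decide the next step for an application.

    score: output of the ranking model (scoring layer).
    verification_flags: issues raised by the verification layer,
        e.g. suspected fraud or inconsistencies.
    verified_claim_count: number of claims backed by verified records.
    """
    # Any negative verification flag goes to a person, never to auto-reject.
    if verification_flags:
        return Outcome.HUMAN_REVIEW
    if score >= threshold:
        return Outcome.ADVANCE
    # A low score resting only on unverifiable claims is not grounds
    # for automatic rejection.
    if verified_claim_count == 0:
        return Outcome.HUMAN_REVIEW
    return Outcome.REJECT
```

Under this rule, automatic rejection is possible only when there are no verification flags and the low score is supported by at least one verified record.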

If two-thirds of workers admit they have lied or would lie on a resume, and over four-fifths of employers are moving towards AI screening, maintaining the current system is not feasible. A choice is being made by default: unverified text, filtered by unregulated algorithms, quietly decides who gets an interview and who disappears. The solution is to treat AI resume verification as a fundamental public good. This means developing verifiable records of learning and work that can move with people across sectors and borders, designing AI systems that rely on these records instead of guesswork, and enforcing rules that keep human judgment involved where it matters most. For educators, administrators, and policymakers, the need to act is urgent. They can help create an ecosystem in which AI resume verification makes skills and experience more visible, especially for those outside elite networks, or they can accept a future in which automated hiring reinforces existing biases. The technology is already available; what is lacking is the commitment to use it to build trust.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Business Insider. (2025, July). Companies are relying on aptitude and personality tests more to combat AI-powered job hunters. Business Insider.

Codica. (2025, April 1). How to use AI for fraud detection and candidate verification in hiring platforms. Codica.

Fisher Phillips. (2024, November 11). New study shows AI resume screeners prefer white male candidates. Fisher & Phillips LLP.

HRO Today. (2023). Over half of employees report lying on resumes. HRO Today.

iSmartRecruit. (2025, November 13). Stop recruiting scams: Use AI to identify fake candidates. iSmartRecruit.

OECD. (2023a). The impact of AI on the workplace: Main findings from the OECD AI surveys of employers and workers. OECD Publishing.

OECD. (2023b). OECD skills outlook 2023. OECD Publishing.

OECD & ILO. (2023). Handbook on measuring digital platform employment and work. OECD Publishing / International Labour Organization.

Pew Research Center. (2023, April 20). AI in hiring and evaluating workers: What Americans think. Pew Research Center.

StandOut-CV. (2025, March 25). How many people lie on their resume to get a job? StandOut-CV.

The Interview Guys. (2025, October 15). 83% of companies will use AI resume screening by 2025 (despite 67% acknowledging bias concerns). The Interview Guys.

Time. (2025, August 4). When your job interviewer isn’t human. Time Magazine.

UNC at Chapel Hill. (2024, June 28). The truth about lying on resumes. University of North Carolina Policy Office.

Verified Market Research. (2024). Background screening market size and forecast. Verified Market Research.
