AI Hiring Discrimination Is a Design Choice, Not an Accident

AI hiring discrimination comes from human design choices, not neutral machines. “Autonomous” systems let organizations hide responsibility while deepening bias. Education institutions must demand audited, accountable AI hiring tools that protect fair opportunity.

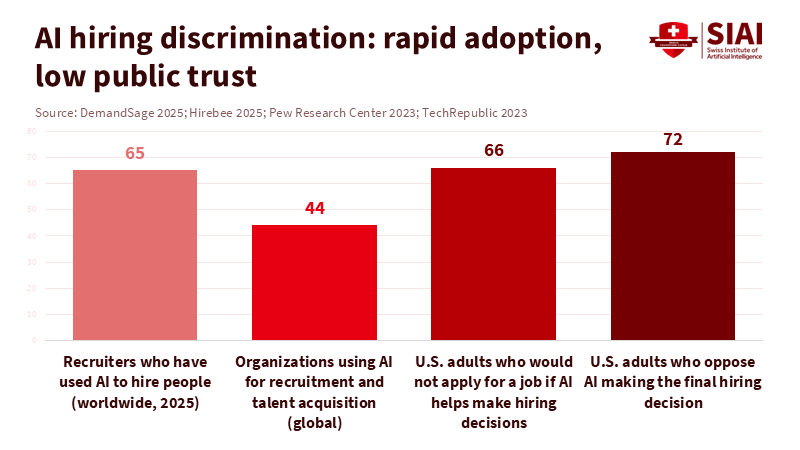

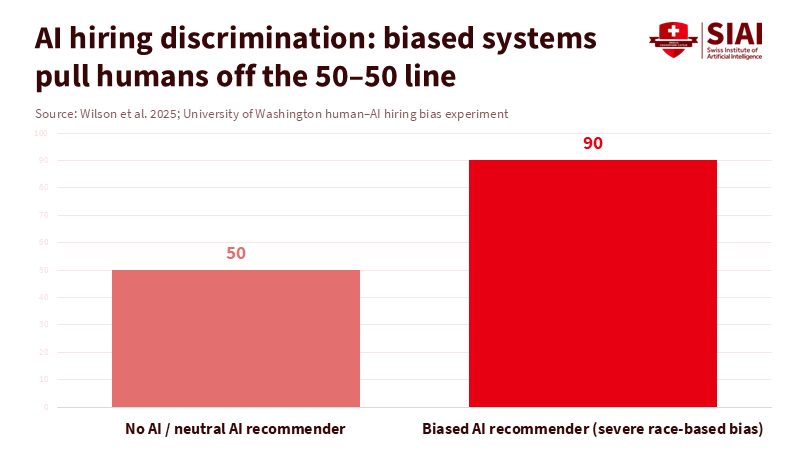

Two numbers highlight the troubling moment we are in. By 2025, more than 65% of recruiters reported having used AI in hiring. Yet 66% of adults in the United States say they would not apply for a job if AI were used to decide who gets hired. The hiring process is quietly becoming a black box that many people, especially those already facing bias, are choosing to avoid. At the same time, recent studies reveal how strongly biased tools can sway human judgment. In one large study, participants who received racially biased AI recommendations followed them, choosing majority-white or majority-non-white candidates, depending on which group the AI favored, over 90% of the time. Without AI, their choices split nearly fifty-fifty. The lesson is clear: AI hiring discrimination is not a side effect of neutral automation. It results directly from how systems are designed, managed, and governed.

AI hiring discrimination as a design problem

Much of the public debate still views AI hiring discrimination as an unfortunate glitch in otherwise efficient systems. That viewpoint is comforting, but it is incorrect. Bias in automated screening is not a ghost in the machine. It stems from the data designers choose, the goals they set, and the guidelines they ignore. Recent research on résumé-screening models emphasizes this point. One university study found that AI tools ranked names associated with white candidates ahead of others 85% of the time, even when the underlying credentials were the same. Another large experiment with language-model-based hiring tools showed that leading systems favored female candidates overall while disadvantaging Black male candidates with identical profiles. These patterns are not random noise. They demonstrate that design choices embed old hierarchies into new code, shifting discrimination from the interview room into the hiring infrastructure.

Seen from another angle, autonomy is not a feature of the AI system; it is a narrative people tell. Research on autonomy and AI argues that threats to human choice arise less from mythical “self-aware” systems and more from unclear, poorly governed design. When we label a hiring tool as “autonomous,” we let everyone in the chain disown responsibility for its outcomes. Yet the same experimental evidence that raises concerns about autonomy also shows that people follow biased recommendations with startling compliance. When a racially biased assistant favored white candidates for high-status roles, human screeners chose majority-white shortlists more than 90% of the time. When the assistant favored non-white candidates, they switched to majority-non-white lists at almost the same rate. The algorithm did not erase human agency; it quietly redirected it.

When autonomy becomes a shield for AI hiring discrimination

This redirection has real legal and social consequences. Anti-discrimination law in the United States already treats hiring tests and scoring tools as “selection procedures,” regardless of how they are implemented. In 2023, the Equal Employment Opportunity Commission issued guidance confirming that AI-driven assessments fall under Title VII’s disparate impact rules. Employers cannot hide behind a vendor or a model. If a screening system filters out protected groups at higher rates and the employer cannot justify the practice, the employer is liable. The EEOC’s first settlement in an AI hiring discrimination case illustrates this point. A tutoring company whose software automatically rejected older applicants agreed to pay compensation and change its practices, even though the screening rule itself was simple. The message to the market is clear: in the eyes of the law, AI hiring discrimination is not an accident. It is a form of design-mediated bias.
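The disparate impact test starts from a simple comparison of selection rates. The EEOC’s 2023 guidance discusses the “four-fifths rule” as a general rule of thumb: if one group’s selection rate is less than 80% of the rate for the group selected most often, that may indicate adverse impact, although the guidance cautions that the rule is not conclusive on its own. The Python sketch below computes those ratios. The group labels and counts are hypothetical, and a real audit would also need statistical testing and legal review.

```python
from typing import Dict, Tuple

# Each group maps to (number selected, number of applicants). Counts are hypothetical.
Outcomes = Dict[str, Tuple[int, int]]


def selection_rates(outcomes: Outcomes) -> Dict[str, float]:
    """Return selected / applicants for every group."""
    rates = {}
    for group, (selected, applicants) in outcomes.items():
        if applicants <= 0:
            raise ValueError(f"group {group!r} has no applicants")
        rates[group] = selected / applicants
    return rates


def impact_ratios(outcomes: Outcomes) -> Dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group had any selections; ratios are undefined")
    return {group: rate / highest for group, rate in rates.items()}


def four_fifths_flags(outcomes: Outcomes, threshold: float = 0.8) -> Dict[str, bool]:
    """Flag groups whose impact ratio falls below the rule-of-thumb threshold."""
    return {group: ratio < threshold for group, ratio in impact_ratios(outcomes).items()}


if __name__ == "__main__":
    example = {"group_a": (48, 80), "group_b": (12, 40)}
    print(impact_ratios(example))      # group_b's ratio is 0.5
    print(four_fifths_flags(example))  # group_b is flagged
```

With these hypothetical counts, group_b’s selection rate (30%) is half of group_a’s (60%), so its ratio of 0.5 falls under the 0.8 threshold and it is flagged for closer scrutiny.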

Yet the rhetoric of autonomy continues to dull the moral implications. When companies say “the algorithm made the decision,” they imply that no human intent was involved and that they therefore bear less blame. This matters for people who already carry the burden of structural bias. If Black or disabled candidates learn that automated video interviews mis-transcribe non-standard accents, with error rates of up to 22% for some groups, many will simply choose not to apply. If students from low-income backgrounds hear that employers use opaque résumé filters trained on past “top performers,” they may expect their non-traditional paths to count against them. Surveys indicate that two-thirds of U.S. adults would avoid applying for roles that rely on AI in hiring. Opting out is a rational act of self-protection, but it also pushes people away from mainstream opportunities, undermining decades of effort to widen access.

AI hiring discrimination in education and early careers

These issues are not limited to corporate hiring. Education systems are now at the center of AI hiring discrimination, both as employers and as entry points for students. Universities and school networks increasingly use AI-enabled applicant tracking systems to screen staff and faculty. Recruitment firms specializing in higher education promote automated resume ranking, predictive scoring, and chatbot pre-screening as standard features. In international school recruitment, AI tools are marketed as a way to sift through global teacher pools and reduce time-to-hire. The promise is speed and efficiency, but the risk is quietly excluding the diverse voices that education claims to value. When an algorithm learns from past hiring data, in which certain nationalities, genders, or universities dominate, it can replicate those patterns at scale unless designers intervene.

AI hiring discrimination also affects students long before they enter the job market. Large educational employers and graduate programs are experimenting with AI-based games, video interviews, and written assessments. One Australian study found that AI hiring systems struggled with diverse accents, with speech-recognition error rates of 12 to 22% for some non-native English speakers. These errors can lower scores even when the content of an answer is strong. Meanwhile, teacher-training programs such as Teach First have had to rethink their recruitment because many applicants now use AI to write their application essays. The hiring pipeline now has AI on both sides: candidates rely on it to write, and institutions rely on it to evaluate. Without clear safeguards, that cycle can strip out nuance, context, and individuality, particularly for first-generation students and international graduates who do not fit the patterns in the training data.

Educators cannot treat this as someone else's problem. Career services now routinely coach students on how to navigate AI-driven hiring, and business schools are briefing students on what AI-based screening means for job seekers and how to prepare for it. At the same time, higher education recruiters are adopting AI to shortlist staff and adjunct faculty. This dual exposure means that universities sit on both sides of AI hiring discrimination: they deploy these systems as employers and send students into them as graduates. That position carries a special responsibility. Institutions that claim to support equity cannot outsource hiring to opaque tools and then express surprise when appointment lists and fellowships reflect familiar lines of race, gender, and class.

Designing accountable AI hiring systems

If AI hiring discrimination is a design problem, then design is where policy must act. The first step is to change the default narrative. AI systems should be treated as supportive tools within a human-led hiring framework, not as autonomous decision-makers. Legal trends are moving in this direction. New state-level laws in the United States, such as recent statutes in Colorado and elsewhere, treat both intentional and unintentional algorithmic harms as subjects of regulation rather than merely technical adjustments. In Europe, anti-discrimination and data-protection rules, along with the emerging AI Act, place clear duties on those who build and deploy high-risk systems, including hiring tools, to test them and document their impacts. The core idea is straightforward: if a tool screens people out of jobs or education, its operators must explain how it works, measure who it harms, and address those harms in practice, not just in code.

Education systems can move faster than general regulators. Universities and school networks can require any AI hiring or admissions tool they use to undergo regular fairness audits, with results reported to governance bodies that include both student and staff representatives. They can prohibit fully automated rejections for teaching roles, scholarships, and student jobs, insisting that a human decision-maker review any adverse outcome from a high-risk model. They can enforce procurement rules that reject systems whose vendors will not disclose essential information about training data, evaluation, and error rates across demographic groups. Most importantly, they can integrate design literacy into their curricula. Computer-science and data-science programs should treat questions of autonomy, bias, and accountability as fundamental, rather than optional.
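Fairness audits and vendor disclosures both hinge on one measurement: how often a tool wrongly screens out qualified people in each demographic group. As a minimal sketch, assuming an institution can label a sample of past applicants as qualified or not through independent human review, the Python below computes a per-group false rejection rate and the largest gap between groups, the kind of figure a governance body could track over time. The record fields and the notion of “qualified” are hypothetical simplifications, not a standard audit method.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class ScreeningRecord:
    group: str       # demographic group, collected with consent (hypothetical field)
    qualified: bool  # verdict from independent human review (assumed available)
    advanced: bool   # True if the AI tool passed the candidate to the next stage


def false_rejection_rates(records: Iterable[ScreeningRecord]) -> Dict[str, float]:
    """Share of qualified candidates in each group that the tool screened out."""
    qualified = defaultdict(int)
    rejected = defaultdict(int)
    for record in records:
        if not record.qualified:
            continue  # only qualified candidates can be falsely rejected
        qualified[record.group] += 1
        if not record.advanced:
            rejected[record.group] += 1
    return {group: rejected[group] / count for group, count in qualified.items()}


def largest_gap(rates: Dict[str, float]) -> float:
    """Difference between the highest and lowest group error rates."""
    if not rates:
        raise ValueError("no qualified candidates to audit")
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    sample = (
        [ScreeningRecord("group_a", True, True)] * 3
        + [ScreeningRecord("group_a", True, False)]
        + [ScreeningRecord("group_b", True, True)] * 2
        + [ScreeningRecord("group_b", True, False)] * 2
        + [ScreeningRecord("group_b", False, False)]
    )
    rates = false_rejection_rates(sample)
    print(rates)               # group_a: 0.25, group_b: 0.5
    print(largest_gap(rates))  # 0.25
```

Counting errors only among qualified candidates targets the harm at stake here, people who should advance but are filtered out. A fuller audit would pair it with selection-rate comparisons and checks that each group's sample is large enough to be meaningful.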

None of this will be easy. Employers will argue that strict rules on AI hiring discrimination slow down recruitment and hurt competitiveness. Vendors will caution that revealing details of their systems exposes trade secrets. Some policymakers will worry that strong liability rules could drive innovation elsewhere. These concerns deserve consideration, but they should not dominate the agenda. Studies of AI in recruitment already show that well-designed systems can promote fairness when built on diverse, high-quality data and linked to clear accountability. Public trust, especially among marginalized groups, will increase when people see that there are ways to contest decisions, independent audits of outcomes, and genuine consequences when tools fail. In the long run, hiring systems that protect autonomy and dignity are more likely to attract talent than those that treat applicants as mere data points.

The opening numbers show why this debate cannot wait. Most recruiters use AI to help make decisions, while most adults say they would rather walk away than apply through an AI-driven process. Something fundamental has broken in the social contract around work. The evidence that biased recommendations can push human choices to extremes underscores that leaving design unchecked will not merely maintain existing inequalities; it will deepen them. For education systems, the stakes are even higher. They are preparing the next generation of designers and decision-makers while depending on systems that could shut those students out. The answer is not to ban AI from hiring. Instead, we must recognize that every “autonomous” system is a series of human decisions in disguise. Policy should make that chain visible, traceable, and accountable. Only then can we move from a landscape of AI hiring discrimination to one that genuinely broadens human autonomy.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Aptahire. (2025). Top 10 reasons why AI hiring is improving talent acquisition in education.

Brookings Institution. (2025). AI’s threat to individual autonomy in hiring decisions.

Chen, Z. (2023). Ethics and discrimination in artificial intelligence-enabled recruitment. Humanities and Social Sciences Communications.

DemandSage. (2025). AI recruitment statistics for 2025.

Equal Employment Opportunity Commission. (2023). Assessing adverse impact in software, algorithms, and artificial intelligence used in employment selection procedures under Title VII of the Civil Rights Act of 1964.

Equal Employment Opportunity Commission. (2024). What is the EEOC’s role in AI?

European Commission. (2024). AI Act: Regulatory framework on artificial intelligence.

European Union Agency for Fundamental Rights. (2022). Bias in algorithms – Artificial intelligence and discrimination.

Harvard Business Publishing Education. (2025). What AI-based hiring means for your students.

Hertie School. (2024). The threat to human autonomy in AI systems is a design problem.

Milne, S. (2024). AI tools show biases in ranking job applicants’ names according to perceived race and gender. University of Washington News.

Taylor, J. (2025). People interviewed by AI for jobs face discrimination risks, Australian study warns. The Guardian.

The Guardian. (2025). Teach First job applicants will get in-person interviews after more apply using AI.

Thomson Reuters. (2021). New study finds AI-enabled anti-Black bias in recruiting.

TPP Recruitment. (2024). How is AI impacting higher education recruitment?

TeachAway. (2025). AI tools that are changing how international schools hire teachers.

U.Va. Sloane Lab. (2025). Talent acquisition and recruiting AI index.

Wilson, K., & Caliskan, A. (2025). Gender, race, and intersectional bias in AI resume screening via language model retrieval.
