Algorithmic Targeting Is Not Segregation: Fix Outcomes Without Breaking the Math

Optimization isn’t segregation

Impose variance thresholds and independent audits

Require delivery reports and fairness controls

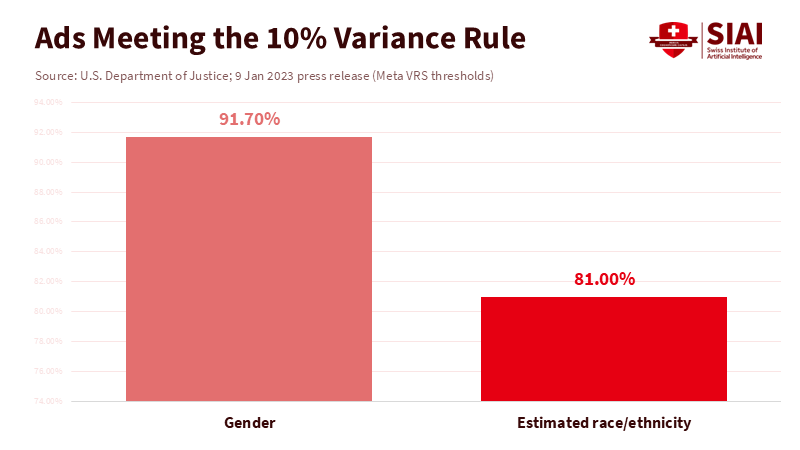

The key statistic in the public debate isn't about clicks or conversions. It's the 10% variance cap that U.S. regulators required Meta to meet for most housing ads by December 31, under a court-monitored settlement. This agreement requires the company’s “Variance Reduction System” to reduce the gap between eligible audiences and actual viewers, by sex and estimated race or ethnicity, to 10% or less for most ads, with federal oversight until June 2026. This is an outcome rule, not a moral judgment. It doesn't claim that “the algorithm is racist.” Instead, it states, “meet this performance standard, or fix your system.” As schools and governments debate whether algorithmic targeting in education ads amounts to segregation, we should remember this vital idea. The way forward is through measurable outcomes and responsible engineering, without labeling neutral, math-driven optimization as an act of intent.
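
To make the idea of an outcome rule concrete, here is a minimal sketch of a variance check. It assumes a simple measure: half the sum of absolute differences between each group's share of the eligible audience and its share of actual viewers. That measure, the function names, and the audience counts are illustrative assumptions, not the formula the settlement or Meta's Variance Reduction System actually uses.

```python
def delivery_variance(eligible: dict[str, float], delivered: dict[str, float]) -> float:
    """Gap between eligible-audience shares and delivered-audience shares.

    Assumed metric (hypothetical, not the settlement's definition):
    half the sum of absolute share differences across groups.
    Returns a value between 0.0 (identical mix) and 1.0 (no overlap).
    """
    e_total = sum(eligible.values())
    d_total = sum(delivered.values())
    if e_total <= 0 or d_total <= 0:
        raise ValueError("both audiences must be non-empty")
    groups = set(eligible) | set(delivered)
    return 0.5 * sum(
        abs(eligible.get(g, 0) / e_total - delivered.get(g, 0) / d_total)
        for g in groups
    )


def within_cap(eligible: dict[str, float], delivered: dict[str, float],
               cap: float = 0.10) -> bool:
    """True when the delivery gap is at or below the cap (10% by default)."""
    return delivery_variance(eligible, delivered) <= cap


# Made-up counts for one ad: who was eligible vs. who actually saw it.
eligible = {"women": 5200, "men": 4800}
delivered = {"women": 300, "men": 700}

variance = delivery_variance(eligible, delivered)
print(f"variance = {variance:.2f}, within cap: {within_cap(eligible, delivered)}")
```

The check says nothing about why the gap exists. It only reports whether delivery stayed inside the limit, which is the sense in which the rule is about outcomes rather than intent.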

What algorithmic targeting actually does

Algorithmic targeting has two stages. First, advertisers and the platform define a potential audience using neutral criteria. Then, the platform’s delivery system decides who actually sees each ad based on predicted relevance, estimated value, and budget limits. At the scale of social media, this second stage is the engine. Most ads won't reach everyone in the target group; the delivery algorithm sorts, ranks, and distributes resources. Courts and agencies understand this distinction. In 2023, the Justice Department enforced an outcome standard for housing ads on Meta, requiring the new Variance Reduction System to keep demographic disparities within specific limits and report progress to an independent reviewer. This solution targeted delivery behavior instead of banning optimization or calling it segregation. The lesson is clear: regulate what the system does, not what we fear it might mean.

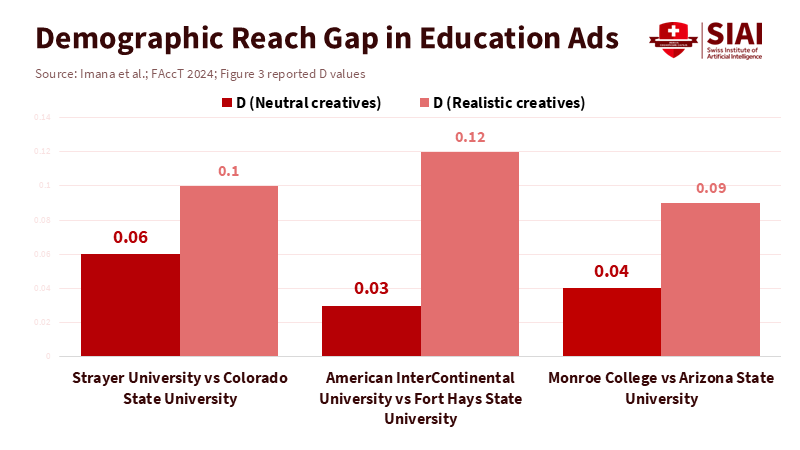

Critics argue that even neutral systems can lead to unequal results. This is true and has been documented. In 2024, researchers from Princeton and USC ran paired education ads and found that Meta’s delivery was racially skewed: ads for some for-profit colleges reached a higher proportion of Black users than ads for similar public universities, even when the ads were neutral. When more “realistic” creatives were used, this racial skew increased. Their method controlled for confounding factors by pairing similar ads and analyzing aggregated delivery reports, a practical approach for investigating a complex system. These findings are essential. They illustrate disparate impact (an outcome gap), not proof of intent. Policy should recognize this difference.
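
A paired comparison of this kind can be sketched with a standard two-proportion z-test on aggregated delivery counts. This is an illustrative simplification, not the researchers' actual statistical procedure. The function name and the counts are hypothetical, and the sketch assumes the audit already has a per-ad count of viewers in the group of interest.

```python
import math


def two_proportion_z(hits_a: int, n_a: int, hits_b: int, n_b: int):
    """Compare the share of a group reached by ad A vs. paired ad B.

    Returns (difference in shares, z statistic, two-sided p-value)
    using the pooled two-proportion z-test.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each ad needs at least one viewer")
    p_a = hits_a / n_a
    p_b = hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data; test is undefined")
    z = (p_a - p_b) / se
    # Two-sided p-value from the normal distribution: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a - p_b, z, p_value


# Made-up delivery reports for a matched pair of ads with the same
# targeting, budget, and schedule (hypothetical numbers).
diff, z, p_value = two_proportion_z(hits_a=300, n_a=1000, hits_b=200, n_b=1000)
print(f"share gap = {diff:.2f}, z = {z:.2f}, p = {p_value:.2g}")
```

Because both ads in the pair share the same targeting, budget, and timing, a large and statistically significant gap points to the delivery stage rather than to the advertiser's choices.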

The legal line on algorithmic targeting

The current case that sparked this debate claims that Meta’s education ad delivery discriminates and that its platforms, as public accommodations in Washington, D.C., provide a different quality of service to some users. In July, the D.C. Superior Court allowed the suit to move forward. It categorized the claims as “archetypal” discrimination under D.C. law and suggested that nondisclosure of how the system operates could constitute a deceptive trade practice. This decision permits the case to continue but does not provide a final ruling. It indicates that state civil rights and consumer protection laws can address algorithmic outcomes. Still, it does not resolve the key question: when does optimization become segregation? The answer should rely on intent and whether protected characteristics (or close proxies) are used as decision inputs, rather than on whether disparities exist at the group level after delivery.

There is a straightforward way to draw this line. Disparate treatment, which refers to the intentional use of race, sex, or similar indicators, should result in liability. Disparate impact, on the other hand, refers to unequal outcomes from neutral processes, which should prompt engineering duties and audits. This is how the 2023 housing settlement operates: it sets numerical limits, appoints an independent reviewer, and allows the platform an opportunity to reduce variance without banning prediction. This is also the approach for other high-risk systems: we require testing and transparency, not blanket condemnations of mathematics. Applying this model of outcomes and audits to education ads would protect students without labeling algorithmic targeting as segregation.

Evidence of bias is objective; the remedy should be audits, not labels

The body of research on delivery bias is extensive. Long before the latest education ad study, audits showed that Meta’s delivery algorithm skewed the delivery of job ads by race and gender, even when the advertiser's targeting was neutral. A notable 2019 paper demonstrated that similar job ads reached very different audiences based on creative choices and platform optimization. Journalists and academics replicated these patterns: construction jobs mainly went to men; cashier roles to women; some credit and employment ads favored men, despite higher female engagement on the platform. We should not overlook these disparities. We should address them by setting measurable limits, exposing system behavior to independent review, and testing alternative scenarios, just as the housing settlement now requires. This is more honest and effective than labeling the process as segregation.

Education deserves special attention. The 2024 audit found that ads for for-profit colleges reached a larger share of Black users than public university ads, aligning with longstanding enrollment differences: about 25% Black enrollment in the for-profit sector versus roughly 14% in public colleges, based on data from the College Scorecard used by the researchers. This history helps explain the observed biases but does not justify them. The appropriate response is to require education ad delivery to meet clear fairness standards, perhaps a variance limit similar to housing, and to publish compliance metrics. This respects that optimization is probabilistic and commercial while demanding equal access to information about public opportunities.

A policy path that protects opportunity without stifling practical math

A better set of rules should look like this. First, prohibit inputs that reveal intent. Platforms and advertisers shouldn't include protected traits or similar indicators in ad delivery for education, employment, housing, or credit. Second, establish outcome limits and audit them. Require regular reports showing that delivery for education ads remains within an agreed range across protected classes, with an independent reviewer authorized to test, challenge, and demand corrections. This is already what the housing settlement does, and it has specific targets and deadlines. Third, require advertiser-facing tools that indicate when a campaign fails fairness checks and automatically adjust bids or ad rotation to bring delivery back within the limits. None of these steps requires labeling algorithmic targeting as segregation. All of them help reduce harmful biases.
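
The second and third rules can be sketched as a small audit report: compute a delivery gap for each campaign, flag the campaigns that exceed the limit, and report the share that comply. The `Campaign` type, the gap measure (half the sum of absolute share differences), the group labels, and the counts are all hypothetical choices for illustration. The sketch only flags campaigns. It does not attempt the bid or rotation adjustments, which depend on platform internals.

```python
from dataclasses import dataclass


@dataclass
class Campaign:
    campaign_id: str
    eligible: dict[str, int]   # eligible audience counts by group
    delivered: dict[str, int]  # actual viewer counts by group


def share_gap(eligible: dict[str, int], delivered: dict[str, int]) -> float:
    """Assumed gap metric: half the sum of absolute share differences."""
    e_total = sum(eligible.values())
    d_total = sum(delivered.values())
    if e_total <= 0 or d_total <= 0:
        raise ValueError("both audiences must be non-empty")
    groups = set(eligible) | set(delivered)
    return 0.5 * sum(
        abs(eligible.get(g, 0) / e_total - delivered.get(g, 0) / d_total)
        for g in groups
    )


def audit(campaigns: list[Campaign], cap: float = 0.10):
    """Return (ids of campaigns over the cap, share of campaigns within it)."""
    if not campaigns:
        raise ValueError("nothing to audit")
    flagged = [
        c.campaign_id for c in campaigns
        if share_gap(c.eligible, c.delivered) > cap
    ]
    compliance_rate = 1 - len(flagged) / len(campaigns)
    return flagged, compliance_rate


# Made-up campaigns with placeholder group labels.
campaigns = [
    Campaign("open-day", {"A": 500, "B": 500}, {"A": 52, "B": 48}),
    Campaign("scholarship-fall", {"A": 500, "B": 500}, {"A": 80, "B": 20}),
    Campaign("aid-info", {"A": 600, "B": 400}, {"A": 55, "B": 45}),
]

flagged, compliance_rate = audit(campaigns)
print(f"flagged: {flagged}, compliant share: {compliance_rate:.0%}")
```

A report like this gives an independent reviewer two things to examine: which campaigns need correction, and whether the share of compliant campaigns meets whatever target regulators set.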

The state and local landscape is moving towards a compliance-focused model. New York City’s Local Law 144 mandates annual bias audits for automated employment decisions and public reporting. Several state attorneys general have started using existing consumer protection and civil rights laws to monitor AI outcomes in hiring and other areas. These measures do not prohibit optimization; they demand evidence that the system operates fairly. National policymakers can adapt this framework for education ads: documented audits, standardized variance metrics, and safe harbors for systems that meet the standards. This approach avoids the extremes of “anything goes” and “everything is segregation,” aligning enforcement with what courts are willing to oversee: performance, not metaphors.

What educators and administrators should require now

Education leaders can take action without waiting for final court rulings. When purchasing ads, insist on delivery reports that show audience composition and on tools that promote fairness-aware bidding. Request independent audit summaries in RFPs, not just audience estimates. If platforms do not provide variance metrics, allocate more funding to those that do. Encourage paired-ad testing, a low-cost method used by research teams to uncover biases while controlling for confounding factors. The goal isn't to litigate intent; it's to ensure that students from all backgrounds see the same opportunities. This is a practical approach, not a philosophical one. It enables us to turn a heated label into a standard that improves access where it matters: public colleges, scholarships, apprenticeships, and financial aid.

Policymakers can assist by clarifying safe harbors. A platform that clearly excludes protected traits, releases a technical paper detailing its fairness controls, and meets a defined variance threshold for education ads should receive safe-harbor protection and a defined period to rectify any issues flagged in audits. In contrast, a platform that remains opaque or uses protected traits or obvious proxies should face penalties, including damages and injunctions. This distinction acknowledges a crucial ethical point: optimization driven by data can be lawful when it respects clear limits and transparency, and it becomes unlawful when it bypasses those constraints. The DOJ’s housing settlement demonstrates how to create rules that engineers can implement and courts can enforce.

The 10% figure is not a minor detail. It serves as a guide to regulating algorithmic targeting without turning every disparity into a moral judgment. Labeling algorithmic targeting as segregation obscures the critical distinction between intent and impact. It also hampers the tools that help schools reach the right students and help students find the right schools. We do not need metaphors from a bygone era. We need measurable requirements, public audits, and independent checks that ensure fair delivery while allowing optimization to function within strict limits. If courts and agencies insist on this approach, platforms will adapt, research teams will continue testing, and students, especially those who historically have had fewer opportunities, will receive better information. Avoid sweeping labels. Maintain the rules on outcomes. Let the math work for everyone, with transparency.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Ali, M., Sapiezynski, P., Bogen, M., Korolova, A., Mislove, A., & Rieke, A. (2019). How Facebook’s ad delivery can lead to biased outcomes. Proceedings of CSCW.

Brody, D. (2025, November 13). Equal Rights Center v. Meta is the most important tech case flying under the radar. Brookings Institution.

Imana, B., Korolova, A., & Heidemann, J. (2024). Auditing for racial discrimination in the delivery of education ads. ACM FAccT ’24.

Northeastern University/Upturn. (2019). How Facebook’s Ad Delivery Can Lead to Biased Outcomes (replication materials).

Reuters. (2025, October 24). Stepping into the AI void in employment: Why state AI rules now matter more than federal policy.

U.S. Department of Justice. (2023/2025 update). Justice Department and Meta Platforms Inc. reach key agreement to address discriminatory delivery of housing advertisements (press release; compliance targets and VRS).

Washington Lawyers’ Committee & Equal Rights Center. (2025, February 11). Equal Rights Center v. Meta (Complaint, D.C. Superior Court).
