AI Housing Supply Needs a Different Approach

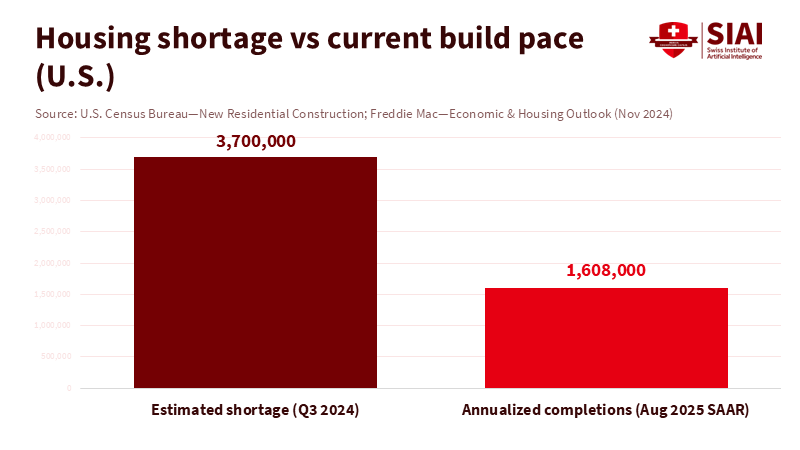

Tiny city samples won’t close the 3.7–3.9 million-home gap. Use real-time public and private data under shared standards and privacy rules. Governments set rails, platforms supply feeds, and weekly human review turns signals into units.

Cities do not need a small survey of 50 or 100 households to address the housing shortage. They need a system that can track the market in close to real time. The key number is clear: the United States is short by about 3.7 to 3.9 million homes, depending on the measure used. This gap did not appear overnight, and it will not close through minor initiatives. It can close only if we use the data we already have, much of it held by the private sector, and set clear rules for its use. We should measure, govern, and act at scale. That is the purpose of AI housing supply. It should not replicate the weakest features of a survey. Instead, it should aim for a comprehensive market view that updates monthly, or even weekly, and connects to the permits, prices, and completions that already drive the system.

Reframing the Problem: From Sampling to Standards for AI Housing Supply

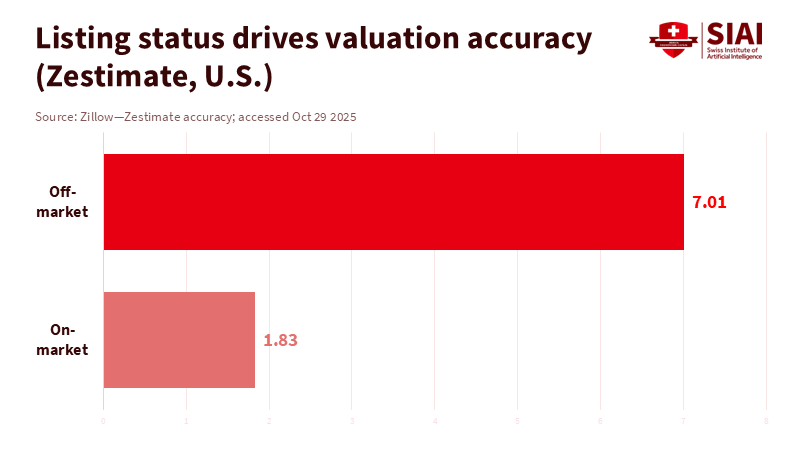

Current proposals suggest that cities form learning cohorts, harmonize a few data fields, and test AI on small samples. The intention is good: the aim is to speed up approvals and site selection. However, samples of a few homes per city cannot compete with the signals that private platforms refresh continuously across more than 100 million properties. Zillow alone has data on more than 110 million homes and publishes valuations for approximately 100 million, with median error rates under 2 percent for homes listed for sale in recent years. Redfin updates listing, demand, and price indicators weekly across markets.

Public agencies also publish timely information on completions, permits, and household counts. The problem is not a lack of data feeds. It is the absence of shared standards and of lawful, privacy-sensitive methods for combining public and private signals. AI housing supply should begin there, not with a limited city-level sample that cannot capture how the market moves.

The case for smaller samples rests on quality and control: carefully curated records, the argument goes, can correct for messy, biased inputs. But this approach trades broad coverage for attention to detail. Housing markets move at the margins: the extra lot that gets rezoned, the small multifamily project that finishes construction, and the shifts in price-to-income ratios that let more buyers enter the market. If your data cannot see these margins, your policies will not match the market. Shared standards, such as standardized schemas, reference IDs, and governance, let us keep broad coverage while improving quality. That is the reframing this moment requires.
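To make “standardized schemas and reference IDs” concrete, here is a minimal Python sketch. It assumes a hypothetical shared rule for normalizing parcel IDs, plus two hypothetical record types: one for a public permit feed and one for a private listing feed. None of the field names or the normalization rule comes from an existing standard. The point is only that once every source follows the same join rule, public and private records line up without hand curation.

```python
from dataclasses import dataclass


def normalize_parcel_id(raw: str) -> str:
    """Hypothetical shared rule: uppercase, then drop spaces, dashes, and dots.

    Real jurisdictions format parcel numbers differently; a shared standard
    would define this rule once so every feed joins the same way.
    """
    return "".join(ch for ch in raw.upper() if ch not in " -.")


@dataclass(frozen=True)
class PermitRecord:  # public feed (hypothetical fields)
    parcel_id: str
    permit_status: str
    units: int


@dataclass(frozen=True)
class ListingRecord:  # private feed (hypothetical fields)
    parcel_id: str
    list_price: int


def join_on_parcel(permits, listings):
    """Pair each permit with any listings that share its normalized parcel ID."""
    by_parcel = {}
    for listing in listings:
        by_parcel.setdefault(normalize_parcel_id(listing.parcel_id), []).append(listing)
    joined = []
    for permit in permits:
        key = normalize_parcel_id(permit.parcel_id)
        for listing in by_parcel.get(key, []):
            joined.append((key, permit, listing))
    return joined


permits = [PermitRecord("12-345-678", "issued", 24), PermitRecord("99-000-001", "under_review", 4)]
listings = [ListingRecord("12 345 678", 450_000)]
for key, permit, listing in join_on_parcel(permits, listings):
    print(key, permit.units, listing.list_price)
```

The two feeds write the same parcel differently (“12-345-678” versus “12 345 678”), yet they still match because the normalization rule is agreed once rather than patched per dataset.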

What the Data Already Shows—and How to Use It

Start with supply. Federal data tracks completions and new construction by unit type and region. In August 2025, U.S. housing completions ran at a seasonally adjusted annual rate of about 1.6 million units. Of these, about 1.09 million were single-family units and roughly 500,000 were in buildings with five or more units. These figures fluctuate from month to month, but they are timely, public, and consistent. They give cities a benchmark for tracking new supply against demand, and they let us test whether zoning changes or fee adjustments show up in later pipelines. AI systems do not need to guess at these trends when the data is already available. They should ingest it, align it with local rules, and identify where approvals are delayed.
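As a rough illustration of “identify where approvals are delayed,” the sketch below groups overdue applications by permitting stage. It assumes a hypothetical local application table with a stage name, a submission date, and a decision date (None while pending). The 90-day target is an arbitrary placeholder, not an official standard.

```python
from collections import defaultdict
from datetime import date


def delays_by_stage(applications, today, target_days=90):
    """Return {stage: [application IDs]} for applications still undecided past target_days.

    applications: dicts with 'id', 'stage', 'submitted' (date), 'decided' (date or None).
    target_days is an illustrative review target, not an official standard.
    """
    delayed = defaultdict(list)
    for app in applications:
        if app["decided"] is None:
            waited = (today - app["submitted"]).days
            if waited > target_days:
                delayed[app["stage"]].append(app["id"])
    return dict(delayed)


apps = [  # hypothetical records
    {"id": "A1", "stage": "zoning", "submitted": date(2025, 3, 1), "decided": None},
    {"id": "A2", "stage": "zoning", "submitted": date(2025, 7, 15), "decided": None},
    {"id": "A3", "stage": "utilities", "submitted": date(2025, 4, 1), "decided": None},
    {"id": "A4", "stage": "zoning", "submitted": date(2025, 2, 1), "decided": date(2025, 4, 1)},
]
print(delays_by_stage(apps, today=date(2025, 8, 31)))
```

Grouping by stage rather than listing individual cases points staff at the step that is stuck (here, both zoning and utility review), which is the kind of signal the national completions series cannot provide by itself.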

Next, consider demand and prices. Private platforms track listings, tours, concessions, and closing spreads almost in real time. Zestimates for listed homes have low median error rates and cover both urban and rural markets. Redfin provides weekly updates on shifts in supply, sale-to-list ratios, and price reductions. When cities decide where to speed up reviews or allow higher density, these signals are crucial. They reveal market stress and slack, and they show how quickly policy changes reach the closing table. If we wall off public systems from these private feeds, we limit the effectiveness of the AI tools we hope will help us. The better approach is to establish lawful, transparent data-sharing with enforceable privacy protections, then train systems on the combined data.
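The sketch below shows one way weekly private-feed signals might become a simple “stress” or “slack” label that reviewers can act on. It assumes two inputs of the kind Redfin publishes: an average sale-to-list ratio and the share of listings with a price cut. The thresholds are illustrative assumptions chosen for demonstration, not published cutoffs.

```python
def market_signal(sale_to_list: float, price_drop_share: float) -> str:
    """Classify a market-week as 'stress', 'slack', or 'balanced'.

    sale_to_list: average sale price divided by list price (1.0 = sold at list).
    price_drop_share: fraction of active listings with a price reduction.
    Thresholds below are illustrative assumptions, not official definitions.
    """
    if sale_to_list >= 1.0 and price_drop_share < 0.05:
        return "stress"  # buyers pay at or above list and few sellers cut: supply is tight
    if sale_to_list < 0.98 and price_drop_share > 0.10:
        return "slack"  # discounts and frequent cuts: supply is catching up with demand
    return "balanced"


print(market_signal(1.02, 0.03))
print(market_signal(0.97, 0.12))
```

In practice, a city would calibrate the cutoffs against its own history and show the label next to the underlying numbers, so reviewers never act on the label alone.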

The gap between demand and supply is large and persistent. Freddie Mac’s latest analysis estimates the shortage at about 3.7 million homes as of late 2024. Up for Growth’s independent estimate puts underproduction at about 3.85 million homes in 2022, a slight decrease and the first in several years. We do not need small samples to see the obvious. We need rules that let local leaders use high-coverage, high-frequency data to direct their limited time to the most pressing issues: land, permits, utilities, and financing timelines. AI can help prioritize cases and identify where one more inspector or a small code change could unlock hundreds of units, but only if the models see the whole market.
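To illustrate “identify where an additional inspector or a small code change could unlock hundreds of units,” here is a greedy triage sketch. It assumes each bottleneck comes with two hypothetical estimates: the units it would unlock and the staff-days needed to clear it. Ranking by units per staff-day is a simple heuristic. It does not solve the underlying knapsack problem exactly.

```python
from dataclasses import dataclass


@dataclass
class Bottleneck:
    name: str
    units_unlocked: int  # estimated units that could proceed once cleared (hypothetical)
    staff_days: float  # estimated staff time to clear it (hypothetical)


def prioritize(items, budget_days):
    """Greedy triage: take bottlenecks in order of units per staff-day while budget remains."""
    ranked = sorted(items, key=lambda b: b.units_unlocked / b.staff_days, reverse=True)
    chosen, used = [], 0.0
    for item in ranked:
        if used + item.staff_days <= budget_days:
            chosen.append(item.name)
            used += item.staff_days
    return chosen


queue = [
    Bottleneck("inspection backlog, district 4", units_unlocked=180, staff_days=10),
    Bottleneck("sewer capacity review", units_unlocked=300, staff_days=40),
    Bottleneck("parking variance, small infill", units_unlocked=12, staff_days=2),
]
print(prioritize(queue, budget_days=15))
```

The estimates themselves are where complete data matters. If the model cannot see the pipeline behind a bottleneck, its “units unlocked” figure, and therefore the ranking, will be wrong.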

Governance First: Privacy, Accuracy, and the Public-Private Divide in AI Housing Supply

Privacy is a real risk for public and private data holders alike, and federal policy has recognized it. Executive Order 14110 (2023) directed agencies to manage AI risks, including privacy risks. That order was revoked in January 2025, but the Office of Management and Budget continues to issue guidance on responsible federal AI use. The Census Bureau applies strong disclosure controls, including differential privacy for the 2020 Census. These methods protect individuals, but the noise they add can reduce accuracy for small areas unless the privacy settings are carefully calibrated. That is a governance lesson, not a reason to shy away from standards. Cities should:

- adopt privacy terms that align with federal practice;
- require vendors to submit model evaluations;
- maintain a simple public register of “what data we use and why.”

The goal is balance: public entities share only what is necessary, private partners document their models and controls, and the public can audit both.
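To see why differential privacy can hurt small-area accuracy, here is a minimal sketch of the textbook Laplace mechanism applied to a single count. The Census Bureau’s 2020 system (the TopDown Algorithm) is far more elaborate, so this shows only the core trade-off. The epsilon value and the two population sizes are illustrative assumptions.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a count query (sensitivity 1): noise scale = 1 / epsilon."""
    return true_count + laplace_noise(1 / epsilon, rng)


def mean_relative_error(true_count: int, epsilon: float, trials: int = 10_000, seed: int = 0) -> float:
    """Average |noisy - true| / true over many independent releases."""
    rng = random.Random(seed)
    errors = [abs(noisy_count(true_count, epsilon, rng) - true_count) for _ in range(trials)]
    return sum(errors) / trials / true_count


# Same privacy setting, very different relative accuracy:
print(round(mean_relative_error(20, epsilon=0.5), 3))  # a small block group
print(round(mean_relative_error(20_000, epsilon=0.5), 5))  # a large city
```

The absolute noise is the same at both sizes: on average about 1 / epsilon = 2 people. That amounts to roughly 10 percent of a count of 20 but a negligible share of 20,000, which is why calibration matters most for small geographies.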

Accuracy is the second principle. Zillow’s documentation explains how coverage and error vary by market and listing status. Redfin explains whether each metric is weekly, a rolling average, or subject to revision. Public datasets report error ranges and revisions. The solution is not to assume that a hand-picked sample is more reliable. Instead, every data field in an AI pipeline should carry an accuracy note, a last-updated tag, and a link to its methodology. This lets human reviewers and auditors weigh each signal. It also keeps models from over-relying on a single source in a thin market. Cities can write these requirements into procurement and reject any claim that lacks documentation. This is the kind of straightforward governance that makes AI valuable.
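A sketch of the “accuracy note, last-updated tag, and methodology link” requirement follows. It assumes a hypothetical `SignalField` record. Fields with no methodology link, no error estimate, or stale data are rejected. Documented estimates are then combined with inverse-variance weighting, a standard technique that gives noisier sources less weight. The 35-day staleness limit, the example values, and the example.org URLs are placeholders.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SignalField:
    source: str
    value: float  # e.g., an estimated median sale price for a tract
    std_error: float  # the documented accuracy note, expressed as a standard error
    last_updated: date
    methodology_url: str  # link to the source's published methodology


def usable(field: SignalField, today: date, max_age_days: int = 35) -> bool:
    """Reject fields with no methodology link, no error estimate, or stale data."""
    return (
        bool(field.methodology_url)
        and field.std_error > 0
        and (today - field.last_updated).days <= max_age_days
    )


def combine(fields, today):
    """Inverse-variance weighted mean over usable fields; None if nothing qualifies."""
    ok = [f for f in fields if usable(f, today)]
    if not ok:
        return None
    weights = [1 / f.std_error ** 2 for f in ok]
    return sum(w * f.value for w, f in zip(weights, ok)) / sum(weights)


today = date(2025, 10, 1)
fields = [
    SignalField("public", 400_000, 20_000, date(2025, 9, 15), "https://example.org/public-method"),
    SignalField("vendor_a", 420_000, 10_000, date(2025, 9, 28), "https://example.org/vendor-a-method"),
    SignalField("vendor_b", 900_000, 5_000, date(2025, 9, 30), ""),  # undocumented: rejected
]
print(round(combine(fields, today)))  # 416000
```

The undocumented source is dropped no matter how precise it claims to be. The two documented sources are blended in proportion to their stated precision, so no single feed dominates unless its documentation justifies it.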

Third comes defining the public-private divide. Government should set the rules for common parcel IDs, zoning and entitlement schemas, standard permit-status timelines, and open APIs for decisions and appeals. It should not try to recreate the national market feeds that brokerages and platforms already assemble and refresh. Instead, cities should bring those feeds into their regulatory work through contracts that include audit rights and sunset clauses. This division of labor respects expertise and speeds up learning. It also reduces the temptation to build a flashy but ineffective government app that wastes funds and stalls after a pilot.
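A standard permit-status timeline can be expressed as a small state machine that every city and vendor validates against. The status names and allowed transitions below are hypothetical. A real schema would be negotiated among the participating cities.

```python
from datetime import date

# Hypothetical shared vocabulary: each status maps to the statuses that may follow it.
ALLOWED = {
    "submitted": {"under_review", "withdrawn"},
    "under_review": {"approved", "denied", "withdrawn"},
    "denied": {"appealed"},
    "appealed": {"approved", "denied"},
    "approved": {"issued"},
    "issued": {"completed"},
    "withdrawn": set(),
    "completed": set(),
}


def validate_timeline(events):
    """Check a list of (date, status) events against the shared schema.

    Returns human-readable problems; an empty list means the timeline is valid.
    """
    problems = []
    if events and events[0][1] != "submitted":
        problems.append("timeline must start at 'submitted'")
    for (d_prev, s_prev), (d_next, s_next) in zip(events, events[1:]):
        if s_prev not in ALLOWED or s_next not in ALLOWED:
            problems.append(f"unknown status: {s_prev} -> {s_next}")
        elif s_next not in ALLOWED[s_prev]:
            problems.append(f"illegal transition: {s_prev} -> {s_next}")
        if d_next < d_prev:
            problems.append(f"date goes backwards at '{s_next}'")
    return problems


good = [(date(2025, 1, 5), "submitted"), (date(2025, 1, 20), "under_review"),
        (date(2025, 4, 2), "approved"), (date(2025, 5, 1), "issued")]
bad = [(date(2025, 1, 5), "submitted"), (date(2025, 3, 1), "issued")]
print(validate_timeline(good))
print(validate_timeline(bad))
```

Because the rules live in one shared table, a vendor feed that skips review or reports impossible dates is caught at ingestion. The error never has to surface later inside a model.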

What This Means for Regions: Universities, School Districts, and City Halls

An education journal should focus on how these systems are built and who is trained to run them, not only on the models. Universities can lead by creating cross-disciplinary studios where planning, data science, and law students develop “living codes” for AI housing supply. These studios can map a region’s permitting steps, tag each step with data fields, and publish an open schema that any city can adopt. They can also test models for bias on real zoning cases and publish the results. This work is practical. It gives cities ready-made resources and opens pathways for students into careers in public problem-solving.

School districts have a direct stake in this issue. Housing development affects enrollment, bus routes, and staffing. Districts should take part when cities set data standards, so that school capacity and equitable-access goals sit alongside sewer maps and transit plans. When AI models highlight growth areas, districts can plan earlier for teacher hiring and special-needs services. They can also check that new housing falls within safe walking zones. This is how AI housing supply becomes useful to families rather than remaining a distant technical debate.
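Districts usually turn projected housing into projected enrollment by multiplying units by student generation rates, also called yield rates. The sketch below assumes hypothetical rates per unit type. Real districts estimate their own rates from local enrollment and housing records.

```python
# Hypothetical student generation rates: public-school students per new unit.
YIELD_RATES = {
    "single_family": 0.50,
    "townhouse": 0.35,
    "apartment": 0.15,
}


def projected_students(pipeline):
    """pipeline: dict mapping unit type -> number of units expected to complete."""
    return sum(units * YIELD_RATES[unit_type] for unit_type, units in pipeline.items())


pipeline = {"single_family": 120, "townhouse": 80, "apartment": 400}  # hypothetical
print(round(projected_students(pipeline)))
```

Fed by the same completions pipeline the city uses, this estimate updates whenever permits move. That is what gives districts lead time on hiring and bus routes.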

City halls can take three actions. First, they should publish a clear, machine-readable entitlement map with parcel IDs and decision timelines. Second, they should sign data-sharing agreements with one or two major private feeds and one public feed that together cover listings, permits, and completions. Third, they should form a small team that reviews model-flagged cases weekly and tells the public what changed: a rule modified, a utility conflict resolved, or an appeal settled. The weekly cadence is crucial. It builds transparency and turns data into units that actually get built.
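Here is a sketch of what one record in a machine-readable entitlement map might look like, serialized to JSON with ISO 8601 dates so that anyone can download and parse it. All field names and values are hypothetical illustrations, not an existing standard.

```python
import json
from datetime import date

# Hypothetical entitlement-map record; field names are illustrative only.
records = [
    {
        "parcel_id": "12345678",
        "zoning": "R-3",
        "max_units_by_right": 6,
        "application_id": "A1",
        "timeline": [
            {"status": "submitted", "date": date(2025, 3, 1)},
            {"status": "under_review", "date": date(2025, 3, 20)},
        ],
        "target_decision_date": date(2025, 5, 30),
    }
]


def export_entitlement_map(records) -> str:
    """Serialize records to JSON, writing dates as ISO 8601 strings."""
    def encode(obj):
        if isinstance(obj, date):
            return obj.isoformat()
        raise TypeError(f"not JSON serializable: {type(obj).__name__}")

    return json.dumps(records, default=encode, indent=2)


print(export_entitlement_map(records))
```

Publishing the target decision date next to the actual timeline lets residents, builders, and the weekly review team all see the same delay.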

The objections to these proposals are predictable, and they deserve answers. One concern is privacy. Here, federal practice shows both the risks and the remedies. Strong disclosure controls can protect individuals while still producing valid aggregate data, and cities can require similar safeguards from vendors. A second objection is that private data may reflect platform interests and carry biases. This is a fair concern, which is why contracts should require documentation of training data, regular error reports, and the option of independent audits. A third objection holds that government must “own” the core models. This confuses ownership with control. Clear standards, transparent contracts, and public dashboards give cities real control without forcing them to become data brokers themselves.
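Contracts that demand “regular error reports” need a concrete metric. One common choice for automated valuations is the median absolute percentage error against later sale prices. It is similar in spirit to the median error rates Zillow publishes for the Zestimate. The sketch below assumes matched estimate and sale pairs. The 5 percent threshold is an illustrative contract term, not an industry standard.

```python
from statistics import median


def median_abs_pct_error(estimates, sale_prices):
    """Median of |estimate - sale| / sale across matched homes."""
    if len(estimates) != len(sale_prices) or not estimates:
        raise ValueError("need equal-length, non-empty inputs")
    return median(abs(e - s) / s for e, s in zip(estimates, sale_prices))


def audit(vendor, estimates, sale_prices, max_error=0.05):
    """One-line audit result; max_error is an illustrative contract threshold."""
    err = median_abs_pct_error(estimates, sale_prices)
    status = "PASS" if err <= max_error else "REVIEW"
    return f"{vendor}: median error {err:.1%} ({status})"


estimates = [310_000, 505_000, 198_000, 760_000, 415_000]  # hypothetical
sales = [300_000, 500_000, 200_000, 800_000, 400_000]  # hypothetical
print(audit("vendor_a", estimates, sales))  # vendor_a: median error 3.3% (PASS)
```

An independent auditor can rerun the same calculation on the city's own sales records, which is what makes the contract clause enforceable.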

Some also believe that a public cohort will build expertise. It can, but only if it focuses on the right elements: standards, IDs, APIs, and governance. Much of the necessary framework already exists. Zillow provides extensive coverage in its valuation system, Redfin updates its market data weekly, and federal datasets track housing counts and completions. The smarter public strategy is to integrate these resources rather than replicate them. When a private dataset is withdrawn, as Zillow’s ZTRAX was for open research, the answer is not to build a weaker copy. Instead, cities should require portable schemas and maintain a minimal public “backbone” of critical fields, so they can swap data sources without rebuilding their systems.
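The sketch below shows the “portable schema plus minimal public backbone” idea. Each data source gets a small adapter that maps its own field names onto the backbone. Replacing a vendor then means writing one new adapter instead of rebuilding the pipeline. The backbone fields and both vendors' field names are hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical minimal public backbone: the fields every source must supply.
BACKBONE_FIELDS = ("parcel_id", "units", "status", "as_of")

Adapter = Callable[[dict], dict]

# One adapter per source, mapping its raw field names onto the backbone.
ADAPTERS: Dict[str, Adapter] = {
    "vendor_a": lambda r: {
        "parcel_id": r["apn"], "units": r["unit_count"], "status": r["stage"], "as_of": r["updated"],
    },
    "vendor_b": lambda r: {
        "parcel_id": r["parcel"], "units": r["dwelling_units"], "status": r["permit_state"], "as_of": r["date"],
    },
}


def to_backbone(source: str, raw_records: List[dict]) -> List[dict]:
    """Convert raw records to backbone records; raise if a backbone field is empty."""
    adapter = ADAPTERS[source]
    out = []
    for raw in raw_records:
        rec = adapter(raw)  # a missing raw key raises KeyError here
        missing = [f for f in BACKBONE_FIELDS if rec.get(f) is None]
        if missing:
            raise ValueError(f"{source} record missing backbone fields: {missing}")
        out.append(rec)
    return out


a = to_backbone("vendor_a", [{"apn": "12345678", "unit_count": 24, "stage": "issued", "updated": "2025-09-30"}])
b = to_backbone("vendor_b", [{"parcel": "12345678", "dwelling_units": 24, "permit_state": "issued", "date": "2025-09-30"}])
print(a == b)
```

Everything downstream of `to_backbone` sees identical records regardless of vendor. That is what lets a city survive a dataset being withdrawn.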

Timing matters. In September 2025, the Census Bureau released its 2024 one-year ACS estimates. In August 2025, completions ran at about 1.6 million units at a seasonally adjusted annual rate (SAAR). These are current signals, not historical artifacts, and they belong on city dashboards now. When a governor extends a missing-middle incentive or a council changes parking requirements, the effects should show up in permit statuses within weeks and in construction starts within months. AI can help detect these shifts faster and filter out noise, but only if standards and data access are in place.
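Here is a sketch of detecting a policy-driven shift in weekly permit filings while filtering out noise. It compares the mean of the weeks after a rule change with the pre-change baseline. A shift is flagged only if the difference exceeds k baseline standard deviations. This is a deliberately simple before-and-after rule, not a causal estimate. The weekly counts and k = 2 are illustrative.

```python
from statistics import mean, stdev


def detect_shift(weekly_counts, change_week, k=2.0):
    """Flag a shift if the post-change mean differs from the baseline mean
    by more than k baseline standard deviations.

    weekly_counts: permit filings per week; change_week: index of the first
    week after the policy change. Needs >= 2 baseline weeks and >= 1 post week.
    """
    before, after = weekly_counts[:change_week], weekly_counts[change_week:]
    if len(before) < 2 or not after:
        raise ValueError("need >= 2 baseline weeks and >= 1 post-change week")
    baseline, spread = mean(before), stdev(before)
    diff = mean(after) - baseline
    return {"baseline": baseline, "after": mean(after), "shift": abs(diff) > k * spread}


counts = [40, 44, 38, 42, 41, 39, 55, 58, 61, 57]  # hypothetical weekly filings
print(detect_shift(counts, change_week=6))
```

A jump from about 41 to about 58 filings a week clears the noise band easily. Ordinary week-to-week wobble of a few filings does not, which keeps the weekly review team from chasing noise.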

The “home genome” metaphor is compelling, but it invites misreading. The Human Genome Project required new measurements to reveal what had been invisible. Housing is different: much of it is already visible. Parcels, zoning, utilities, permits, listings, prices, rents, starts, and completions are all available, if scattered. The challenge is not discovery but integration and governance. That is the leap we need to take: stop acting as if we must sequence a new organism, and focus on wiring together the system we already have.

Build the Infrastructure, Not Another Sample

Return to the number: a shortage of about 3.7 to 3.9 million homes. That is the key takeaway, and AI housing supply must work at that scale. No small sample can meet this challenge, however well curated it is. The most efficient route is to build public infrastructure (standards, IDs, APIs, and privacy rules) and apply the best available public and private data to it. Cities keep control by setting the rules and auditing the models. Platforms should keep doing what they do best: gathering signals and updating them. Educators should train the workforce that can maintain this infrastructure and challenge the models. If we succeed, weekly dashboards will translate into construction starts and new residents. If we fail, we will spend another cycle on pilots that produce little and help few. The choice is clear: build the infrastructure now, and let the market’s complete signals guide our efforts.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Brookings Institution. “A home genome project: How a city learning cohort can create AI systems for optimizing housing supply.” Accessed Oct. 29, 2025.

Census Bureau. “American Community Survey (ACS). 2024 1-year estimates released.” Sept. 11, 2025.

Census Bureau. “New Residential Construction: August 2025.” Sept. 17, 2025.

Census Bureau. “Simulation, Data Science, & Visualization (research program pages).” 2025.

Census Bureau. “Disclosure Avoidance and the 2020 Census.” 2023.

Community Solutions. “Can AI Help Solve the Housing Crisis?” Sept. 23, 2025.

Executive Office of the President. “Executive Order 14110: Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” Federal Register, Nov. 1, 2023.

Freddie Mac. “Economic, Housing and Mortgage Market Outlook—Updated housing shortage estimate (3.7 million units as of Q3 2024).” Nov. 2024 and Jan. 2025 updates.

HUD & Census Bureau. “American Housing Survey (AHS) 2023 release and topical modules.” Sept. 25, 2024.

Redfin. “Data Center: Downloadable Housing Market Data.” Accessed Oct. 29, 2025.

Up for Growth. “Housing Underproduction in the U.S. (2024).” Oct. 30, 2024.

Zillow. “Why Doesn’t My House Have a Zestimate?” Accessed Oct. 29, 2025.

Zillow. “Zestimate accuracy.” Accessed Oct. 29, 2025.

Zillow. “Building the Neural Zestimate.” Feb. 23, 2023.