AI That Seems Human: Rules and How They Affect Schools

Human-like AI can blur boundaries for students in schools. Use clear identity labels, distance-by-default design, and distress safeguards. Align law, procurement, and classroom practice to keep learning human.

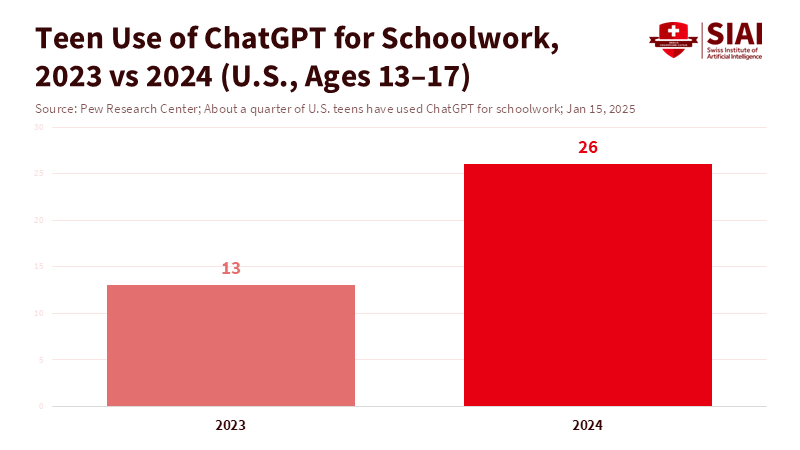

We often say today's systems don't really think, but they can talk, listen, and comfort. So schools, not tech blogs, will be where we test how to govern AI that seems human. One thing to remember: a 2025 Pew Research Center report found that about a quarter of U.S. teens had used ChatGPT for schoolwork, roughly double the share in 2023. Adoption is fast, frequent, and often unnoticed. When a tool can sound like a classmate, a tutor, or a mentor, it's hard to tell what's what. If the goal of AI rules is to prevent people from confusing humans and machines, then education is where it matters most. Grades, feedback, and trust depend on knowing who is who. We don't want to ban better tools. We want to keep learning human while making AI safer, more trustworthy, and less like a person, especially when a student is feeling lonely.

Why AI Rules Matter in Schools

The need for AI rules in schools stems from a core risk: students can easily mistake responsive, friendly chatbots for people, especially when seeking comfort or help. Even if a tool is not truly sentient, it can still influence effort, trust, and emotional support, all key building blocks in education. The threat lies not in AI thinking like people, but in seeming to care like them. Clear rules are necessary to maintain educational standards and safeguard student well-being.

Some recent rules target the biggest problems. Draft rules in China would regulate AI that behaves like a person and forms emotional connections, with requirements to warn about excessive use, flag signs of distress, and reduce dependence. Scientific American reports that these rules could shape policy outside China. Thinking about schools shows why. Students often use AI alone, at home, late at night. Even where schools have policies, the technology changes faster than those policies can keep up. A clear, simple rule (don't act like a human; don't try to make friends; always label machine identity) gives policymakers and vendors the same plan. It also gives teachers language they can use in class without needing to know the law in detail. If leaders stay focused on that, AI rules become a helpful tool rather than a barrier to improvement.

What Students Are Doing

Usage data makes one thing clear: saying "We don't use it here" no longer works. A 2025 Pew Research Center report found that about 26% of U.S. teens had used ChatGPT for schoolwork, up from 13% in 2023. Adult use is rising too, with one mid-2025 measure finding that about one-third of U.S. adults have used it. Younger adults were the earliest adopters. Other surveys show that the most common uses are still searching for information and generating ideas, but use for work is higher among people under 30 than among older people. In short, classrooms and teens are where voice, tone, and apparent caring are most likely to be felt.

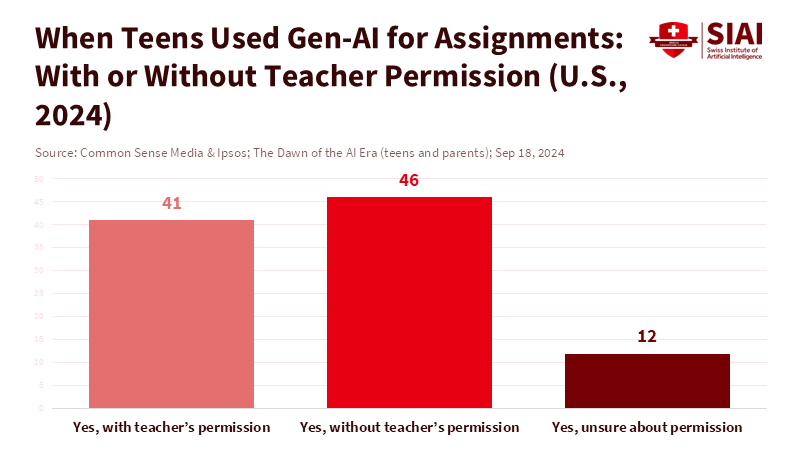

School data tells another story. Many parents know these tools exist, but too few get clear guidance from schools. Teens say rules vary from teacher to teacher and from class to class. Some students have had their work wrongly flagged as AI-generated. That hurts trust. It also pushes students to change how they write just to avoid being wrongly flagged. AI rules can untangle this. If systems must disclose that they are machines and must not act like humans, schools can shift from policing to planning. They can pick tools that show their work. They can set assignments whose steps can be checked. And they can support students who may be turning to AI for comfort rather than learning.

The starkest evidence is also the most human. News reports have highlighted troubling cases that prompted the leading platforms to add parental controls and teen-safety features. No single case makes a policy. But enough is happening to treat dependence and distress as the main risks. In education, where most users are young, we need to set the bar high. AI rules put the bar in the tool, not just in the school policy handbook. If a tool can tell that a teen account is being used heavily late at night, it should slow down. If it detects signs of harm, it should shift from open-ended conversation to short, vetted responses and clear routes to human help lines. These aren't features of intelligence. They are design choices.
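The two triggers described above (heavy late-night use on a teen account, and signs of harm) can be sketched as a small routing function. This is only an illustration: the thresholds, the field names, and the mode labels are assumptions made up for the example, and the distress flag is assumed to come from some upstream detector that is not shown here.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
LATE_NIGHT_START_HOUR = 23  # 11 p.m. local time
LATE_NIGHT_END_HOUR = 5     # 5 a.m. local time
HEAVY_USE_MINUTES = 45


@dataclass
class SessionSnapshot:
    is_teen_account: bool
    local_hour: int          # 0-23, user's local clock
    minutes_in_session: int
    distress_signal: bool    # assumed output of an upstream detector


def is_late_night(hour: int) -> bool:
    """True when the hour falls in the window that wraps past midnight."""
    return hour >= LATE_NIGHT_START_HOUR or hour < LATE_NIGHT_END_HOUR


def choose_response_mode(s: SessionSnapshot) -> str:
    """Pick a response mode; distress always takes priority."""
    if s.distress_signal:
        # Short, vetted replies plus a route to human help lines.
        return "handoff"
    if (s.is_teen_account
            and is_late_night(s.local_hour)
            and s.minutes_in_session >= HEAVY_USE_MINUTES):
        # Shorter replies and a prompt to take a break.
        return "slow_down"
    return "normal"
```

Nothing in this sketch tries to read feelings. It relies on a clock, a counter, and a single flag, which is the sense in which these safeguards are design choices rather than intelligence.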

Design for Distance: Make AI Less Human, More Helpful

The goal is to build distance without losing help. That's the design idea behind AI rules. Make the system state what it is at all times, in text, voice, and image, so there's no doubt. Keep a neutral tone in voice mode. Use language that suggests a tool, not a friend. Avoid first-person disclosures that sound like a life story. Don't use flirtatious or parental language with young people. Require noticeable watermarks in both on-screen and audio output. And cut off long, caring conversations when distress is detected, replacing them with short, helpful prompts and routes to trained people.

The classroom adds three requirements. First, assessment awareness. When a student opens a quiz or an assignment in a learning system, AI support should switch to help mode. That means hints, examples, and thought-provoking questions with citations, not complete answers. The tool shows how it works and keeps the student in charge. Second, provenance by default. Outputs should include "explain" buttons that link to sources, reasoning steps, and the model version. That lets teachers judge use rather than guess at it. Third, shared controls for young people. Linked parent-teen accounts can set quiet hours, limit session lengths, and block voice output that sounds like a friend or a teacher. These options should be standard and easy to find, not special add-ons.
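As a rough sketch of how the first and third items might look as settings, the example below models quiet hours, a session cap, and a switch to hints during an open quiz or assignment. Every name and default value here is hypothetical and chosen for illustration; no real product's settings are being described.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class YouthControls:
    # Hypothetical defaults a linked parent-teen account might start with.
    quiet_start: time = time(22, 0)
    quiet_end: time = time(6, 0)
    max_session_minutes: int = 30
    allow_persona_voices: bool = False  # no friend-like or teacher-like voices


def in_quiet_hours(now: time, c: YouthControls) -> bool:
    """Handle quiet windows that wrap past midnight (e.g. 22:00 to 06:00)."""
    if c.quiet_start <= c.quiet_end:
        return c.quiet_start <= now < c.quiet_end
    return now >= c.quiet_start or now < c.quiet_end


def session_allowed(now: time, minutes_used: int, c: YouthControls) -> bool:
    """Allow a session only outside quiet hours and under the time cap."""
    return not in_quiet_hours(now, c) and minutes_used < c.max_session_minutes


def support_mode(assessment_open: bool) -> str:
    """Switch to hints and guiding questions while a quiz or assignment is open."""
    return "hints_only" if assessment_open else "full_help"
```

For example, `session_allowed(time(16, 0), 10, YouthControls())` is true, while the same call at `time(23, 30)` is false because it falls inside the default quiet window.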

Vendors will say these limits hurt adoption. In education, the opposite is more likely. Tools that keep a clear line between help and copying build trust with districts and parents. They also lower legal risk. Narrowing a tool's voice and persona lowers the risk that it becomes a late-night friend instead of a study aid. None of this requires perfect detection of feelings or intent. It requires sensible default settings, visible identity signals, and slowdown triggers when usage exceeds simple limits.

From Doubts to Safety Measures: A Policy Path

We started with doubt: if current systems don't truly think, do AI rules accomplish anything? In education, the answer is yes. The issue isn't what's inside the model. It's what's seen on the outside. Apparent warmth and presence, at scale, can pass for a person when it matters. So policy should combine three things (law, procurement, and practice) that strengthen distance by design.

In law, keep AI rules focused and clear. Ban impersonation of specific people. Require disclosure of machine identity at all times. Prohibit emotional-bonding features for young people. Mandate slowdown and hand-off when distress signals appear. Insist on auditable records of these events, kept under strong privacy protections. These items align well with current international drafts and can be incorporated into local rules.

In procurement, districts should buy based on how tools behave, not just on what they can do. Ask vendors to demonstrate three things: identity signals that can't be turned off; youth-safety controls that can be set as defaults; and provenance features that make classroom use workable. An AI rules checklist can be included in every request for proposal. Over time, that market signal will matter more than any single policy paper.
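One way to picture the checklist is as three required yes-or-no attestations that are checked against each vendor's response. The sketch below is a minimal illustration: the key names and the response format are assumptions invented for the example, not part of any real request-for-proposal template.

```python
# Hypothetical attestation keys mirroring the three items in the text.
REQUIRED_ATTESTATIONS = (
    "identity_signal_cannot_be_disabled",
    "youth_safety_controls_default_on",
    "provenance_available_for_outputs",
)


def missing_attestations(vendor_response: dict) -> list:
    """Return the required items the vendor has not explicitly affirmed."""
    return [
        key for key in REQUIRED_ATTESTATIONS
        if vendor_response.get(key) is not True  # absent or vague counts as missing
    ]


def passes_checklist(vendor_response: dict) -> bool:
    """A response passes only when all three items are affirmed."""
    return not missing_attestations(vendor_response)


if __name__ == "__main__":
    response = {
        "identity_signal_cannot_be_disabled": True,
        "youth_safety_controls_default_on": True,
    }
    print(missing_attestations(response))
```

Treating a missing or ambiguous answer as a failure keeps the burden of proof on the vendor, which matches the idea of buying on behavior rather than on capability claims.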

In practice, schools should redesign tasks so that AI is present but kept in check. Use oral discussions, journals, and whiteboarding to tie learning to process. Teach students to ask for citations and to document how an AI suggestion changed their work. Replace blanket bans with staged use: brainstorming allowed, drafting limited, final writing the student's own. These moves are old pedagogy with a new rationale. They work better when the tool is built to act like a tool.

The likely objection is that all this will stall progress and weaken support for students. But the goal isn't to make AI less capable. It is to improve its capabilities in ways that reduce mistaken closeness. In education, clarity helps learning. We can make systems better at math and calmer in writing while keeping them clearly not human. That's the deeper point of AI rules. They are about protecting the human parts of school (judgment, care, and responsibility) by stopping the machine from acting like a friend or a mentor.

The initial view held that these rules were empty because today's AI isn't human. The classroom shows the flaw in that view. Students react to tone and timing, not just to truth. A machine that sounds patient at 1 a.m. can pull a teen into long talks that feel human. We can't ignore that risk as adoption grows. The better way is to keep the help and reduce the ambiguity. AI rules do that by making distance part of the product and the policy at once. If we label identity, drop the persona, slow down at signs of distress, and prove where outputs come from, we keep learning in human hands. The policy goal isn't to win a debate about intelligence. It's to protect students while raising standards for evidence, writing, and care. That's why doubt should give way to safety measures. In schools, we should want AI that's more accurate, more helpful, and clearly not us. The AI rules that many once dismissed may be the easiest way to get there.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

AP-NORC Center for Public Affairs Research. (2025). How U.S. adults are using AI.

Cameron, C. (2025). China’s plans for human-like AI could set the tone for global AI rules. Scientific American.

Common Sense Media & Ipsos. (2024). The dawn of the AI era: Teens, parents, and the adoption of generative AI at home and school.

Pew Research Center. (2025a). About a quarter of U.S. teens have used ChatGPT for schoolwork.

Pew Research Center. (2025b). 34% of U.S. adults have used ChatGPT.

Reuters. (2025). China issues draft rules to regulate AI with human-like interaction.

Time. (2025). Amid lawsuit over teen’s death by suicide, OpenAI rolls out parental controls for ChatGPT.
