From Model Risk to Market Design: Why AI Financial Stability Needs Systemic Guardrails

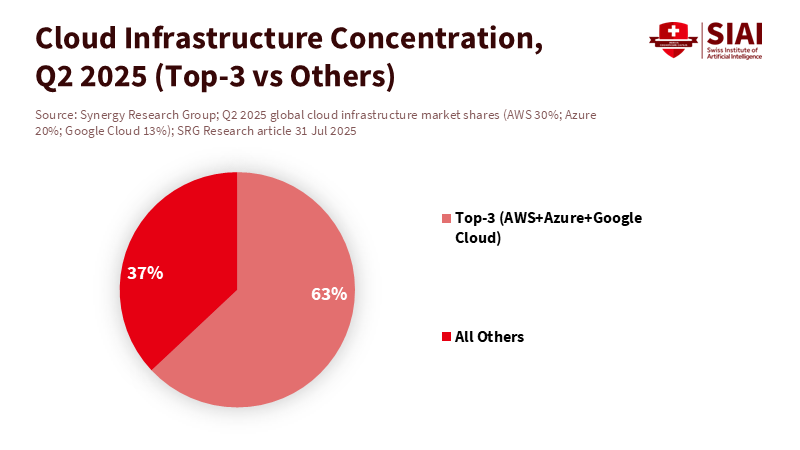

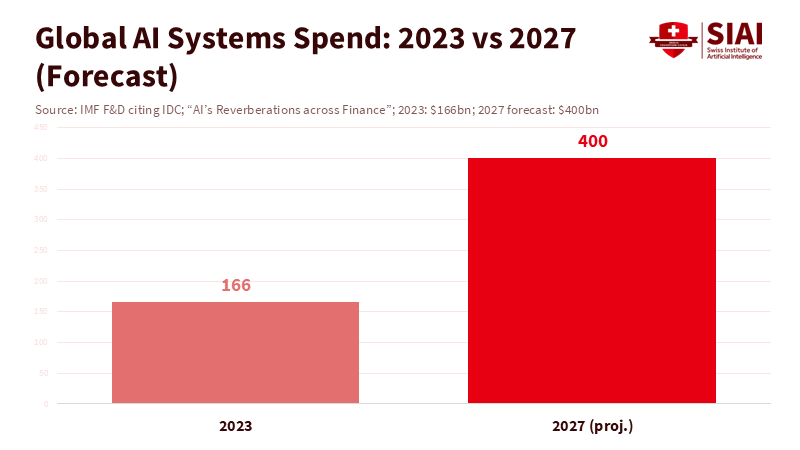

AI adoption is concentrated on a few cloud and model providers, creating systemic fragility.
Correlated behavior and shared updates can amplify shocks across markets.
Regulators should stress-test correlation, mandate redundant rails, and map dependencies to safeguard AI financial stability.

Three vendors now underpin much of the infrastructure on which finance’s machine learning systems run. By the second quarter of 2025, AWS, Microsoft Azure, and Google Cloud together held about two-thirds of the global cloud infrastructure market. Banks, brokers, insurers, and market utilities increasingly run AI on the same infrastructure, with identical toolchains and models often trained on similar data. This is not just about operational ease; it is a market design choice with broader implications. When systems learn from the same patterns and operate on shared frameworks, errors and feedback loops can escalate quickly. Central banks are starting to take notice. The Bank of England has highlighted the need to stress-test AI. The Financial Stability Board points to a lack of visibility into usage and new vulnerabilities. The BIS urges supervisors to balance rapid adoption with strong governance. The challenge for policymakers is straightforward: can we move from fixing models individually to implementing system-level safeguards that support AI financial stability before the next crisis hits?

AI Financial Stability: Reframing the Risk Map

The old perspective views “AI risk” as a series of technical glitches: bias in scorecards, chatbots that misinterpret data, or models that stray from their intended use. The new perspective takes a broader view. If the financial system shifts price discovery, risk transfer, and customer flows onto uniform, centralized AI pipelines, the nature of rare events changes as well. System outages, update failures, or parameter changes at one provider can affect several firms at once. Similar models can respond to the same signals in the same way, leading to herding. The IMF warns that this can amplify price changes and push leverage and margining frameworks beyond the levels anticipated by older standards. In essence, AI financial stability is not just about better models. It is about shared infrastructures, correlated behaviors, and the speed of collective responses, intended or unintended.

This new perspective is essential now because AI adoption is widespread. Supervisors report significant AI use in credit, surveillance, fraud detection, and trading. ESMA has informed EU firms that boards are accountable for AI-driven decisions, even when tools are sourced from third parties. The FSB’s 2024 assessment highlights monitoring gaps and vendor concentration as structural issues. Meanwhile, the BIS outlines how authorities are adopting AI for policy tasks. A system that runs on AI while being governed by AI offers opportunities for better coordination but also poses risks of correlated failures when inputs are unreliable or stressed. This is a question of stability, not just compliance.

AI Financial Stability: Evidence on Pressure Points (2023–2025)

First, consider concentration. By mid-2025, the top three cloud providers controlled about two-thirds of the global infrastructure market. This figure is not specific to finance, but it indicates systemic exposure, because many regulated firms are moving their analytics and data operations to these providers. Both the FSB and the Bank of England flag third-party and vendor risks in AI, and IMF discussions treat herding and concentration as key concerns. Taken together, the takeaway is straightforward: upstream concentration and downstream uniformity increase risk during stress events. Method note: We use the share of global infrastructure as a proxy for potential concentration in financial AI hosting, following FSB guidance on proxy indicators when direct metrics are unavailable.
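The proxy described in the method note can be made concrete with two standard concentration measures: the combined share of the top three providers and the Herfindahl-Hirschman Index (HHI). The sketch below is illustrative only. The individual shares are hypothetical, chosen so that the top three sum to roughly two-thirds, and the assumption that the remainder is split evenly among 17 small providers is ours, not a reported figure.

```python
def top_n_share(shares_pct, n=3):
    """Combined market share (in percent) of the n largest providers."""
    return sum(sorted(shares_pct.values(), reverse=True)[:n])


def hhi(shares_pct):
    """Herfindahl-Hirschman Index: sum of squared percentage shares (0 to 10,000)."""
    return sum(share ** 2 for share in shares_pct.values())


# Hypothetical shares in percent; NOT reported data.
illustrative_shares = {"provider_a": 30, "provider_b": 22, "provider_c": 14}
# Assumption: the remaining 34% is split evenly among 17 small providers.
illustrative_shares.update({f"small_{i}": 2 for i in range(17)})

if __name__ == "__main__":
    print("Top-3 share (%):", top_n_share(illustrative_shares))
    print("HHI:", hhi(illustrative_shares))
```

A supervisor could compute the same two numbers on firm-reported hosting data for critical functions, once such data exist, and compare them with the market-wide proxy.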

Second, focus on speed and amplification. The IMF has warned that AI can accelerate and amplify price movements. Bank of England officials have suggested including AI in stress tests, as widespread use in risk and trading could increase volatility during stressful conditions. The FSB adds that limited data on AI adoption hampers oversight. These concerns are not just theoretical; they relate to familiar situations: one-sided order flow from similarly trained agents, sudden deleveraging when risk limits are hit simultaneously, and operational correlations when many firms patch to the same model update. Method note: these insights come from official speeches and reports from 2024–2025 and align with established surveillance tools. They don’t assume unnoticed failures; they interpret current policy signals as early warnings that need to be incorporated into macroprudential design.
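The amplification argument above can be illustrated with a textbook variance identity. If n firms each generate order flow with the same standard deviation and every pair of firms has the same correlation rho, the variance of the summed flow is n * sigma^2 * (1 + (n - 1) * rho). The sketch below is a stylized calculation under those equal-variance, equal-correlation assumptions. It is not a model of any actual market, and the function name and parameter values are hypothetical.

```python
import math


def aggregate_flow_std(n_firms, per_firm_std, pairwise_corr):
    """Std dev of total order flow across n_firms.

    Assumes every firm has the same std dev and every pair of firms
    shares the same correlation (a deliberate simplification).
    """
    if n_firms < 1:
        raise ValueError("n_firms must be at least 1")
    if not 0.0 <= pairwise_corr <= 1.0:
        raise ValueError("pairwise_corr must lie in [0, 1] for this sketch")
    variance = n_firms * per_firm_std ** 2 * (1 + (n_firms - 1) * pairwise_corr)
    return math.sqrt(variance)


if __name__ == "__main__":
    # Hypothetical setting: 100 firms, unit std dev each.
    for rho in (0.0, 0.25, 0.5, 1.0):
        print(f"rho={rho:.2f}  aggregate std={aggregate_flow_std(100, 1.0, rho):.1f}")
```

With independent firms the aggregate standard deviation grows with the square root of the number of firms; with perfectly aligned firms it grows linearly. Even moderate correlation moves the system most of the way toward the second case, which is why correlation, not only firm-level soundness, belongs in the stress test.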

AI Financial Stability: What to Do Now—Design, Not Band-Aids

The first policy shift should move supervision from model risk to market structure. Today’s guidelines focus on validation, documentation, and local explainability. Those are important, but macroprudential policy must also address three design questions: How many independent compute infrastructures support key market functions? How varied are the training data and objectives across major dealers and funds? Can critical services function properly during a failure of a provider or model? The answers will guide the use of known tools: sector-wide scenario analysis that includes correlated AI shocks, system-level concentration limits where feasible, and redundancy requirements for essential infrastructure. The Bank of England’s interest in stress testing AI is a start. The goal is to scale this into a shared, international standard that aligns with FSB monitoring. That would make AI financial stability part of a cohesive macroprudential program rather than a collection of firm-level checks.

The second move is to close the data gap without over-collecting proprietary information. Authorities should establish a minimum observatory for AI use: hosting locations ranked by importance, model categories tied to critical functions, change-management schedules, and dependency maps for key datasets and vendors. The FSB has suggested monitoring indicators; these could serve as a standard regulatory return. ESMA has clarified board accountability under MiFID. Following that logic, firms should be able to confirm their AI dependency maps just as they do for cyber and cloud risks. The BIS’s work cataloging supervisory AI can help measure improvements in regulatory technology and identify where shared models might create supervisory blind spots. We don’t need every parameter; we need a clear map, and closing the data gap is a crucial step toward achieving it.
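A dependency map of the kind described above can be very simple. The sketch below is a minimal illustration, assuming a map from each critical function to the set of providers it can run on. All function and provider names are hypothetical, and a real regulatory return would carry more fields (datasets, model categories, change schedules).

```python
from collections import defaultdict

# Hypothetical dependency map: critical function -> providers it can run on.
dependency_map = {
    "payments_fraud_screening": {"cloud_a"},
    "margin_calculation": {"cloud_a", "cloud_b"},
    "trade_surveillance": {"cloud_b"},
    "credit_scoring": {"cloud_a", "on_prem"},
}


def single_points_of_failure(dep_map):
    """Map each provider to the functions that depend on it alone."""
    exposed = defaultdict(list)
    for function, providers in dep_map.items():
        if len(providers) == 1:
            (only_provider,) = providers
            exposed[only_provider].append(function)
    return {provider: sorted(funcs) for provider, funcs in exposed.items()}


def functions_down_if(dep_map, failed_provider):
    """Functions with no remaining provider once failed_provider is unavailable."""
    return sorted(
        function
        for function, providers in dep_map.items()
        if not (providers - {failed_provider})
    )


if __name__ == "__main__":
    print(single_points_of_failure(dependency_map))
    print(functions_down_if(dependency_map, "cloud_a"))
```

Aggregated across firms, the same structure would let an authority ask which provider outage takes down the largest number of critical functions, without collecting any model parameters.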

AI Financial Stability: Anticipating the Pushback

One critique suggests that the benefits outweigh the risks. AI is already reducing fraud, speeding up compliance, and enhancing service. Surveys indicate that banks expect significant profit increases in the coming years. While that’s true and positive, benefits don’t eliminate tail risk; they change it. Fraud detection systems that rely on shared models can create single points of failure in payments. Faster client onboarding across the sector can synchronize risk appetites near the peak. In trading, even a slight alignment of objectives can lead to larger price movements more quickly. As Yellen pointed out, scenario analysis must account for opacity and vendor concentration as critical aspects. The goal of a stability regime is not to hinder productivity; it is to protect it.

Another critique argues that existing regulations already cover this area: model risk, outsourcing, and cyber concerns. To some extent, that’s correct. But fragmentation is the issue. Outsourcing rules do not require industry-level redundancy for AI compute used in critical market functions. Model risk regulations do not confront herding among multiple firms, even if each model is sound individually. Cyber frameworks focus on malicious threats, not benign failures that follow a shared update. Policy can adapt quickly. ESMA’s 2024 statement assigns ultimate accountability to the board. The Bank of England is advocating for stress tests. The FSB has defined indicators for adoption. We should integrate these into a macroprudential standard that addresses current market dynamics—one in which AI financial stability hinges on effective correlation management.

The statistic that opened this essay is not just an interesting fact. It forms the core of today’s risk landscape. When a few providers host most of the industry’s AI, when many firms adjust similar models using overlapping data, and when policy itself operates on machine systems, fragility becomes a systemic issue. We do not need to fear AI to address this; we need to view AI financial stability as an essential design task. The steps are straightforward: stress-test correlations, not just capital; require redundant systems where concentration exists; map dependencies as a standard return; and ensure board accountability aligns with industry outcomes. Benefits will expand, not contract, when the market trusts these systems. The next crisis will not wait for perfect data. It will test whether our safeguards match the structure we have chosen. If we act now, we can secure AI’s advantages and mitigate its risks. If we delay, the next surge in speed and herding may catch us off guard even more quickly.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Bank of England. (2025, April 9). Financial Stability in Focus: Artificial intelligence in the financial system.

Breeden, S. (2024). Banks’ use of AI could be included in stress tests [Interview/coverage]. Financial Times.

BIS. (2025, October 8). Artificial intelligence and central banks: monetary and financial stability.

BIS FSI. (2025, June 26). Financial stability implications of artificial intelligence — Executive summary.

ESMA. (2024, May 30). EU watchdog says banks must take full responsibility when using AI [Guidance coverage]. Reuters.

FSB. (2024, November 14). The financial stability implications of artificial intelligence (Report and PDF).

FSB. (2025, October 10). Monitoring adoption of AI and related vulnerabilities (Indicators paper).

IMF. (2024, September 6). Artificial intelligence and its impact on financial markets and financial stability (Remarks).

IMF. (2024, October). GFSR, Chapter 3: Advances in AI: Implications for capital markets (Outreach findings on concentration risk).

Statista. (2025, August 21). Worldwide market share of leading cloud infrastructure providers, Q2 2025.