AI Labor Cost Is the New Productivity Shock in Education

AI labor cost has collapsed, making routine knowledge work cost pennies. Schools should meter tokens, track accepted outputs, and redirect savings to student time. Contract for pass-through price drops and keep human judgment tasks off-limits.

The price of machine work has dropped faster than most education leaders realize. In 2024, many firms paid around $10 per million tokens to automate text tasks with AI. By March 2025, typical rates had fallen to about $2.50, a 75% decrease. On some major platforms, prices are now as low as $0.10 per million input tokens and $0.40 per million output tokens. At those rates, routine writing, summarizing, and coding tasks cost a few cents or less each, even at scale. This is not about impressive demonstrations; it is about costs. When a basic input to white-collar work becomes this cheap, it acts like a sudden wage cut for specific tasks across the economy. We call this sudden, steep fall in the cost of machine labor the “AI labor cost shock.” For education systems that spend heavily on knowledge work (curriculum development, administrative services, IT support, and student services), the budget feels the shock first, well before teaching methods catch up.
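To make the arithmetic concrete, here is a minimal Python sketch of the per-task cost at the low-tier rates quoted above. The token counts for the example task (2,000 in, 500 out) are hypothetical, and the function names are illustrative only.

```python
# Sketch: marginal cost of one text task at per-million-token prices.
# Prices are the low-tier rates quoted in the text; the task size is hypothetical.

def task_cost(input_tokens: int, output_tokens: int,
              price_in_per_m: float, price_out_per_m: float) -> float:
    """Return the cost of one task in dollars."""
    return (input_tokens * price_in_per_m + output_tokens * price_out_per_m) / 1_000_000


def percent_drop(old_price: float, new_price: float) -> float:
    """Percentage decrease from old_price to new_price."""
    return (old_price - new_price) / old_price * 100


# A hypothetical summarizing task: 2,000 tokens in, 500 tokens out.
per_task = task_cost(2_000, 500, price_in_per_m=0.10, price_out_per_m=0.40)
print(f"per task: ${per_task:.4f}")                                # per task: $0.0004
print(f"10,000 tasks: ${per_task * 10_000:.2f}")                   # 10,000 tasks: $4.00
print(f"drop from $10 to $2.50: {percent_drop(10.0, 2.50):.0f}%")  # 75%
```

Cost scales linearly with token counts, so a task with ten times the prompt and output would still cost well under a cent at these rates.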

AI labor cost is the productivity shock we’re overlooking

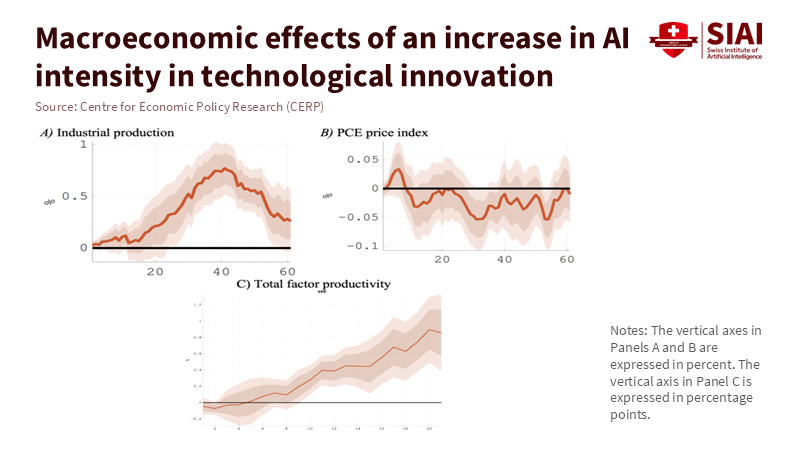

Macroeconomists have found that AI innovation acts like a supply-side push: over several years it raises output and lowers prices as total factor productivity improves. This macro view matters for schools, colleges, and education vendors because it makes the link explicit: productivity gains come not only from better tools but also from cheaper task hours. We call the direct route, in which AI cuts the cost of routine text-based tasks such as drafting policies, answering tickets, cleaning data, writing job postings, or generating preliminary code, the AI labor cost channel. Studies of these tools in real work settings show the channel at work. In customer support, a generative AI assistant raised the number of issues resolved per hour by about 15% on average, with gains above 30% for less-experienced staff. In controlled writing assignments, time spent fell by about 40% while quality improved. These findings are not isolated cases; they indicate that the effective labor cost of specific, clearly defined tasks is already falling.
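The study figures translate into labor cost per task with a little arithmetic. The sketch below assumes labor cost per task is simply the hourly wage divided by tasks completed per hour; the wage and baseline throughput are hypothetical. Note the asymmetry: a 15% rise in throughput cuts cost per task by about 13% (1 - 1/1.15), while a 40% cut in time per task cuts cost by the full 40%.

```python
def cost_per_task(hourly_wage: float, tasks_per_hour: float) -> float:
    """Labor cost of one task, assuming cost = wage x time per task."""
    return hourly_wage / tasks_per_hour


def cost_change_from_throughput(gain: float) -> float:
    """Fractional change in cost per task when tasks per hour rise by `gain`."""
    return 1 / (1 + gain) - 1


def cost_change_from_time_saving(saving: float) -> float:
    """Fractional change in cost per task when time per task falls by `saving`."""
    return -saving


wage = 30.0          # hypothetical hourly wage
baseline_rate = 2.0  # hypothetical tickets resolved per hour
before = cost_per_task(wage, baseline_rate)
after = cost_per_task(wage, baseline_rate * 1.15)  # +15% issues resolved per hour
print(f"support: {before:.2f} -> {after:.2f} per ticket "
      f"({cost_change_from_throughput(0.15):+.1%})")
print(f"writing: {cost_change_from_time_saving(0.40):+.0%} per document")
```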

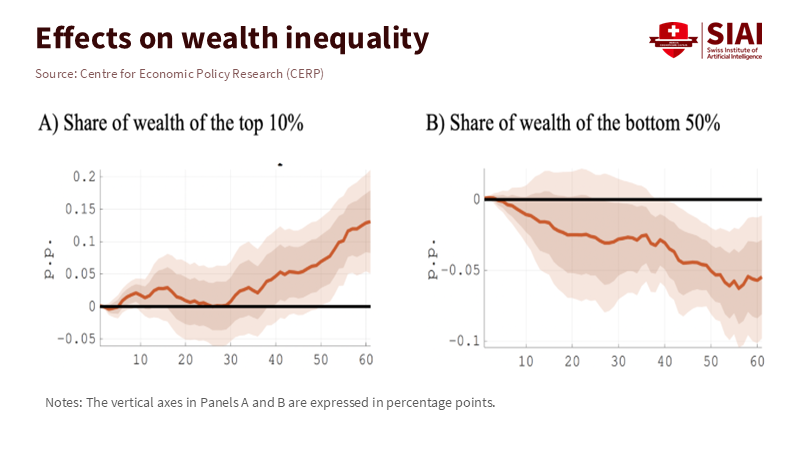

A cost lens also informs the equity question of who captures the gains. Labor is the main input to knowledge production. In R&D-intensive sectors, more than two-thirds of expenses go to labor compensation, and in the broader U.S. nonfarm business sector, labor’s share of income stayed close to its long-run average through mid-2025. If AI sharply lowers the cost of our most common tasks (editing, synthesizing, answering questions, coding), the baseline expectation is that early adopters will see margins widen, followed by price pressure as competitors catch up. Educational institutions are both buyers and producers: they purchase services, and they create curricula, assessments, and student support at scale. Attention to costs therefore decides whether AI expands quality and access or whether the savings dissipate in across-the-board discounts.
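A rough, first-order way to size the margin effect is to multiply three shares. The sketch below is a back-of-the-envelope model under stated assumptions: only the two-thirds labor share comes from the text; the 30% automatable share and the 40% task-level cost cut are hypothetical inputs, and the model ignores any second-order effects.

```python
def unit_cost_reduction(labor_share: float,
                        automatable_share: float,
                        task_cost_cut: float) -> float:
    """Fraction by which total unit cost falls, holding everything else fixed.

    labor_share: labor's share of total cost (0-1)
    automatable_share: share of labor hours spent on tasks AI can take over (0-1)
    task_cost_cut: fractional cost reduction on those tasks (0-1)
    """
    return labor_share * automatable_share * task_cost_cut


cut = unit_cost_reduction(labor_share=2 / 3, automatable_share=0.30, task_cost_cut=0.40)
print(f"unit cost falls by {cut:.1%}")  # unit cost falls by 8.0%
```

If prices stay put, that 8% shows up as margin for early adopters until competitors match it, which is the sequence the paragraph above describes.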

AI labor cost in classrooms, back offices, and budgets

The first benefits appear where outputs can be standardized and reviewed: student support chats, financial aid Q&A, IT knowledge-base updates, syllabus templates, grant boilerplate, and first-draft code for data dashboards. Here the AI labor cost story is simple: pay per token, track usage, and measure cost per accepted output. Public pricing makes budgeting manageable. One major vendor currently lists $0.15 per million input tokens in a low-cost tier; another offers $0.10 per million input tokens in an even cheaper tier. Prompt libraries and caching can push marginal costs lower still. A practical rule: track three metrics for each use case, namely tokens per accepted output, the acceptance rate after human review, and staff time saved against the baseline. Policy should shift from “hours budgeted” to “accepted outputs per euro,” leaving people to handle exceptions and judgment calls.
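The three metrics, plus cost per accepted output, can be computed from a simple usage log. This is a minimal sketch: the `UseCaseLog` record, its field names, the example counts, and the $0.60 output price are all hypothetical, and every price is per million tokens in the budget's own currency.

```python
from dataclasses import dataclass


@dataclass
class UseCaseLog:
    """One month of usage for one use case (hypothetical fields)."""
    outputs_generated: int
    outputs_accepted: int            # passed human review
    input_tokens: int                # all calls, including rejected drafts
    output_tokens: int
    baseline_minutes: float          # pre-AI staff minutes per output
    assisted_minutes: float          # staff minutes per accepted output with AI,
                                     # including review and rework


def use_case_metrics(log: UseCaseLog, price_in: float, price_out: float) -> dict:
    """Compute per-use-case metrics; prices are per million tokens."""
    spend = (log.input_tokens * price_in + log.output_tokens * price_out) / 1_000_000
    total_tokens = log.input_tokens + log.output_tokens
    return {
        "tokens_per_accepted_output": total_tokens / log.outputs_accepted,
        "acceptance_rate": log.outputs_accepted / log.outputs_generated,
        "minutes_saved_per_accepted_output": log.baseline_minutes - log.assisted_minutes,
        "cost_per_accepted_output": spend / log.outputs_accepted,
        "accepted_outputs_per_currency_unit": log.outputs_accepted / spend,
    }


log = UseCaseLog(outputs_generated=1_000, outputs_accepted=800,
                 input_tokens=3_000_000, output_tokens=600_000,
                 baseline_minutes=20.0, assisted_minutes=6.0)
for name, value in use_case_metrics(log, price_in=0.15, price_out=0.60).items():
    print(f"{name}: {value:,.4f}")
```

Counting rejected drafts in the token totals is a deliberate choice: it charges the cost of failed attempts to the outputs that were actually accepted.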

Not every human hour can be replaced, however. A 2025 study from Carnegie Mellon highlights the limits of substituting language models for people in qualitative research. When researchers tried using models as study participants, the responses were vague, lacked context, and raised concerns about consent. In software engineering, research has likewise found that models can approximate human evaluators on specific coding tasks, but only in tightly controlled settings with clear criteria. The lesson for education is direct: cheap AI labor can take over routine, well-defined tasks that fit templates, but it should not stand in for student voices, personal experience, or ethical inquiry. Procurement policies should draw explicit boundaries that keep judgment-based tasks in human hands.

Budgets should also plan for price swings. A price war is already under way: one major competitor cut off-peak API rates by up to 75% in 2025, and established providers have introduced cheaper “flash” or “mini” tiers with larger context windows. Yet costs do not only fall. As workflows become more automated, usage can climb sharply, and heavy users can exceed flat-rate plans. For universities piloting automated coding tutors or bulk document processing, two controls matter: account-level usage caps and a predefined fallback for workflows once a cap is reached. Treat AI labor cost as a market rate that can rise or fall with features and demand, not as a permanent discount. That keeps both the budget and operations under control.
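One way to implement an account-level cap with a predefined fallback is sketched below. The `TokenBudget` class, the 80% alert threshold, and the `"run"`/`"fallback"` labels are hypothetical policy choices, not features of any vendor API; what the fallback does (queue the job or route it to staff) is left to the caller.

```python
class TokenBudget:
    """Account-level monthly token cap with a one-time early alert (hypothetical policy)."""

    def __init__(self, monthly_cap: int, alert_fraction: float = 0.8):
        self.monthly_cap = monthly_cap
        self.alert_at = int(monthly_cap * alert_fraction)
        self.used = 0
        self.alert_raised = False

    def request(self, tokens: int) -> str:
        """Reserve tokens for one call and return the action to take.

        "run": within budget, call the model.
        "fallback": the call would breach the cap; queue it or route it to staff.
        """
        if self.used + tokens > self.monthly_cap:
            return "fallback"
        self.used += tokens
        if self.used >= self.alert_at and not self.alert_raised:
            self.alert_raised = True  # e.g. notify the budget owner once per month
        return "run"

    def start_new_month(self) -> None:
        self.used = 0
        self.alert_raised = False


budget = TokenBudget(monthly_cap=10_000)
print([budget.request(3_000) for _ in range(4)])  # ['run', 'run', 'run', 'fallback']
print(budget.used, budget.alert_raised)            # 9000 True
```

Refusing the call before spending, rather than after, means the cap is never exceeded; the alert gives budget owners time to act before calls start falling back.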

AI labor cost, prices, and what’s next

If education vendors see their margins widen, will service prices fall? Macro evidence suggests that AI innovation lowers consumer prices over time as productivity gains spread. The timing, however, depends on market structure. In competitive segments such as content localization, transcription, and large-scale assessment, price cuts are likely to come sooner. In concentrated markets, savings may go into product development before they reach buyers. For public systems, the better approach is to write AI labor cost metrics into contracts: prices per accepted item, permitted model types, cache hit ratios, and clauses that pass through decreases in token prices. This turns unpredictable technology shifts into manageable economic terms.
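A token-price pass-through clause can be stated as a small formula. The sketch assumes the contract fixes a price per accepted item, records what share of that price is attributable to model tokens, and names a reference token price. The function name, the 10% trigger, and the 50% pass-through share are hypothetical negotiating terms, not a standard contract template.

```python
def adjusted_item_price(contract_item_price: float,
                        token_cost_share: float,
                        reference_token_price: float,
                        current_token_price: float,
                        trigger: float = 0.10,
                        pass_through: float = 0.5) -> float:
    """Return the price per accepted item after applying the clause.

    token_cost_share: fraction of the item price attributable to model tokens.
    The clause fires only when the public token price has fallen by at least
    `trigger` relative to the reference price written into the contract.
    """
    drop = (reference_token_price - current_token_price) / reference_token_price
    if drop < trigger:
        return contract_item_price  # small dips and any price rises change nothing
    saving = contract_item_price * token_cost_share * drop
    return contract_item_price - pass_through * saving


print(adjusted_item_price(2.00, 0.25, 2.50, 1.25))  # public price halves
print(adjusted_item_price(2.00, 0.25, 2.50, 2.40))  # 4% dip, below trigger
```

With a 2.00 item price, a 25% token share, and public token prices halving from 2.50 to 1.25, the adjusted price is 1.875; a 4% dip leaves the price unchanged. The clause only ratchets downward, matching the aim of capturing price declines.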

Finally, consider the workforce. The largest productivity gains so far have gone to less-experienced workers who adopt AI tools, consistent with a catch-up story. That argues for training aimed at the first two years on the job: prompt patterns, review checklists, and judgment exercises that bring tool output up to institutional standards. Exposure risks, however, are uneven. Analyses from the OECD and the ILO indicate that lower-education jobs and administrative roles, which are disproportionately held by women, face a higher risk of automation. Responsible adoption means redeploying staff rather than discarding them: keeping human-centered work where empathy, discretion, and context matter, and funding those roles with savings from tasks that AI can automate.

Toward a practical cost strategy

The shift in perspective is clear: stop asking whether AI is “good for education” in general and start asking where AI labor cost can improve access and quality for every euro spent. Begin with three immediate actions. First, redesign workflows so that models handle routine tasks while people provide oversight, using the evidence from writing and customer support as a benchmark. If a pilot does not show double-digit time savings or quality improvements on review, adjust the workflow or end the pilot. Build dashboards that track accepted outputs per 1,000 tokens and staff time saved per unit after human review. Always compare these numbers with a fixed pre-AI baseline so the targets do not drift.
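The go/no-go rule for pilots can be written down so that every pilot is judged the same way. This sketch assumes that time and quality are each measured per unit of output against a fixed pre-AI baseline, and it reads “double-digit” as a gain of at least 10%; the quality score, the class names, and all field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    """Fixed pre-AI measurements; frozen so the target cannot drift."""
    minutes_per_unit: float
    quality_score: float          # e.g. mean reviewer rating on a fixed rubric


@dataclass
class PilotResult:
    minutes_per_unit: float       # staff time including human review
    quality_score: float
    accepted_outputs: int
    tokens_used: int


def accepted_per_1k_tokens(p: PilotResult) -> float:
    return p.accepted_outputs / (p.tokens_used / 1_000)


def pilot_decision(base: Baseline, p: PilotResult, threshold: float = 0.10) -> str:
    """Continue if time savings or quality gains reach the threshold."""
    time_saving = (base.minutes_per_unit - p.minutes_per_unit) / base.minutes_per_unit
    quality_gain = (p.quality_score - base.quality_score) / base.quality_score
    if time_saving >= threshold or quality_gain >= threshold:
        return "continue"
    return "adjust or stop"


base = Baseline(minutes_per_unit=30.0, quality_score=3.5)
pilot = PilotResult(minutes_per_unit=24.0, quality_score=3.5,
                    accepted_outputs=400, tokens_used=2_000_000)
print(pilot_decision(base, pilot), accepted_per_1k_tokens(pilot))
```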

Second, buy like a CFO, not like a lab. Set monthly token caps, require vendors to disclose which model families and pricing tiers they use, and trigger an automatic price review when public rates fall by a specified amount. These terms make contracts easier to enforce. Combine prompt caching and lower-tier models for drafts with higher-tier models for reviewing final outputs; this blended approach can cost far less than single-tier spending while maintaining quality. Add per-workflow limits so that a workflow making too many calls cannot run past the budget.
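The blended approach can be compared with single-tier spending using per-call arithmetic. In this sketch every number is an assumption: the cheap tier reuses the $0.10/$0.40 rates quoted earlier, while the premium tier's $2.50/$10.00 rates, the 50% cache hit rate, the 75% cached-input discount, and the token counts are illustrative, not any vendor's rate card.

```python
# Hypothetical rates per million tokens: (input, output).
CHEAP = (0.10, 0.40)
PREMIUM = (2.50, 10.00)


def call_cost(in_tokens: int, out_tokens: int, rates: tuple[float, float],
              cached_fraction: float = 0.0, cache_discount: float = 0.0) -> float:
    """Cost of one call.

    cached_fraction: share of input tokens served from a prompt cache.
    cache_discount: fractional price reduction on those cached input tokens.
    """
    price_in, price_out = rates
    billable_in = in_tokens * (1 - cached_fraction * cache_discount)
    return (billable_in * price_in + out_tokens * price_out) / 1_000_000


# Single tier: the premium model drafts everything.
single_tier = call_cost(3_000, 800, PREMIUM)

# Blended: the cheap tier drafts (half the prompt cached at a 75% discount),
# then the premium tier reviews a shorter final-output prompt.
draft = call_cost(3_000, 800, CHEAP, cached_fraction=0.5, cache_discount=0.75)
review = call_cost(1_000, 200, PREMIUM)
blended = draft + review

print(f"single tier: ${single_tier:.4f} per output")
print(f"blended:     ${blended:.4f} per output")
```

Under these assumptions the blended pipeline costs roughly a third of drafting everything on the premium tier; the real ratio depends on acceptance rates and on how much review the premium tier has to do.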

Third, draw clear lines around tasks that cannot be replaced. The Carnegie Mellon findings are a cautionary example: substituting language models for human participants obscures the very perspectives we value. In schools, this applies to counseling, qualitative feedback on assignments tied to identity, and community engagement. Keep these human. Assign AI to logistics, drafts, and data preparation. In software education, models can act as code reviewers under established guidelines, but students should still articulate their intent and rationale verbally. The guiding principle: when a task requires judgment, AI labor cost should not drive the purchasing decision.
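Procurement boundaries are easier to audit when they are written as data rather than prose. The sketch below encodes the task examples from this paragraph; the labels, the two sets, and the `route` function are hypothetical. Unknown tasks default to people until someone classifies them, a deliberately conservative choice.

```python
# Tasks that stay with people, whatever the token price.
HUMAN_ONLY = {
    "counseling",
    "identity_related_feedback",   # qualitative feedback on identity-linked work
    "community_engagement",
}

# Tasks AI may draft, always with human review.
AI_ELIGIBLE = {
    "logistics",
    "drafting",
    "data_preparation",
    "code_review_under_rubric",    # students still explain intent and rationale
}


def route(task: str) -> str:
    """Return who handles a task under the procurement boundary."""
    if task in HUMAN_ONLY:
        return "human"
    if task in AI_ELIGIBLE:
        return "ai_with_human_review"
    return "human"  # unclassified tasks default to people


for task in ("counseling", "drafting", "grading_essays"):
    print(task, "->", route(task))
```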

These decisions sit within a broader macro context. As AI innovation raises productivity and lowers prices, some sectors are expected to see higher wages and more hiring, while others will see higher turnover. For public education systems, the outcome is a design choice. Use contracts and budgets to direct savings toward teaching time, tutoring, and student support. Fund small-group instruction with the staff hours freed when AI handles paperwork. Invest in staff training so that the largest gains, which go to newer workers with access to practical tools, also reach early-career teachers and advisors rather than only central offices.

A budget is a moral document. Use the savings for students

We return to the opening insight. Prices for machine text work have plummeted in key tiers, and routine white-collar tasks such as editing, summarizing, or drafting now cost pennies at scale. This is the AI labor cost shock. Macro data indicate that productivity gains can raise output and lower prices over time; micro studies show that targeted task substitution already saves time and improves quality; research on qualitative studies shows that substitution has firm limits where human voices and consent are involved. Taken together, the policy is clear. Treat AI as a measured labor input. Track accepted outputs, not hype. Write clauses into contracts that capture price declines. Protect tasks that require judgment. And direct the savings where they matter most: human attention on learning. Done well, education can turn a groundbreaking technology into a quiet revolution in cost, access, and quality, one accepted output at a time.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Acemoglu, D. (2024). The Simple Macroeconomics of AI. NBER Working Paper 32487.

Anthropic. (2025a). Pricing.

Anthropic. (2025b). Web search on the Anthropic API.

Business Insider. (2025, Aug.). ‘Inference whales’ are eating into AI coding startups’ business model. (accessed 1 Oct 2025).

Carnegie Mellon University, School of Computer Science. (2025, May 6). Can Generative AI Replace Humans in Qualitative Research Studies? News release.

Federal Reserve Bank of St. Louis (FRED). (2025). Nonfarm Business Sector: Labor Share for All Workers (Index 2017=100). (updated Sept. 4, 2025).

Gazzani, A., & Natoli, F. (2024, Oct. 18). The macroeconomic effects of AI innovation. VoxEU (CEPR).

Google. (2025). Gemini 2.5 pricing overview.

International Labour Organization. (2025, May 20). Generative AI and Jobs: A Refined Global Index of Occupational Exposure. (accessed 1 Oct 2025).

Noy, S., & Zhang, W. (2023). Experimental evidence on the productivity effects of generative artificial intelligence. Science, 381(6654).

OpenAI. (2025a). Platform pricing.

OpenAI. (2025b). API pricing (fine-tuning and scale tier details). (accessed 1 Oct 2025).

Ramp. (2025, Apr. 15). AI is getting cheaper. Velocity blog. (accessed 1 Oct 2025).

Reuters. (2025, Feb. 26). DeepSeek cuts off-peak pricing for developers by up to 75%. (accessed 1 Oct 2025).

U.S. Bureau of Labor Statistics. (2025, Mar. 21). Total factor productivity increased 1.3% in 2024. Productivity program highlights. (accessed 1 Oct 2025).

Wang, R., Guo, J., Gao, C., Fan, G., Chong, C. Y., & Xia, X. (2025). Can LLMs Replace Human Evaluators? An Empirical Study of LLM-as-a-Judge in Software Engineering. arXiv:2502.06193.