The Vanishing Middle of Software Work and What Schools Must Do About It

AI is collapsing routine “middle” software work as adoption soars.

Schools must teach systems thinking, safe AI use, and verification-first delivery.

Employers will favor small, senior-led teams; therefore, curricula must reflect this reality.

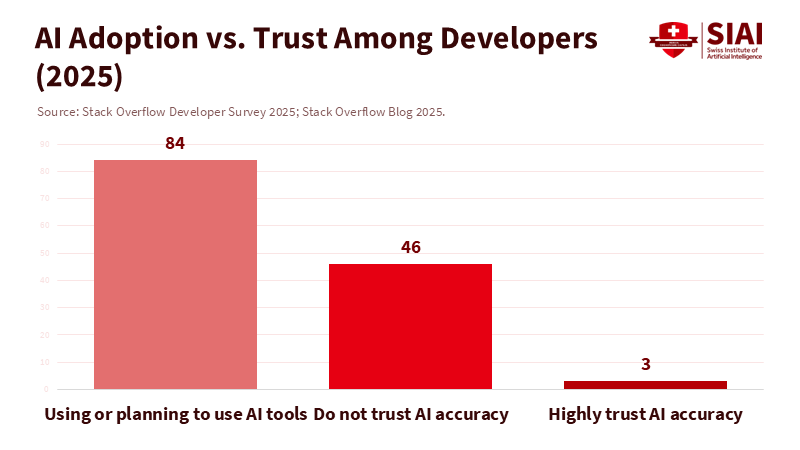

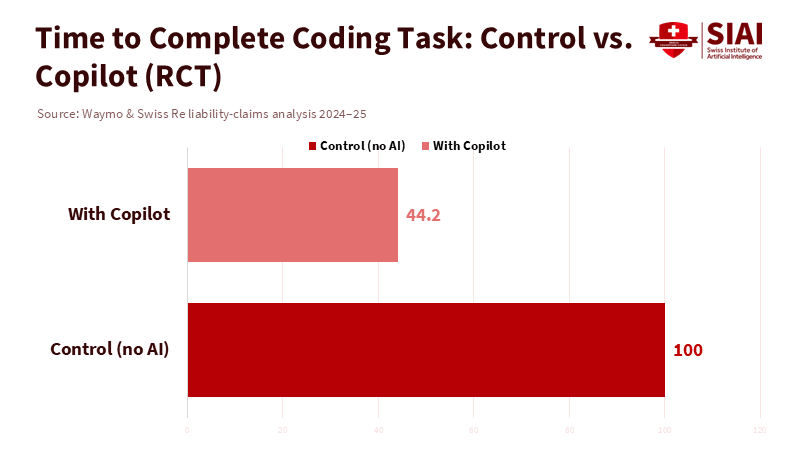

The key number in computing education right now is 84. That’s the percentage of developers in 2025 who use or plan to use AI tools at work, with most professionals using them daily. The trend is increasing. These new habits are lasting. The impact is real. Randomized trials have shown that AI-assisted pair programming can make specific coding tasks approximately 56% faster. Broader studies with knowledge workers show significant improvements in output and quality for well-defined tasks. If routine programming can be done in half the time, often by people who are still learning, the job market will not balance out. It will split. Junior and mid-level tasks are being automated or absorbed into smaller teams led by senior professionals. Employers are also indicating this shift: job postings in tech remain significantly lower than pre-pandemic levels, experience requirements are increasing, and corporate leaders are openly stating that AI enables them to accomplish more with fewer employees. The middle is disappearing, and education policy needs to determine whether to train for a shrinking area or redesign it.

We must be clear about what is disappearing and what is not. Government and industry data indicate that the most noticeable job losses are in “programmer” roles, which involve converting specifications into code, rather than in broader “software developer” roles that encompass design, integration, product judgment, and collaboration with stakeholders. This distinction matters for schools because it relates to the skills that AI struggles to replace: scoping, decomposition, security, systems thinking, and the social aspects of software delivery. It also aligns with regional statistics that may seem contradictory at first but are consistent underneath: the EU still reports millions of ICT specialists employed and ongoing hiring gaps, but firms also report challenges in finding the right mix of senior skills, rather than more entry-level workers. In short, demand is moving up the ladder.

The Middle Is Collapsing, Not the Profession

The near-term market signals are stark. Tech job postings on Indeed are weak, down about a third from early 2020 levels, after a significant pullback in 2023-2024. Where postings do exist, the requirements have increased: the share of roles seeking 2-4 years of experience dropped from 46% to 40% between mid-2022 and mid-2025, while the share requiring more than 5 years rose from 37% to 42%. This is not a general “no more developers” situation; it points to a “fewer average developers” reality. It reflects a production model in which a small senior team, equipped with reliable AI tools, accomplishes what used to take multiple junior staff members. The trend is also visible in statements from major software companies, where finance leaders openly discuss AI as a way to create leaner organizations.

We also have evidence from learning curves to explain why entry-level positions are the most vulnerable to change. In field tests with generative AI, less-experienced workers often see the largest productivity boost on well-defined tasks. This effect can temporarily flatten parts of the experience gradient. If a novice using an AI tool can complete the same narrow task as a mid-level employee, the firm's logical choice is to reduce mid-level roles or move them overseas. At the same time, these studies show uneven gains on more complex tasks, such as setting specifications, resolving ambiguous requirements, and managing unique challenges. These tasks still require significant human involvement and demand higher skill levels. This is why routine programming roles are declining while higher-leverage developer work remains stable or even grows over the medium term. Projections from the U.S. Bureau of Labor Statistics still indicate double-digit growth for developers through 2034, even as “programmer” employment declines. The market is not disappearing; it is changing.

The team structure is shifting, too. The modern technology stack allows a lead engineer to manage agents, code generators, and testing frameworks throughout the development process. GitHub’s Copilot RCT recorded a 55.8% time reduction on a specific JavaScript task; data from Octoverse reveals a rise in AI-related repositories and tools. Insights from leaders suggest that AI has become an integral part of daily workflows, rather than a novelty. As a result, “ticket factories” staffed by layers of average workers are being replaced by small, senior-led teams that oversee automation, manage architecture, and address risks like security, privacy, and governance.
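To make the staffing arithmetic concrete, here is a minimal sketch of what a 55.8% time reduction would mean for throughput. It rests on a strong assumption that the source does not make: that a result measured on one JavaScript task carries over to a whole workload. The function name `throughput_multiplier` is hypothetical and used only for illustration.

```python
def throughput_multiplier(time_reduction: float) -> float:
    """Convert a fractional time reduction into a throughput multiplier.

    If a task takes (1 - r) of its former time, the same person can
    finish 1 / (1 - r) tasks in the time one task used to take.
    """
    if not 0.0 <= time_reduction < 1.0:
        raise ValueError("time_reduction must be in [0, 1)")
    return 1.0 / (1.0 - time_reduction)


# Assumption: the 55.8% figure from the single-task trial applies uniformly.
multiplier = throughput_multiplier(0.558)
print(f"Throughput multiplier: {multiplier:.2f}x")
```

Under that assumption, one person does the narrow task at a bit more than twice the previous rate, which is the mechanism behind smaller teams. Real workloads mix automatable and non-automatable work, so the effective multiplier for a team would be lower.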

A New Skills Bar for Schools and Employers

If the middle is disappearing, entry into the field must not rely on the tasks that are vanishing. The old model—introduction to computer science, followed by two years of basic ticket work—no longer fits the market. Stack Overflow’s 2025 data show nearly all developers have experience with AI tools; even Gen Z’s early career paths are being redefined around tools rather than just spending time on repetitive tasks. However, the same surveys reveal a trust gap: almost half of developers do not trust the accuracy of AI outputs, and many lose time fixing generated code. This combination—high usage with low trust—highlights the need for AI literacy, verification workflows, and secure integration habits. Education must shift from “using the tool” to “designing a process that guides the tool.”

Curricula should adapt in three practical ways. First, shift evaluation from isolated coding to complete delivery under constraints. Students should define requirements with stakeholders, prompt AI tools responsibly, verify results through tests, and deliver minimal increments. Second, introduce systems thinking earlier. This means making architecture, interfaces, observability, and performance trade-offs understandable to first- and second-year students, not just in capstone projects. Third, formalize human-in-the-loop methods: code reviews, testing for model errors, and reproducible logs for prompts. These are not extras; they are essential tasks. Since trust is a primary issue, we should teach “explain-and-verify” as a skill, focusing on executable specifications, property-based tests, and static analysis alongside code generation. The aim is to produce fewer “average coders” and more junior systems engineers who can manage automation responsibly.
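As an illustration of the “explain-and-verify” habit, the sketch below treats a small function as if it had come from an AI assistant and checks it against stated properties on randomly generated inputs. It is a hand-rolled example using only the Python standard library; in a course, a dedicated property-based testing library such as Hypothesis would be a more natural choice. The function `merge_intervals` and the chosen properties are hypothetical examples, not taken from any cited curriculum.

```python
import random


def merge_intervals(intervals):
    """Hypothetical 'generated' code under review: merge overlapping closed intervals."""
    merged = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            # Overlaps the previous interval: extend it.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def covered_points(intervals):
    """Set of integer points covered by a list of closed integer intervals."""
    return {p for start, end in intervals for p in range(start, end + 1)}


def check_properties(trials=500, seed=0):
    """Check three invariants on random inputs; return the number of cases checked."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    for _ in range(trials):
        intervals = []
        for _ in range(rng.randint(0, 8)):
            start = rng.randint(0, 30)
            intervals.append((start, start + rng.randint(0, 10)))
        result = merge_intervals(intervals)
        # Property 1: merging never adds or loses covered points.
        assert covered_points(result) == covered_points(intervals)
        # Property 2: output is sorted and no two intervals overlap.
        for (_, prev_end), (next_start, _) in zip(result, result[1:]):
            assert prev_end < next_start
        # Property 3: merging an already merged list changes nothing.
        assert merge_intervals(result) == result
    return trials


print(check_properties(), "random cases passed")
```

The point for assessment is that the student writes the properties, which act as an executable specification, before trusting the generated code. The fixed seed makes a failure reproducible for a grader.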

Employers must also rethink their design for early-career roles. Successful corporate experiments demonstrate that AI performs best when combined with guidelines and collaborative learning, including clear task-fit criteria, libraries of approved prompts, and structured peer coaching. Rotations should focus on integration, security reviews, and on-call simulations, rather than just completing tasks. Apprenticeships can evolve into “automation residencies” where new hires learn to connect tools, review outputs, and address unique challenges. If we do this, AI can help early-career talent advance faster in areas of the job that truly matter—ownership, judgment, and communication—rather than getting stuck on tasks that automation can easily take over.

What Education Must Do Now

The policy direction should not be to resist the use of AI in the classroom. It should focus on enforcing real-world use with clear outcomes. We should require that accredited computing programs teach and assess responsible AI use from the start: students must disclose any assistance, maintain logs of prompts and tests, and explain how they verified results. Programs should place more weight on design quality, test coverage, and adaptability to change, rather than just counting lines of code. This aligns with the job market's signal that developers, not programmers, will create the most value. It also prepares students for the tools they will encounter immediately after graduation.
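One way to picture the disclosure requirement is a small, structured record that a student attaches to each piece of assisted work. The sketch below is an assumption about what such a log entry could contain; the `AssistanceRecord` fields and helper names are hypothetical and do not reflect any accreditation standard.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AssistanceRecord:
    """One disclosed use of an AI tool (hypothetical schema)."""
    tool: str                # name of the assistant used
    prompt: str              # the prompt as submitted
    output_sha256: str       # fingerprint of the generated code
    tests_run: tuple         # names of tests used to verify the output
    verification_note: str   # the student's explanation of how they checked it


def record_assistance(tool, prompt, generated_code, tests_run, verification_note):
    """Build a record; the hash ties the log entry to the exact generated text."""
    digest = hashlib.sha256(generated_code.encode("utf-8")).hexdigest()
    return AssistanceRecord(tool, prompt, digest, tuple(tests_run), verification_note)


def to_jsonl(record):
    """Serialize a record as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


entry = record_assistance(
    tool="example-assistant",
    prompt="Write a function that merges overlapping intervals.",
    generated_code="def merge_intervals(intervals): ...",
    tests_run=["test_coverage_preserved", "test_output_disjoint"],
    verification_note="Ran property checks on 500 random inputs; reviewed edge cases by hand.",
)
print(to_jsonl(entry))
```

Hashing the output instead of storing it keeps the log short while still letting a reviewer confirm that the submitted code matches what was disclosed.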

Funding should support cross-disciplinary build studios where education, health, climate issues, and public-sector partners present real problems. Students should work in small teams mentored by senior professionals, using AI freely but justifying each decision with automated tests and risk notes. The studios would publish open rubrics, datasets, and evaluations to boost quality across educational institutions. Since the EU reports both a large ICT workforce and continuous skill shortages, these studios should be regionally focused, addressing public needs, and open to apprentices and those seeking to upskill—not just degree-seekers. This approach will widen opportunities while avoiding training for tasks that are quickly disappearing.

Teacher development is the critical missing link. We need fast, practice-focused certification for “AI-integrated software teaching,” along with grants for redesigning test-heavy courses. The technology stack is essential: IDE plugins that log prompts and changes, CI pipelines that conduct static analysis and property-based tests, and dashboards that highlight insecure dependencies or data leaks. This is not about monitoring students; it is about professionalism. This is how we can transform the trust gap from a concern into a teachable skill: “never trust, always verify.”
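To show what a static-analysis step in such a pipeline might look like at classroom scale, here is a minimal sketch that uses Python's standard `ast` module to flag direct calls to `eval` and `exec` in submitted code. The set of flagged names and the function `find_risky_calls` are illustrative assumptions; a production pipeline would rely on an established linter or security scanner instead.

```python
import ast

# Illustrative choice of calls to flag; a real rule set would be much broader.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source):
    """Return sorted (line_number, function_name) pairs for flagged direct calls."""
    tree = ast.parse(source)
    findings = []
    for node in ast.walk(tree):
        is_direct_call = isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
        if is_direct_call and node.func.id in RISKY_CALLS:
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


submission = "x = eval(user_input)\nprint(x)\nexec('y = 1')\n"
for line, name in find_risky_calls(submission):
    print(f"line {line}: call to {name}() needs a written justification")
```

A check like this fits the “never trust, always verify” framing: the tool does not grade the student, it prompts them to explain or remove a risky construct before review.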

Critics may argue that this is just a passing trend and that hiring for junior positions will rebound. There is some truth to that; economic cycles do matter, and developer jobs are expected to keep growing over the next decade. However, the shift in job composition is genuine. U.S. data shows that programming roles have lost more than a quarter of their jobs in two years, even as developer positions remain steady. Job postings remain low, and experience requirements are increasing. Meanwhile, leaders from companies like Microsoft and SAP describe AI as already woven into everyday operations and cost management. Relying on a return to pre-AI job structures is not wise; it is evasion. The best move is to focus on the tasks that are hardest to automate and most crucial to oversee.

A final practical step is to align assessment with the job markets where students will actually work. Use open-source contributions as graded projects. The Octoverse data indicate that AI-related projects are increasing rapidly, and students should have a tangible record of genuine collaboration. Promote internships that resemble “integration sprints,” not just bug-fixing marathons. Evaluate what truly matters for employability in an AI-driven world: the ability to break down problems, understand risk, prioritize testing, manage tools, and communicate trade-offs with non-engineers. These are the skills and habits that enable small teams to achieve what larger groups once did, without overwhelming the system or the students.

A Smaller Team, a Higher Standard, a Stronger School

We began with 84, a clear indicator that AI is now a standard part of software work. We should conclude with another number that keeps us grounded: fifty-six. That is roughly the percentage of time saved on a real coding task in a randomized trial of AI pair programming. When productivity gains of this magnitude occur within companies, the market will not accommodate “average” workers completing routine tasks. It will favor those who can lead projects, integrate systems, and verify results. If education continues to prepare students for roles that are fading away, we will let them down twice: once by misunderstanding the job market and again by not equipping them with the habits needed to make AI effective and secure. The alternative is achievable. Teach students to take ownership of problems, to use AI openly and transparently, and to plan for both failure and success. The teams of the future will be smaller. Let our schools empower them to be stronger.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

BCG / Harvard Business School (2023). Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality. Harvard Business School Working Paper 24-013.

Brainhub (2025). Is There a Future for Software Engineers? The Impact of AI on Software Development. Brainhub Library.

Brynjolfsson, E., Li, D., & Raymond, L. (2023). Generative AI at Work. NBER Working Paper 31161.

Fortune (2025, Sept. 2). Microsoft CEO Satya Nadella reveals 5 AI prompts that can supercharge your everyday workflow.

GitHub (2022). Research: Quantifying GitHub Copilot’s Impact on Developer Productivity and Happiness. GitHub Blog.

GitHub (2024). Octoverse 2024: The State of Generative AI. GitHub Blog.

Indeed Hiring Lab (2025, July 30). The U.S. Tech Hiring Freeze Continues.

Indeed Hiring Lab (2025, July 30). Experience Requirements Have Tightened Amid the Tech Hiring Freeze.

MIT News (2023, July 14). A study finds that ChatGPT boosts worker productivity for specific writing tasks.

Peng, S., Kalliamvakou, E., Cihon, P., & Demirer, M. (2023). The Impact of AI on Developer Productivity: Evidence from GitHub Copilot. arXiv:2302.06590.

Stack Overflow (2024). Developer Survey—AI.

Stack Overflow (2025). Developer Survey—AI.

Stack Overflow Blog (2025, Sept. 10). AI vs Gen Z: How AI has changed the career pathway for junior developers.

U.S. Bureau of Labor Statistics (2025, Aug. 28). Employment Projections—2024–2034; Software Developers, QA Analysts, and Testers. Occupational Outlook Handbook.

Washington Post (2025, Mar. 14). More than a quarter of computer-programming jobs just vanished. What happened?

Yahoo Finance (2025). CFO of $320 billion software firm: AI will help us “afford to…” (SAP workforce comments).

Eurostat (2024–2025). ICT specialists in employment: Towards Digital Decade targets for Europe.

ITPro & TechRadar (2025). Developers adopt AI while trust declines; 46% don’t trust AI outputs.