When the Feed Is Poisoned, Only Proof Can Heal It

Trusted news wins when fakes surge.

Make “proof” visible (provenance, corrections, and methods), not just build better detectors.

Adopt open standards and clear labels so platforms, schools, and publishers turn credibility into a product feature.

By mid-2025, overall trust in news stood at about 40% across the 48 markets in the Reuters Institute’s survey. That figure has held steady for three years, even as more people get their news from quick, engaging social video: 52% consumed news via social video in 2020, and 65% do so this year. The volume of content has surged; trust has not. That stagnation creates an opening for policy. When audiences confront the reality of AI-generated misinformation, they do not simply despair; many turn to brands whose sourcing, corrections, and accountability are transparent, verifiable, and consistent. Over the coming decade, news strategy will depend less on having the best detection models and more on making “trust” a visible, verifiable product feature. In a media landscape flooded with low-quality content, credibility becomes scarce, and scarcity drives up its value.

The fight against misinformation is often framed as a race, with better detection tools pitted against more convincing fakes. But that race is not the only strategy available. Branding, understood as a public promise about methods and standards, deserves center stage. If people who doubt a claim most often check it against “a news source I trust,” then policy should encourage newsrooms to compete on proof, not just on speed or scale. A brand then becomes more than a logo: it becomes a reliable process, a record of corrections, and a consistent user experience that helps readers quickly tell what is original, what is fake, and what has been confirmed. That is the potential of branding: turning today’s anxiety into future loyalty and a more credible media landscape.

The Scarcity Premium of Trust

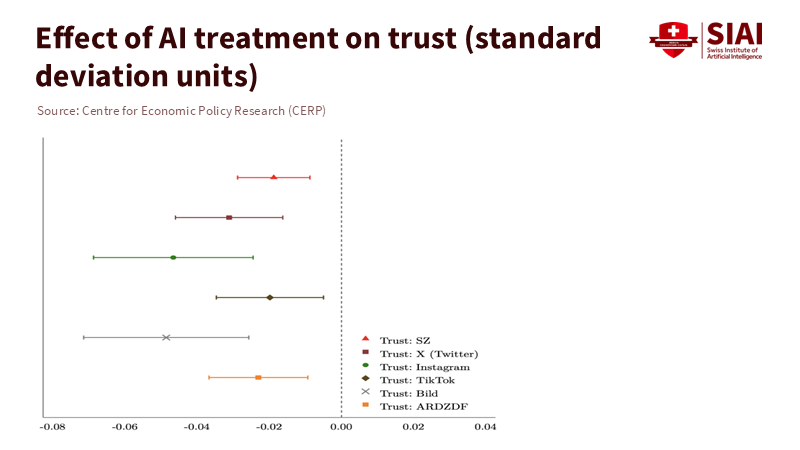

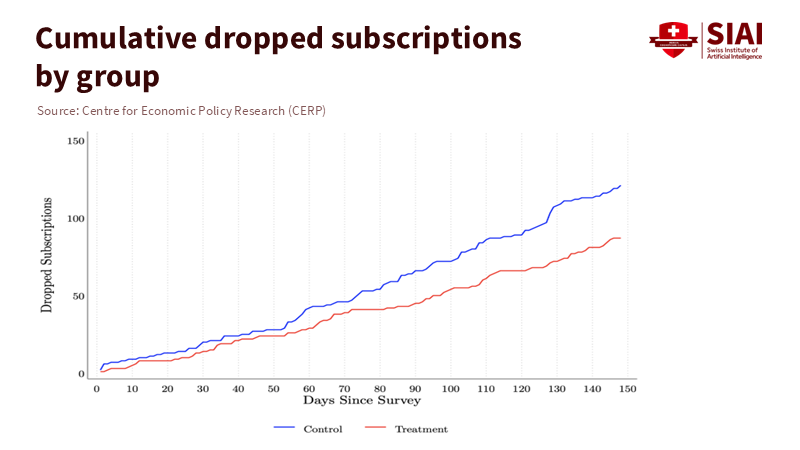

Recent evidence from Germany supports this idea. In a field experiment with the Süddeutsche Zeitung (SZ), readers were shown a message highlighting how hard it is to tell authentic images from AI-generated ones. The immediate effect on attitudes was discouraging: concern about misinformation rose by 0.3 standard deviations, and trust in news fell by 0.1 s.d., including trust in SZ itself. Behavior, however, moved the other way. In the days after exposure, daily visits to SZ rose by about 2.5%. Five months later, subscriber retention was 1.1% higher, which amounts to a one-third drop in churn. In other words, when the danger of fake content becomes salient, the value of a brand that helps users sort truth from falsehood increases, and some readers reward that brand with their time and money. Method note: these effects are intention-to-treat estimates from random assignment; browsing and retention are observed outcomes, not self-reports.
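The step from a 1.1% retention gain to “a one-third drop in churn” can be checked with back-of-envelope arithmetic. The sketch below assumes, as our reading rather than something the study summary states, that the 1.1% is a gain of 1.1 percentage points in retention and that the one-third is measured against the control group’s churn. Under those assumptions, the figures imply five-month churn of roughly 3.3% in the control group and 2.2% among treated readers.

```python
# Back-of-envelope check of the SZ retention figures.
# ASSUMPTIONS (not stated in the source): the 1.1% is a gain of 1.1
# percentage points in retention, and "one-third drop in churn" is
# relative to the control group's churn rate.

RETENTION_GAIN_PP = 1.1      # from the text
RELATIVE_CHURN_DROP = 1 / 3  # "a one-third drop in churn"


def implied_control_churn(gain_pp: float, relative_drop: float) -> float:
    """Churn is 100% minus retention, so churn falls by exactly the
    retention gain; that fall equals relative_drop * control churn."""
    return gain_pp / relative_drop


control_churn = implied_control_churn(RETENTION_GAIN_PP, RELATIVE_CHURN_DROP)
treated_churn = control_churn - RETENTION_GAIN_PP

print(f"implied control churn: {control_churn:.1f}%")  # 3.3%
print(f"implied treated churn: {treated_churn:.1f}%")  # 2.2%
```

If the 1.1% were instead a relative increase in retention, the implied baseline churn would be only slightly lower, so the broad picture of a small absolute churn rate cut by a third holds either way.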

This pattern reflects broader audience behavior. The Reuters Institute’s 2025 report shows overall trust holding at around 40% while more people turn to social video. Critically, “a news source I trust” was the most common place people said they would go to check a claim they suspected was false (38%), far ahead of AI tools. For policymakers and publishers, the lesson is not to abandon AI but to focus editorial investment on what the public already uses to decide what is real: brand reputation and clear standards. When credibility is scarce, its value in visits, retention, and willingness to pay rises as contamination becomes evident. The market signal is clear: compete on credibility, not just on the volume of content. Competition on credibility also gives audiences a concrete way to reward trustworthy outlets and, through their choices, to help build a more trustworthy media environment.

Branding as Infrastructure, Not Cosmetics

If branding is to play a bigger role, it cannot be cosmetic. It must be foundational: provenance by default, easy-to-find corrections, and visible human oversight. Adoption of open standards is progressing. The standard developed by the Coalition for Content Provenance and Authenticity (C2PA) anchors a growing ecosystem: Adobe’s Content Authenticity Initiative surpassed 5,000 members this year; camera makers such as Leica now sell devices that embed Content Credentials; major platforms are testing provenance-based labels; and many newsrooms have pilot projects underway. Policy can accelerate this progress by tying subsidies, tax breaks, or public-interest grants to the use of open provenance standards in editorial workflows. Attaching public money to these standards turns improvement in the media landscape from a hope into a realistic prospect.
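What makes “provenance by default” more than a label is that a credential is cryptographically bound to the file. The sketch below is a toy illustration of that idea, not the C2PA manifest format or any real SDK: it hashes the asset bytes, signs the claims with an HMAC key (a hypothetical stand-in for the certificate-based signatures real Content Credentials use), and reports whether an asset’s credential is valid, tampered with, or missing. All function and field names are illustrative.

```python
import hashlib
import hmac
import json
from typing import Optional

# HYPOTHETICAL key standing in for a camera's or publisher's signing key.
# Real Content Credentials use certificate-backed public-key signatures,
# so anyone can verify them without holding a secret.
SIGNING_KEY = b"demo-key-not-for-production"


def _sign(claim: dict) -> str:
    """Sign a canonical JSON encoding of the claims."""
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_credential(asset: bytes, creator: str, action: str) -> dict:
    """Build a toy credential whose claims are bound to the exact asset bytes."""
    claim = {
        "creator": creator,
        "action": action,
        "asset_sha256": hashlib.sha256(asset).hexdigest(),
    }
    return {"claim": claim, "signature": _sign(claim)}


def verify_credential(asset: bytes, credential: Optional[dict]) -> str:
    """Classify an asset as 'missing', 'tampered', or 'valid'."""
    if credential is None:
        return "missing"
    if not hmac.compare_digest(_sign(credential["claim"]), credential["signature"]):
        return "tampered"  # the claims were edited after signing
    if credential["claim"]["asset_sha256"] != hashlib.sha256(asset).hexdigest():
        return "tampered"  # the asset bytes no longer match the signed hash
    return "valid"


photo = b"raw image bytes"
cred = make_credential(photo, "Example Newsroom", "captured")
print(verify_credential(photo, cred))         # valid
print(verify_credential(photo + b"!", cred))  # tampered
print(verify_credential(photo, None))         # missing
```

The sketch also exposes a limit: editing either the file or its claims is caught, but a file whose credential was stripped simply reads as “missing.” Absence of provenance is therefore best treated as a signal, not as proof of fakery.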

The risks we are trying to manage are no longer hypothetical. A recent comparative case study documents how AI-generated misinformation manifests differently across countries and formats: voice cloning in UK politics, image manipulation and re-contextualization around German protests, and synthetic text and visuals fueling rumors on Brazilian social platforms. Fact-checkers in all three countries reported similar challenges: rapid spread on closed or semi-closed networks, the difficulty of proving something is fake once people have already seen it, and the resource drain of repeated debunks. A nicer homepage will resolve none of these issues. They will be addressed only when the brand promise is tied to verifiable assets: persistent Content Credentials for original content, a real-time corrections index in which every change is timestamped and signed, and explainers that set out methods, sources, and known uncertainties. Those features turn a brand into a tool for the public.
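A corrections index “where changes are timestamped and signed” can be built from a standard technique, a hash-chained append-only log. The following is a minimal sketch under stated assumptions: the class and field names are hypothetical, and an HMAC with a shared newsroom key stands in for the public-key signatures a production system would need so that readers could verify entries independently.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

# HYPOTHETICAL newsroom key; a real deployment would use public-key
# signatures so readers can verify entries without a shared secret.
NEWSROOM_KEY = b"demo-newsroom-key"
GENESIS_HASH = "0" * 64
BODY_FIELDS = ("article_id", "change", "timestamp", "prev_hash")


class CorrectionIndex:
    """Append-only log of corrections. Each entry is timestamped, signed,
    and chained to the previous entry's hash, so editing any entry, or
    deleting any entry except the newest, makes verify() return False."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def add(self, article_id: str, change: str) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else GENESIS_HASH
        body = {
            "article_id": article_id,
            "change": change,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        payload = json.dumps(body, sort_keys=True).encode()
        entry = dict(
            body,
            entry_hash=hashlib.sha256(payload).hexdigest(),
            signature=hmac.new(NEWSROOM_KEY, payload, hashlib.sha256).hexdigest(),
        )
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: entry[k] for k in BODY_FIELDS}
            payload = json.dumps(body, sort_keys=True).encode()
            if entry["prev_hash"] != prev_hash:
                return False  # an earlier entry was removed or reordered
            if entry["entry_hash"] != hashlib.sha256(payload).hexdigest():
                return False  # this entry's content was edited
            expected = hmac.new(NEWSROOM_KEY, payload, hashlib.sha256).hexdigest()
            if not hmac.compare_digest(expected, entry["signature"]):
                return False  # signature does not match the content
            prev_hash = entry["entry_hash"]
        return True


index = CorrectionIndex()
index.add("story-123", "Corrected the spelling of a source's name")
index.add("story-123", "Fixed the date of the council vote")
print(index.verify())  # True
```

Chaining each entry to its predecessor means a quiet rewrite of an earlier correction invalidates the rest of the log. Deleting only the newest entry is not caught by the chain alone; publishing the latest entry hash somewhere external, such as alongside each article, would close that gap.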

Remove the Fuel: Labeling, Friction, and Enforcement

A second key element lies outside the newsroom: platform and regulatory policies that curb the spread of synthetic hoaxes without restricting legitimate speech. China’s new labeling law, effective September 1, 2025, requires AI-generated text, images, audio, and video to carry visible labels and embedded metadata on major platforms such as WeChat, Douyin, Weibo, and Xiaohongshu, with enforcement by the Cyberspace Administration of China. Whatever one thinks of Chinese information controls, the technical basis is straightforward and transferable: provenance should travel with the file, and platforms should display it by default. Democracies need not, and should not, replicate the speech controls that come with it. They can still adopt the infrastructure: standardized labels, consistent user interfaces, penalties for deceptively removing credentials, and transparency reports on mislabeled content.

A democratic version would include three components. First, defaults: platforms would auto-display Content Credentials when available and encourage uploaders to attach them, particularly for political ads and news-related content. Second, targeted friction: when provenance is missing or altered on high-risk content, the system would slow its spread—reducing algorithmic boosts, limiting sharing, and providing context cards that direct users to independent sources. Third, accountability: fines or removals for commercial entities that strip credentials or mislabel synthetic assets, with safe harbors for satire, art, and protected speech that are clearly labeled. This is not an abstract wish list. Standards are available, adoption is increasing, and the public has indicated that when they doubt something, they go to a trusted source. Policy should help more outlets earn that trust.
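The “defaults” and “targeted friction” components amount to a simple decision rule. The sketch below encodes one hypothetical version: the provenance states, the risk flag, the treatment fields, and the 0.5 down-ranking factor are illustrative assumptions, not any platform’s actual policy or API.

```python
from dataclasses import dataclass
from enum import Enum


class Provenance(Enum):
    VALID = "valid"      # credential present and verifies
    MISSING = "missing"  # no credential attached
    ALTERED = "altered"  # credential fails verification


@dataclass
class Item:
    provenance: Provenance
    high_risk: bool  # e.g. political ads or news-related content


@dataclass
class Treatment:
    show_credentials: bool     # auto-display Content Credentials
    ranking_multiplier: float  # 1.0 leaves algorithmic boosts unchanged
    sharing_limited: bool
    context_card: bool         # point users to independent sources


def apply_policy(item: Item, downrank: float = 0.5) -> Treatment:
    """Map provenance status and risk class to a display treatment
    (hypothetical rule; downrank is an illustrative parameter)."""
    if item.provenance is Provenance.VALID:
        # Default: surface the credential, no friction.
        return Treatment(True, 1.0, False, False)
    if item.high_risk:
        # Targeted friction: high-risk content whose provenance is missing
        # or altered is slowed down, not removed.
        return Treatment(False, downrank, True, True)
    # Low-risk content without provenance is left alone.
    return Treatment(False, 1.0, False, False)


print(apply_policy(Item(Provenance.ALTERED, high_risk=True)))
```

Note that friction here only slows content; nothing is deleted. Removals and fines stay in the separate accountability track, where the safe harbors for clearly labeled satire, art, and protected speech apply.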

The education sector has a specific role. Students, teachers, and administrators now encounter AI throughout their information diet, from homework help to campus rumors. Curricula can quickly add provenance literacy alongside traditional media literacy: how to read a Content Credential, how to check whether a photo carries a signature, and how to tell verified edits from unverifiable ones. Procurement guidelines for schools and universities can require C2PA-compatible tools for communications teams. Public institutions can set up “trust labs” with local news organizations to test which interface cues (labels, bylines, correction banners) help different age groups distinguish real from synthetic content. The goal is not to turn everyone into a forensic expert but to make the brand signals of trustworthy outlets clear and to teach people how to use them.

Critics may raise several objections. First, labels and watermarks can be removed or forged. That is true. But signed credentials built on open standards make tampering detectable, and on high-risk content a missing credential is itself a signal. The goal is not perfection; it is to make truth easier to verify and lies costlier to sustain. Second, provenance may favor large incumbents. It might, if adoption costs are high or the standards are proprietary. That is why policy should support open-source credentialing tools for small and local newsrooms and tie public advertising or subsidies to their use. Third, skeptics may claim that audiences will not care. The German experiment suggests otherwise: making AI fakes visible lowered self-reported trust but increased real engagement with, and retention at, a trustworthy outlet. Build the framework, and usage will follow. Finally, some may argue that the focus is misplaced, because the real problem is not isolated deepfakes but the constant stream of low-effort misinformation. This approach addresses both: provenance helps catch serious fraud, and clear brand signals help audiences quickly filter out low-level noise.

The last objection comes from those who want a purely technological fix. Yet audiences have consistently told surveys that they are uneasy about AI-generated news and would rather verify claims through sources they already trust. Detection will improve, and it should, but making trust visible is something we can do today. In practice, newsrooms must commit to user-facing proof: persistent Content Credentials for original content, a permanent corrections index, and explainers that set out what we know, what we don’t, and how we know it. It also means platform defaults that surface these signals and regulation that penalizes deceptive removal or misuse of credentials. The aim is not to outsmart the fakers; it is to out-communicate them.

We began with a persistent statistic, 40% trust, set against rising social-video news consumption and a flood of synthetic content. We end with a practical approach: compete on proof. The German experiment shows that when the threat is made clear, trustworthy brands can hold and even grow audience attention. The public already turns to trusted outlets when facts are uncertain. Standards like C2PA provide the technical basis for making authenticity portable. Even the strict labeling regimes being introduced abroad offer a simple lesson: provenance should travel with the file and be displayed by default. If education systems, platforms, and publishers align around these signals, we can regain ground without silencing debate or demanding extraordinary vigilance from every reader. The cost of fabrication has fallen to near zero. The value of trust, however, remains high. Let’s build brands, products, and policies that make that value visible and, better still, easy to choose.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Adobe (Content Authenticity Initiative). (2025, August 8). 5,000 members: Building momentum for a more trustworthy digital world.

Campante, F., Durante, R., Hagemeister, F., & Sen, A. (2025, August 3). GenAI misinformation, trust, and news consumption: Evidence from a field experiment (CEPR Discussion Paper No. 20526). Centre for Economic Policy Research.

Cazzamatta, R. (2025, June 11). AI-generated misinformation: A case study on emerging trends in fact-checking practices across Brazil, Germany, and the United Kingdom. Journalism & Mass Communication Open, SAGE.

Coalition for Content Provenance and Authenticity (C2PA). (n.d.). About C2PA.

GIJN. (2025, July 10). 2025 Reuters Institute Digital News Report: Eroding public trust and the rise of alternative ecosystems. Global Investigative Journalism Network.

Reuters Institute for the Study of Journalism. (2025, June 17). Digital News Report 2025 (Executive summary & full report). University of Oxford. https://reutersinstitute.politics.ox.ac.uk/digital-news-report/2025

SCMP. (2025, September 1). China’s social media platforms rush to abide by AI-generated content labelling law. South China Morning Post.

Thomson Reuters. (2024, June 16). Global audiences suspicious of AI-powered newsrooms, report finds. Reuters.

Thomson Reuters. (2025, March 14). Chinese regulators issue requirements for the labeling of AI-generated content. Reuters.

VoxEU/CEPR. (2025, September 16). AI misinformation and the value of trusted news.