AI Trust in News: Germany’s Path to Credibility in the Synthetic Era

Germans distrust AI-made news but reward transparent, human-led outlets. Trust grows when provenance and labeling are clear. EU rules now make this transparency mandatory.

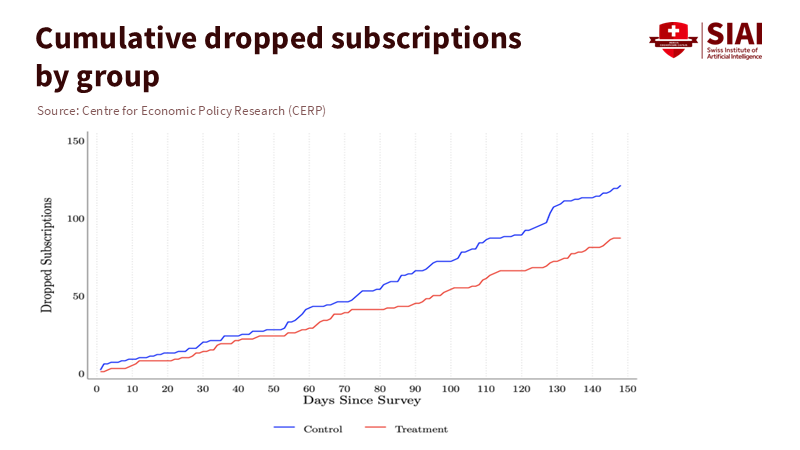

A striking number tells the story. In January 2025, a survey of German internet users revealed that 54% felt somewhat or very uncomfortable reading news primarily produced by AI, even when a human editor was involved. This discomfort is not a minor issue; it pairs with stable but cautious trust in news and a growing fear of fakes. Simply put, people want journalism they can verify, and they don’t want to guess how much a machine influenced the content. When the danger of AI misinformation becomes clear, audiences tend to rely more on outlets they already trust. In a real-world test with Süddeutsche Zeitung, readers who faced the challenge of spotting AI-generated images increased their visits by 2.5% and were 1.1% more likely to maintain their subscriptions in the following months. Trust, not novelty, drives this change. This is crucial for policy and practice: AI trust in news depends on transparency, provenance, and human accountability.

AI trust in news starts with what Germans already feel

Start with attitudes, not hopes. German audiences exhibit a consistent mix of trust and skepticism regarding the use of automation in newsrooms. In 2025, 45% of the online population believed that most news could be trusted, with public broadcasters and local newspapers leading in brand trust. However, the same survey highlighted that people find human-produced news more trustworthy than news generated by AI. This isn’t just a viewpoint of older audiences or tech skeptics; it reflects a social norm regarding accountability. When people think of “AI-made” news, they associate it with thin, error-prone writing and glitchy images. They also envision a lack of accountability for mistakes. The message is clear: if publishers want to establish trust in AI news, they must keep humans visible and accountable at every crucial step, and they must communicate this clearly in their products.

The concern is genuine and growing. In 2024, 43% of German adults said that “most news” is trustworthy, the lowest figure since this question was first asked nationally. Additionally, 42% expressed concerns about distinguishing between fake news and real news online. A quarter of the respondents reported encountering false or misleading information about migration and politics. Given this context, discomfort with AI in the news is understandable—the public struggles to differentiate between AI-generated and human-made images. A German study found that people often misidentify AI-generated images as being human-created and regard human-made images as more realistic and trustworthy. The gap between perception and reality poses a significant risk. Audiences believe they can “spot the fake,” but often they are unable to. The solution isn’t to make readers digital forensics experts. It’s for newsrooms to take on the responsibility of verification and provide clear signals.

AI trust in news increases when credibility is scarce

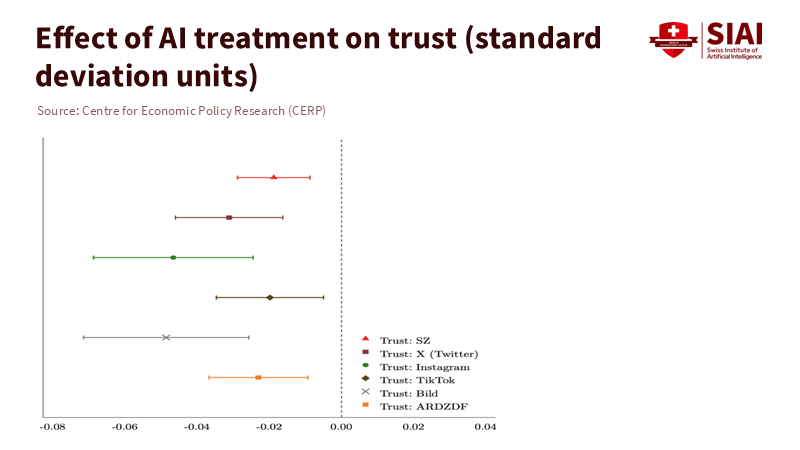

The Süddeutsche Zeitung field experiment is particularly informative because it tracks behavior, not just beliefs. When readers were shown how challenging it is to distinguish between AI and reality, their trust in many outlets declined. However, their use of a trusted outlet increased along with their likelihood of maintaining their subscriptions. Scarcity economics explains this phenomenon. As believable forgeries flood the market, the value of a verified source rises. This doesn’t suggest that scaring audiences is a sound growth strategy. Instead, it means trust in AI news grows for brands that demonstrate their filters work, provide transparency, and uphold their commitments when mistakes happen. A newsroom that is clear about where AI contributes and where humans make decisions earns something audiences will pay for: assurance that someone with a name and a standard has checked the next thing they see.

This logic extends beyond a single publisher. Both global and German data support this view. The Reuters Institute’s surveys indicate widespread discomfort with AI-generated news, particularly on sensitive topics such as politics, alongside a preference for human-led production that uses AI as a tool. The key is not to ban the tool but to set limits, topic by topic, and to translate those limits into product signals that readers can easily recognize. If the story involves elections, immigration, health, or war, it should be written and edited by humans, with any AI assistance clearly disclosed. For sports recaps or weather reports, a different approach may be acceptable if labeled appropriately. The data suggest that when newsrooms align with audience concerns about risk, engagement does not decrease. Instead, it shifts to the outlets that clarify their processes.

Regulation and provenance can turn AI trust in news into practice

Regulation now supports this understanding. The EU AI Act entered into force on August 1, 2024, and phases in obligations through August 2, 2026. Prohibitions and AI literacy requirements have applied since February 2, 2025, and rules for general-purpose AI models since August 2, 2025. Importantly, Article 50 mandates transparency for AI-generated or manipulated content. This includes visible notices and, where possible, technical markings to inform users when they interact with AI or encounter synthetic media. German legal guidance reflects this stance. For newsrooms, this translates to clear labels for AI-generated visuals, consistent disclosures when AI assists with text, and internal logs to demonstrate compliance. These steps are not mere formalities; they deliver what audiences are asking for: disclose the machine, show the chain of custody, and keep humans in charge.

Provenance technology makes this promise verifiable. The C2PA standard, known as Content Credentials, allows publishers to attach cryptographically signed “nutrition labels” to images, as well as to videos and audio. Platforms and infrastructure are evolving: Cloudflare now supports Content Credentials across its network, and Adobe’s free web app enables creators and newsrooms to apply and check credentials at scale. Major companies, such as Google, are also incorporating provenance indicators into their consumer products. This isn’t foolproof; metadata can be stripped. However, with watermark “soft binding” and growing cross-platform support, the baseline for trust is improving. In a world where trust in AI news depends on proof, provenance technology makes that trust something a reader can click.

(Method note: To roughly translate the SZ effect into a newsroom ROI, consider a publisher with 200,000 digital subscribers, €180 ARPU/year, and an annual churn rate of 9%. A 1.1% relative reduction in churn lowers it to around 8.90%, preserving about 198 subscriptions (200,000 × 0.09 × 0.011) or roughly €35,640 in annual revenue, before factoring in any costs for provenance or labeling. This estimate uses the study’s retention effect sizes and a linear scaling; actual results will vary based on audience mix and pricing.)
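
The arithmetic in the method note can be sketched in a few lines. This is a minimal illustration, assuming the effect is read strictly as a 1.1% relative reduction in the annual churn rate and scaled linearly; the function name `churn_scenario` and its parameter names are hypothetical and not taken from the study.

```python
def churn_scenario(subscribers, arpu_eur, annual_churn, relative_churn_reduction):
    """Back-of-envelope linear scaling of a retention effect to revenue.

    Assumption: the effect is a relative reduction of the churn rate,
    e.g. 0.011 means churn falls by 1.1% of its current value.
    """
    new_churn = annual_churn * (1 - relative_churn_reduction)
    # Subscribers who would have churned at the old rate but stay at the new one.
    preserved = subscribers * (annual_churn - new_churn)
    # Gross revenue preserved per year, before any provenance or labeling costs.
    revenue_eur = preserved * arpu_eur
    return new_churn, preserved, revenue_eur


new_churn, preserved, revenue_eur = churn_scenario(
    subscribers=200_000,
    arpu_eur=180,
    annual_churn=0.09,
    relative_churn_reduction=0.011,
)

print(f"churn after effect: {new_churn:.2%}")           # 8.90%
print(f"subscriptions preserved: {preserved:.0f}")      # 198
print(f"revenue preserved: EUR {revenue_eur:,.0f}")     # EUR 35,640
```

Under this reading the inputs give about 198 preserved subscriptions and about €35,640 per year. Changing the churn rate or ARPU shifts the result proportionally, which is why the estimate is best treated as an order-of-magnitude guide.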

Designing the human–AI balance that sustains AI trust in news

The right balance combines human leadership, AI assistance, and context awareness. The signals from Germany’s audience are clear: only 4% use AI chatbots weekly for news, and comfort with AI increases only when humans guide the process. Editors should establish tiered guidelines based on topic sensitivity and make them publicly available. For sensitive subjects—such as elections, migration, health, and conflict—content should be human-written and edited, with AI limited to research support or translation, and this should always be disclosed. For low-risk topics, such as structured sports summaries, weather, and financial news, more automation may be acceptable if it is accompanied by provenance and a named editor’s approval. Across the site, a single “How we use AI” page should detail the tools, use cases, safeguards, and an error-correction protocol. The central promise is straightforward: humans decide, machines assist, and readers can see the difference.
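
The tiered guideline described above could be encoded as a small rule table that a CMS checks before publication. The sketch below is purely illustrative: the topic lists mirror the examples in the text, while the names `tier_for` and `check_story`, the labels for AI uses, and the choice to treat unlisted topics as strictly as sensitive ones are all assumptions rather than an established newsroom standard.

```python
# Hypothetical tiers; topic names mirror the examples in the text.
SENSITIVE_TOPICS = {"elections", "migration", "health", "conflict"}
LOW_RISK_TOPICS = {"sports", "weather", "finance"}

# On sensitive topics, AI is limited to support work, never drafting.
SENSITIVE_ALLOWED_USES = {"research", "translation"}


def tier_for(topic):
    """Map a topic to a tier: 'sensitive', 'low_risk', or 'unlisted'."""
    key = topic.strip().lower()
    if key in SENSITIVE_TOPICS:
        return "sensitive"
    if key in LOW_RISK_TOPICS:
        return "low_risk"
    return "unlisted"


def check_story(topic, ai_uses, disclosed, named_editor, has_provenance):
    """Return a list of guideline violations; an empty list means OK."""
    violations = []
    ai_uses = set(ai_uses)

    # Disclosure is required in every tier whenever AI was involved.
    if ai_uses and not disclosed:
        violations.append("AI assistance must be disclosed")

    if tier_for(topic) == "low_risk":
        # More automation is acceptable, but only with provenance and sign-off.
        if ai_uses and not has_provenance:
            violations.append("automated content needs provenance")
        if ai_uses and not named_editor:
            violations.append("automated content needs a named editor's approval")
    else:
        # Assumption: unlisted topics are handled like sensitive ones.
        extra = ai_uses - SENSITIVE_ALLOWED_USES
        if extra:
            violations.append(
                "AI use not allowed on sensitive topic: " + ", ".join(sorted(extra))
            )
    return violations


print(check_story("weather", {"drafting"}, True, "J. Doe", True))
print(check_story("elections", {"drafting"}, True, "J. Doe", True))
```

Defaulting unlisted topics to the strict tier is a deliberately conservative design choice: a new or ambiguous beat gets human-only writing until an editor explicitly classifies it.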

Trust also grows when newsrooms treat readers as partners in the verification process. Give audiences a “content credentials” icon they can inspect. Add explanatory text the first time an AI label appears. Keep a visible correction log that distinguishes human errors from model errors, so the source of each error is clear. Invest in newsroom training so each editor can read a C2PA manifest and identify a missing signature. Collaborate with regulators and peers on shared standards before the next election cycle. Germans don’t want to verify provenance on their own, nor should they have to. In surveys, 60% express uncertainty about the trustworthiness of online content because AI may be used to generate it, and 67% fear that elections could be influenced by AI-generated content. Newsrooms can ease those worries by making every step of their process clear and then being consistently reliable.

The public’s message is clear. Most Germans feel uneasy about AI-generated news, yet they support brands that prove their value during times of misinformation. The most significant statistic in this discussion remains the 54% of respondents who are uncomfortable with AI-led news. It captures a caution that comes from experience: people have encountered too many altered images and false claims. However, the Süddeutsche Zeitung experiment highlights the other side: when uncertainty rises, credibility becomes more valuable, not less. The path forward is practical and straightforward. Keep humans in charge of the topics that shape democracy. Clearly state when and how AI assists, every time. Ensure provenance data stays intact as content moves from the CMS to the feed. Meet the EU’s transparency rules as a genuine product feature, not just a regulatory checkbox. If editors and policymakers take these actions, trust in AI-powered news can grow even as synthetic media becomes more prevalent. Trust isn’t a longing for pre-AI journalism; it’s a commitment we can build, label by label and decision by decision, until readers no longer have to wonder.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Adobe. (2024, October). Introducing Adobe Content Authenticity: A free web app to help creators protect their work, gain attribution and build trust. Adobe Newsroom.

Adobe. (2025, April). Adobe Content Authenticity, now in public beta. Adobe Blog.

Campante, F., Durante, R., Hagemeister, F., & Sen, A. (2025, September 16). AI misinformation and the value of trusted news. VoxEU/CEPR.

CISPA Helmholtz Center for Information Security. (2024, May 21). Humans barely able to recognize AI-generated media. Press release.

Coalition for Content Provenance and Authenticity (C2PA). (2025). C2PA Technical Specification; Content Credentials overview.

Cloudflare. (2025, February). Cloudflare is making it easier to track authentic images online. The Verge coverage.

European Commission. (2024–2026). AI Act: Application timeline and obligations. Shaping Europe’s Digital Future.

Heuking Kühn Lüer Wojtek. (2025, May 7). Artificial Intelligence: These transparency obligations must be observed.

KPMG. (2025, May). Trust, attitudes and use of AI — Germany snapshot. KPMG International.

Leibniz Institute for Media Research (HBI). (2024, June 17). Reuters Institute Digital News Report 2024 — Findings for Germany.

Leibniz Institute for Media Research (HBI). (2025, June 17). German findings of the Reuters Institute Digital News Report 2025.

White & Case. (2024, July 16). Long-awaited EU AI Act becomes law after publication in the EU’s Official Journal.