European Economics Journals Reform: Build Quality Before Prestige

European economics journals reform must reward method and openness over brand prestige.
Tie hiring and grants to reproducibility: open code, preregistration, independent replications.
Build EU benchmarks and nimble society journals so reliable work earns global reach.

Recent research on authorship in economics journals indicates that approximately two-thirds of articles in the top tier are written by scholars based in the United States. At the very top, this share has remained essentially unchanged; representation from other countries has increased primarily in journals outside the top 25. This pattern points to a pressing policy problem for Europe, and it is why reform of European economics journals should prioritize method and governance over expanding brand recognition. If the field remains concentrated, adding more ranked lists or adopting an American hierarchy won't change incentives and might even reinforce them. Europe funds exceptional research, as evidenced by the robust number of European Research Council awards in 2024. Yet its evaluation system still favors a narrow range of credentials. The current challenge is to create a parallel, open, and method-focused pathway to recognition that can stand independently of existing frameworks.

European economics journals reform needs clearer incentives

Europe will not overcome gatekeeping by introducing more gates. The standard solution is to "broaden the list" of acceptable journals for hiring and promotion. While this can make a marginal difference, it still rewards the same approach: publishing where the label is well-known, not where the argument is strongest. Evidence suggests that reputational bottlenecks can have a significant impact on careers. A classic study indicates that the "top five" journals carry considerable weight in tenure decisions, even after accounting for a scholar's overall publication quality. More recent bibliometric work reveals that the share of non-U.S. authors has grown least in the highest-ranked journals and most in those ranked 100th or lower. Editorial power is also held by a small number of institutions and individuals at the top journals. Taken together, the evidence paints a clear picture: the competition is fierce at the top, its judges are few, and entry into the core is slow. Europe can challenge this by changing its evaluation criteria. Instead of simply expanding the list, universities and ministries should tie promotions to three measurable principles: transparent methods, open data and code, and confirmed robustness, regardless of the journal where the article is published. When hiring and funding calls embrace these principles, the focus shifts from "brand capture" to "research that withstands scrutiny." This change can be implemented during the current academic year.

Europe also has the capacity to enforce new incentives at scale. ERC Starting, Consolidator, and Advanced Grant statistics for 2024 highlight hundreds of awards across social sciences and humanities, with success rates approaching 14%. Horizon Europe has expanded this pool, and the U.K.'s re-association in 2024–2025 has further broadened the collaborative network. These programs can do more than finance projects—they can make reproducibility, registered reports, and code release the standard practice, and they can test the portability of "method points" across hiring committees. A candidate who earns ERC-verified badges for open code, pre-analysis plans, and external robustness checks should receive hiring credit, even if their paper appears in a respected but non-traditional venue. That is how a funding group becomes an evaluation group.

Method first, prestige later

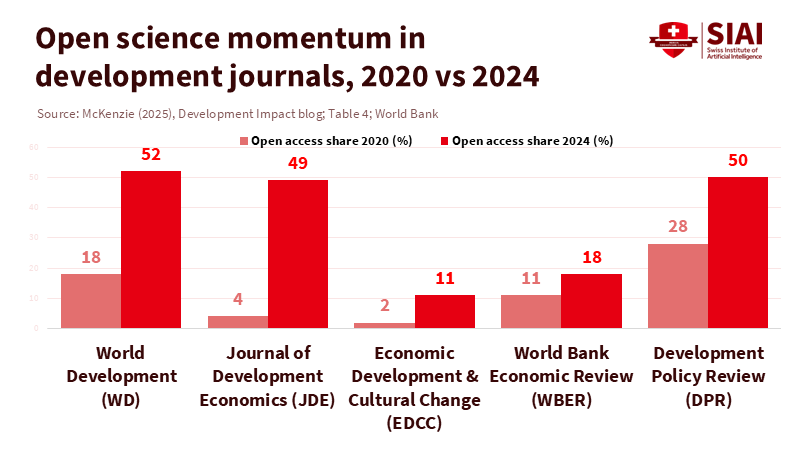

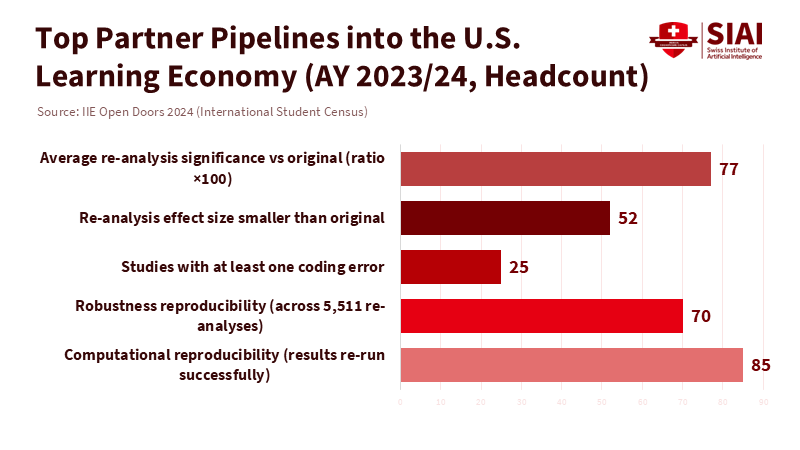

Reforming European economics journals must prioritize reliability over reputation. The replication record in social sciences is mixed but improving. Several multi-paper replication initiatives in 2024–2025 report success rates of approximately half to three-fifths, with average replication effect sizes smaller than those of the originals. In economics and political science, a recent large-scale reproducibility study found over 85% computational reproducibility when data editors review code and data, and roughly 70% robustness in re-analyses. Leading journals have tightened their policies: mandatory data and code disclosures, pre-publication checks, and, in some cases, registered-report tracks. Europe should adopt these standards as a baseline across its hiring and promotion systems, then elevate them with three enhancements: a pan-European replication fund to pay teams that re-analyze accepted papers; a "negative results + corrections" section in society journals; and a public dashboard that tracks code availability, re-run outcomes, and robustness changes for funded work. The message to early-career scholars becomes clear: they will be rewarded for robust arguments, not for being associated with specific journals.

Quality control is not a matter of culture; it is a matter of operational effectiveness. Desk-rejection rates at leading development journals average approximately 72%, with acceptance rates after external review at around 39%. In some journals, overall acceptance can drop as low as 3%. Submissions have surged since 2016, putting pressure on editorial capacity. In this environment, speed and fairness stem from a disciplined process: short-paper tracks with binary decisions, editorial triage to verify design preregistration, and strict time limits on initial decisions. Society-run journals have demonstrated how quickly a reputation can improve when rigorous processes are in place. Seven new society journals from major associations have rapidly climbed in rankings since their launch, primarily because they combined reliable editors, clear goals, and enforceable policies, rather than chasing brand recognition. Europe can adopt a similar strategy with the EEA, RES, CEPR, and national societies, putting operational excellence and method standards first and allowing prestige to follow.
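The two averages quoted above can be combined into a rough overall acceptance rate. The sketch below assumes, as an illustration only, that the 39% figure applies to papers that survive desk rejection and that the two averages can simply be multiplied; averages taken across different journals do not strictly compose this way, so the result is indicative rather than a reported statistic. The function name is hypothetical.

```python
def overall_acceptance_rate(desk_rejection_rate: float,
                            acceptance_after_review: float) -> float:
    """Share of all submissions accepted in a two-stage editorial process.

    Assumption (not from the source): acceptance_after_review is measured
    only among papers that were sent out for external review.
    """
    for rate in (desk_rejection_rate, acceptance_after_review):
        if not 0.0 <= rate <= 1.0:
            raise ValueError("rates must be between 0 and 1")
    share_sent_to_review = 1.0 - desk_rejection_rate
    return share_sent_to_review * acceptance_after_review


# Averages quoted in the text: about 72% desk rejection, about 39% acceptance after review.
implied = overall_acceptance_rate(0.72, 0.39)
print(f"Implied overall acceptance: {implied:.1%}")
```

Under these assumptions roughly one submission in nine is eventually accepted, which sits well above the 3% floor mentioned for the most selective journals.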

European economics journals reform: from gatekeeping to benchmarking

One obvious critique is that a European "board of boards" might risk becoming too insular. If it creates its own top five, the publications may cater only to themselves. While this concern is valid, it does not justify maintaining a system that inadequately rewards rigorous work published outside a select few U.S.-based journals. The solution is to benchmark goals externally while governing internally. Benchmark externally by aligning European evaluations with neutral, field-level indicators that are challenging to manipulate: independent replication outcomes, verified code re-runs, and citation-distribution metrics that lessen the impact of celebrity outliers. Govern internally by rotating editors from various countries and institutions, limiting consecutive terms, and publishing anonymized decision statistics based on field, method, gender, and geography. We already possess datasets that facilitate broad editorial transparency. These should be used to audit how boards operate, not just what they publish.
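To illustrate what a citation-distribution metric that "lessens the impact of celebrity outliers" could look like, here is a minimal sketch comparing a plain mean with a median and a winsorized mean. The citation counts are invented for illustration, and the 90th-percentile cap and the function name are assumptions, not a metric proposed in the article or used by any funder.

```python
from statistics import mean, median


def citation_summary(citations: list[int], cap_percentile: float = 0.9) -> dict[str, float]:
    """Summarize citation counts with outlier-resistant statistics.

    The winsorized mean caps every count at the value found at
    cap_percentile of the sorted list (an illustrative choice).
    """
    if not citations:
        raise ValueError("citations must not be empty")
    ordered = sorted(citations)
    cap_index = int(cap_percentile * (len(ordered) - 1))
    cap = ordered[cap_index]
    winsorized = [min(count, cap) for count in citations]
    return {
        "mean": mean(citations),
        "median": median(citations),
        "winsorized_mean": mean(winsorized),
    }


# Hypothetical department: nine ordinary papers and one "celebrity" paper.
hypothetical_counts = [2, 3, 4, 5, 5, 6, 7, 8, 9, 451]
summary = citation_summary(hypothetical_counts)
print(summary)
```

In this made-up example a single heavily cited paper pulls the plain mean to 50, while the median and the winsorized mean stay close to what a typical paper in the set receives. That is the property an externally benchmarked indicator would need.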

Another critique suggests that methodology is just one element; novelty remains a crucial factor. This is true. However, novelty without design discipline is weak. The aim should be to make registered reports and pre-analysis plans standard practices in empirical fields and to create fast tracks for papers focused on clean identification and careful measurement, even if they are not groundbreaking. The goal is to create a portfolio that values both innovation and verification. Recent replication efforts have demonstrated that many published results can be reproduced computationally; however, the effect sizes often diminish in magnitude. An evaluation framework that counts robustness alongside publications will encourage researchers to pursue robust designs. It will also hasten corrections when results do not hold.

A third critique warns that Europe may be overlooked. Yet visibility follows usefulness. If European journals become the best places to learn how to conduct credible work—and the most reliable repositories for reusable code and clean data—global readership will grow. The rise of new society journals in recent years illustrates how quickly a journal can gain influence by addressing the genuine concerns of authors and readers. With a European replication fund and ERC-style badges that hold weight in hiring decisions, a respected alternative path will emerge. This will also offer scholars from Asia, Africa, and Latin America a more equitable route into the mainstream—one that focuses more on skill than geography. This matters because global authorship at top journals has not shown significant diversification. A method-first Europe can open doors for everyone.

The practical steps are clear. First, ministries and university leaders should revise promotion criteria this year to recognize verified reproducibility and open-science compliance as key indicators of quality. Second, European societies should establish or enhance short-format journals with rigorous selection processes, explicit scopes, and dedicated data editing teams. Third, major funding bodies should finance independent re-analyses of a select number of accepted papers and publish the results with DOIs, allowing authors to reference them. Fourth, editorial boards should implement term limits, disclose their conflict-of-interest policies, and provide annual statistics on decision-making by subfield. Lastly, Europe should leverage its funding capacity to establish a permanent "benchmarks board" to maintain shared metrics for robustness, data availability, and code clarity across journals. Each step may seem small, but together they can transform the landscape.

The stakes are as much about education as they are about scholarship. Economics courses teach by example. When graduate students see that careers depend on a narrow range of journal logos, they learn to optimize for labels. When they see public code, careful design, and credit for replication, they focus on the quality of work. This shift affects how we teach statistics, train research teams, and engage with findings in ministries and central banks. In a fast-paced policy environment, we require research that is clear, reusable, and easily revisable. Europe can lead by not simply mirroring an established hierarchy but by redefining the standards of quality.

In summary, build a standard worth following. The problem we face is straightforward: the top journals are primarily controlled by one geographical region, and diversification at the very top has progressed slowly. Simply expanding a list won't change that. Setting method-first incentives can. Europe has the funding and institutional reach to connect careers to reliability: open code, reproducible designs, and external validation. It can enforce these standards in grants, reward them in hiring, and incorporate them in journals that operate with efficiency and clarity. If these changes occur, prestige will follow because users—scholars, educators, and policymakers—will gravitate toward what serves them best. The call to action is therefore clear: adopt method-first promotion criteria in 2025–2026; establish replication budgets within ERC and Horizon frameworks; and require every European department to publish its evaluation rules before the next hiring round. The mainstream is not a fact of life; it is a set of incentives that shape our perceptions and influence our understanding of reality. Change those incentives, and the landscape will change accordingly.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Aigner, E., Greenspon, J., & Rodrik, D. (2025). The global distribution of authorship in economics journals. World Development (advance online).

Ankel-Peters, J., Knaus, T., & Lohse, J. (2025). Is economics self-correcting? Replications in the American Economic Review, 2010–2020. Economic Inquiry.

Brodeur, A., Leeper, T. J., & Miguel, E. (2024). Promoting reproducibility and replicability in political science (Working paper; pooled replication rate ≈50%).

Greenspon, J., & Rodrik, D. (2021). A note on the global distribution of authorship in economics journals (CEPR DP16676).

Ductor, L., Goyal, S., & Prummer, A. (2023). Concentration of power at the editorial boards of economics journals. Journal of Economic Surveys.

Ham, J. C., et al. (2023). Documenting and explaining the dramatic rise of the new society journals in economics (IZA DP16337).

Hamermesh, D. S. (2012). Six decades of top economics publishing: Who and how? (NBER Working Paper 18635).

Heckman, J. J., & Moktan, S. (2018/2019). Publishing and promotion in economics: The tyranny of the top five (NBER Working Paper 25093; revised 2019). See also AEA Research Brief (2020).

Hylmö, A., et al. (2024). The quality landscape of economics: The top five and beyond. Research Evaluation.

Journals' data and code policies. American Economic Association (policy page) and Journal of Political Economy (data policy).

McKenzie, D. (2025). The state of development journals 2025: Quality, acceptance rates, review times, open access, and what's new. World Bank Development Impact blog.

Holzmeister, F., et al. (2025). Examining the replicability of online experiments selected by a decision market. Nature Human Behaviour.

ERC (2024–2025). ERC Starting, Consolidator, and Advanced Grants—Statistics.

VoxEU (2025). Reforming the education of economists in Europe: Breaking the tyranny of the top five.