Education and the AI Bubble: Talk Isn't Transformation

The AI bubble rewards talk more than results. Schools should pilot, verify, and buy only proven gains using LRAS and total-cost checks. Train teachers, price energy and privacy, and pay only for results that replicate.

A single number should make us pause: 287. That's how many S&P 500 earnings calls in one quarter mentioned AI, the highest in a decade and more than double the five-year average. However, analysts note that for most companies, profits directly linked to AI are rare. That gap between mentions and profits is a classic sign of an AI bubble. Education is right in the middle of it. Districts are getting pitches that reflect market excitement. If stock prices can rise based on just words, so can school budgets. We cannot let talk replace real change. The AI bubble must not become our spending plan. The first rule is simple: talk does not equal transformation; improved learning outcomes do. The second is urgent: establish strict criteria before making large expenditures. If we make a mistake, we risk sacrificing valuable resources for headlines and later having to explain to parents why the results never materialized. The AI bubble is real, and schools must avoid inflating it. Setting a high bar for AI adoption is how they do that.

The AI bubble intersects with the classroom

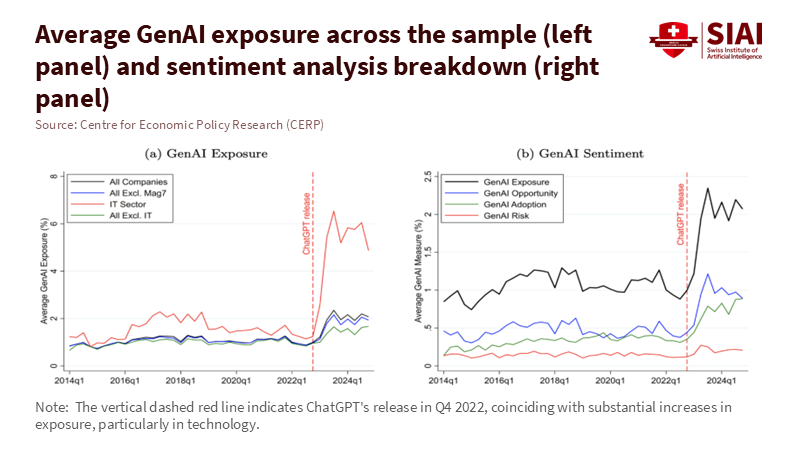

We need to rethink the discussion around incentives. Markets reward mentions of AI, and schools might imitate this behavior, focusing on flashy announcements instead of steady progress. It's easy to find evidence of hype. FactSet shows a record number of AI references on earnings calls. The Financial Times and other sources report that many firms still struggle to clearly articulate the benefits in their filings, despite rising capital spending. At the same time, the demand for power in AI data centers is expected to more than double by 2030, with the IEA estimating global data-center electricity use to approach 945 TWh by the end of the decade. These are the real costs of pursuing uncertain benefits. When budgets tighten, education systems are often the first to cut long-term investments, such as teacher development and student support, in favor of short-term solutions. That is the bubble's logic. It rewards talk while postponing proof.

But schools are not standing still. In the United States, the number of districts training teachers to use AI nearly doubled in a year, from 23% to 48%. However, the use among teachers is still uneven. Only about one in four teachers reported using AI tools for planning or instruction in the 2023-24 school year. In the UK, the Department for Education acknowledges the potential of AI but warns that evidence is still developing. Adoption must ensure safety, reliability, and teacher support. UNESCO's global guidance offers a broader perspective: proceed cautiously, involve human judgment, protect privacy, and demand proof of effectiveness. This approach is appropriate for a bubble. Strengthen teacher capacity and establish clear boundaries before scaling up purchases. Do not let vendor presentations replace classroom trials. Do not invest in "AI alignment" if it doesn't align with your curriculum. Thorough evaluation is key before scaling up AI investments, and it's our responsibility to ensure it's done diligently.

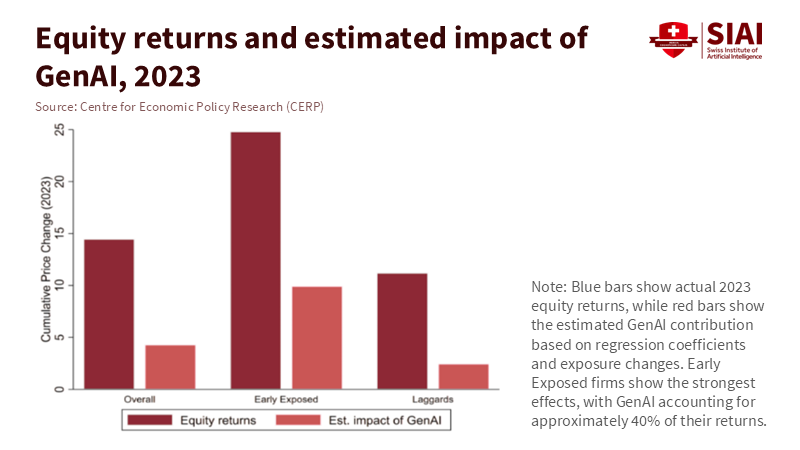

The macro signals send mixed messages. On the one hand, investors are pouring money into infrastructure, while the press speculates about a potential AI bubble bursting. On the other hand, careful studies report productivity gains under the right conditions. A significant field experiment involving 758 BCG consultants found that access to GPT-4 improved output and quality for tasks within the model's capabilities, but performance declined on tasks beyond its capabilities. MIT and other teams report faster, better writing on mid-level tasks; GitHub states that completion times with Copilot are 55% faster in controlled tests. Education must navigate both truths. Gains are real when tasks fit the tool and the training is robust. Serious risks arise if errors go unchecked or when the task is inappropriate. The bubble grows when we generalize from narrow successes to broad changes without verifying whether the tasks align with schoolwork.

From hype to hard metrics: measuring the AI bubble's learning ROI

The main policy mistake is treating AI like a trend rather than a learning tool. We should approach it in the same way we do any educational resource. First, define the learning return on AI investment (LRAS) as the expected learning gains or verified teacher hours saved per euro, minus the costs of training and integration. Keep it straightforward. Imagine a district is considering a €30 monthly license per teacher. If the tool reliably saves three teacher hours each week and the loaded hourly cost is €25, the time savings alone are worth €75 per teacher per week, or roughly €300 per teacher per month, about ten times the license fee. This looks promising, but only if it is validated within your context rather than taken from vendor case studies. Measurement method: track time saved with basic time-motion logs and random spot checks; compare with student outcomes where relevant; discount self-reported savings by 25% to account for optimism bias.
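The arithmetic in this example can be written down as a small calculation. The sketch below is one possible reading of the LRAS definition, not a standard formula: it values time saved in euros, applies the 25% discount to self-reported savings, and compares the result with the license plus any training cost. The class name, the field names, the four-weeks-per-month figure, and the training-cost field are assumptions added for illustration.

```python
from dataclasses import dataclass


@dataclass
class PilotInputs:
    license_per_month: float        # euros per teacher per month
    hours_saved_per_week: float     # hours saved per teacher per week
    loaded_hourly_cost: float       # euros per teacher hour
    weeks_per_month: float = 4.0    # assumption: about four working weeks
    optimism_discount: float = 0.25  # discount on self-reported savings
    training_per_month: float = 0.0  # hypothetical amortized training cost


def monthly_time_value(p: PilotInputs, self_reported: bool = True) -> float:
    """Euro value of teacher time saved per month."""
    hours = p.hours_saved_per_week * p.weeks_per_month
    if self_reported:
        # Self-reports tend to be optimistic, so shrink them.
        hours *= 1.0 - p.optimism_discount
    return hours * p.loaded_hourly_cost


def net_monthly_return(p: PilotInputs, self_reported: bool = True) -> float:
    """Time value minus license and training costs, per teacher per month."""
    cost = p.license_per_month + p.training_per_month
    return monthly_time_value(p, self_reported) - cost


def value_per_euro(p: PilotInputs, self_reported: bool = True) -> float:
    """Euros of time value returned per euro spent."""
    cost = p.license_per_month + p.training_per_month
    return monthly_time_value(p, self_reported) / cost


example = PilotInputs(license_per_month=30, hours_saved_per_week=3,
                      loaded_hourly_cost=25)
print(monthly_time_value(example, self_reported=False))  # 300.0
print(monthly_time_value(example))                       # 225.0
print(net_monthly_return(example))                       # 195.0
```

With the figures from the text, logged savings are worth €300 per teacher per month; if the three hours are self-reported, the discounted value is €225, which still leaves €195 after the €30 license. A real pilot would replace these inputs with the district's own time logs and training costs.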

This approach also applies to student learning. A growing body of literature suggests that well-structured AI tutors can enhance outcomes. Brookings highlights randomized studies showing that AI support led to doubled learning gains compared to strong classroom models; other trials indicate that large language model assistants help novice tutors provide better math instruction. However, the landscape is uneven. The BCG field experiment cautions that performance declines when tasks exceed the model's strengths. In a school context, utilize AI for drafting rubrics, generating diverse practice problems, and identifying misunderstandings; however, verify every aspect related to grading and core content. Require specific outcome measures for pilot programs—such as effect sizes on unit tests or reductions in regrade requests—and only scale up if the improvements are consistent across schools.
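One way to make "effect sizes on unit tests" and "consistent across schools" concrete is a standardized mean difference (Cohen's d) computed per school, followed by a simple scaling rule. The sketch below is illustrative only: the function names and the 0.2 threshold are assumptions, and a real evaluation would also report uncertainty (for example, confidence intervals) rather than point estimates alone.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(treated: list[float], control: list[float]) -> float:
    """Standardized mean difference using the pooled standard deviation."""
    n1, n2 = len(treated), len(control)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two scores")
    s1, s2 = stdev(treated), stdev(control)
    pooled = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    if pooled == 0:
        raise ValueError("scores have no variance; effect size is undefined")
    return (mean(treated) - mean(control)) / pooled


def should_scale(effect_by_school: dict[str, float],
                 threshold: float = 0.2) -> bool:
    """Scale only if every pilot school meets the (assumed) threshold."""
    return all(d >= threshold for d in effect_by_school.values())


# Made-up unit-test scores for two pilot schools.
effects = {
    "School A": cohens_d([2, 4, 6], [1, 3, 5]),
    "School B": cohens_d([3, 4, 5], [3, 4, 5]),
}
print(effects)                # School A: 0.5, School B: 0.0
print(should_scale(effects))  # False: the gain did not replicate in School B
```

Requiring every school to clear the bar is deliberately strict; a district could instead require a minimum share of schools, as long as the rule is fixed before the pilot starts.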

Now consider the system costs. Data centers consume power; power costs money. The IEA forecasts that global data-center electricity use could more than double by 2030, with AI driving much of this growth. Local impacts are significant. Suppose your region faces energy limitations or rising costs. In that case, AI services might come with hidden "energy taxes" reflected in subscription fees. The Uptime Institute reports that operators are already encountering power limitations and rising costs due to the demand for AI. A district that commits to multi-year contracts during an excitement phase could lock in higher prices just as the market settles.

Finally, compare market signals with what's happening on the ground. FactSet indicates a record-high number of AI mentions; Goldman Sachs notes a limited direct profit impact so far; The Guardian raises questions about the dynamics of the bubble. In education, HolonIQ is tracking a decline in ed-tech venture funding in 2024, the lowest since 2014, despite an increase in AI discussions. This disparity illustrates a clear point. Talk is inexpensive; solid evidence is costly. If investment follows the loudest trends while schools chase the noisiest demos, we deepen the mistake. A better approach is to conduct narrow pilots, evaluate quickly, and scale carefully.

A better approach than riding the AI bubble

Prioritize outcomes in procurement. Use request-for-proposal templates that require vendors to clearly define the outcome they aim to achieve, specify the unit of measurement they will use, and outline the timeline they will follow. Implement a step-by-step rollout across schools: some classrooms utilize the tool while others serve as controls, then rotate. Keep the test short, transparent, and equitable. Insist that vendors provide raw, verifiable data and accept external evaluations. Consider dashboards as evidence only if they align with independently verified metrics. This is not red tape; it's protection against hype. UK policy experiments are shifting towards this approach, emphasizing a stronger evidence base and guidelines that prioritize safety and reliability. UNESCO's guidance is explicit: human-centered, rights-based, evidence-driven. Include that in every contract.
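The staggered rollout described above can be planned with a few lines of code. The sketch below builds a stepped-wedge style schedule under stated assumptions: classrooms are randomly assigned to waves, every classroom starts as a control, and one more wave switches to the tool in each period. The function name and parameters are hypothetical, and the design is one simple variant, not a prescribed protocol.

```python
import random


def stepped_wedge_schedule(classrooms: list[str], n_waves: int,
                           seed: int = 0) -> dict[str, list[str]]:
    """Return, for each classroom, its condition in each period.

    Period 0 is an all-control baseline. Wave w (0-based) switches to
    the tool from period w + 1 onward, so there are n_waves + 1 periods.
    """
    if n_waves < 1:
        raise ValueError("n_waves must be at least 1")
    rng = random.Random(seed)  # fixed seed keeps the assignment auditable
    shuffled = list(classrooms)
    rng.shuffle(shuffled)
    # Deal classrooms into waves round-robin so wave sizes differ by at most 1.
    waves = [shuffled[i::n_waves] for i in range(n_waves)]
    n_periods = n_waves + 1
    schedule = {}
    for w, group in enumerate(waves):
        for room in group:
            schedule[room] = ["control" if period <= w else "tool"
                              for period in range(n_periods)]
    return schedule


rooms = ["5A", "5B", "6A", "6B", "7A", "7B"]
for room, plan in sorted(stepped_wedge_schedule(rooms, n_waves=3).items()):
    print(room, plan)
```

With three waves this produces four periods, which fits a four-week trial with weekly steps. Because every classroom is observed both without and with the tool, each one serves as its own comparison, and no classroom is permanently denied access.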

Prepare teachers before expanding tool usage. RAND surveys indicate forward movement alongside gaps. Districts have doubled their training rates year over year, but teacher use remains uneven, and many schools lack clear policies. The solution is practical. Provide short, scenario-based workshops linked to essential routines, including planning, feedback, retrieval practice, and formative assessments. Connect each scenario to what AI excels at, what it struggles with, and what human intervention is necessary. Use insights from the BCG framework: workers performed best with coaching, guidelines, and explicit prompts. Include a "do not do this" list on the same page. Then align incentives. Acknowledge teams that achieve measurable improvements and simplify their templates for others to follow.

Address energy and privacy concerns from the outset. Require vendors to disclose their data retention practices, training usage, and model development; select options that allow for local or regional processing and provide clear procedures for data deletion. Include energy-related costs in your total cost of ownership, because the IEA and others anticipate surging demand for data centers, and operators are already reporting energy constraints. This risk might manifest as higher costs or service limitations. Procurement should factor this in. For schools with limited bandwidth or unreliable power, offline-first tools and edge computing can be more reliable than always-online chatbots. If a tool needs live connections and heavy computing, prepare fallback lessons in advance.

A steady transformation

Anticipate the main critique. Some may argue we're underestimating the potential benefits of AI and that it could enhance productivity growth across the economy. The OECD's 2024 analysis estimates AI could raise aggregate TFP by 0.25-0.6 percentage points a year in the coming years, with labor productivity gains being somewhat higher. This is not bubble talk; it represents real potential. Our response is not to slow down unnecessarily but to speed up in evaluating what works. When reliable evidence emerges—such as an AI assistant that consistently reduces grading time by a third without increasing errors, or a tutor that achieves a 0.2-0.3 effect size over a term—we should adopt it, support it, and protect the time it saves. We aim for acceleration, not stagnation.

A second critique suggests schools fall behind if they wait for perfect evidence. That is true, but it doesn't represent our proposal. The approach is to pilot, validate, and then expand. A four-week stepped-wedge trial doesn't indicate paralysis; it shows momentum while retaining lessons learned. It reveals where the frontier lies in our own context. The findings on the "jagged frontier" illustrate why this is crucial: outputs improve when tasks align with the tool, and fall short when they don't. The more quickly we identify what works for each subject and grade, the more rapidly we can expand successes and eliminate failures. This is how we prevent investing in speed without direction.

A third critique may assert that the market will resolve these issues. That is wishful thinking within a bubble. In public services, the costs of mistakes are shared, and the benefits are localized. If markets reward mentions of AI regardless of the outcome, schools must do the opposite. Reward outcomes, irrespective of how much they are discussed. Ed-tech funding has already fallen from its 2021 peak, even as conversations about AI grow louder. This discrepancy serves as a warning. Build capacity now. Train teachers now. Create contracts that pay only for measured improvements, and design audits that can verify them. The bubble may either burst or mature. In either case, schools that focus on outcomes will be fine. Those that do not will be left with bills and no visible gains.

Let's return to the initial number. Two hundred eighty-seven companies discussed AI in one quarter. Talk is effortless. Education requires genuine effort. The goal is to convert tools into time and time into learning. This means we must set high standards while keeping it straightforward: establish clear outcomes, conduct short trials, ensure accessible data, provide teacher training, and account for total costs, including energy and privacy considerations. We must align the jagged frontier with classroom tasks and resist broad claims. We need to build systems that develop slowly but scale quickly when proof arrives. The AI bubble invites us to purchase confidence. Our students need fundamental skills.

So, we change how we buy. We invest in results. We connect teachers with tools that demonstrate value. We do not hinder experimentation, but we are strict about what we retain. If the market values words, schools must prioritize evidence. The measure of our AI decisions will not be the number of mentions in reports or speeches. It will be the quiet improvement in a student's skills, the extra minutes a teacher gains back, and the budget allocations that support learning. Talk is not transformation. Let's make transformation the only thing we invest in.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Aldasoro, I., Doerr, S., Gambacorta, L., & Rees, D. (2024). The impact of artificial intelligence on output and inflation. Bank for International Settlements/ECB Research Network.

Boston Consulting Group (2024). GenAI increases productivity & expands capabilities. BCG Henderson Institute.

Business Insider (2025). Everybody's talking about AI, but Goldman Sachs says it's still not showing up in companies' bottom lines.

Carbon Brief (2025). AI: Five charts that put data-centre energy use and emissions into context.

FactSet (2025). Highest number of S&P 500 earnings calls citing "AI" over the past 10 years.

GitHub (2022/2024). Measuring the impact of GitHub Copilot.

Guardian (2025). Is the AI bubble about to burst – and send the stock market into freefall?

HolonIQ (2025). 2025 Global Education Outlook.

IEA (2025). Energy and AI: Energy demand from AI; AI is set to drive surging electricity demand from data centres.

Noy, S., & Zhang, W. (2023). Experimental evidence on the productivity effects of generative AI (Science; working paper). MIT Economics.

OECD (2024). Miracle or myth? Assessing the macroeconomic productivity gains from AI.

RAND (2025). Uneven adoption of AI tools among U.S. teachers; More districts are training teachers on AI.

UK Department for Education (2025). Generative AI in education: Guidance.

UNESCO (2023, updated 2025). Guidance for generative AI in education and research.

Uptime Institute (2025). Global Data Center Survey 2025 (executive summaries and coverage).

Dell’Acqua, F., et al. (2023). Navigating the jagged technological frontier: Field experimental evidence… (working paper). Harvard Business School / BCG.
