"No Reason, No Model": Making Networked Credit Decisions Explainable

Network credit models aren’t “inexplicable”: they can and must give faithful reasons. Adopt “no reason, no model” by requiring per-decision reason packets and auditable graph explanations. Regulators and institutions should enforce this operational XAI so that denials are accountable and contestable.

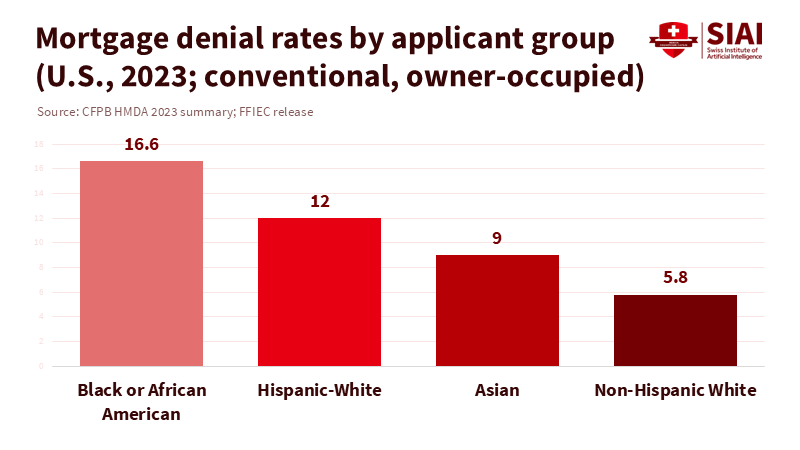

Consider this striking statistic: in 2023, the denial rate for Black applicants seeking conventional, owner-occupied home-purchase mortgages was 16.6%, nearly three times the 5.8% rate for non-Hispanic White applicants. These figures, reported under the Home Mortgage Disclosure Act (HMDA) across millions of applications, underscore a significant disparity. As lenders shift from traditional scorecards to deep-learning models that learn from relationships, denials increasingly stem from network patterns (co-purchases, shared devices, merchant clusters) rather than from a handful of applicant-level factors. Some institutions may label these systems "inexplicable." The law, however, does not accept complexity as an excuse. U.S. creditors are still required to provide specific reasons for denials, and Europe's AI Act now classifies credit scoring as a high-risk use, with phased requirements for logging and transparency. The central policy question is not accuracy versus explanation. It is whether we allow "the network made me do it" to shield lenders from explanation, responsibility, and learning, especially in education-related credit markets that determine who completes a degree, starts a program, or keeps a community college running.

We should change how we discuss this issue. Graph neural networks and other network models are not magic; they consist of linear transformations and simple nonlinear functions that pass messages along the edges of a graph. With common piecewise-linear activations such as ReLU, the model near any given decision behaves like a nested, piecewise-linear regression. This means you can identify the critical variables and subgraphs, even if doing so takes more work than stating "debt-to-income ratio too high." The real issue is operational: will lenders build systems that turn these technical explanations into clear, understandable reasons at the time of decision, or will we accept opacity because it is easier to claim that networks cannot be made clear?
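
To make the "piecewise-linear" point concrete, here is a minimal sketch in Python with NumPy. It assumes a hypothetical four-node graph with random weights, one mean-aggregation message-passing layer with ReLU, and a bias-free linear read-out for the applicant (node 0). Once the ReLU activation pattern at this input is fixed, the score is exactly linear in the node features, so it splits into per-node contributions that sum to the score. Node 3, two hops from the applicant, contributes nothing to a one-layer model. Real GNNs add biases, more layers, and learned aggregations, so treat this as an illustration of the principle, not a production explainer.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy graph: node 0 is the applicant; nodes 1-3 are linked entities.
A = np.array([[0, 1, 1, 0],
              [1, 0, 0, 1],
              [1, 0, 0, 0],
              [0, 1, 0, 0]], dtype=float)
A_hat = A + np.eye(4)                       # add self-loops
A_hat /= A_hat.sum(axis=1, keepdims=True)   # row-normalize: mean aggregation
X = rng.normal(size=(4, 3))                 # hypothetical node features
W1 = rng.normal(size=(3, 5))                # message-passing weights
w2 = rng.normal(size=5)                     # read-out weights (no biases)

def score(X):
    """One message-passing layer with ReLU, then a linear read-out for node 0."""
    H = np.maximum(A_hat @ X @ W1, 0.0)
    return float(H[0] @ w2)

# Freeze the ReLU pattern at this input: inside that region the model is linear.
active = (A_hat[0] @ X @ W1 > 0).astype(float)
coef = np.outer(A_hat[0], W1 @ (active * w2))   # d score / d X[j, k], shape (4, 3)
node_contrib = (coef * X).sum(axis=1)           # contribution of each node's features

print(node_contrib, node_contrib.sum(), score(X))
```

The decomposition is exact only inside the current activation region; a change large enough to flip a ReLU unit yields a different set of coefficients, which is why such explanations are described as local.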

From Rule-Based Reasons to Graph-Based Reasons

For many years, adverse-action notices resembled a short checklist. When a loan was denied, institutions typically cited a few standard reasons, such as insufficient income, a short credit history, or low collateral value. This worked because older underwriting models were additive and largely separable by feature. Network models change the process, not the obligation. When a GNN flags an application, it might be because the applicant is two steps away from a cluster of high-risk merchants, or because the applicant's transaction patterns resemble those that preceded defaults at a specific bank-merchant-device combination. Those are still reasons. Saying "the model is inexplicable" confuses a workflow choice with a mathematical impossibility. Regulation B, which implements ECOA, requires creditors to provide "specific reasons" for denials, and the CFPB has repeatedly warned that vague or generic reasons do not meet that standard, even when the model is AI. A regulator should therefore hear "GNN" and say, "Fine. Show me the subgraph and features that influenced the score, and translate them into a consumer-friendly reason."
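
A network reason such as "two steps away from a cluster of high-risk merchants" is a concrete, checkable claim. The sketch below, in Python, assumes a hypothetical transaction graph and a hypothetical set of flagged merchants, and uses breadth-first search to return the shortest path that would back such a statement. It does not reproduce how a GNN computes its score; it only shows that this kind of reason can be verified against the underlying data.

```python
from collections import deque

# Hypothetical transaction graph linking an applicant, devices, and merchants.
edges = [("applicant_42", "device_7"), ("device_7", "merchant_A"),
         ("applicant_42", "merchant_C"), ("merchant_C", "merchant_D")]
flagged = {"merchant_A", "merchant_B"}   # hypothetical high-risk merchant cluster

def build_adjacency(edge_list):
    """Undirected adjacency sets from an edge list."""
    adj = {}
    for u, v in edge_list:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj

def shortest_path_to_flagged(adj, source, targets, max_hops=3):
    """Breadth-first search: shortest path from source to any target, or None."""
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in targets:
            return path
        if len(path) - 1 >= max_hops:
            continue
        for nbr in sorted(adj.get(node, ())):   # sorted for a deterministic result
            if nbr not in seen:
                seen.add(nbr)
                queue.append(path + [nbr])
    return None

path = shortest_path_to_flagged(build_adjacency(edges), "applicant_42", flagged)
print(path, "hops:", len(path) - 1)
```

Here the returned path runs applicant_42, device_7, merchant_A: two hops, which is exactly the evidence a notice or an auditor would need to substantiate the reason.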

Technically, we know how to do this. LIME fits a simple surrogate model in the neighborhood of a single decision, and SHAP assigns each feature an additive contribution based on Shapley values. Integrated Gradients attributes a prediction to input features by accumulating gradients along a straight-line path from a baseline input. For graphs, GNNExplainer learns a compact subgraph and feature mask that most influence the prediction, while SubgraphX searches candidate subgraphs with Monte Carlo tree search and scores them by their Shapley values. Recent benchmarks in Scientific Data report that subgraph-based explainers produce more faithful and less misleading explanations than gradient-only methods, and newer frameworks such as GraphFramEx aim to standardize evaluation. None of this is free (searching for subgraphs can take several times longer than a single forward pass), but cost and complexity are not impossibility. Compliance duties do not shrink because GPUs are expensive.
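
As an illustration of the attribution methods above, here is a minimal Integrated Gradients sketch in Python with NumPy. It assumes a hypothetical logistic default model on three applicant-level features, with a hand-coded gradient and an all-zeros baseline, and approximates the path integral with a midpoint Riemann sum. The property that matters for auditors is completeness: the attributions sum to the difference between the score at the input and the score at the baseline. On a graph model, the same procedure would run over node features or edge weights.

```python
import numpy as np

# Hypothetical credit model: logistic probability of default on three features.
w = np.array([1.5, -2.0, 0.7])
b = -0.3

def prob_default(x):
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))

def grad_prob_default(x):
    p = prob_default(x)
    return p * (1.0 - p) * w           # gradient of sigmoid(w.x + b) with respect to x

def integrated_gradients(x, baseline, steps=200):
    """Midpoint Riemann approximation of Integrated Gradients."""
    alphas = (np.arange(steps) + 0.5) / steps
    path = baseline + alphas[:, None] * (x - baseline)   # points on the straight line
    grads = np.array([grad_prob_default(pt) for pt in path])
    return (x - baseline) * grads.mean(axis=0)

x = np.array([0.9, 0.2, 1.1])
baseline = np.zeros(3)
attr = integrated_gradients(x, baseline)
print(attr, attr.sum(), prob_default(x) - prob_default(baseline))
```

The choice of baseline is itself a modeling decision and should be recorded alongside the attributions, since different baselines yield different explanations.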

Making Networks Clear: Auditable XAI for Credit

The primary policy objective should be operational clarity: reasons that are faithful to the model, comprehensible to people, generated at the time of decision, and retained for audit. A practical framework has three components. First, every high-stakes model should generate a structured "reason packet" that pairs feature attributions with an explanatory subgraph listing the key nodes, edges, and motifs behind the decision. Second, enforce "reason passing" throughout the credit stack: if a fraud or identity-risk model feeds into underwriting, it must pass its reason packet downstream so the lender cannot simply attribute the decision to unnamed upstream risks. Third, compile reason packets into compliant notices: map features to standard reason codes where possible, and add brief, plain-language network explanations where necessary (for instance, "Recent transactions heavily concentrated in a merchant-device group linked to high default rates"). Vendors already offer XAI toolchains that support this; regulators should require such systems before lenders deploy network models at scale.
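
The three components can be sketched as a small data structure plus a notice compiler. Everything in this Python sketch is hypothetical: the ReasonPacket fields, the feature names, and the REASON_CODES table are illustrative, not a standard schema or any vendor's API. Upstream packets (for example, from a fraud model) travel inside the underwriting packet, the compiler walks the whole chain, and factors without a mapped code are routed to human review rather than silently dropped. The default cap of four reasons tracks the Regulation B commentary, which notes that more than four reasons is unlikely to help the applicant.

```python
from dataclasses import dataclass, field

@dataclass
class ReasonPacket:
    """Hypothetical per-decision reason packet; field names are illustrative."""
    model_id: str
    decision_id: str
    feature_attributions: dict                    # feature -> signed contribution to risk
    subgraph_edges: list                          # explanatory edges, retained for audit
    upstream: list = field(default_factory=list)  # packets passed from upstream models

# Hypothetical mapping from model features to consumer-facing reason statements.
REASON_CODES = {
    "debt_to_income": "Income insufficient for amount of credit requested",
    "credit_history_months": "Length of credit history",
    "merchant_device_cluster": ("Recent transactions heavily concentrated in a "
                                "merchant-device group linked to high default rates"),
}

def _walk(packet):
    """Yield this packet and, recursively, every upstream packet."""
    yield packet
    for up in packet.upstream:
        yield from _walk(up)

def compile_notice(packet, max_reasons=4):
    """Rank risk-increasing factors across the whole packet chain.

    Assumes attributions from different models are on comparable scales.
    """
    factors = [(value, feature)
               for p in _walk(packet)
               for feature, value in p.feature_attributions.items()
               if value > 0]                      # only factors that pushed toward denial
    factors.sort(reverse=True)
    return [REASON_CODES.get(f, f"Unmapped factor '{f}': route to human review")
            for _, f in factors[:max_reasons]]

fraud = ReasonPacket("fraud_v3", "app-001", {"merchant_device_cluster": 0.31},
                     [("device_7", "merchant_A")])
underwriting = ReasonPacket("uw_gnn_v1", "app-001",
                            {"debt_to_income": 0.22, "credit_history_months": 0.05,
                             "on_time_payments": -0.10},
                            [], upstream=[fraud])
notice = compile_notice(underwriting)
for line in notice:
    print(line)
```

Because the fraud model's packet rides along, its network finding appears first in the notice instead of disappearing behind a generic "risk" label.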

Methodological details matter. How faithful are these reasons? Subgraph-based explainers can be validated by removing the identified subgraph and measuring how much the model's risk score falls. Auditors should sample decisions, rerun these checks, and confirm that reasons are not merely plausible but hold up under counterfactual tests. How fast can this run? SubgraphX has been reported to take several times the base inference time, so in practice lenders can run faster explainers on every decision and reserve heavier subgraph audits for a sample, while still producing a reason for every adverse action when the decision is made. What about privacy? Reason packets should be coarsened for consumer notices, using terms like "merchant category group" rather than specific store names, while full details are retained for regulators. The EU AI Act already requires automatic logging and technical documentation for high-risk systems. Accuracy remains fundamental, but without reliable, testable reasons, claims of accuracy are unsubstantiated, and that is a governance failure.
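
The deletion check described above can be sketched in a few lines of Python with NumPy. The graph, risk values, and two-layer mean-aggregation "model" are hypothetical stand-ins for a real GNN, and the explainer's output is simply assumed to be nodes 1 and 4. As an added safeguard (an assumption, not part of any standard), the sketch compares the score drop against the average drop from removing every other node set of the same size, so an explanation only counts if it beats arbitrary deletions.

```python
from itertools import combinations
import numpy as np

# Hypothetical toy graph: node 0 is the applicant; nodes 1-7 are merchants/devices.
n = 8
A = np.zeros((n, n))
for u, v in [(0, 1), (0, 2), (0, 3), (1, 4), (2, 5), (3, 6), (6, 7)]:
    A[u, v] = A[v, u] = 1.0
risk = np.full(n, 0.1)          # hypothetical per-node risk feature
risk[1], risk[4] = 0.9, 0.8     # a high-risk cluster reachable through node 1

def score(keep):
    """Toy two-layer mean-aggregation score for node 0, using only nodes in `keep`."""
    mask = np.zeros(n)
    mask[list(keep)] = 1.0
    A_k = A * np.outer(mask, mask) + np.diag(mask)   # drop removed nodes, add self-loops
    deg = A_k.sum(axis=1, keepdims=True)
    P = np.divide(A_k, deg, out=np.zeros_like(A_k), where=deg > 0)
    return float((P @ (P @ (risk * mask)))[0])

def deletion_fidelity(nodes):
    """How much the applicant's score falls when `nodes` are removed."""
    everything = set(range(n))
    return score(everything) - score(everything - set(nodes))

explanation = {1, 4}   # hypothetical subgraph reported by the lender's explainer
fid = deletion_fidelity(explanation)
# Baseline: average drop from removing any other node set of the same size.
others = [set(c) for c in combinations(range(1, n), len(explanation))
          if set(c) != explanation]
baseline = float(np.mean([deletion_fidelity(c) for c in others]))
print(f"explanation drop = {fid:.3f}, same-size baseline = {baseline:.3f}")
```

In this toy case, removing the reported subgraph lowers the score from 0.275 to 0.1, while the average same-size deletion barely moves it; an explanation whose drop is indistinguishable from the baseline would fail the audit.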

The regulatory framework is already in place. In the U.S., the CFPB has clarified that creditors using complex algorithms must still provide specific, accurate reasons for adverse actions; "black box" complexity is no excuse. SR 11-7's guidance on model risk still applies: banks must validate models, understand their limitations, and monitor performance over time, responsibilities that extend naturally to explainability. Europe's AI Act entered into force in August 2024, with its obligations phased in over several years. Credit scoring is classified as high-risk, triggering requirements for risk management, data governance, logging, transparency, and human oversight, with key milestones falling between 2025 and 2027. NIST's AI Risk Management Framework gives organizations a structured way to integrate XAI controls into their existing policies. The direction is clear: if you cannot explain a model, you should not use it for consequential decisions, such as credit for students, teachers, and school staff.

Education stakeholders have a distinctive role because credit shapes access, retention, and school finances. Rising delinquencies and tighter underwriting, reflected in the first declines in average FICO scores since the financial crisis, are pushing lenders toward faster risk decisions in student lending, tuition payment plans, and teacher relocation loans. Faculty and administrators can build model interpretability into their curricula, design credit-risk projects in which students implement and audit GNNs, and partner with local lenders to pilot reason-passing systems. Schools that administer emergency aid or income-share agreements should request decision-specific reason packets from vendors rather than accepting PDFs of ROC curves. Regulators and accreditors can encourage a shared set of reason codes that covers graph-derived patterns without becoming vague. If we teach students to ask "why," our institutions should demand the same when an algorithm says "no."

A Practical Mandate: No Reason, No Model

The guiding principle for policy and procurement should be this: no model should influence a credit decision unless it can provide reasons that people can understand, contest, and learn from. "No reason, no model" is more than a phrase; it is a compliance standard that can be incorporated into contracts and regulatory examinations. Lenders would ensure that every model in the decision chain generates a reason packet, that these packets are stored and auditable, and that consumer-facing notices clearly communicate those packets in terms aligned with the model's logic. Regulators would verify a sample of denials by independently running explainers and counterfactuals to confirm the accuracy of the bank's system. If removing the identified subgraph does not alter the score, then the "reason" is not a valid reason. This method respects proprietary models while rejecting obscurity as a business practice.
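
The regulator's spot check can be expressed as two tests on each sampled denial: every factor cited in the notice must be among the top risk-increasing factors in the lender's packet, and the lender's top factors should broadly agree with those from an explainer the regulator runs independently. This Python sketch assumes attributions arrive as feature-to-contribution dictionaries; the Jaccard overlap measure, k = 4, and the 0.5 threshold are illustrative choices, not regulatory standards. It would run alongside the deletion test the paragraph describes, not replace it.

```python
def top_factors(attributions, k):
    """The k features that raised risk the most (positive attributions only)."""
    positive = sorted(((v, f) for f, v in attributions.items() if v > 0), reverse=True)
    return {f for _, f in positive[:k]}

def audit_denial(notice_factors, lender_attr, regulator_attr, k=4, min_jaccard=0.5):
    """Check a notice against the lender's packet and a regulator's re-run explainer."""
    lender_top = top_factors(lender_attr, k)
    regulator_top = top_factors(regulator_attr, k)
    cited_supported = set(notice_factors) <= lender_top
    union = lender_top | regulator_top
    jaccard = len(lender_top & regulator_top) / len(union) if union else 1.0
    return {"cited_supported": cited_supported,
            "jaccard": jaccard,
            "pass": cited_supported and jaccard >= min_jaccard}

# Hypothetical attributions for one sampled denial.
lender = {"merchant_device_cluster": 0.31, "debt_to_income": 0.22,
          "credit_history_months": 0.05, "on_time_payments": -0.10}
regulator = {"merchant_device_cluster": 0.28, "debt_to_income": 0.25,
             "utilization": 0.04, "on_time_payments": -0.12}
result = audit_denial(["merchant_device_cluster", "debt_to_income"], lender, regulator)
print(result)
```

A notice citing a factor that the lender's own packet does not rank highly fails immediately, which is the "reason that is not a reason" case in code form.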

Predictably, there will be objections. One is cost: subgraph explanations take longer, and the supporting systems are complex to build. But compliance is not optional. The time required for advanced explainers is manageable (seconds, not days), especially with a tiered strategy: fast local attributions for every decision and deeper subgraph audits for a statistically valid sample. A second objection concerns intellectual property: revealing subgraphs supposedly discloses trade secrets. This is a distraction. Consumer notices need not show raw graphs; they must deliver accurate, understandable statements, and regulators can review the whole packet under confidentiality agreements. The final objection is performance: some argue that enforcing explainability will lower accuracy. Post-hoc explainers such as SHAP or GNNExplainer, however, leave the underlying model unchanged, so generating reasons does not by itself cost accuracy, and nothing in ECOA or the AI Act allows trading away people's right to reasons for a slight gain in AUC. The burden of proof belongs to those who say reasons cannot be given, not to those who ask for them.
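
One way to make "statistically valid sample" concrete is the standard sample-size formula for estimating a proportion, here the share of decisions whose explanations fail the audit. This Python sketch assumes a 95% confidence level (z = 1.96), a margin of plus or minus 5 percentage points, the conservative p = 0.5, and a hypothetical monthly volume of 10,000 adverse actions; a real sampling plan might also stratify by product or model version.

```python
import math
import random

def audit_sample_size(margin=0.05, z=1.96, p=0.5, population=None):
    """Sample size to estimate an explanation-failure rate within +/- margin.

    Normal approximation n0 = z^2 * p * (1 - p) / margin^2; p = 0.5 is the most
    conservative choice when the true failure rate is unknown. For a finite
    number of decisions, apply the finite-population correction.
    """
    n0 = z ** 2 * p * (1 - p) / margin ** 2
    if population is not None:
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)

# Hypothetical month of 10,000 adverse-action decisions.
decision_ids = [f"app-{i:05d}" for i in range(10_000)]
n = audit_sample_size(population=len(decision_ids))      # 370
audit_set = random.Random(2024).sample(decision_ids, n)  # seeded so auditors can reproduce it
print(n, audit_set[:3])
```

Without the finite-population correction the same settings give 385 decisions; either way, the heavy subgraph audit touches a few hundred cases rather than every application.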

What should we do now? Regulators should issue guidance that puts "no reason, no model" into practice for credit and related decisions, with clear testing procedures and sampling plans. They should also align with NIST's AI RMF to specify requirements for documentation, resilience checks, and reporting of explanation failures, not just prediction errors. Lenders should create model cards that include explanation-fidelity metrics and subgraph validation tests, and commit to reason passing in vendor agreements. Universities and professional schools should establish "XAI for finance" programs that audit real models under NDAs and develop open-source reason-packet frameworks. Civil society can help consumers challenge denials using the logic in those packets, translating complex graphs into practical steps ("spread transactions across categories," "avoid merchant groups associated with charge-offs"). This is governance that teaches while it decides.

A 16.6% denial rate in a significant market segment is more than a number; it reflects the power dynamics at play. Network models can channel that power through connections too subtle for human observation. That is why we must insist on explanations that translate complexity into accountable language. U.S. law already mandates this. European law is phasing it in. The science of explainability (local surrogate models, attributions, and subgraph identification) makes it achievable. When lenders claim "the network is inexplicable," they are not describing an unchangeable truth; they are choosing convenience over rights. We can choose differently. We can demand reason packets, reason passing, and accountable notices. We can train the next generation of data scientists and regulators to build and evaluate them. If an organization asserts that its model cannot be explained, the solution is straightforward: that model should not be part of the market. No reason, no model.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Agarwal, C., et al. (2023). Evaluating explainability for graph neural networks. Scientific Data. https://doi.org/10.1038/s41597-023-01974-x.

Consumer Financial Protection Bureau. (2022, May 26). The CFPB acts to protect the public from black-box credit models that use complex algorithms.

Consumer Financial Protection Bureau. (2023, Sept. 19). Guidance on credit denials by lenders using artificial intelligence.

Consumer Financial Protection Bureau. (2024, July 11). Summary of 2023 data on mortgage lending (HMDA).

European Commission. (2024–2026). AI Act regulatory framework and timeline.

FICO. (2024, Oct. 9). Average U.S. FICO® Score stays at 717.

Lumenova AI. (2025, May 8). Why explainable AI in banking and finance is critical for compliance.

Lundberg, S., & Lee, S.-I. (2017). A unified approach to interpreting model predictions (SHAP).

National Institute of Standards and Technology. (2023). AI Risk Management Framework (AI RMF 1.0).

Skadden, Arps. (2024, Jan. 24). CFPB applies adverse action notification requirement to lenders using AI.

Turner Lee, N. (2025, Sept. 23). Recommendations for responsible use of AI in financial services. Brookings.

U.S. Federal Reserve. (2011). SR 11-7: Supervisory Guidance on model risk management.

Ying, R., et al. (2019). GNNExplainer: Generating explanations for graph neural networks.

Yuan, H., et al. (2021). On explainability of graph neural networks via subgraph explorations (SubgraphX).