Build the Neutral Spine of AI: Why Europe Must Stand Up Its Own Stack

Europe’s schools rely on foreign AI infrastructure, creating vulnerability. A neutral European stack with local compute and governance can secure continuity. This ensures resilient, interoperable education under global tensions.

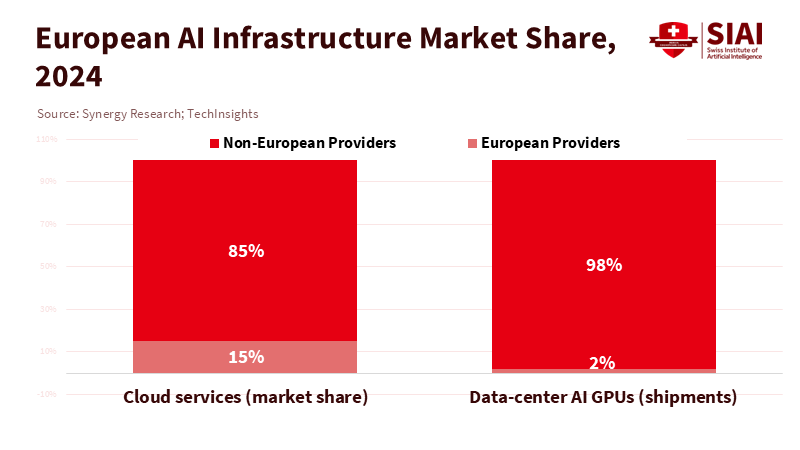

The most critical number in European AI right now is fifteen: the percentage of the continent's cloud market held by European providers in 2024. The remaining eighty-five percent belongs to non-European firms, led by three U.S. hyperscalers. Pair that dependency with another figure: NVIDIA shipped roughly ninety-eight percent of all data-center AI GPUs in 2023. Together, these numbers reveal an uncomfortable truth: Europe's schools, universities, and public labs depend on foreign hardware and platforms that shape every lesson plan, grant proposal, and research workflow we care about. As U.S.–China technology tensions grow and export controls expand, this is not just a pricing issue; it is a problem of reliability, access, and values for education systems that cannot pause when the rules change. Europe already has the pieces needed to change this situation: laws, computing infrastructure, research, and governance. What it must do now is assemble them into a coherent, education-friendly "third stack" that is designed for interoperability and can withstand geopolitical shifts.

From Consumer to Producer: Why Sovereignty Now Means Interoperability

The case for a European stack is not about isolation. It responds to two converging trends: tighter export controls on advanced chips, and new European AI rules with hard, near-term deadlines. The United States restricted access to advanced accelerators and supercomputing items in October 2022 and again in October 2023. Clarifications in 2024 and further updates in early 2025 expanded the rules and introduced controls on certain AI model weights. Meanwhile, the EU's AI Act entered into force on August 1, 2024. Its prohibitions and AI-literacy duties began applying in February 2025, obligations for general-purpose models followed in August 2025 (with systemic risk presumed for models trained with more than 10^25 FLOPs), and most rules for high-risk systems take effect in August 2026. In short, hardware-level restrictions and application-level requirements are arriving at the same time, and education must not get caught in between. A European stack ensures that classrooms and labs keep lawful, reliable access to compute and models even as geopolitical circumstances change.
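
For planning purposes, the AI Act milestones above can be encoded as data and queried by date, for example when deciding which obligations already apply at the start of an academic year. The following is a minimal Python sketch under stated assumptions: the exact days (2 February 2025, 2 August 2025, 2 August 2026) follow the Act's published schedule, the function names are hypothetical, and the sketch deliberately ignores the later deadlines that apply to some product-embedded high-risk systems.

```python
from datetime import date

# AI Act milestones as summarized in the paragraph above (simplified:
# later deadlines for some product-embedded high-risk systems are omitted).
AI_ACT_MILESTONES = [
    (date(2024, 8, 1), "Act enters into force"),
    (date(2025, 2, 2), "Prohibitions and AI-literacy duties apply"),
    (date(2025, 8, 2), "General-purpose AI model obligations apply"),
    (date(2026, 8, 2), "Most high-risk system rules apply"),
]


def milestones_in_effect(on: date) -> list[str]:
    """Return the labels of milestones that have taken effect on or before `on`."""
    return [label for start, label in AI_ACT_MILESTONES if start <= on]


def next_milestone(on: date):
    """Return (date, label) of the next milestone after `on`, or None if all have passed."""
    upcoming = [(start, label) for start, label in AI_ACT_MILESTONES if start > on]
    return min(upcoming) if upcoming else None
```

For an academic year starting on 1 September 2025, `milestones_in_effect` returns the first three entries and `next_milestone` points to August 2026, which is the horizon a department would budget against.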

There is also a compatibility risk that rarely shows up in procurement documents. If two AI superpowers develop competing, incompatible ecosystems (different chips, interconnects, tools, and model-documentation norms), then educational content, evaluation pipelines, and safety tools will not move smoothly across borders. Analysts now treat AI as a national-security matter and see strong incentives for decoupling, especially as "bloc economies" reappear. Education sits downstream of this competition: it relies on standard file formats, portable model cards, and shared inference runtimes. The smarter approach is not to pick a side but to build a neutral spine that can translate between ecosystems and keep European teaching and research running under pressure.

Crucially, Europe is not starting from scratch. The EuroHPC program has produced world-class systems, led by JUPITER, Europe's first exascale supercomputer, inaugurated in Jülich in September 2025. Alongside it, Switzerland's national "Alps" system at CSCS was inaugurated in 2024. These platforms matter for more than raw processing power: they let governments and universities test procurement, scheduling, and governance at continental scale. They show that Europe can build computing capacity on its own soil, under European law, while still using top-tier components.

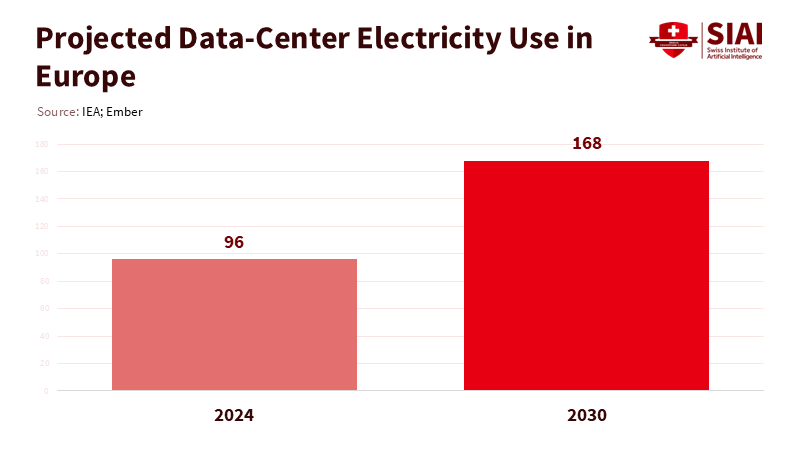

A neutral European stack should therefore focus on four practical ideas. First, portability: standardize open exchange formats and evaluation artifacts so that models trained or fine-tuned on European hardware can run on multiple platforms without months of rework. Second, governed access: allocate compute through the institutions and grant programs the education sector already uses, with published queue service-level agreements so that coursework runs when it is scheduled. Third, energy awareness: data-center power demand in Europe is set to rise sharply this decade, with forecasts ranging from a near-doubling to a near-tripling by 2030, so education workloads should favor efficient open-weight models, shared fine-tunes, and "green windows" that coincide with renewable-energy surpluses. Finally, transparent data governance: a Gaia-X-style labeling system can show whether a tool keeps student data in-region, supports audits by data protection officers, and handles copyrighted material appropriately. These are not ideological choices; they are safeguards against disruption.
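
The "green windows" idea reduces to a small scheduling problem: given an hourly forecast of the renewable share of grid supply, pick the contiguous block of hours with the highest average share for a batch job. The sketch below assumes such a forecast is available as a list of fractions between 0 and 1; the function name and the sample numbers are hypothetical, and a real scheduler would also weigh queue service-level agreements and prices.

```python
def best_green_window(renewable_share: list[float], hours_needed: int) -> tuple[int, float]:
    """Return (start_hour, mean_share) of the contiguous window with the highest
    mean renewable share. `renewable_share` is a hypothetical hourly forecast in [0, 1]."""
    if not 0 < hours_needed <= len(renewable_share):
        raise ValueError("hours_needed must be between 1 and the forecast length")
    window = sum(renewable_share[:hours_needed])
    best_start, best_sum = 0, window
    for start in range(1, len(renewable_share) - hours_needed + 1):
        # Slide the window one hour: add the newest hour, drop the oldest.
        window += renewable_share[start + hours_needed - 1] - renewable_share[start - 1]
        if window > best_sum:
            best_start, best_sum = start, window
    return best_start, best_sum / hours_needed
```

With the sample forecast `[0.3, 0.35, 0.5, 0.7, 0.8, 0.75, 0.4, 0.3]` and a three-hour job, the function returns start hour 3 with a mean renewable share of about 0.75. The sliding window keeps the search linear in the forecast length, which matters when a cluster scores many queued jobs against the same forecast.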

What Schools, Ministries, and Regulators Should Do Next

Start with procurement. Treat model providers and cloud capacity as essential services, not optional apps. In practice, that means securing major contracts with at least one European provider at each layer: EU-based cloud for storage and inference, EuroHPC resources for training and research, and a mix of European model vendors, both open and proprietary, so that curricula and research do not depend solely on foreign licenses. This is achievable, because European model companies now have scale and momentum. In September 2025, Mistral raised €1.7 billion in a Series C led by ASML, at a post-money valuation of about €11.7 billion. In late 2023, Germany's Aleph Alpha announced a Series B that brought its total investment to more than $500 million. A resilient education stack should give schools and universities ways to use and examine such models in line with European law and practice.
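
A ministry could audit its contract portfolio against the "at least one European provider per layer" rule with a few lines of code. In this sketch the layer labels, the `Contract` record, and the boolean `european` flag are all hypothetical; how "European" is defined (headquarters, jurisdiction, data location) is a policy decision the code does not make.

```python
from dataclasses import dataclass

# Hypothetical labels for the three procurement layers named above.
REQUIRED_LAYERS = {"cloud_storage_inference", "training_research_compute", "models"}


@dataclass(frozen=True)
class Contract:
    provider: str   # hypothetical provider name
    layer: str      # one of REQUIRED_LAYERS
    european: bool  # whether the provider counts as European under local policy


def layers_missing_european_provider(contracts: list[Contract]) -> set[str]:
    """Return the layers that have no contract with a European provider."""
    covered = {c.layer for c in contracts if c.european}
    return REQUIRED_LAYERS - covered
```

A portfolio with a European cloud contract, a EuroHPC allocation, and only a foreign model API would be flagged for the `models` layer, which is exactly the licensing exposure the paragraph warns about.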

Next, set clear, near-term goals that turn strategy into classroom practice. Within twelve months, every teacher-education program and every university department with a digital curriculum should have access to, and training on, an approved set of Europe-hosted models and data services for educational use. The target is not slideshow-level familiarity; it is instructor-set assignments that run on the designated services at predictable cost. Where reliable data is lacking, we can build transparent estimates. If a national system wants to give 100,000 students a modest 300 classroom inference tasks each over a semester, at about 10^11 FLOPs per task for efficient models, the total is 100,000 × 300 × 10^11 = 3×10^18 FLOPs. That load is comfortably supported by a mix of local inference on open weights and scheduled inference on shared clusters. The point of spelling out such calculations is to show that most educational use does not require the extreme-scale training runs that trigger the AI Act's "systemic risk" threshold; it requires reliable, documented, mid-sized capacity.
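
The estimate above can be checked in a few lines. The student, task, and per-task figures are the paragraph's own illustrative assumptions; the sustained accelerator throughput of 100 TFLOP/s is a hypothetical figure added here only to translate FLOPs into hours. As a rough sanity check on 10^11 FLOPs per task, a forward pass costs about 2 FLOPs per parameter per token, so a model of roughly one billion parameters generating around 50 tokens lands near that figure.

```python
# Back-of-the-envelope classroom inference budget; all inputs are illustrative.
STUDENTS = 100_000
TASKS_PER_STUDENT = 300
FLOPS_PER_TASK = 1e11            # assumed cost of one task on an efficient model
SYSTEMIC_RISK_THRESHOLD = 1e25   # AI Act presumption, defined on *training* compute

total_flops = STUDENTS * TASKS_PER_STUDENT * FLOPS_PER_TASK

# Hypothetical sustained throughput of a single accelerator: 100 TFLOP/s.
ACCELERATOR_FLOPS_PER_SEC = 1e14
accelerator_hours = total_flops / ACCELERATOR_FLOPS_PER_SEC / 3600

print(f"total: {total_flops:.1e} FLOPs")                 # prints: total: 3.0e+18 FLOPs
print(f"one accelerator: {accelerator_hours:.1f} hours")  # prints: one accelerator: 8.3 hours
print(f"ratio to threshold: {total_flops / SYSTEMIC_RISK_THRESHOLD:.0e}")  # prints: ratio to threshold: 3e-07
```

Even if each task cost a hundred times more, the semester total would remain several orders of magnitude below 10^25, a threshold that in any case applies to training compute rather than inference.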

Then invest in the translation layers. The fastest way to lose a year is to discover that your curriculum relies on a model or SDK that your provider cannot legally support. National ed-tech teams should maintain a "portability panel" of engineers and teachers responsible for keeping lesson-critical models and datasets convertible across platforms and clouds, with model cards and evaluation tools kept under European oversight. This concern is not abstract: when one vendor dominates the accelerator market and the leading clouds run proprietary systems, a single licensing change can disrupt classrooms overnight. The more Europe insists on portable inference and well-documented build processes, the more resilient its teaching and research will be.
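
A portability panel needs a simple, repeatable check. The sketch below flags lesson-critical models that are validated on fewer than two platforms, lack an export to an open exchange format (ONNX is one example of such a format), or have model cards held outside European oversight. The record fields, the two-platform minimum, and the model names are hypothetical choices for illustration, not an established standard.

```python
from dataclasses import dataclass, field


@dataclass
class LessonModel:
    name: str                                                # hypothetical model identifier
    validated_platforms: set[str] = field(default_factory=set)
    open_exchange_format: bool = False                       # exported to an open format, e.g. ONNX
    model_card_in_eu: bool = False                           # card and evals under European oversight


def portability_risks(models: list[LessonModel], min_platforms: int = 2) -> dict[str, list[str]]:
    """Map each at-risk model name to the reasons it could strand a course."""
    risks: dict[str, list[str]] = {}
    for m in models:
        reasons = []
        if len(m.validated_platforms) < min_platforms:
            reasons.append(f"validated on {len(m.validated_platforms)} platform(s)")
        if not m.open_exchange_format:
            reasons.append("no open exchange format")
        if not m.model_card_in_eu:
            reasons.append("model card not under European oversight")
        if reasons:
            risks[m.name] = reasons
    return risks
```

Running this each term against the list of models named in syllabi gives the panel a short, concrete worklist instead of a general concern.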

Regulators can help close the loop. The AI Act's phased rules are not merely a burden to navigate; they outline a product roadmap for an education-friendly stack. The obligations for general-purpose models (technical documentation and a public summary of training data for all providers, plus adversarial testing, serious-incident reporting, and cybersecurity protections for models with systemic risk) reflect what schools and universities should demand anyway. Oversight bodies can speed up alignment by issuing sector-specific guidance and by funding "living compliance" sandboxes, where universities can test documentation, watermarking, and red-teaming practices on EuroHPC resources. The Commission's decision not to pause the Act, together with its published guidance schedule for models with systemic risk, gives institutions useful certainty for planning syllabi and budgets over the next two academic years.
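
The tiered structure of the general-purpose model obligations can be expressed as a checklist keyed on training compute, which a university sandbox could use when reviewing a vendor's documentation package. This is a simplified sketch: it lists only the obligations named in the paragraph above, uses the Act's presumption of systemic risk above 10^25 FLOPs of training compute, and ignores other duties as well as the Commission's power to designate models by other criteria. The artifact labels are hypothetical.

```python
# Simplified: only the obligations named in the text; other duties omitted.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25  # presumption based on cumulative training compute

BASE_OBLIGATIONS = {"technical documentation", "training-data summary"}
SYSTEMIC_OBLIGATIONS = {"adversarial testing", "serious-incident reporting", "cybersecurity"}


def required_artifacts(training_flops: float) -> set[str]:
    """Artifacts a provider should supply, given the model's training compute."""
    required = set(BASE_OBLIGATIONS)
    if training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD:
        required |= SYSTEMIC_OBLIGATIONS
    return required


def missing_artifacts(training_flops: float, provided: set[str]) -> set[str]:
    """Artifacts that are required but absent from the vendor's package."""
    return required_artifacts(training_flops) - provided
```

Most models used in classrooms would fall in the base tier, so the sandbox's main job for them is checking documentation and training-data summaries rather than systemic-risk evidence.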

The Neutral Spine We Build Now

Energy management and site selection will determine whether an education-friendly stack becomes a public benefit or remains a concept. Global data-center electricity demand is expected to more than double by 2030: the IEA's base case puts it at about 945 TWh, slightly more than Japan's total electricity consumption today, with a significant share of the growth in the U.S., China, and Europe. For the EU, Ember projects demand rising from about 96 TWh in 2024 to about 168 TWh by 2030, while McKinsey projects a near-tripling across Europe, to more than 150 TWh, by the end of the decade. The education-first policy response is clear: prioritize efficient, European-hosted models for daily classroom use; create "green windows" for training and large-scale inference that coincide with renewable-energy surpluses; and require providers to disclose per-task energy estimates alongside their prices so departments can plan effectively.
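
Two small calculations make these energy figures easier to use in budgeting: the annual growth rate implied by the EU projection, and the energy needed for a classroom workload at an assumed efficiency. The workload is the 100,000-student scenario discussed earlier (100,000 × 300 × 10^11 = 3×10^18 FLOPs). The effective efficiency of 10^11 FLOPs per joule, including cooling and idle overheads, is a hypothetical placeholder rather than a measured value; providers' per-task disclosures would replace it.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by two demand figures."""
    return (end / start) ** (1 / years) - 1


def energy_kwh(total_flops: float, flops_per_joule: float) -> float:
    """Energy for a workload, given an assumed *effective* efficiency
    (FLOPs delivered per joule, including cooling and idle overheads)."""
    joules = total_flops / flops_per_joule
    return joules / 3.6e6  # 1 kWh = 3.6 million joules


# EU projection cited above: about 96 TWh (2024) to about 168 TWh (2030).
print(f"implied EU growth: {implied_cagr(96, 168, 2030 - 2024):.1%} per year")  # prints: implied EU growth: 9.8% per year

# Classroom scenario (3e18 FLOPs) at a hypothetical 1e11 FLOPs per joule.
print(f"semester energy: {energy_kwh(3e18, 1e11):.1f} kWh")  # prints: semester energy: 8.3 kWh
```

The point is the method rather than the placeholder numbers: once providers publish per-task energy estimates, departments can plug them into the same arithmetic they already use for costs.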

Location and legal frameworks matter as much as power. Switzerland is a practical hub for cross-border research and educational services: it sits at the center of Europe's knowledge networks, has revised its Federal Act on Data Protection to align with the GDPR, and benefits from an EU adequacy decision, which simplifies cross-border data flows. Adding a Gaia-X-style trust label that shows where student data is stored, how audits are conducted, and how copyrighted material is handled in training provides an operational model that districts and deans can adopt without hiring AI law specialists. This is what a neutral spine looks like when built thoughtfully: legally sound, energy-conscious, and designed for portability.
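
A trust label is only useful if a district can check it mechanically. The sketch below models the three properties named above as a record and reports any gaps. It is not the Gaia-X label schema; the field names, the accepted-region set (EU, EEA, and Switzerland, reflecting the adequacy decision), and the messages are assumptions for illustration.

```python
from dataclasses import dataclass

# Regions accepted for student data in this sketch (a policy assumption):
# EU/EEA plus Switzerland, which holds an EU adequacy decision.
ACCEPTED_REGIONS = {"EU", "EEA", "CH"}


@dataclass(frozen=True)
class TrustLabel:
    data_region: str                    # where student data is stored
    dpo_audit_supported: bool           # data protection officers can audit the service
    training_copyright_disclosed: bool  # handling of copyrighted training material is documented


def label_gaps(label: TrustLabel) -> list[str]:
    """Return human-readable gaps; an empty list means the label passes."""
    gaps = []
    if label.data_region not in ACCEPTED_REGIONS:
        gaps.append(f"student data stored in {label.data_region}")
    if not label.dpo_audit_supported:
        gaps.append("no DPO audit support")
    if not label.training_copyright_disclosed:
        gaps.append("copyright handling undisclosed")
    return gaps
```

Returning readable gaps rather than a bare pass/fail lets a dean see at a glance what a vendor would need to change to qualify.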

Anticipating the pushback clarifies the policy choice. Some will see a third stack as redundant given the scale of global platforms. This view underestimates risk concentration. When three foreign firms control most of the local cloud market and one U.S. vendor supplies nearly all AI accelerators, any geopolitical or licensing shock can quickly affect schools. Others may worry about costs. Yet the cost of losing continuity—canceled lab sessions, frozen grants, untestable curricula—rarely appears in bid comparisons. The way forward is not to eliminate foreign tools; it is to ensure European classrooms can maintain their teaching and European labs can continue their research when changes occur elsewhere. Existing examples—JUPITER, Alps, and a growing number of European model companies—show that the essential elements are in place. The work now involves integration, governance, and teaching the next million students how to use them effectively.

In the end, the number fifteen is both a warning and an opportunity. The warning is about dependency: an education system that relies on others for most of its computing and cloud resources will one day discover that someone else has made its choices. The opportunity is to build an interoperable, education-friendly European stack (legally grounded, geopolitically neutral, and energy-aware) that draws on JUPITER-class capability and Swiss-based governance to keep classes running, labs productive, and research open. The timeline is clear: the AI Act's deadlines are public, the remaining ones are close, and energy constraints are tightening. The choice is equally straightforward: move forward now on procurement, compute scheduling, and model portfolios while prices and regulations are stable, or wait for the next round of export controls and accept that curricula and research will change on someone else's timetable. The students in our classrooms deserve the first option, as do the teachers who cannot afford another year of uncertainty.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Aleph Alpha. (2023, November 6). Aleph Alpha raises a total investment of more than half a billion U.S. dollars (Series B announcement).

ASML. (2025, September 9). ASML and Mistral AI enter strategic partnership; ASML to invest €1.3 billion in Mistral's Series C.

Bureau of Industry and Security (U.S. Department of Commerce). (2024, April 4). Implementation of Additional Export Controls: Certain Advanced Computing Items; Supercomputer and Semiconductor End Use (corrections and clarifications).

Bureau of Industry and Security (U.S. Department of Commerce). (2025, January 13). Framework for Artificial Intelligence Diffusion (Public Inspection PDF summarizing December 2024 expansions including HBM and AI-related controls).

CSCS – Swiss National Supercomputing Centre. (2024, September 16). New research infrastructure: "Alps" supercomputer inaugurated.

DataCenterDynamics (via TechInsights). (2024, June 12). NVIDIA data-center GPU shipments totaled 3.76 million in 2023 (≈98% market share).

EDÖB/FDPIC (Swiss Federal Data Protection and Information Commissioner). (2024, January 15). EU adequacy decision regarding Switzerland.

Ember. (2025, June 19). Grids for data centres in Europe (EU demand projection: 96 TWh in 2024 → 168 TWh by 2030).

EuroHPC Joint Undertaking. (2025, September 5). JUPITER: Launching Europe's exascale era.

European Commission. (2025, August 1). EU rules on GPAI models start to apply: transparency, safety, accountability (AI Act GPAI obligations and systemic-risk threshold).

Gaia-X European Association. (2024, September). Compliance Document: Policy rules, labelling criteria, and trust framework.

IEA. (2025, April 10). Energy and AI: Data-centre electricity demand to 2030 (news release and analysis).

McKinsey & Company. (2024, October 24). The role of power in unlocking the European AI revolution.

Mistral AI. (2025, September 9). Mistral AI raises €1.7 billion (Series C) to accelerate technological progress.

RAND Corporation. (2025, August 4). Incentives for U.S.–China conflict, competition, and cooperation across AGI's five hard national-security problems (M. S. Chase & W. Marcellino).

Reuters. (2025, July 4). EU sticks with timeline for AI rules despite calls for delay.

Synergy Research Group. (2025, July 24). European cloud providers' local market share holds steady at ~15% (2024 market ~€61 billion).

Tomorrow's Affairs. (2024, October 26). The legacy of the Cold War: Economics stuck between two worlds.
