AI and Earnings Inequality: The Entry-Level Squeeze That Education Must Solve

AI is erasing junior tasks and widening wage gaps. Inside firms, gaps narrow; across markets, exclusion grows. Rebuild ladders: governed AI access, paid apprenticeships, training levies.

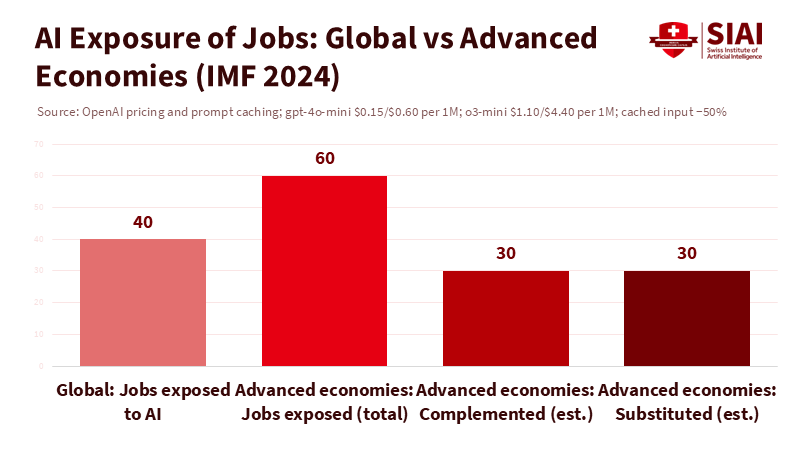

One figure should change how we think about schools, skills, and pay: about 60% of jobs in advanced economies are exposed to artificial intelligence. In roughly half of those cases, AI could perform key tasks directly, potentially lowering wages or eliminating positions. This is not a distant forecast; it is a near-term risk shaped by systems and firms already in operation. The implications for AI and earnings inequality are severe. When technology automates the tasks that train beginners, the ladder breaks at the first rung, and inequality grows even as average productivity rises. This explains why graduates find fewer entry-level jobs, why mid-career workers struggle to change paths, and why a small group of firms and top experts captures most of the benefits. The policy question is no longer whether AI makes us collectively richer; it is whether our education and labor institutions can rebuild those first rungs quickly enough to keep AI and earnings inequality from becoming the new normal.

We need to reframe the debate. The hopeful view holds that AI levels the field by lifting the least experienced workers inside firms. That effect is real but incomplete, because it only measures outcomes for people who already have jobs. It overlooks the loss of entry points across the economy and the concentration of profits. The result is a paradox: within specific roles, differences may narrow, while across the economy, AI and earnings inequality can still increase. What matters most is what happens to junior tasks, where learning takes place and careers begin.

AI and earnings inequality start at the bottom: the disappearing ladder

The first channel involves entry-level jobs. In many fields, AI now handles the routine information processing that used to be assigned to junior staff. Clerical roles are the most affected, which matters because they employ many women and serve as gateways to better-paying professional paths. The International Labour Organization finds that generative AI's most significant effects fall on clerical occupations in high- and upper-middle-income countries. When tools absorb the work that once served as an apprenticeship, the "learn-by-doing" phase disappears. This is the breeding ground for AI and earnings inequality: fewer learning opportunities, slower advancement, and returns concentrated among experienced workers and experts.

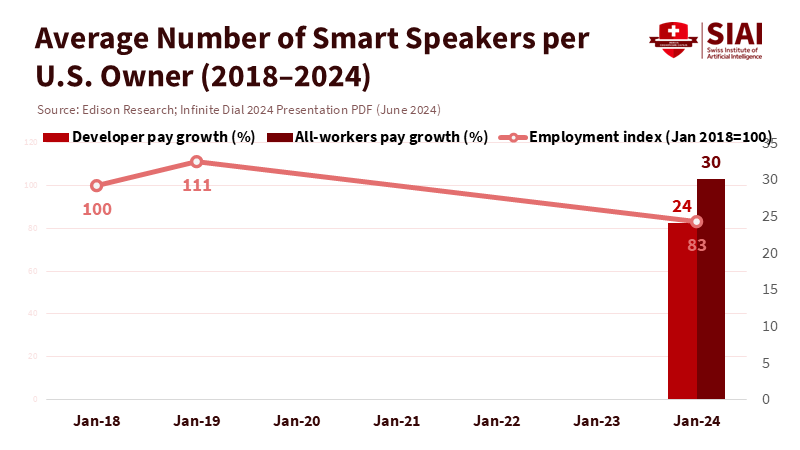

Labor-market signals reflect this shift. In the United States, ADP payroll data indicate that by January 2024, the number of software developers employed was lower than it had been six years earlier, despite the sector's historical growth. Developers' wages also rose more slowly than those of the overall workforce from 2018 to 2024. Software is not the whole economy, but it is where AI's earliest effects tend to appear. When a sector that once hired thousands of juniors shrinks, we should expect spillovers into business services, cybersecurity support, and IT operations, along with a wider pay gap between the workers who keep their footing and the entrants who never gain one.

Outside tech, the situation also appears challenging for newcomers. Recent analyses of hiring trends show a weakening market for "first jobs" in several advanced economies. Research indicates that roles exposed to AI are seeing sharper declines, with employers using automation to eliminate the simplest entry positions. Indeed's 2025 GenAI Skill Transformation Index shows significant skill reconfigurations across nearly 2,900 tasks. Coupled with employer caution, this means fewer low-complexity tasks are available for graduates to learn from. The Burning Glass Institute's 2025 report describes an "expertise upheaval," where AI reduces the time required to master jobs for current workers while eliminating the more manageable tasks that previously justified hiring entry-level staff. The impact is subtle yet cumulative: fewer internships, fewer apprenticeships, and job descriptions that require experience, which most applicants lack.

The immediate math of AI and earnings inequality is straightforward. If junior tasks decline while demand for experienced judgment rises, the premium for experience widens at the top. If displaced beginners cycle through short-term contracts or move into lower-paying fields, the bottom of the earnings distribution stretches further. And if capital owners capture a larger share of productivity gains, the labor share declines. The International Monetary Fund warns that, in most scenarios, AI will likely worsen overall inequality without policy intervention. Around 40% of global jobs are exposed to AI, rising to about 60% in advanced economies, where roughly half of the exposed jobs could see AI perform key tasks rather than complement workers. The distributional shift is clear: without new ladders, those who can already use the tools win, while those who need paid learning time are left behind.
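The headline shares combine by simple multiplication. The sketch below is a back-of-the-envelope calculation, assuming the rounded figures quoted above (60% of jobs exposed in advanced economies, roughly half of those facing substitution) and treating "roughly half" as exactly 0.5, which is an illustrative simplification rather than an IMF parameter:

```python
def substitution_share(exposed_share: float, substitution_fraction: float) -> float:
    """Share of all jobs that are both AI-exposed and substitution-prone.

    Both inputs are fractions in [0, 1]; the result is their product.
    """
    for value in (exposed_share, substitution_fraction):
        if not 0.0 <= value <= 1.0:
            raise ValueError("shares must lie in [0, 1]")
    return exposed_share * substitution_fraction

# Rounded inputs from the text; 0.5 stands in for "roughly half".
advanced = substitution_share(0.60, 0.5)
print(f"Advanced economies: about {advanced:.0%} of all jobs")
# prints: Advanced economies: about 30% of all jobs
```

The result is an exposure share, not a forecast of job losses: substitution-prone tasks can be reorganized rather than eliminated, and the split varies by occupation.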

AI and earnings inequality within firms versus across the market

The second channel is more complex. Several credible studies indicate that AI can reduce performance gaps within a firm. In a large study of customer-support agents, access to an AI assistant raised productivity and narrowed the gap between novices and experienced workers. This is good news for inclusion within surviving jobs, but it does not guarantee equal outcomes in the broader labor market. The same technologies that support a firm's least experienced workers can also lead the company to hire fewer beginners. If ten agents with tools can do the work of twelve, and the tool encodes the best agents' knowledge, the organization needs fewer trainees. The micro effect is equalizing; the macro effect can be exclusionary. Both can occur at once, and both shape AI and earnings inequality.
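The "ten agents doing the work of twelve" example can be made explicit. A minimal sketch, assuming a hypothetical team whose workload equals twelve pre-AI agents and a tool that raises each agent's output by a factor of 12/10; both figures come from the illustrative example in the text, not from any study:

```python
from fractions import Fraction
import math

def required_headcount(workload: Fraction, output_per_worker: Fraction) -> int:
    """Smallest whole number of workers whose combined output covers the workload."""
    if output_per_worker <= 0:
        raise ValueError("output per worker must be positive")
    return math.ceil(workload / output_per_worker)

# Hypothetical team: workload measured in pre-AI agent-equivalents.
workload = Fraction(12)
baseline = required_headcount(workload, Fraction(1))         # no tool
with_tool = required_headcount(workload, Fraction(12, 10))   # assumed 20% uplift
freed_slots = baseline - with_tool

print(f"Agents needed: {baseline} -> {with_tool}; slots no longer filled: {freed_slots}")
# prints: Agents needed: 12 -> 10; slots no longer filled: 2
```

Exact fractions keep floating-point rounding from nudging the quotient just above a whole number before `math.ceil`. If the two freed slots are the ones a team would normally fill with trainees, trainee intake falls even though every remaining agent is more productive.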

A growing body of research suggests that dynamics between firms are the new dividing line. A recent analysis links increases in a firm's "AI stock" to higher average wages within those firms (a complementarity effect), lower overall employment (a substitution effect), and rising wage inequality between firms. Companies that use AI effectively tend to become more productive and pay more; others lag and shrink. This is the classic "superstar" economy, updated for the age of generative technologies. It makes mobility, between firms and into better jobs, a central policy concern. If we train people well but AI-using firms do not hire them, the benefits are small. If we neglect training and let adoption concentrate, the gap widens. Addressing AI and earnings inequality requires action on both fronts.

Cross-country evidence is mixed, partly because studies cover different periods and use different methods. The OECD's pre-generative-AI panel (2014–2018) finds no clear effect of AI exposure on wage gaps between occupations and even notes declines in wage inequality within exposed occupations, such as business and legal professions, consistent with leveling within roles. Those data reflect an earlier wave of AI and an economy before the surge in deployments from 2023 to 2025. Since 2024, the IMF has highlighted the opposite risk: faster diffusion can raise overall inequality unless proactive measures are in place. The two findings can be reconciled. Within specific jobs, AI narrows gaps. Across the broader economy, displacement, slower hiring of new entrants, and greater capital investment can widen gaps in employment and earnings. Policy must address the market-level failure: the lack of new rungs.
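A standard way to see how both statements can hold at once is to split total wage variance into a within-firm part and a between-firm part (the law of total variance). The sketch below uses hypothetical wages for two firms, not data from the studies cited: after adoption, pay compresses inside each firm while the adopter's average pulls away from the laggard's.

```python
from statistics import fmean, pvariance

def decompose_wage_variance(wages_by_firm: dict[str, list[float]]) -> dict[str, float]:
    """Split population wage variance into within-firm and between-firm parts.

    total = sum_f (n_f / N) * var_f  +  sum_f (n_f / N) * (mean_f - grand_mean) ** 2
    Each firm must contribute at least one wage.
    """
    all_wages = [w for wages in wages_by_firm.values() for w in wages]
    n_total = len(all_wages)
    grand_mean = fmean(all_wages)
    within = sum(len(ws) / n_total * pvariance(ws) for ws in wages_by_firm.values())
    between = sum(len(ws) / n_total * (fmean(ws) - grand_mean) ** 2
                  for ws in wages_by_firm.values())
    return {"within": within, "between": between, "total": pvariance(all_wages)}

# Hypothetical wages (arbitrary units) before and after AI adoption.
before = {"adopter": [40.0, 60.0, 80.0], "laggard": [40.0, 60.0, 80.0]}
after = {"adopter": [70.0, 80.0, 90.0], "laggard": [40.0, 45.0, 50.0]}

for label, firms in (("before", before), ("after", after)):
    parts = decompose_wage_variance(firms)
    print(label, {k: round(v, 1) for k, v in parts.items()})
```

In this toy case the within-firm component falls while the between-firm component rises enough that total dispersion grows. Empirical work usually applies the decomposition to log wages across many firms; raw levels are used here only to keep the numbers readable.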

AI and earnings inequality require new pathways, not empty promises

The third channel is institutional. Education systems were created around predictable task ladders. Students learned theory, practiced routine tasks in labs or internships, and then graduated into junior roles to build practical knowledge. AI disrupts this sequence. Many routine tasks are eliminated or consolidated into specialized tools. The remaining work requires judgment, integration, and complex coordination. This raises the skill requirements for entering the labor market. If the system remains unchanged, AI and earnings inequality will become a structural outcome rather than a temporary disruption.

The solution isn't a single program. It's a redesign of the pipeline. Universities should treat advanced AI as essential infrastructure—like libraries or labs—rather than a novelty. Every student in writing-intensive, data-intensive, or design-intensive programs should have access to computing resources, models, and curated data. Courses must shift from grading routine task outputs to evaluating processes, judgment, and verified collaboration with tools. Capstone projects should be introduced earlier, utilizing industry-mentored, work-integrated learning to replace the lost "busywork" on the job. Departments should track and share "first-job rates" and "time-to-competence" as key performance indicators. They should also receive funding, in part, based on improvements to these measures in AI-exposed fields. This is how an education system can address AI and earnings inequality—by demonstrating that beginners can still add real value in teams that use advanced tools.
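The two indicators proposed here need precise definitions before they can steer funding. A minimal sketch, assuming hypothetical definitions: the first-job rate is the share of a graduating cohort in a field-relevant job within six months, and time-to-competence is the median number of months from hire to an employer-agreed benchmark. The record fields and the six-month window are illustrative choices, not established standards.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional

@dataclass
class GraduateRecord:
    """Hypothetical per-graduate outcome record; None means 'not yet'."""
    months_to_first_job: Optional[float]   # months until a field-relevant job
    months_to_competence: Optional[float]  # months from hire to the benchmark

def first_job_rate(cohort: list[GraduateRecord], window_months: float = 6.0) -> float:
    """Share of the cohort holding a field-relevant job within the window."""
    if not cohort:
        raise ValueError("cohort is empty")
    placed = sum(
        1 for r in cohort
        if r.months_to_first_job is not None and r.months_to_first_job <= window_months
    )
    return placed / len(cohort)

def median_time_to_competence(cohort: list[GraduateRecord]) -> Optional[float]:
    """Median months to competence among graduates who have reached it."""
    times = [r.months_to_competence for r in cohort if r.months_to_competence is not None]
    return median(times) if times else None

cohort = [
    GraduateRecord(3.0, 9.0),
    GraduateRecord(5.0, 12.0),
    GraduateRecord(None, None),  # no relevant job yet
    GraduateRecord(8.0, None),   # hired late, benchmark not yet met
]
print(first_job_rate(cohort), median_time_to_competence(cohort))
# prints: 0.5 10.5
```

Graduates who have not yet reached the benchmark drop out of the median, so a program would need to report how many were counted alongside the figure; otherwise a cohort in which few reach competence can look deceptively fast.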

K–12 and vocational systems need a similar shift. Curricula should focus on three essential skills: statistical reasoning, structured writing, and systems thinking. Each is enhanced by AI rather than replaced by it. Apprenticeships should be expanded, not as a throwback, but with AI-specific safeguards, including tracking prompts and outputs, auditing decisions, and rotating beginners through teams to learn tacit standards. Governments can support this by mandating credit-bearing micro-internships tied to public projects and requiring firms to host apprentices when bidding for AI-related contracts. This reestablishes entry-level positions as a public good, rather than a cost burden for any single firm. It is the most efficient way to prevent AI and earnings inequality from worsening.

A realistic path to safeguards and ladders

What about the counterarguments? The first is that AI will create as many tasks as it eliminates. That may hold in the long run, but the transition costs are significant now. IMF estimates suggest that exposure may outpace normal retraining capacity, particularly in advanced economies where cognitive-intensive jobs are prevalent. Without targeted support, that friction means joblessness for beginners and stalled mobility for career switchers, both of which worsen AI and earnings inequality now.

The second is that AI helps beginners learn faster. It does, in firms that hire them. Field experiments in customer support and programming show substantial gains for less experienced workers when tools are built into workflows. However, these findings coexist with a decline in junior roles in AI-affected functions and continued consolidation of employer demand for those roles. Equalization within firms cannot offset exclusion in the broader market. The policy response should not be to ban tools. It should buy learners time by funding supervised practice, tying apprenticeships to contracts, and giving every student access to the same AI resources that employers use. This is how we align the learning benefits within firms with a fair entry market.

The third is that the evidence shows inequality falling. It does, but within occupations, in an earlier period, and in specific settings. The OECD's findings are encouraging, but they cover 2014 to 2018, before the widespread adoption of generative AI. Since 2023, deployment has accelerated and the benefits have become more concentrated. Inequality between firms is now the bigger issue, with productivity gains and capital investment clustered among AI-intensive companies. Research linking a higher "AI stock" to lower employment and wider wage gaps between firms should be taken seriously. It suggests that education and labor policy must also work as matching policy: preparing people for where jobs will exist and giving firms incentives to hire equitably.

So what should leaders do this academic year? Set three commitments and measure progress on each. First, every program in an AI-exposed field should publish a pathway: a sequence of foundational tasks that beginners can perform with tools while adding value, from day one through their first job. Second, every public contract involving AI should include a training fee that funds apprenticeships and micro-internships within the vendor's teams, with oversight of tool use. Third, every student should have a managed AI account with computing quotas and data access, along with training in attribution, privacy, and verification. These are straightforward, practical steps. They keep the door open for new talent while letting firms adopt AI fully. They are also the most cost-effective way to slow the growth of AI and earnings inequality before it escalates.
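Sizing the training fee is simple arithmetic once a rate is chosen. A minimal sketch, assuming a hypothetical levy expressed in basis points of contract value and a hypothetical all-in cost per apprenticeship slot; none of these figures come from the text or from any existing scheme:

```python
def funded_apprenticeships(contract_value: int, levy_bps: int,
                           cost_per_slot: int) -> tuple[int, int]:
    """Return (levy collected, apprenticeship slots it fully funds).

    Amounts are whole currency units; the levy rate is in basis points
    (100 bps = 1%). Integer arithmetic avoids floating-point rounding on money.
    """
    if contract_value < 0 or levy_bps < 0:
        raise ValueError("contract value and levy rate must be non-negative")
    if cost_per_slot <= 0:
        raise ValueError("cost per slot must be positive")
    levy = contract_value * levy_bps // 10_000  # rounds down to whole units
    return levy, levy // cost_per_slot

# Hypothetical example: a 20,000,000 contract, a 1.5% levy, 30,000 per slot.
levy, slots = funded_apprenticeships(20_000_000, 150, 30_000)
print(f"Levy: {levy:,}; funded slots: {slots}")
# prints: Levy: 300,000; funded slots: 10
```

Counting only fully funded slots is a conservative choice; a real scheme might pool remainders across contracts instead.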

Finally, we must be honest about power. Gains from AI will flow to the individuals and firms that control capital, data, and distribution. The IMF has suggested fiscal measures, including taxes on excess profits, updated capital income taxes, and targeted green levies, to prevent a narrow winner-take-most outcome and to support training budgets. Whether or not countries adopt those specific measures, the goal is correct: recycle a portion of the gains into ladders that promote mobility. Education alone cannot fix distribution, but it can make the rewards of learning real again, if we fund the pathways and track the progress.

The opening number still stands. Approximately 60% of jobs in advanced economies are exposed to AI, and a substantial portion of these jobs could be subject to task substitution. It's easy to say this technology will reduce wage gaps by helping less experienced workers. That might happen within firms. However, it won't occur in the broader market unless we rebuild the pathway into those firms. If we do nothing, AI and earnings inequality will increase. This will happen slowly as entry-level jobs decline, returns on experience and capital rise, and gaps widen between AI-heavy companies and others. If we take action, we can ensure that progress is both swift and equitable. The message is clear: treat novice skills as vital infrastructure; direct public funds to real training; share clear paths and results. This is how schools, employers, and governments can turn a delicate transition into widespread progress—and keep opportunities close to those at the bottom.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

ADP Research Institute. (2024, June 17). The rise—and fall—of the software developer.

Brynjolfsson, E., Li, D., & Raymond, L. (2023). Generative AI at Work (NBER Working Paper 31161). https://www.nber.org/papers/w31161

Burning Glass Institute. (2025, July 28). No Country for Young Grads.

International Labour Organization. (2023, August 21). Generative AI and Jobs: A global analysis of potential effects on job quantity and quality.

International Monetary Fund. (2024, January 14). Georgieva, K. AI Will Transform the Global Economy. Let’s Make Sure It Benefits Humanity.

International Monetary Fund. (2024, June 11). Brollo, F., et al. Broadening the Gains from Generative AI: The Role of Fiscal Policies (Staff Discussion Note SDN/2024/002).

Indeed Hiring Lab. (2025, September 23). AI at Work Report 2025: How GenAI is Rewiring the DNA of Jobs.

OECD. (2024, April 10). Georgieff, A. Artificial intelligence and wage inequality.

OECD. (2024, November 29). What impact has AI had on wage inequality?

Prassl, J., et al. (2025). Pathways of AI Influence on Wages, Employment, and Inequality. SSRN.