AI Productivity in Education: Real Gains, Costs, and What to Do Next

AI productivity in education is real but uneven, and adoption is shallow. Novices gain most; net gains require workflow redesign, training, and guardrails. Measure time returned and learning outcomes, not hype, and scale targeted pilots.

The most relevant number we have right now is small but meaningful. In late 2024 surveys, U.S. workers who used generative AI saved about 5.4% of their weekly hours. Researchers estimate that this translates to roughly a 1.1% increase in productivity across the entire workforce. That is not a breakthrough, but it is not trivial either. For a teacher or instructional designer working a 40-hour week, the saving amounts to just over two hours per week, assuming similar patterns hold in education. The key question for AI productivity in education is not whether the tools can create rubrics or outline lessons; they can. It is whether institutions will change their processes so that those regained hours lead to better feedback, stronger curricula, and fairer outcomes, without introducing new risks that offset the gains. The answer depends on where we look, how we measure, and what we decide to focus on first.
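The two-hour figure is simple arithmetic, sketched here for readers who want to plug in their own numbers. The function name and default are illustrative. The 5.4% share is the survey estimate cited above, and applying it to educators is an assumption rather than a measured result.

```python
def weekly_hours_saved(weekly_hours: float, share_saved: float = 0.054) -> float:
    """Hours returned per week if a worker saves `share_saved` of their hours.

    The 0.054 default is the survey estimate for generative AI users;
    whether it transfers to teaching staff is an assumption.
    """
    if weekly_hours < 0 or not 0 <= share_saved <= 1:
        raise ValueError("hours must be non-negative and share must be in [0, 1]")
    return weekly_hours * share_saved


# A 40-hour week at the 5.4% survey estimate.
print(f"{weekly_hours_saved(40):.2f} hours per week")  # 2.16 hours per week
```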

AI productivity in education is inconsistent and not straightforward

Most headlines suggest that AI advances benefit everyone. Early evidence, however, points to a bumpier road. In a randomized rollout at a large customer support operation, access to a generative AI assistant increased agent productivity by approximately 14% to 15% on average, with the largest improvements among less-experienced workers. This pattern matters for AI productivity in education. When novice teachers, new TAs, or early-career instructional staff have structured AI support, their performance moves closer to that of experienced educators. But in areas outside the model's strengths (tasks that require judgment, unique contexts, and local nuance), AI can mislead or even hinder performance. Field experiments with consultants show the same uneven results: strong improvements on well-defined tasks, and weaker or negative effects on more complex problems. The takeaway is clear. We will see significant wins in specific workflows, not universally, and the largest initial benefits will go to "upper-junior" staff and students who need the most support.

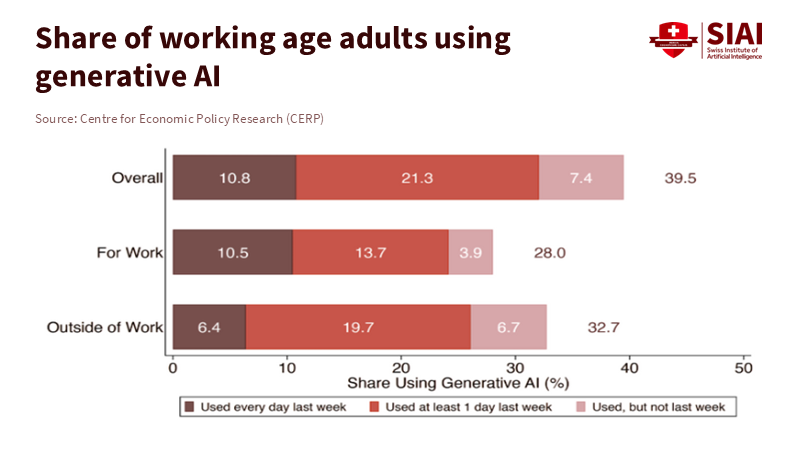

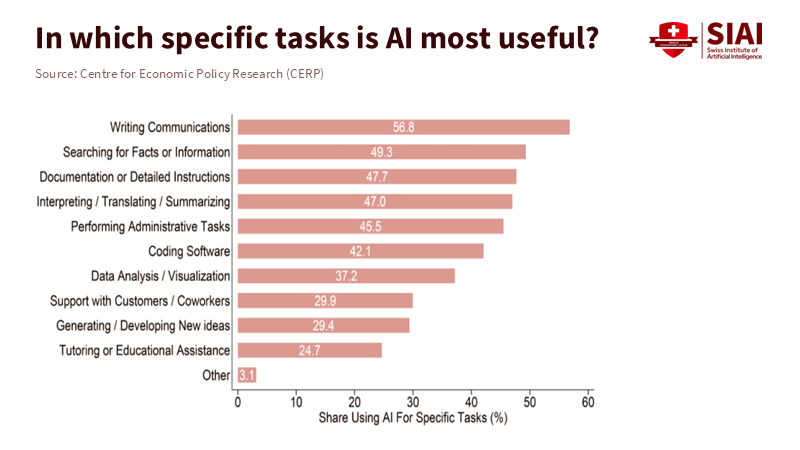

The depth of adoption is another barrier. U.S. survey data indicate that generative AI is spreading quickly overall, yet only a portion of workers use it regularly for their jobs. One national study found that 23% of employed adults used it for work at least once in the previous week. OpenAI's analysis suggests that about 30% of all ChatGPT usage is work-related, with the remainder being personal. In educational settings, this divide is evident: faculty and students test tools for minor tasks, while core course design and assessment remain unchanged. If only a minority use AI at work and even fewer engage deeply, system-wide productivity barely shifts. This isn't a failure of the technology; it signals that policy should focus on encouraging deeper use in the workflows that matter most for learning and development.

Improving AI productivity in education needs more than tools

The underlying technology is advancing rapidly, but AI productivity in education relies on several complementary factors: high-quality data, redesigned workflows, practical training, and robust safeguards. The Conversation's review of public-sector implementations is clear: productivity gains exist, but they require significant effort and resources to achieve. Integration costs, oversight, security, and change management consume time and funds. These aren't extras; they determine whether saved minutes translate into better teaching or are lost to additional work. In software development, controlled studies have shown significant time savings: developers complete tasks approximately 55% faster with AI pair programmers when tasks are well-defined and structured. However, organizations only realize these gains when they standardize processes, document prompts, and improve code review. Education is no different. To turn drafts into tangible outcomes, institutions need shared templates, model "playbooks," and clear guidelines for uncertain situations.

Costs and risk management also influence the rate of adoption. Hallucinations can be reduced with careful retrieval and structured prompts, but they won't disappear completely. Privacy regulations limit what student data can be processed by a model. Aligning curricula takes time and careful design. These challenges help explain why national productivity hasn't surged despite noticeable AI adoption. In the U.S., labor productivity grew about 2.3% in 2024 and 1.5% year-over-year by Q2 2025—an encouraging uptick after a downturn, but far from a substantial AI-driven change. This isn't a judgment on the future of education with AI; it reflects the context. The macro trend is improving, but significant gains will come from targeted, well-managed deployments in key educational processes, rather than blanket approaches.

Assess AI productivity in education by meaningful outcomes, not hype

We should rethink the main question. Instead of asking, "Has productivity really increased?", we should ask, "Where, for whom, and at what total cost?" For AI productivity in education, three outcome areas matter most. First, time saved on low-stakes tasks that can be redirected toward feedback and student interaction. Second, measurable improvements in assessment quality and course completion rates for at-risk learners. Third, institutional resilience: fewer bottlenecks in student services, less variability across sections, and shorter times from evidence to course updates. The best evidence we have suggests that when AI assists novices, the performance gap narrows. This presents a policy opportunity: target AI at bottlenecks for early-career instructors and first-generation students, and design interventions that allow the "easy" time savings to offset the "hard" redesign work that follows.

Forecasts should be approached cautiously. The Penn Wharton Budget Model predicts modest, non-linear gains from generative AI for the broader economy, with stronger effects expected in the early 2030s before diminishing as structures adapt. Applied to campuses, the lesson is clear. Early adopters who redesign workflows will capture significant benefits first; those who lag will experience smaller, delayed returns and may end up paying more for retrofits. That's why it's essential to measure outcomes: hours returned to instruction, reductions in grading variability, faster support for students who fall behind, and documented error rates in AI-assisted outputs. If we can't track these, we're not managing productivity; we're just guessing.

A practical agenda for the next 18 months

The way forward begins with focus. Identify three workflows where AI can improve both time savings and quality: formative feedback on drafts, generating aligned practice items with explanations, and triaging student services. In each, establish what the "gold standard" looks like without AI, then insert the model where it can take over repetitive tasks and support human decision-making rather than replace it. Use retrieval restricted to course-related content to minimize hallucinations. Establish a firm guideline: anything high-stakes, such as final grades or progression decisions, requires human review. Document this and provide training. Show the first improvements in time returned to instructors and faster responses for students. Evidence, not excitement, should guide the next wave of AI use.

Procurement should reward complementary tools. Licenses must include organized training, prompt libraries linked to the learning management system, and APIs for safe retrieval from approved course repositories. Create incentives for teams to share their workflows—how they prompt, review, and what they reject—so that knowledge builds across departments. Start with small, cross-functional pilot projects: a program lead, a data steward, two instructors, a student representative, and an IT partner. Treat each pilot as a mini-randomized controlled trial: define the target metric, gather a baseline, run it for a term, and publish a brief report on methods. This is how AI productivity in education transforms from a vague promise into a manageable, repeatable process.
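The pilot design above (a target metric, a baseline, one term of data) can be analyzed with very little machinery. The sketch below compares a target metric between pilot and comparison sections using a difference in means and a simple permutation test. The metric (feedback turnaround in hours), the function names, and the sample values are hypothetical placeholders, not results from any study.

```python
import random
from statistics import mean


def diff_in_means(pilot, comparison):
    """Difference in the target metric: pilot mean minus comparison mean."""
    return mean(pilot) - mean(comparison)


def permutation_p_value(pilot, comparison, n_permutations=10_000, seed=0):
    """Two-sided permutation test for the difference in means.

    Repeatedly shuffles the group labels and counts how often a difference
    at least as large as the observed one appears by chance.
    """
    rng = random.Random(seed)
    observed = abs(diff_in_means(pilot, comparison))
    pooled = list(pilot) + list(comparison)
    n_pilot = len(pilot)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        shuffled_diff = abs(mean(pooled[:n_pilot]) - mean(pooled[n_pilot:]))
        if shuffled_diff >= observed:
            extreme += 1
    return extreme / n_permutations


# Hypothetical placeholder data: feedback turnaround in hours per section.
pilot_sections = [30, 28, 35, 26, 31]
comparison_sections = [44, 39, 47, 41, 45]

effect = diff_in_means(pilot_sections, comparison_sections)
p_value = permutation_p_value(pilot_sections, comparison_sections)
print(f"difference in mean turnaround: {effect:.1f} hours, p = {p_value:.3f}")
```

A permutation test is used here only because it needs no distributional assumptions and no third-party libraries; with a handful of sections per arm, any such estimate should be reported with its method notes and treated as provisional.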

Measurement must accurately reflect costs: track computing and licensing expenses, as well as the "hidden" labor involved in redesigning and reviewing. If a course saves ten instructor hours per week on drafting but adds six hours for quality control because prompts drift, the net gain is four hours. That is still a win, but a smaller one, and it points to the next fix: stabilize prompts, use drafts to teach students to critique AI outputs, and automate permitted checks. Where effect sizes are uncertain, borrow from labor-market studies by measuring not only the outputs created but also the hours saved and reductions in variability. If novices close the gap with experts in rubric-based grading or writing accuracy, the benefits will show up as more consistent learning experiences and higher progression rates for historically struggling students.
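The ten-minus-six example can be kept as a small ledger so that hidden labor is recorded next to the savings. This is a minimal sketch; the class and field names are illustrative assumptions, and the redesign field is an optional extra beyond the two figures in the example.

```python
from dataclasses import dataclass


@dataclass
class WorkflowLedger:
    """Weekly instructor hours for one AI-assisted workflow (hypothetical schema)."""
    hours_saved: float            # e.g. drafting time no longer needed
    review_hours_added: float     # quality control on AI outputs
    redesign_hours_added: float = 0.0  # ongoing workflow redesign, if any

    def net_hours(self) -> float:
        """Hours actually returned after subtracting the hidden labor."""
        return self.hours_saved - self.review_hours_added - self.redesign_hours_added


# The example from the text: ten hours saved, six added for quality control.
course = WorkflowLedger(hours_saved=10, review_hours_added=6)
print(course.net_hours())  # 4.0
```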

Finally, maintain control of the narrative while grounding it in reality. Macro numbers will fluctuate, as quarterly productivity always does, and bold claims will continue to emerge. Maintain a close connection between campus evidence and policy. If pilot projects show a steady two-hour weekly return per instructor without a decline in quality, scale them up. If error rates increase in certain classes, pause to address retrieval or assessment design issues before expanding the scope of the intervention. Use clear method notes in your reports. If adoption lags, don't blame reluctance; instead, look for gaps in workflows and training. The economies that benefit most from AI are not the loudest; they are the ones that effectively pair technology with process and people, all while learning in public. This is how AI productivity in education becomes real and lasting.
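The scale-or-pause rule above can be written down explicitly so that every pilot is judged the same way. The sketch below is one possible reading, not a prescribed policy: "steady" is interpreted as every observed week meeting the two-hour threshold, and the parameter names and the error-rate comparison against a baseline are assumptions.

```python
from enum import Enum


class Decision(Enum):
    SCALE = "scale"
    PAUSE = "pause"
    CONTINUE = "continue pilot"


def pilot_decision(weekly_hours_returned, quality_change,
                   error_rate, baseline_error_rate, min_hours=2.0):
    """Apply the scale/pause rule to one pilot.

    weekly_hours_returned: hours returned per instructor, one value per week.
    quality_change: change in the chosen quality metric (negative = decline).
    error_rate / baseline_error_rate: documented error rates in AI-assisted
    outputs during the pilot and before it.
    """
    # Rising error rates take priority: fix retrieval or assessment design first.
    if error_rate > baseline_error_rate:
        return Decision.PAUSE
    steady_return = bool(weekly_hours_returned) and all(
        hours >= min_hours for hours in weekly_hours_returned
    )
    if steady_return and quality_change >= 0:
        return Decision.SCALE
    return Decision.CONTINUE


# Hypothetical pilot: three weeks at or above two hours, no quality decline.
print(pilot_decision([2.1, 2.3, 2.0], quality_change=0.0,
                     error_rate=0.02, baseline_error_rate=0.03))  # Decision.SCALE
```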

We started with a modest figure: a 1.1% productivity boost at the workforce level, driven by a 5.4% time savings among users. Detractors might view this as lackluster. However, in education, it is enough to shift the baseline if we treat it as working capital: time we reinvest in providing feedback, improving course clarity, and enhancing student support. The evidence shows us where the gains begin: at the "upper-junior" level, in routine tasks that free up expert time, and in redesigns that establish strong practices as standard. The risks are real, and the costs are not trivial. But we can set the curve. If we align incentives to deepen use in a few impactful workflows, purchase complementary tools instead of just licenses, and measure what students and instructors truly gain, the small increases will add up. That is the productivity story that matters. It's not about a headline figure. It's about the week-by-week time returned to the work that only educators can do.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Bick, A., Blandin, A., & Deming, D. J. (2024). The Rapid Adoption of Generative AI. NBER Working Paper w32966. (Adoption levels; work-use share.)

Bureau of Labor Statistics. (2025). Productivity and Costs, Second Quarter 2025, Revised; and related Productivity home pages. (U.S. productivity growth, 2024–2025.)

Brynjolfsson, E., Li, D., & Raymond, L. (2025). Generative AI at Work. Quarterly Journal of Economics 140(2), 889–944; and prior working papers. (14–15% productivity gains; largest effects for less-experienced workers.)

Dell’Acqua, F., McFowland III, E., Mollick, E. R., et al. (2023). Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality. HBS Working Paper. (Heterogeneous effects; frontier concept.)

Noy, S., & Zhang, W. (2023). Experimental Evidence on the Productivity Effects of Generative Artificial Intelligence. Science (2023) and working paper versions. (Writing task productivity, quality effects.)

OpenAI. (2025). How people are using ChatGPT. (Share of work-related usage ~30%.)

Penn Wharton Budget Model. (2025). The Projected Impact of Generative AI on Future Productivity Growth. (Modest, non-linear macro effects over time.)

St. Louis Fed. (2025). The Impact of Generative AI on Work Productivity. (Users save 5.4% of hours; ~1.1% workforce productivity.)

The Conversation / University of Melbourne ADM+S. (2025). Does AI really boost productivity at work? Research shows gains don’t come cheap or easy. (Integration costs, governance, and risk.)

GitHub / Research. (2023–2024). The Impact of AI on Developer Productivity. (Task completion speedups around 55% in bounded tasks.)
