When a Face Becomes a License: Deepfake NIL Licensing and the Next Lesson for Education

Deepfake NIL licensing will surge as content costs collapse. Schools must use contracts and authenticity tech to protect communities. Provenance and consent rebuild trust when faces become licensable assets.

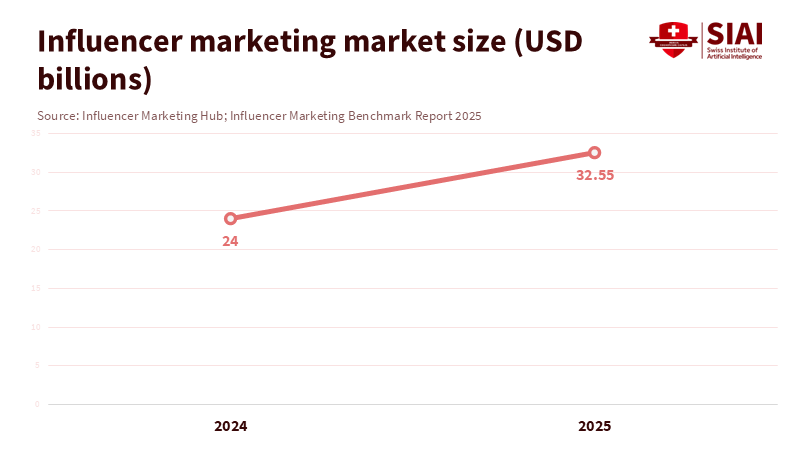

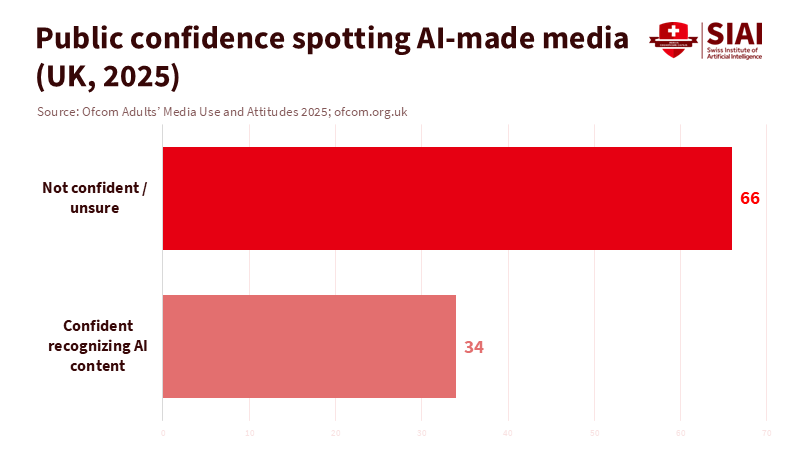

Only three in ten UK adults say they feel confident in spotting whether AI made an image, voice clip, or video. This finding from the country's communications regulator highlights a critical gap in public life: the difference between what we see and what we can trust. Meanwhile, the influencer market is projected to reach about $32.6 billion in 2025, with content volume growing and production costs decreasing. Put these two facts together, and a simple truth appears. Deepfake NIL licensing—the paid use of a person’s name, image, and likeness through synthetic media—will grow faster than our defenses unless schools, universities, and education ministries take action. This issue goes beyond celebrity appearances. It affects students, teachers, and institutions that now compete for attention like brands and creators do. Classrooms have become studios, and authenticity is now part of the curriculum.

Deepfake NIL licensing is changing the cost curve

The reason for this change is technological. In 2025, OpenAI released Sora 2, a model that generates hyper-realistic video with synchronized audio and precise control. OpenAI’s launch materials highlight improved physical realism. Major tech outlets report that typical consumer outputs run for tens of seconds per clip. The app now includes social features designed for quick remixing and cameo-style participation. In short, anyone can create convincing short videos at scale using someone else’s licensed (or unlicensed) face and voice. When credible tools drop the cost of production to near zero, licensing becomes the business model. The market will follow the path of least resistance: pay creators or their agents a fee for permissioned “digital doubles” and then mass-produce content featuring them. This shift is already visible in pilot platforms that let users record a brief head movement and voice sample for cameo use, complete with revocable permissions and embedded watermarks. However, those marks don’t always hold up against determined adversaries.

Policy is progressing, but not quickly enough. The EU’s AI Act mandates that AI-generated or AI-modified media (deepfakes) must be clearly labeled. YouTube now requires creators to disclose realistic synthetic media. Meta and TikTok have introduced labeling and, importantly, support for Content Credentials (the C2PA provenance standard), so that provenance metadata travels with files across platforms. However, these disclosures rely on adoption, which is inconsistent. A recent study indicates that fewer than half of popular AI image generators apply adequate watermarking, and deepfake labeling remains uncommon across the ecosystem. This gap in rules, enforcement, and infrastructure gives deepfake NIL licensing room to thrive in gray areas. At the same time, misuse spreads through the same channels.

The economics are compelling. Goldman Sachs estimates that the broader creator economy could reach $480 billion by 2027. Influencer marketing alone has jumped from $24 billion in 2024 to an estimated $32.55 billion in 2025, a rise of roughly 36 percent in a single year. As volume increases, brands will treat faces as licensable assets rather than as individual bookings to juggle. Unions have noticed this trend. The SAG-AFTRA agreements for 2024–2025 set minimum standards for consent, compensation, and control over digital replicas, including voice, creating a framework that education and youth sports can follow. However, the same period also revealed how fragile these safeguards can be. Investigations and watchdogs have reported extensive non-consensual deepfake pornography, rising synthetic-fraud rates, and the ease with which realistic fakes can bypass naive checks. Deepfake NIL licensing will flourish in this environment.

Deepfake NIL licensing and campus policy

Education currently sits at a crossroads where NIL, influence, and duty of care intersect. In US college sports, a court-supervised settlement now allows schools to share up to $20.5 million each year directly with athletes for NIL starting in the 2025–26 season. At the same time, the collegiate NIL market itself is often valued at around $1.5 billion, while the broader influencer economy that students engage with is much larger. Many students are already micro-influencers, and many departments now function like media shops. In this climate, deepfake NIL licensing becomes a campus issue because licensing—and mis-licensing—can occur on school accounts, in team agreements, and within courses that require students to create and publish. The ethical guidelines on campus must keep up with the financial realities.

The risk profile is real. Channel 4 News, covered by The Guardian, reported that nearly 4,000 celebrities had been targeted by deepfake porn, which received hundreds of millions of views over just a few months. Teen creators and student-athletes face the same tools and risks, often without protection. Regulators and platforms stress labeling and disclosure, but confidence remains low: only 30% of UK adults feel they can judge whether media was AI-generated. At the same time, surveys in the US show that large majorities want labels because they cannot trust their ability to detect such content. Schools cannot pass this responsibility to platforms. Athletic departments, communications offices, and student services need consent processes, model releases that clearly cover digital doubles, and default provenance tagging on official outputs. This is necessary to protect students who license their likeness as well as those who never intended to do so.

Deepfake NIL licensing needs an authenticity infrastructure

Rules are essential, but infrastructure is crucial. The C2PA “Content Credentials” standard provides media files with a verifiable provenance trail. Major platforms and manufacturers have begun to adopt it, from TikTok’s automatic labeling of credentialed content to camera makers that include credentials in their devices. The Content Authenticity Initiative reports thousands of organizations are now involved. This is significant for education because provenance can be integrated into workflows: a journalism school, a design program, or a district communications team can require Content Credentials during creation and maintain them through editing and publishing. It is not a complete solution; it is a safety measure. Where labels are present, trust can grow. Where they are absent, disclosure rules become optional.

Yet adoption is inconsistent. A 2025 study found that only about 38% of popular image generators use adequate watermarking, and only about 18% employ meaningful deepfake labeling. Meanwhile, platform policies remain fluid. Meta announced labeling in early 2024 and later adjusted its placement and scope. YouTube’s disclosure requirement is now active, and TikTok has expanded auto-labels for media with credentials. Schools should treat these as baselines rather than guarantees. The transparency provisions in the EU AI Act offer a stronger framework and will influence global practices, but institutions cannot wait for uniform enforcement to arrive.

What educators should do now about deepfake NIL licensing

Start with contracts instead of code. Every school involved with student media or athletics should update release forms to include explicit consent, scope, duration, revocation, and compensation for digital replicas, following the principles of transparency, consent, compensation, and control present in recent SAG-AFTRA deals. The form must address voice clones, face swaps, and synthetic narration and specify whether training uses are permitted. For minors, obtain consent from a parent or guardian. For international programs, align forms with the EU AI Act’s disclosure requirements to prevent cross-border confusion. Then support the paperwork with technology: require Content Credentials on all official outputs, enable automatic credential preservation in editing tools, and keep a registry of authorized digital doubles so staff can verify if a “cameo” is licensed.
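As a rough illustration of the registry idea above, the sketch below models one release form as a record and lets staff ask whether a given use of a person's likeness is licensed on a given date. It is a minimal sketch under stated assumptions, not a reference implementation: the class names, field names, and use labels such as "voice_clone" are hypothetical, and a real system would also need identity verification, audit logs, and legal review.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Optional

TRAINING = "training"  # hypothetical label for model-training use


@dataclass
class ReplicaLicense:
    """One signed release for a digital double (all field names are hypothetical)."""
    person_id: str
    uses: frozenset[str]            # scope, e.g. {"voice_clone", "face_swap"}
    valid_from: date                # duration: first day covered
    valid_until: date               # duration: last day covered
    training_allowed: bool = False  # whether training uses are permitted
    is_minor: bool = False
    guardian_consent: bool = False  # needed when is_minor is True
    compensation_terms: str = ""    # free-text pointer to the agreed terms
    revoked_on: Optional[date] = None

    def permits(self, use: str, on: date) -> bool:
        """Return True only if this release covers `use` on the date `on`."""
        if self.is_minor and not self.guardian_consent:
            return False
        # Revocation takes effect on the revocation date itself.
        if self.revoked_on is not None and on >= self.revoked_on:
            return False
        if not (self.valid_from <= on <= self.valid_until):
            return False
        if use == TRAINING:
            return self.training_allowed
        return use in self.uses


class ReplicaRegistry:
    """In-memory registry of authorized digital doubles."""

    def __init__(self) -> None:
        self._by_person: dict[str, list[ReplicaLicense]] = {}

    def register(self, lic: ReplicaLicense) -> None:
        self._by_person.setdefault(lic.person_id, []).append(lic)

    def is_licensed(self, person_id: str, use: str, on: date) -> bool:
        """True if any release on file for this person permits the use."""
        return any(
            lic.permits(use, on) for lic in self._by_person.get(person_id, [])
        )


registry = ReplicaRegistry()
registry.register(
    ReplicaLicense(
        person_id="athlete-001",
        uses=frozenset({"voice_clone"}),
        valid_from=date(2025, 8, 1),
        valid_until=date(2026, 7, 31),
    )
)
print(registry.is_licensed("athlete-001", "voice_clone", date(2025, 9, 1)))  # True
print(registry.is_licensed("athlete-001", "face_swap", date(2025, 9, 1)))    # False
```

The design choice here is to deny by default: an unknown person, an uncovered use, an expired window, a revoked release, or a minor without guardian consent all return False, so a "cameo" is treated as unlicensed unless a release on file says otherwise.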

The curriculum also needs an upgrade. Media and information literacy should make checking provenance and identifying platform-specific labels standard practices. UNESCO warns that two-thirds of creators do not systematically fact-check before posting; PISA materials show how weak digital practices impact learning. Teach students how to interpret a Content Credentials panel, how to disclose synthetic edits, and how to file a takedown when a deepfake targets them. Develop lab exercises around real platform policies on YouTube, Meta, and TikTok. Teach detection skills, but do not base trust solely on visual judgment. The evidence is clear: the public’s ability to identify AI is limited, and in some scenarios, negligible. Trust should come from reliable processes rather than guesswork. Deepfake NIL licensing will hinge on how consent is gathered, how provenance is maintained, and how misuse is managed before it becomes a significant issue.

The gap between what people see and what they can verify has not closed. Only 30% of UK adults feel confident identifying AI-generated media, even as the creator and influencer economies fuel the content boom. This is the backdrop for deepfake NIL licensing: a system that will monetize faces on a large scale, reward permissioned clones, and entice bad actors to bypass consent. Education cannot remain on the sidelines. It is the place where young creators learn the rules of the attention economy. It is where student-athletes negotiate NIL for the first time. It is where public trust in knowledge is either rebuilt or lost. The call to action is clear: align releases with the realities of digital replicas, require provenance by default, teach disclosure and verification as essential skills, and prepare for the day a viral fake targets your institution. If we do this, schools will not just keep pace with the platforms. They will set the standard for a public space where licensing a face is straightforward, but earning trust remains the goal.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Adobe. (2024). Authenticity in the age of AI: Growing momentum for Content Credentials across social media platforms and AI companies.

Coalition for Content Provenance and Authenticity (C2PA). (2025). C2PA technical specification v2.2.

Content Authenticity Initiative. (2025). 5,000 members: Building momentum for a more trustworthy digital world.

European Parliament. (2025, Feb. 19). EU AI Act: First regulation on artificial intelligence.

Freshfields. (2024, Jun. 25). EU AI Act unpacked #8: New rules on deepfakes.

Goldman Sachs. (2023, Apr. 19). The creator economy could approach half-a-trillion dollars by 2027.

Influencer Marketing Hub. (2025, Apr. 25). Influencer Marketing Benchmark Report 2025.

Meta. (2024, Feb. 6). Labeling AI-generated images on Facebook, Instagram and Threads.

Ofcom. (2024, Nov. 27). Four in ten UK adults encounter misinformation; only 30% confident judging AI-generated media.

OpenAI. (2025, Sept. 30). Sora 2 is here.

OpenAI. (2024, Feb. 15). Sora: Creating video from text.

Opendorse. (2025, Jul. 1). NIL at Four: Monetizing the new reality.

Reuters. (2025, Jul. 10). Industry video-game actors pass agreement with studios for AI security.

SAG-AFTRA. (2024–2025). Artificial Intelligence: Contract provisions and member guidance.

The Guardian. (2024, Mar. 21). Nearly 4,000 celebrities found to be victims of deepfake pornography.

TikTok Newsroom. (2024, May 9). Partnering with our industry to advance AI transparency and literacy. https://newsroom.tiktok.com.

YouTube Help. (2024). Disclosing use of altered or synthetic content.

Zhao, A., et al. (2025, Oct. 8). Adoption of watermarking for generative AI systems in the wild. arXiv.