Schools vs. Social Media Polarization: Make Accuracy the Product

Social media polarization is a feature of engagement-driven design, not a glitch.
Schools should shift from neutrality to making accuracy the product students share.
Prebunking, accuracy prompts, lateral reading, and policy partnerships raise information fidelity.

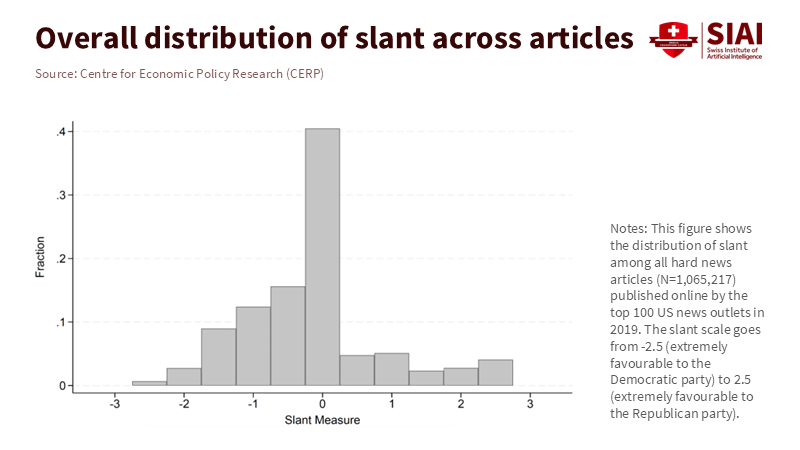

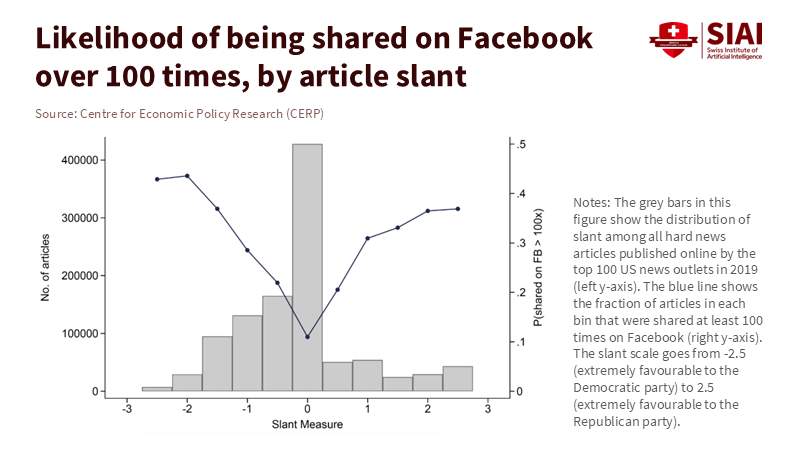

A single number tells the story: on Facebook, the average news consumption of liberals and conservatives differs by 1.5 times the ideological distance between a typical New York Times article and a typical FoxNews.com article. Most published news is moderate, yet extreme content gets shared the most. This is social media polarization in practice, not in theory, and it explains why a platform optimized for engagement learns to promote more extreme views. About half of U.S. adults report getting news from social media at least occasionally, while trust in national media sits near record lows. In cable news, primetime audiences tend to favor the loudest brands, and centrist options fall by the wayside. The lesson for schools and universities is clear: we cannot make feeds “neutral,” but we can make accuracy the product that students see, share, and are rewarded for promoting. This is an education challenge more than a platform wish list.

Reframing social media polarization as a feature, not a bug

The business model matters. Engagement-based ranking and ad-driven attention reward emotional and identity-threatening posts. Recent randomized audits of Twitter/X show that engagement-based ranking amplifies out-group hostility and emotionally charged language beyond what users say they prefer, and users report feeling worse after seeing what the algorithm promotes. This is a design feature, not a glitch, and it predicts more social media polarization over time. Education leaders should stop assuming platforms will balance themselves if we complain loudly enough. They won’t.

The supply of news has also changed. A large-scale analysis of U.S. TV news from 2012 to 2022 finds that cable channels have diverged from each other and from broadcast news, driven not only by opinion shows but also by which news topics each outlet covers and the language it uses. What gets covered, and how, has grown more distinct across outlets. This broadcast-to-cable gap mirrors what we see online when platforms learn our tastes and adapt to them. With 53% of U.S. adults getting news from social media, classrooms inherit a divided information world the moment students unlock their phones.

Audience preferences also show up in remote controls and clicks. In August 2025, Fox News averaged about 2.3 million primetime viewers, while CNN’s primetime audience fell nearly 60% from the same month a year earlier. Reading this only as a political story would be a mistake; it is also a market signal that sharper framing wins. In 2019, Japan’s environment minister, Shinjiro Koizumi, said the fight against climate change had to be fun, cool, and appealing. The phrasing grated, but the logic was sound: in politics, dull content loses attention, and attention is the currency online. Social media polarization feeds on this simple fact.

Focus on fidelity, not neutrality, in social media polarization

The education sector should give up the goal of depolarizing platforms; that lever is not ours to pull. Instead, we can change what gains traction in student networks: the accuracy and credibility of information. The evidence points to three practical approaches. First, prebunking works. Field tests of short videos that expose common manipulation tactics before viewers encounter them improved people’s ability to recognize misinformation in Eastern Europe, and prebunking is now part of Google Jigsaw’s playbook in Germany and beyond. Inoculation effects fade and need refreshing, but the cost is minimal and the approach scales to the size of the platforms.

Second, accuracy prompts can change sharing behavior. Across survey experiments with social-media-style headlines and a field experiment on Twitter (now X), simple reminders to consider accuracy before posting reduced willingness to share false headlines and improved the quality of content shared. These prompts are content-neutral, quick to implement in learning management systems and campus apps, and compatible with free expression. They do not ban speech; they change the default question students ask themselves.

Third, teach lateral reading as a core academic skill rather than a niche digital-literacy elective. Curricula based on Civic Online Reasoning have produced classroom gains by training students to leave a page, open new tabs, and check what other sources say about a site and its claims, instead of judging a single page on its own. Studies from 2023 to 2025 have extended these methods across subjects and grade levels. When teachers model lateral reading, students become better at distinguishing credible claims from unreliable ones, even when slick visuals and scientific jargon are present.

What educators can do about social media polarization

Start with measurement. Choose three metrics to track monthly in classes or student groups: (1) the share of sources students cite that have accountable ownership and transparent corrections; (2) the proportion of shared items that pass a peer lateral-reading check; (3) the change in belief accuracy on contested claims before and after discussion. Short fact-checking quizzes on current events can both reinforce the material and supply the before-and-after data. Publish anonymized class dashboards to make improvements visible. This reframes social media polarization as a learning outcome instead of a problem to bemoan. For methodological rigor, sample claims equally from left- and right-leaning outlets and report results by topic, not ideology.
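As a rough illustration, the three metrics could be computed from a simple monthly log. The sketch below is a minimal Python version built on hypothetical record layouts (`SharedItem`, `ClaimResponse`) and helper names; how a class actually decides what counts as “accountable ownership” or a passed peer check is left to the instructor. It also draws equal numbers of claims from left- and right-leaning pools and reports belief-accuracy change by topic, as the paragraph recommends.

```python
import random
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SharedItem:
    """One source a student cited or shared (hypothetical record layout)."""
    accountable_owner: bool      # ownership is publicly identifiable
    publishes_corrections: bool  # outlet runs a transparent corrections page
    passed_peer_check: bool      # a peer's lateral-reading check confirmed it


@dataclass
class ClaimResponse:
    """One student's judgement of one contested claim, before and after discussion."""
    topic: str
    correct_before: bool
    correct_after: bool


def rate(flags):
    """Fraction of True values; 0.0 for an empty input."""
    flags = list(flags)
    return sum(flags) / len(flags) if flags else 0.0


def balanced_claim_sample(claims_by_lean, per_side, seed=0):
    """Draw the same number of claims from left- and right-leaning outlet pools."""
    rng = random.Random(seed)
    return (rng.sample(claims_by_lean["left"], per_side)
            + rng.sample(claims_by_lean["right"], per_side))


def monthly_metrics(items, responses):
    """Compute the three monthly metrics for one class or student group."""
    # Metric 1: sources with accountable ownership AND transparent corrections.
    accountable = rate(i.accountable_owner and i.publishes_corrections for i in items)
    # Metric 2: shared items that passed a peer lateral-reading check.
    peer_pass = rate(i.passed_peer_check for i in items)
    # Metric 3: change in belief accuracy, grouped by topic rather than ideology.
    by_topic = defaultdict(list)
    for r in responses:
        by_topic[r.topic].append(r)
    accuracy_change = {
        topic: rate(r.correct_after for r in rs) - rate(r.correct_before for r in rs)
        for topic, rs in by_topic.items()
    }
    return {
        "accountable_source_share": accountable,
        "peer_check_pass_rate": peer_pass,
        "accuracy_change_by_topic": accuracy_change,
    }
```

Because each metric is a plain proportion or a difference of proportions, month-to-month changes can be posted to a class dashboard without listing individual names.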

Incorporate prebunking into the curriculum. In the first week of each term, run a 20-minute inoculation session: two short videos on common manipulation tactics (such as false dichotomies and scapegoating), a quick worksheet for spotting these tactics, and a reflection question: “Where have you seen this tactic online?” Repeat a “booster” in weeks five and ten. Schools with screensavers or internal apps can rotate prebunking clips there as well. The logistics are simple: the videos are free and can be adapted to local needs. Evidence suggests they make students less likely to fall for the next piece of viral misinformation.

Implement accuracy prompts wherever students compose or share their work. In LMS discussion forums, add a pre-submit checkbox (“I’ve thought about accuracy”) along with an optional link to a quick lateral-reading guide. In campus emails and student newsrooms, test a footer like “Want to help classmates? Share something true.” These small changes reduce the spread of misinformation without editorializing about politics. Instructors can pilot prompts in one section and compare outcomes against a control section over four weeks, then report the results to the dean in a short summary. Strong studies indicate that even a single nudge can improve what people share next.
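One way to analyze the four-week pilot is sketched below, assuming each student’s outcome is a single number such as the share of their posts that passed a peer lateral-reading check (a hypothetical measure). It uses a permutation test, a choice made here rather than anything the cited studies prescribe: shuffle the prompted/control labels many times and see how often chance alone produces a gap as large as the one observed.

```python
import random


def mean(xs):
    return sum(xs) / len(xs)


def permutation_test(prompted, control, n_resamples=10_000, seed=0):
    """Difference in means (prompted minus control) and a two-sided permutation p-value."""
    rng = random.Random(seed)
    observed = mean(prompted) - mean(control)
    pooled = list(prompted) + list(control)
    n = len(prompted)
    extreme = 0
    for _ in range(n_resamples):
        # Relabel students at random, as if the prompt had no effect.
        rng.shuffle(pooled)
        diff = mean(pooled[:n]) - mean(pooled[n:])
        # Small tolerance so float rounding does not hide ties with the observed gap.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1
    # Adding 1 to both counts keeps the estimated p-value strictly positive.
    return observed, (extreme + 1) / (n_resamples + 1)
```

With only one prompted section and one control section, a gap can reflect differences between the sections (instructor, time slot, cohort) as much as the prompt itself, and shuffling individual students treats them as independent. Randomizing students to conditions, or rotating the prompt across several sections, makes the summary for the dean more trustworthy.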

Align assessment with fidelity. Reward citations that demonstrate lateral reading, such as a link to an “About” page that shows funding or to an independent profile that outlines editorial standards. Penalize cherry-picking the one blog post that supports a claim when higher-credibility sources say otherwise. Provide clear grading criteria: provenance, transparency, independence, and corroboration each earn a point. Avoid vague “balance” criteria; students will learn to game symmetry instead of pursuing truth. This approach reduces social media polarization in the classroom by making accuracy, not outrage, the quality that earns grades.
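The four-point rubric translates directly into a scoring function. The sketch below is illustrative: the article names the criteria but does not define them precisely, so the comments give one plausible reading, and the `penalty` for cherry-picked sources is a hypothetical weight, not a recommended value. There is deliberately no “balance” field.

```python
from dataclasses import dataclass, fields


@dataclass
class CitationCheck:
    """Rubric flags for one citation; one hypothetical reading of each criterion."""
    provenance: bool     # owner and funding identified (e.g. via an "About" page)
    transparency: bool   # editorial standards and corrections policy are published
    independence: bool   # checked against an independent profile of the outlet
    corroboration: bool  # at least one other credible source supports the claim


def score_citation(check: CitationCheck) -> int:
    """One point per criterion met, from 0 to 4."""
    return sum(getattr(check, f.name) for f in fields(check))


def score_assignment(checks, cherry_picked=0, penalty=1.0):
    """Mean citation score minus a penalty per cherry-picked source, floored at zero.

    `cherry_picked` counts citations the grader found contradicted by
    higher-credibility sources; `penalty` is a hypothetical weight.
    """
    if not checks:
        return 0.0
    mean_score = sum(score_citation(c) for c in checks) / len(checks)
    return max(0.0, mean_score - penalty * cherry_picked)
```

Keeping each criterion as a separate yes/no flag makes the grade explainable to students: they can see exactly which check a citation failed.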

Policy and platform responsibility in social media polarization

Build platform partnerships on existing law. In the EU, the Digital Services Act requires very large platforms to assess systemic risks, including manipulation and misinformation, and to publish the steps they take to mitigate those risks. In the U.K., Ofcom’s Online Safety framework is rolling out codes that encourage services to manage recommendation algorithms for children and to address illegal harms at scale. Universities can use these frameworks to request data access for research collaborations and to pilot features that mitigate risks in student communities. Ask platforms for campus-wide accuracy prompts on recommended content during election weeks, and for aggregated data on what students click.

Be mindful of equity. Surveys indicate that news via social feeds is particularly important for young people and for communities that traditional media have historically underserved. That is a reason to improve feeds, not to shame those who use them. Media-literacy studies in diverse settings show gains in recognizing false claims when instruction is practical and repeated. Schools should provide prebunking materials in the languages students speak at home and assess outcomes by subgroup rather than only in aggregate. When students see their actual information habits respected, they adopt accuracy norms more quickly.
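Reporting by subgroup rather than only in aggregate can be as simple as grouping pre/post quiz scores before averaging. This minimal sketch assumes records of (subgroup, pre-score, post-score), for example with home language as the subgroup; the `min_cell` threshold of 10 is an assumption, reflecting the common practice of suppressing very small groups so anonymized dashboards do not identify individual students.

```python
from collections import defaultdict


def gains_by_subgroup(records, min_cell=10):
    """Mean pre-to-post gain overall and per subgroup.

    records: iterable of (subgroup, pre_score, post_score) tuples.
    Subgroups with fewer than `min_cell` students (hypothetical threshold)
    are reported as None so small groups cannot be singled out.
    """
    groups = defaultdict(list)
    for subgroup, pre, post in records:
        groups[subgroup].append(post - pre)
    all_gains = [g for gains in groups.values() for g in gains]
    overall = sum(all_gains) / len(all_gains) if all_gains else None
    by_group = {
        name: (sum(gains) / len(gains) if len(gains) >= min_cell else None)
        for name, gains in groups.items()
    }
    return overall, by_group
```

An aggregate gain can look healthy while one language group shows no improvement at all; reading `overall` next to `by_group` makes that gap visible.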

Prepare for the critique that any intervention is political. The answer is to remain content-neutral wherever possible. Prebunking targets tactics, not ideologies. Accuracy prompts ask everyone the same question. Lateral reading checks sources and evidence, not partisan labels. When content decisions are unavoidable, such as in response to a health hoax, pair the policy with transparency: publish the rule, document the reasoning, and offer a straightforward appeals process tied to external standards, such as retraction policies or corrections pages. Platforms are private companies; schools are public institutions with a specific mission. The guiding principle is openness about methods and metrics, not false neutrality.

Connect the classroom to the civic sphere. Create student “verification desks” that collaborate with local newsrooms. Let students run rapid lateral-reading checks on trending local rumors, with faculty oversight, and share the results in concise visual summaries. The goal is not to combat every falsehood but to normalize public responsibility for accuracy. Cable and social media incentives may still lean toward drama, but local information improves when young people can provide clear, sourced corrections before rumors spread widely.

Address well-being alongside accuracy. Short bursts of political content can spike outrage and then lower well-being. Teach “cool-down” habits as part of media literacy: pause before resharing, switch to non-political content, check one credible source, and then decide. These steps sound simple, but they work because they redirect attention, which in turn changes what the algorithm learns. If accuracy is the product, attention is the factory floor.

When schools adopt fidelity metrics, they should contribute anonymized results to policy discussions. Regulators in Brussels and London now require risk assessments and are drafting codes for recommendation systems. Data from educational pilots (which prompts worked, which adjustments helped students, and which transparency features students actually used) can inform balanced, speech-protective standards. The goal is not to take political sides in a dean’s office but to demonstrate that design choices can reduce social media polarization without favoring any ideology.

Return to market reality. Platforms will keep promoting messages that are fun, cool, and appealing in order to grow their audiences. Rather than condemning this, educators should make accuracy attractive. Assignments should require clean visuals, engaging hooks, and human-centered storytelling linked to reliable sources. When accurate work looks appealing and spreads widely, students learn a valuable skill: how to combine truth with effective presentation. That skill is essential for competing in feeds that favor style, and it upholds the academic mission in an attention-driven economy.

What changes now? This perspective reframes social media polarization from a problem we cannot fix into a learning goal we can influence. The evidence for prebunking, accuracy nudges, and lateral reading is strong enough to act on in the 2025–26 school year. The regulatory framework gives schools leverage to request simple, content-neutral platform features that improve fidelity and reduce the reach of low-quality claims. The media market reminds us that attention naturally gravitates toward engaging frames. Education can shift that default one student network at a time.

Precision over balance

We began with the striking 1.5-times gap in the news that liberals and conservatives consume. We conclude with a choice. We can complain about feeds and wait for a neutrality that will never come. Or we can teach, measure, and design for accuracy, knowing that algorithms will reward whatever captures attention. The classroom is where we make reliable content stand out. The campus is where we demonstrate transparency and publish our methods. The network is where students learn to prebunk, pause, check, and share well-sourced claims compellingly. If we succeed, the next cohort will still scroll quickly, but they will also slow down, verify, and circulate truth. This is not a utopia; it is a realistic strategy to reduce social media polarization this year. Platforms will keep chasing attention; schools should prioritize fidelity and make it win.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Adweek/TVNewser. (2025, Sept. 4). Here are the cable news ratings for August 2025. Retrieved Sept. 4, 2025.

AlgorithmWatch. (2023). A guide to the Digital Services Act.

Braghieri, L., Eichmeyer, S., Levy, R., Möbius, M., Steinhardt, J., & Zhong, R. (2024). Article-level slant and polarization of news consumption on social media (CEPR DP19807).

European Commission. (2024). EU Digital Services Act: transparency and systemic risk mitigation.

Gallup. (2024, Oct. 14). Americans’ trust in media remains at trend low.

Google Jigsaw, Cambridge SDML, et al. (2023). Prebunking expansion in Europe (AP report).

Hosseinmardi, H., et al. (2025). Unpacking media bias in the growing divide between cable and network news. Scientific Reports.

Knight First Amendment Institute / Milli, S., et al. (2025). Engagement, user satisfaction, and the amplification of divisive content on social media.

MarketWatch / Reuters. (2019). “Climate fight must be ‘sexy, fun and cool’,” remarks by Shinjiro Koizumi.

MIT IDE. (2024). Reducing misinformation sharing with accuracy prompts (research brief).

Penn Annenberg. (2025). How cable news has diverged from broadcast news.

Pennycook, G., et al. (2021). Shifting attention to accuracy can reduce misinformation online. Nature.

Pew Research Center. (2025). Social media and news fact sheet.

Stanford History Education Group / McGrew, S., & Breakstone, J. (2023). Civic Online Reasoning across the curriculum. AERJ Open.

U.K. Government / Ofcom. (2024–25). Online Safety Act explainer; First codes of practice.