Throughput Over Tenure: How LLMs Are Rewriting “Experience” in Education and Hiring

AI is recomposing jobs, not erasing them.
Throughput with judgment beats years of experience.
Schools and employers must teach, verify, and hire for AI-literate workflows.

Current generative AI and related technologies have the technical potential to automate work activities that absorb 60% to 70% of employees' time today. This is not a forecast for 2040; it describes what is already feasible, according to one of the most extensive reviews of work activities to date. Yet the jobs data have not collapsed. Recent macro studies show no significant change in unemployment or hiring patterns since late 2022, even in the occupations most exposed to AI. The tension is clear: tasks are changing quickly, while overall job counts are changing slowly. That gap shows what matters now. The market rewards people who deliver results quickly and reliably. The point is not having ten years of experience; it is being able to turn ten hours of work into what used to take ten days, consistently, with sound judgment, and with the right tools. This new measure of expertise will increasingly shape how schools and employers are judged.

From “replacement” to “recomposition”

The key question has shifted from whether AI will replace jobs to where demand is heading and how teams are being restructured. Recent data from the global online freelance market illustrate the trend. In the occupations most exposed to generative AI, the number of contracts fell by about 2% and earnings by about 5% after the late-2022 wave of new models. Meanwhile, searches for AI-related skills and postings for AI-related jobs surged, and new, higher-value contract types emerged outside core technical fields. In short, some routine work is shrinking while adjacent work that requires AI skills is growing. The total volume of work is not the whole story; the mix of work is what counts.

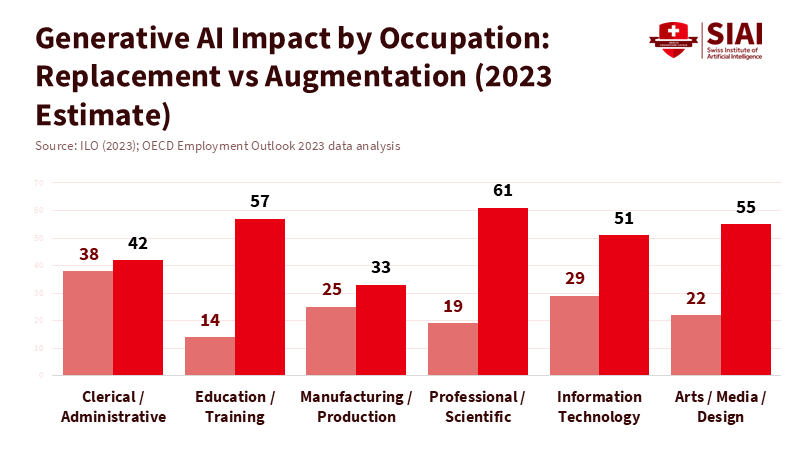

A broader view shows the same pressure for change. The OECD’s 2023 Employment Outlook estimated that 27% of jobs in member countries are in occupations at high risk of automation across a range of technologies, with AI a significant factor. The World Economic Forum's 2023 survey found that businesses expect 42% of business tasks to be automated by 2027 and anticipate major disruption to core skills. Yet an ILO global study finds that the main short-term effect of generative AI is augmentation rather than full automation, with only a small share of jobs in the highest exposure category. This resolves the apparent contradiction. Tasks change quickly, but occupations adapt more slowly, and teams are reorganized around what the tools do well. The net result is a shift in the skills each role requires, not a simple replacement of jobs.

Education is directly caught up in this shift; universities, colleges, and training providers feel these changes firsthand. If programs keep emphasizing seat time and tenure alone, they will fail learners. Training should focus on throughput: producing repeatable, verifiable outputs that combine human insight with model support. That shift requires clearer standards for tool use, demonstrations of applied judgment, and faster ways to verify skills. Education policy must also recognize who may be left behind as task requirements change. Current data reveal disparities by gender and prior skill level, and policy should address them directly.

When tools learn, “experience” changes

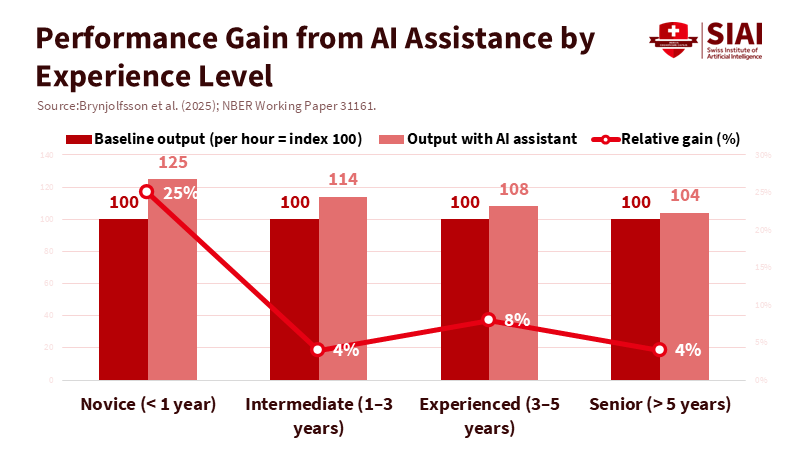

Research indicates that AI assistance raises output and narrows the gap between less- and more-experienced workers. In a large firm's customer support operation, a generative AI assistant increased the number of issues resolved per hour by about 14%, with novice agents gaining the most. Quality did not decline, and complaints fell. This is not laboratory data; it comes from more than five thousand agents. The gap narrowed because the assistant captured the tacit knowledge of experienced agents and spread it across the team. This is what “experience” looks like when tools are used well.

Software development shows a similar pattern with clear-cut results. In a controlled task, developers using an AI coding assistant finished 55% faster than those working without it. They also reported greater satisfaction and less mental strain. The critical point is not the speed itself but what it allows: quicker feedback for beginners, more iterations before deadlines, and more time for review and testing. With focused practice and the right tools, six months of training may now produce output that once took years of routine experience. This does not eliminate the need for mastery; it moves mastery toward identifying problems, selecting data, securing systems, integrating components, and handling hard edge cases.

More broadly, estimates suggest that current tools could automate activities that absorb 60% to 70% of employees’ time. The real impact, however, depends on how processes are redesigned, not only on how well the tools perform. That is why aggregate labor statistics move slowly even as tasks become more automatable. Companies that restructure workflows around verification, human oversight, and data management will capture the benefits; those that simply bolt tools onto outdated processes will see little gain. Education programs that take the first approach, training students to design and audit model-assisted workflows, will produce graduates who add value in weeks rather than years.

Degrees, portfolios, and proofs of judgment

If throughput is the new measure of expertise, we need clear evidence of judgment. Employers cannot infer judgment from tenure alone; they need to see it in the work itself. This has three implications for education. First, assessments should shift from “write it from scratch” to “produce, verify, and explain.” Students should be required to draft their work with approved tools and then show their prompts, checks, and corrections, ideally against a brief modeled on the roles they want to pursue. This approach is not lenient; it mirrors how work actually gets done and makes misuse easier to detect. UNESCO’s guidance on generative AI in education points the same way: a human-centered approach, transparency about tool use, and explicit norms for attribution.

Second, credentials should verify proficiency with the tools actually in use. The World Economic Forum reports that employers expect 44% of workers' core skills to be disrupted by 2027, and that data reasoning and AI literacy are growing in importance. Yet OECD reviews find that most training programs still favor specialized tracks over broad AI literacy. Institutions can close this gap by embedding micro-credentials in degree programs and by offering short, stackable modules for working adults. Each module should rest on concrete evidence: one live brief, one reproducible workflow, and one reflection on model limitations and biases. The message to employers is not merely that a student “used AI,” but that the student can reason about it, evaluate its output, and meet deadlines with it.

Third, portfolios should replace vague claims of experience. Online labor markets show why. After the 2022 wave of models, demand shifted toward freelancers who could specify where and how they used AI in their workflows and how they verified the results. In 2023, postings seeking generative AI skills surged, even in non-technical fields such as design, marketing, and translation, and more of those contracts carried higher value. Students can learn this kind of signaling early: each portfolio item should state what the tool did, what the person did, how quality was assured, and what measurable gain resulted. That is the language modern teams understand.

Curricula should also require a brief method note. When students report a gain (e.g., “time cut by 40%”), they should explain how they measured it: the number of drafts, tokens processed, issues resolved per hour, or review time saved. This clarity helps hiring managers and makes the result easier to replicate in internships. It also builds the crucial habit of treating model outputs as hypotheses to verify rather than facts to accept uncritically. That habit is the essence of applied judgment.
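As a minimal sketch of such a method note, the Python below computes the two kinds of gain mentioned above: the percentage of time saved on the same deliverable, and the change in items completed per hour. The names `Measurement`, `percent_time_saved`, and `percent_throughput_gain` are hypothetical, and the sample numbers are invented for illustration rather than drawn from any study.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    """One timed run of a task: items completed (issues, drafts, reviews) over hours worked."""
    items_completed: int
    hours: float

    def throughput(self) -> float:
        """Items completed per hour."""
        if self.hours <= 0:
            raise ValueError("hours must be positive")
        return self.items_completed / self.hours


def percent_time_saved(baseline_hours: float, assisted_hours: float) -> float:
    """Percentage reduction in time for the same deliverable (negative means slower)."""
    if baseline_hours <= 0:
        raise ValueError("baseline_hours must be positive")
    return 100.0 * (baseline_hours - assisted_hours) / baseline_hours


def percent_throughput_gain(baseline: Measurement, assisted: Measurement) -> float:
    """Percentage change in items per hour from the baseline run to the assisted run."""
    base = baseline.throughput()
    if base == 0:
        raise ValueError("baseline completed no items; a relative gain is undefined")
    return 100.0 * (assisted.throughput() / base - 1.0)


if __name__ == "__main__":
    # Invented numbers for illustration only.
    print(f"time cut: {percent_time_saved(10.0, 6.0):.1f}%")  # time cut: 40.0%
    before = Measurement(items_completed=20, hours=4.0)
    after = Measurement(items_completed=23, hours=4.0)
    print(f"throughput gain: {percent_throughput_gain(before, after):.1f}%")  # throughput gain: 15.0%
```

Reporting the raw baseline and assisted measurements next to the percentage lets a reader check the arithmetic and judge whether the two runs were actually comparable.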

Guardrails for a lean, LLM-equipped labor market

Leaner teams of tool-equipped workers can raise productivity, but the transition carries risks that education and policy must address. Exposure is not uniform. ILO estimates place only a small share of jobs in the highest exposure tier, but women are overrepresented in the clerical and administrative roles where exposure is greatest, particularly in high-income countries. This creates a dual responsibility: build targeted reskilling pathways for those roles and reform hiring to value adjacent strengths. If an assistant can handle scheduling and email drafting, the human role should shift toward service recovery, exception handling, and team coordination. Programs should explicitly train and certify those skills.

The freelance market also offers a cautionary tale. Research shows uneven effects across categories of work: some fields lose routine jobs, while others gain higher-value opportunities tied to AI workflows. Meanwhile, the data-labeling and micro-task work that underpins AI systems is known for low pay and scant protections. Education can help by teaching students to price, scope, and contract AI-related work, and by foregrounding the ethics of data work. Policy can help by setting minimum standards for transparency and pay on platforms that supply training data, and by tying public procurement to fair-work assessments of AI vendors. These measures keep gains from concentrating in the model supply chain while the risks shift to workers no one sees.

Hiring practices need to change as well. Job advertisements that use “x years” of experience as a blunt filter should instead ask candidates to demonstrate throughput and judgment through a timed work sample: a specific brief, a list of allowed tools, a fixed dataset, and an error budget, that is, a stated tolerance for mistakes. This is not a gimmick; it gives a better indication of how someone will perform in AI-assisted workflows. For fairness, the brief and data should be shared in advance, along with clear rules on permitted tools, and candidates should submit a log of their prompts and checks. Education providers can replicate this format in capstone projects and share the outcomes with employer partners. Over time, a shared format will make hiring easier for everyone involved.
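One way to make such a work sample checkable is to score it against the brief's explicit limits. The sketch below assumes a brief that states a time limit and an error budget expressed as the maximum share of items with errors, and it treats an empty prompt-and-check log as a failed submission. All class and field names are hypothetical, and a real rubric would also weigh the quality of the candidate's reasoning, which no threshold captures.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Brief:
    """The published rules for a timed work sample (hypothetical structure)."""
    time_limit_minutes: float
    error_budget: float  # maximum allowed share of items with errors, e.g. 0.05 = 5%


@dataclass
class WorkSample:
    """A candidate's submission against a Brief (hypothetical structure)."""
    minutes_used: float
    items_total: int
    items_with_errors: int  # found by checking outputs against the fixed dataset
    prompt_log: List[str] = field(default_factory=list)  # prompts and checks the candidate recorded


def evaluate(sample: WorkSample, brief: Brief) -> dict:
    """Check a submission against the time limit, the error budget, and the log requirement."""
    if sample.items_total <= 0:
        raise ValueError("items_total must be positive")
    error_rate = sample.items_with_errors / sample.items_total
    within_time = sample.minutes_used <= brief.time_limit_minutes
    within_budget = error_rate <= brief.error_budget
    has_log = len(sample.prompt_log) > 0
    return {
        "error_rate": error_rate,
        "within_time": within_time,
        "within_budget": within_budget,
        "has_log": has_log,
        "passed": within_time and within_budget and has_log,
    }


if __name__ == "__main__":
    brief = Brief(time_limit_minutes=90, error_budget=0.05)
    sample = WorkSample(minutes_used=85, items_total=40, items_with_errors=1,
                        prompt_log=["drafted summary with assistant", "checked totals against dataset"])
    print(evaluate(sample, brief))
```

Returning each check separately, rather than a single pass/fail flag, lets a panel see why a submission failed, which matters when the same brief doubles as a capstone exercise.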

Finally, understanding process matters as much as knowing the tools. Students and staff should learn to break tasks into stages the model can assist with (drafting, summarizing, pattern-finding), stages that must stay human (framing, ethical review, acceptance), and hybrid stages (verification and monitoring). They should also learn a basic toolkit: retrieving from trusted sources, maintaining a version-controlled prompt library, and running evaluations on standard tasks. None of this requires cutting-edge research; it requires diligence, good documentation, and routine measurement.
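A version-controlled prompt library can be as simple as an append-only history per prompt. The sketch below is a hypothetical, in-memory illustration: each saved revision gets a number, a change note, and a short SHA-256 fingerprint that a student could cite in a portfolio item or a work-sample log. In practice a team might just keep prompts as text files in an ordinary version-control repository; this code only shows the bookkeeping involved, and `PromptLibrary` and its methods are invented names.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class PromptVersion:
    """One saved revision of a named prompt."""
    version: int  # 1-based revision number
    text: str
    note: str     # why the prompt changed, e.g. "added a source-citation check"
    digest: str   # short SHA-256 fingerprint to cite in a log or portfolio


class PromptLibrary:
    """Append-only, in-memory store of named prompts (a hypothetical illustration)."""

    def __init__(self) -> None:
        self._history: Dict[str, List[PromptVersion]] = {}

    def save(self, name: str, text: str, note: str) -> PromptVersion:
        """Record a new revision, unless the text is identical to the latest one."""
        revisions = self._history.setdefault(name, [])
        if revisions and revisions[-1].text == text:
            return revisions[-1]  # wording unchanged, so no new version
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
        entry = PromptVersion(len(revisions) + 1, text, note, digest)
        revisions.append(entry)
        return entry

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        """Return the latest revision, or a specific 1-based version."""
        revisions = self._history[name]
        if version is None:
            return revisions[-1]
        if not 1 <= version <= len(revisions):
            raise KeyError(f"{name!r} has no version {version}")
        return revisions[version - 1]

    def log(self, name: str) -> List[str]:
        """One line per revision: version, fingerprint, and change note."""
        return [f"v{p.version} {p.digest} {p.note}" for p in self._history.get(name, [])]


if __name__ == "__main__":
    lib = PromptLibrary()
    lib.save("summarize-ticket", "Summarize the ticket in three bullets.", "initial draft")
    lib.save("summarize-ticket",
             "Summarize the ticket in three bullets. Quote the customer's exact request.",
             "reduce paraphrase errors")
    for line in lib.log("summarize-ticket"):
        print(line)
```

Making the store append-only means an earlier result can always be traced to the exact prompt wording that produced it, which is the point of version control here.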

Measure what matters—and teach it

The striking statistic stands: activities that absorb 60% to 70% of work time are technically automatable with today’s tools. The real lesson, however, lies in how work is changing. Tasks are shifting faster than job titles, and teams are being restructured around verification and exception handling. Experience, as a measure of value, now rests less on years worked and more on the ability to produce quality results promptly. Education must respond deliberately. Programs should let students use the tools, require them to show how they verify results, and certify what they can achieve under time constraints. Employers should look for evidence of capability rather than years of service. Policymakers should support transitions, especially in high-exposure occupations, and raise standards across the data supply chain. Taken together, these steps can turn a noisy period of change into steady, cumulative gains, producing graduates who can do in a day what once took a week and who can explain why their work is trustworthy. Aligning human talent with the realities of model-aided production comes down to knowing what to measure and teaching accordingly.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Brynjolfsson, E., Li, D., & Raymond, L. (2023). Generative AI at Work (NBER Working Paper No. 31161). National Bureau of Economic Research.

Brynjolfsson, E., Li, D., & Raymond, L. (2025). Generative AI at Work. Quarterly Journal of Economics, 140(2), 889–931.

GitHub. (2022, Sept. 7). Quantifying GitHub Copilot’s impact on developer productivity and happiness.

International Labour Organization. (2023). Generative AI and Jobs: A global analysis of potential effects on job quantity and quality (Working Paper 96).

International Labour Organization. (2025). Generative AI and Jobs: A Refined Global Index of Occupational Exposure (Working Paper 140).

McKinsey & Company. (2023). The economic potential of generative AI: The next productivity frontier.

OECD. (2023). OECD Employment Outlook 2023. Paris: OECD Publishing.

OECD. (2024). Readying Adult Learners for Innovation: Reskilling and Upskilling in Higher Education. Paris: OECD Publishing.

OECD. (2025). Bridging the AI Skills Gap. Paris: OECD Publishing.

Oxford Internet Institute. (2025, Jan. 29). The Winners and Losers of Generative AI in the Freelance Job Market.

Reuters. (2025, May 20). AI poses a bigger threat to women’s work than men’s, says ILO report.

UNESCO. (2023). Guidance for Generative AI in Education and Research. Paris: UNESCO.

Upwork. (2023, Aug. 22). Top 10 Generative AI-related searches and hires on Upwork.

Upwork Research Institute. (2024, Feb. 13). How Generative AI Adds Value to the Future of Work.

Upwork Research Institute. (2024, Dec. 11). Redesigning Work Through AI.

World Economic Forum. (2023). The Future of Jobs Report 2023. Geneva: WEF.

The Guardian. (2025, Oct.). US jobs market yet to be seriously disrupted by AI [Coverage of research by the Yale Budget Lab and the Brookings Institution].