Say 'Ah' to Data: AI Tongue Diagnosis as the New First Look

AI tongue diagnosis turns a centuries-old check into fast, cheap triage. With phones and clear protocols, it flags likely risks for confirmatory tests. Deploy in primary care with consent, calibration, and monitoring to scale safely.

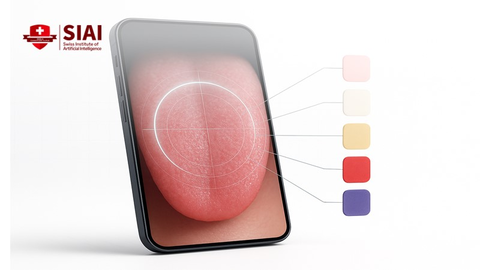

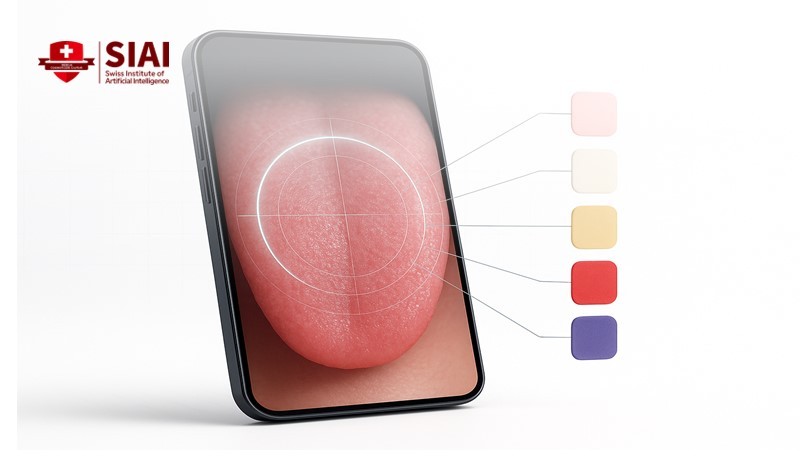

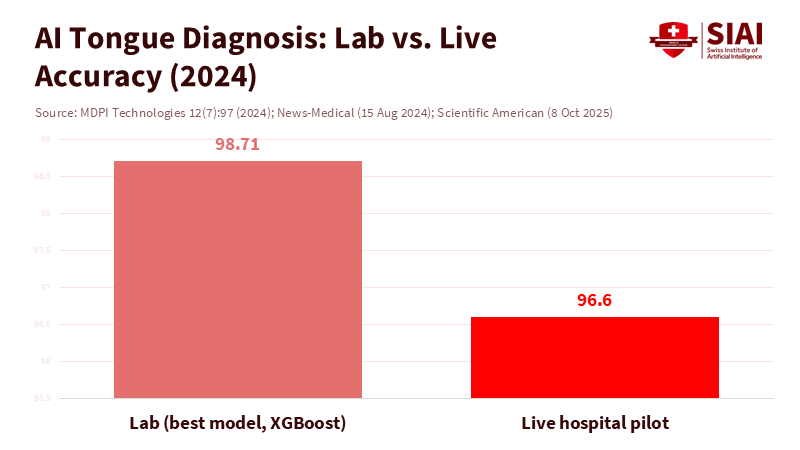

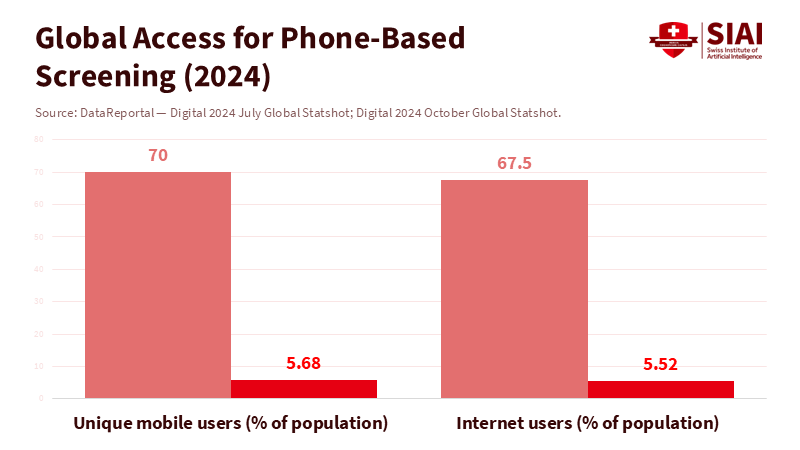

The most notable number in clinical triage this year is not about MRI magnets or gene panels. It is 96.6. That was the real-time test accuracy, in percent, of a simple camera-based system that reads tongue color and flags related conditions in hospital patients. The model, trained on thousands of images, correctly classified 58 of 60 tongue photos taken with a basic USB webcam in a live setting. A pink tongue indicated "likely healthy." Yellow, white, red, violet, or blue pointed to different risk cues such as anemia, diabetes, infection, or vascular stress. The same research reported lab classification accuracy above 98 percent on separate tongue color data, suggesting that the signal is strong and consistent. Meanwhile, roughly seven in ten people now carry a mobile phone, and many of those phones have high-resolution cameras. Taken together, these facts make AI tongue diagnosis more than a novelty: it is a scalable first look that can improve triage inexpensively, quickly, and broadly.

AI Tongue Diagnosis, from ritual to rapid triage

Doctors have examined tongues for centuries. The logic is straightforward. Blood flow, oxygenation, inflammation, and fluid balance all visibly change the tongue's color and coating. Deep learning now turns that old signal into a repeatable, low-cost screening tool. The recent tongue-color system was trained on 5,260 images across seven color classes. It used standard color spaces (RGB, HSV, LAB, and others) to minimize lighting bias. In live tests with hospital patients who had diabetes, asthma, anemia, fungal disease, and COVID-19, the tool ran on a laptop with a consumer webcam and produced a real-time assessment. There were no needles, no lab waiting, and no clinician time beyond capturing the image. This is the role AI tongue diagnosis should serve: not a final verdict but a reliable initial assessment that identifies who needs a blood test, a pulse oximeter, or a same-day consultation. The evidence base is also expanding beyond color. Models that analyze tongue texture and coating can distinguish between benign and concerning oral findings.
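To see why more than one color space helps with lighting, here is a minimal sketch using Python's standard `colorsys` module. It is an illustration only, not the cited system's pipeline: the pixel values and the helper names `mean_rgb` and `rgb_to_hsv_degrees` are hypothetical, and the "dim" samples are simply the "bright" ones at half intensity.

```python
import colorsys

def mean_rgb(pixels):
    """Average a list of 8-bit (r, g, b) tuples channel by channel."""
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))

def rgb_to_hsv_degrees(r, g, b):
    """Convert 8-bit RGB to (hue in degrees, saturation 0-1, value 0-1)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0, s, v

# Hypothetical samples from the same tongue region under two light levels.
bright = [(230, 140, 150), (226, 136, 148), (234, 144, 152)]
dim = [(115, 70, 75), (113, 68, 74), (117, 72, 76)]

for label, pixels in (("bright", bright), ("dim", dim)):
    hue, sat, val = rgb_to_hsv_degrees(*mean_rgb(pixels))
    print(f"{label}: hue={hue:.1f} deg, saturation={sat:.2f}, value={val:.2f}")
```

In this toy case the raw RGB numbers halve when the light dims, while hue and saturation stay the same and only the value channel changes. Real lighting shifts are messier than a uniform dimming, which is one reason a capture protocol still matters.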

The appeal for health systems is real. New research suggests that tongue images carry insights beyond dentistry. Researchers report that tongue features can help screen for hepatic fibrosis risk. Others argue that these features improve models for coronary artery disease in hypertensive patients. This matters in clinics where time is limited and where each additional test or referral has costs. A phone camera combined with a quick capture protocol could serve as the "stethoscope of the mouth," flagging risks for non-specialists and community health workers. Importantly, the earliest uses should focus on conditions where color changes have known clinical connections, such as iron-deficiency anemia, severe infection, or decompensation, so that follow-up tests are clear and affordable. If we are transparent about what the model reads (color and surface features) and what it cannot determine (cause), AI tongue diagnosis becomes a focused triage layer rather than an exaggerated claim about "diagnosing everything."

Building curricula around AI tongue diagnosis

Education is the key. If doctors, nurses, and community health workers learn to use AI tongue diagnosis as an initial step, they can bring triage closer to patients. Teach the capture protocol: positioning, lighting, distance, and hygiene. Explain what a screen means and what it does not. A positive screen means "order a hemoglobin test," not "treat anemia." A negative screen means "move on," not "all clear." For medical and nursing schools, this can be a two-hour skills lab, not a new degree. Include a brief module on bias, image artifacts, and consent. Add a lab on how to interpret model outputs and when to escalate. The equipment is affordable and portable. A mid-range phone works. A color card helps. A privacy shield is essential. These are practical skills students can apply immediately in primary care, school health, or outreach clinics.
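A skills lab could show why the color card matters with a small worked example. The sketch below applies a simple per-channel gain correction (a white-balance style adjustment) using a gray patch of known color. It is a teaching simplification under stated assumptions, not the method of any cited study: the reference value, the observed readings, and the function names are all hypothetical.

```python
def channel_gains(observed_patch, reference_patch):
    """Per-channel gains that map the photographed card patch to its known color."""
    if any(c <= 0 for c in observed_patch):
        raise ValueError("observed patch channels must be positive")
    return tuple(ref / obs for ref, obs in zip(reference_patch, observed_patch))

def apply_gains(pixel, gains):
    """Scale each channel by its gain, rounding and clipping to the 8-bit range."""
    return tuple(min(255, max(0, round(c * g))) for c, g in zip(pixel, gains))

# Assumed values: the card's gray patch should read (200, 200, 200),
# but under warm indoor light the camera records it as (220, 200, 170).
REFERENCE_GRAY = (200, 200, 200)
observed_gray = (220, 200, 170)

gains = channel_gains(observed_gray, REFERENCE_GRAY)
tongue_pixel = (231, 140, 119)  # hypothetical reading from the same photo
corrected = apply_gains(tongue_pixel, gains)
print("corrected tongue pixel:", corrected)
```

The same tongue can look more orange under warm light; dividing out the cast measured on the card brings the reading back toward what a neutral light would show. A single gray patch only corrects a uniform color cast, so it complements good capture technique rather than replacing it.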

Administrators should think about distribution. There are over three million community health workers in at least 98 countries. Many already carry phones and follow checklists for prenatal care, vaccinations, and vital signs. Integrate AI tongue diagnosis into this workflow with a clear pathway to confirmatory testing. For instance, a community health worker could use their phone to capture a tongue image, which is then analyzed by the AI model. If the result is positive, the worker could be directed to perform a specific confirmatory test or refer the patient to a higher-level facility. Create a data loop so that models improve only with consented, de-identified images. Pair the tool with guidelines that direct positive screens toward the least expensive confirmatory test available locally. Use mobile dashboards to track false positives and false negatives by site, allowing for adjustments in training. This is prevention infrastructure, not a substitute for labs or exams. Its value lies in time: quick reassurance for most people and faster access to care for those at risk.
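The per-site tracking described above can be sketched in a few lines. This is a minimal illustration, assuming each record pairs a screen result with a confirmatory result; in practice negative screens are rarely confirmed, so false negatives would have to come from an audited sample. The site names, records, and function names are hypothetical.

```python
from collections import defaultdict

def tally_by_site(records):
    """Count screen outcomes per site.

    records: iterable of (site, screen_positive, confirmed_positive) tuples.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0, "tn": 0})
    for site, screened, confirmed in records:
        if screened and confirmed:
            key = "tp"
        elif screened and not confirmed:
            key = "fp"
        elif not screened and confirmed:
            key = "fn"
        else:
            key = "tn"
        counts[site][key] += 1
    return dict(counts)

def error_rates(c):
    """Return (false positive rate, false negative rate); None when undefined."""
    negatives = c["fp"] + c["tn"]
    positives = c["fn"] + c["tp"]
    fpr = c["fp"] / negatives if negatives else None
    fnr = c["fn"] / positives if positives else None
    return fpr, fnr

# Hypothetical audited records from two sites.
records = [
    ("clinic_a", True, True), ("clinic_a", True, False),
    ("clinic_a", False, False), ("clinic_a", False, True),
    ("clinic_b", True, True), ("clinic_b", False, False),
    ("clinic_b", False, False),
]

site_counts = tally_by_site(records)
for site, c in sorted(site_counts.items()):
    print(site, c, error_rates(c))
```

A site whose false positive rate drifts upward may need retraining on capture technique or a lighting check, which is the kind of adjustment a dashboard is meant to prompt.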

Proof, bias, and boundaries: making AI tongue diagnosis safe

Skeptics raise concerns about overclaiming. They rightly question sample sizes, lighting conditions, skin tone, and disease prevalence. The live test that achieved 96.6 percent accuracy used 60 patient images taken under controlled conditions. While promising, it is still a small sample. The training used more than five thousand tongue images to teach the model color classes and their associated risks. The strong lab accuracy indicates a clear signal, yet deployment must account for drift, glare, and camera differences. The solution is not rhetoric. It is protocol. Standardize image capture. Record ambient light and device type, and calibrate color against a reference patch. Lock down the inference app, log outputs, and connect every positive screen to a confirmatory test code. This is how to measure true yield in real-world settings.
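The small-sample caveat can be made concrete. The sketch below computes a 95 percent Wilson score interval for 58 correct out of 60, the live-test figures quoted above. The choice of the Wilson interval is ours for illustration; the cited study is not stated to have reported one.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Wilson score confidence interval for a binomial proportion."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width

low, high = wilson_interval(58, 60)
print(f"observed accuracy: {58 / 60:.3f}")
print(f"95% Wilson interval: {low:.3f} to {high:.3f}")
```

With only 60 images, the data are compatible with a true accuracy anywhere from roughly 89 to 99 percent under these assumptions. That range is wide enough to justify larger, multi-site evaluation before relying on the headline figure.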

AI tongue diagnosis also has real limitations. The tongue sits within a mouth whose lips and teeth vary across individuals and cultures. Some coatings reflect diet or smoking, and some tongues are stained. Models may focus on the context instead of the tongue itself. Recent studies have tackled this with segmentation that isolates the mid-tongue region while removing the facial background. Others broaden feature sets to include texture and coating depth. The existing literature already connects tongue features to liver fibrosis risk and cardiac status in hypertensive populations. Still, the appropriate boundary should be both narrow and practical. Use AI tongue diagnosis to guide individuals to basic tests where the prevalence is high and treatment is affordable. Avoid using it to make definitive diagnoses without confirming evidence. This approach is safer, fairer, and provides better value.
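The advice to screen where prevalence is high follows from how positive predictive value works. The sketch below uses Bayes' rule with assumed sensitivity and specificity of 0.90 each; those figures and the two prevalence levels are hypothetical and are not taken from any cited study.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Share of positive screens that are true positives (Bayes' rule)."""
    for name, x in (("sensitivity", sensitivity),
                    ("specificity", specificity),
                    ("prevalence", prevalence)):
        if not 0.0 <= x <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    if true_pos + false_pos == 0:
        raise ValueError("no positive screens are possible with these inputs")
    return true_pos / (true_pos + false_pos)

# Assumed screen performance, for illustration only.
SENS, SPEC = 0.90, 0.90

ppv_high = positive_predictive_value(SENS, SPEC, prevalence=0.30)
ppv_low = positive_predictive_value(SENS, SPEC, prevalence=0.02)
print(f"PPV at 30% prevalence: {ppv_high:.2f}")
print(f"PPV at 2% prevalence:  {ppv_low:.2f}")
```

With the same screen, most positives are real when the condition is common, while most are false alarms when it is rare. That is why the same tool can be good value in one population and a source of wasted confirmatory tests in another.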

A practical pilot for systems under strain

Primary care faces pressure. Access is limited in many countries, and staff shortages slow down the initial decision of who needs a test and who can wait. A low-cost phone-based screen can help. Start with the risk of iron-deficiency anemia in adolescents and pregnant women. The capture is safe, the follow-up test is inexpensive, and the intervention is straightforward. A second pilot could focus on diabetes risk, where yellow-tinged tongues have been linked to oral changes in high-glucose conditions. A third could monitor recovery from respiratory infections, where changes in tongue redness may indicate severity. Each pilot should include a consent flow, a simple capture tutorial, and a strict guideline: no treatment decisions without a confirmatory test. All images should be encrypted on the device and deleted after upload. All model updates should undergo ethics review. AI tongue diagnosis then acts as an entry point, not a substitute for clinical judgment.

Scale is attainable. About 70 percent of the global population uses a mobile phone, and two-thirds use the internet. Even in low-resource settings, basic cameras are standard. With proper training, community teams can capture images during home visits and school screenings. Positive screens would trigger a lab voucher or a clinic appointment. Negative screens, in contrast, would reassure families and prevent unnecessary trips. The math is simple. If screening avoids one unnecessary visit per worker each day across millions of workers, the strain on the system decreases quickly. The benefits are greatest in areas where travel costs are high and labs are far away. These areas also see the most important ethical gains, as triage times decrease for individuals who often wait the longest.
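As a back-of-envelope check on that arithmetic, the sketch below multiplies it out. The worker count is the article's "over three million" figure and the one-visit-per-day rate is the article's own hypothetical; the 250 working days per year is an added assumption.

```python
def visits_avoided(workers, per_worker_per_day, working_days):
    """Total unnecessary visits avoided over a period."""
    return workers * per_worker_per_day * working_days

WORKERS = 3_000_000          # article figure: over three million workers
PER_WORKER_PER_DAY = 1       # the article's hypothetical
WORKING_DAYS_PER_YEAR = 250  # assumption, not from the article

daily = visits_avoided(WORKERS, PER_WORKER_PER_DAY, 1)
yearly = visits_avoided(WORKERS, PER_WORKER_PER_DAY, WORKING_DAYS_PER_YEAR)
print(f"avoided per day:  {daily:,}")
print(f"avoided per year: {yearly:,}")
```

The result is only as good as the one-visit-per-day premise, which a pilot would need to measure rather than assume.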

The impressive 96.6 percent live test accuracy for a camera that reads the tongue does not claim to replace doctors. It does something better. It offers systems a cheap, fast first look that reaches nearly everyone with a phone. The science is clear. Color and surface changes convey information about oxygenation, infection, and metabolic stress. The engineering is straightforward. It requires a capture protocol, a lightweight model, and a clear path to confirmatory tests. The policy is practical. Train students and community workers, and establish standards for consent, storage, and escalation. Start with anemia, diabetes risk, and severe infections, where follow-up tests are clear and the stakes are high. If we regard AI tongue diagnosis as triage rather than a definitive verdict, we can free up time for clinicians, reduce costs for families, and get care to people more quickly. The mouth has always told a story. It's time to listen with today's tools.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Abdulghafor Khudhaer Abdullah, Saleem Lateef Mohammed, Ali Al-Naji, & Mohammed Sameer Alsabah. (2023). Tongue Color Analysis and Diseases Detection Based on a Computer Vision System. Journal of Techniques, 5(1), 22–37. https://doi.org/10.51173/jt.v5i1.868.

Baybars, S. C., et al. (2025). Artificial Intelligence in Oral Diagnosis: Detecting Coated Tongue. Diagnostics. PMC12025637.

Duan, M., et al. (2024). Feasibility of tongue image detection for coronary artery disease in hypertensive populations. Frontiers in Cardiovascular Medicine, 11, 1384977.

International Telecommunication Union (2024). Facts and Figures 2024. Retrieved Nov. 27, 2024.

Lu, X., et al. (2024). Exploring hepatic fibrosis screening via deep learning analysis of tongue images. Frontiers in Medicine. PMC11384071.

Hassoon, A. R., et al. (2024). Tongue Disease Prediction Based on Machine Learning Algorithms. Technologies, 12(7), 97. https://doi.org/10.3390/technologies12070097.

Milbank Memorial Fund (2024). The Health of U.S. Primary Care 2024 Scorecard: No One Can See You Now.

Scientific American (2025, Oct. 8). AI Reads Your Tongue Color to Reveal Hidden Diseases.

UNISA (University of South Australia) (2024, Aug. 14). Say 'aah' and get a diagnosis on the spot: is this the future of health? Media release.

We Are Social / DataReportal (2024, Jul. 31). Digital 2024 Global Statshot.