Algorithmic Decoupling Will Change What U.S. Students See Online

U.S. spin-off narrows feeds. Students lose global views. Mandate diversity metrics, open datasets.

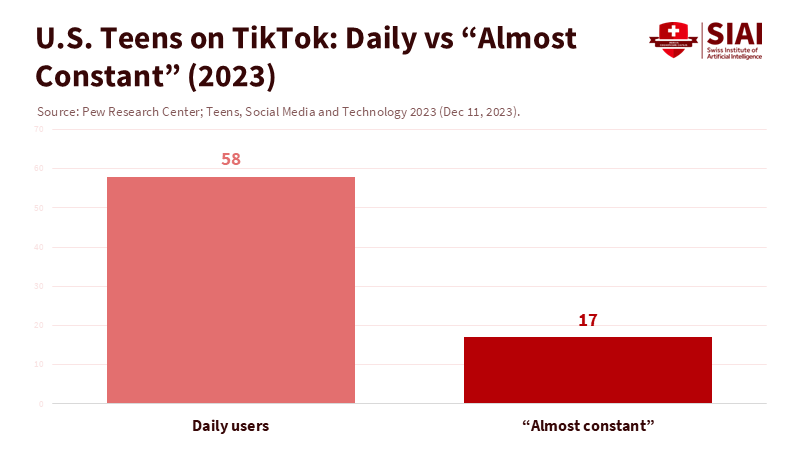

TikTok reaches millions of Americans every day, and a significant number are students. In 2023, Pew found that 58% of U.S. teens use TikTok daily, with 17% saying they use it almost constantly. Now consider another fact: in January 2025, the U.S. Supreme Court upheld a law that forces either a complete divestment of TikTok or the app's removal from U.S. app stores. Together, these points highlight a reality that education leaders must acknowledge. If divestment happens and a new U.S. owner cuts off data flows to the broader network, the recommendation engine will change. This change, called algorithmic decoupling, will shape which ideas, role models, dialects, and histories reach our classrooms and campuses. For U.S. education, this is not just a tech issue. It involves curriculum, student well-being, and civic literacy simultaneously. The stakes are high and urgent.

Algorithmic Decoupling Is a Policy Choice, Not a Technical Accident

The public discussion frames TikTok as a national security issue, which is accurate. However, for schools and universities, the more pressing concern is how a sale and enforced localization could create a "U.S.-only" training loop. The Protecting Americans from Foreign Adversary Controlled Applications Act is clear: either divestment or disappearance. The Supreme Court's January 17, 2025, decision left that mandate in force. If the platform remains in the U.S. under new ownership, access controls and data pathways will likely be rebuilt to limit cross-border flows. Those flows are crucial for modern recommendation engines. When they decrease, the model's view of the world shrinks. The feed would shift from a global "For You" to a domestically focused "For Us," limiting the model's exposure to foreign creators, languages, and civic viewpoints. The outcome would not be a conspiracy but a result of the inputs chosen by policy.

In education, we have seen a similar narrowing before. When textbook markets consolidate and select a narrow range of content, diversity of perspectives decreases. The same pattern occurs with algorithmic systems, only more rapidly. Communication scholars warn that platform nationalism can limit cultural exchange and oversimplify complexity. Policy experts advocate for data to flow freely and with trust, as cross-border data enhances quality and resilience. If a new U.S. operator restricts training data, the machine's sense of variety and discovery will suffer. In real terms, students will encounter fewer external voices, fewer regional accents, and fewer perspectives on how peers around the world interpret the same events. The model will still be effective, but it will be more insular. This is the essence of algorithmic decoupling, and it is a choice with significant consequences for education.

The Education Risk: Narrower Feeds, Hotter Content, Colder Horizons

We do not need to speculate about the effects on feeds. Years of research on recommender systems have shown a trade-off between accuracy and diversity. Left to its own devices, a system that prioritizes short-term engagement tends to repeat successful content and neglect fresh or diverse material. Audits of TikTok during the 2024 U.S. election cycle found that toxic, partisan posts received more engagement despite moderation. This is the baseline in a global training environment. Under algorithmic decoupling, the training pool tightens and the signals that "work" become louder. For classrooms, this raises two immediate issues. First, students will see fewer credible non-U.S. sources in history, science, and current events. Second, the domestic incentive structure will favor content that aligns with local outrage cycles, as the model will lack cross-cultural context to soften or redirect the feedback loop. This combination leads to hotter content and colder worldviews.
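The accuracy-diversity trade-off described above can be made concrete with a small re-ranking sketch. It is illustrative only: the `Clip` fields, the region labels, the engagement scores, and the `diversity_weight` parameter are all hypothetical, and nothing here describes how TikTok or any successor actually ranks content. The idea is a greedy re-ranker that subtracts a penalty each time a creator region is repeated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    clip_id: str
    region: str        # hypothetical creator-region label, e.g. "US", "KE"
    engagement: float  # hypothetical predicted engagement in [0, 1]


def rerank(candidates, k, diversity_weight):
    """Greedy re-ranking: each pick maximizes predicted engagement
    minus a penalty for every clip already chosen from the same region."""
    selected = []
    remaining = list(candidates)
    region_counts = {}
    while remaining and len(selected) < k:
        def adjusted(clip):
            repeats = region_counts.get(clip.region, 0)
            return clip.engagement - diversity_weight * repeats

        best = max(remaining, key=adjusted)
        selected.append(best)
        remaining.remove(best)
        region_counts[best.region] = region_counts.get(best.region, 0) + 1
    return selected


# Made-up candidate pool: domestic clips score slightly higher on engagement.
candidates = [
    Clip("a", "US", 0.95),
    Clip("b", "US", 0.93),
    Clip("c", "US", 0.90),
    Clip("d", "KE", 0.80),
    Clip("e", "JP", 0.78),
    Clip("f", "BR", 0.60),
]

engagement_only = rerank(candidates, k=3, diversity_weight=0.0)
with_diversity = rerank(candidates, k=3, diversity_weight=0.2)
print([c.region for c in engagement_only])
print([c.region for c in with_diversity])
```

With a weight of 0.0 the top three slots all go to U.S. clips; with a weight of 0.2 the same pool yields one U.S., one Kenyan, and one Japanese clip at a modest cost in predicted engagement. The weight is the policy lever: it is a design choice, not a property of the data.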

The potential harm to student well-being is a significant concern under algorithmic decoupling. Extensive studies have linked certain social media behaviors to body image issues and eating disorders. TikTok, with its rapid, short-form content, is particularly impactful for teens. If algorithmic decoupling leaves fewer countervailing global norms in the feed, such as exposure to healthier food habits or different sports aesthetics, the content may increasingly favor whatever domestic micro-trend maximizes watch time. The same logic applies to study habits and educational myths. If the U.S. model showcases fewer external examples of apprenticeship paths, vocational success, or low-pressure gap years, it restricts the futures available to students who scroll for what's considered 'normal.' Educators cannot shift this responsibility to the unseen hand of engagement. That hand will optimize without promoting diversity.

Algorithmic Decoupling Can Be Managed—If We Design for Diversity

This is not a call to do nothing. It is a governance plan. Begin with this truth: recommendation diversity isn't magic; it is a design goal that can be measured and improved. Computer science research from 2024 to 2025 shows clear methods—from pre-processing debiasing to multi-objective and adversarial training—that can increase diversity without compromising accuracy. News and social platforms already track metrics like novelty, coverage, and serendipity. A domestically owned app can do the same, even if data flows are narrow. The governance solution is to incorporate these diversity metrics into the agreement: publish them, audit them, and connect them to design standards suitable for minors. This provides educators with a measure and ensures students receive more than just a promise. It offers them a measurable guarantee that the feed will not simply reflect last week's outrage.
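As a rough illustration of what "publish them, audit them" could mean in practice, the sketch below computes three simple diversity measures over feed samples: catalog coverage, novelty as average self-information, and Shannon entropy over a label such as creator region or language. The function names and the input format are assumptions made for this example, not any platform's reporting standard. Serendipity is left out because it requires a baseline model of what a user would have expected to see.

```python
import math
from collections import Counter


def catalog_coverage(feeds, catalog_size):
    """Share of the catalog that appears in at least one sampled feed."""
    shown = {item for feed in feeds for item in feed}
    return len(shown) / catalog_size


def mean_novelty(feed, popularity):
    """Average self-information, -log2(p), of the items in one feed.
    `popularity` maps item -> fraction of users who have seen it (0 < p <= 1);
    rarer items contribute higher novelty."""
    return sum(-math.log2(popularity[item]) for item in feed) / len(feed)


def label_entropy(labels):
    """Shannon entropy in bits over labels (e.g. region or language) in a feed.
    0.0 means every clip shares one label; higher means a more even mix."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Hypothetical samples for illustration only.
print(catalog_coverage([["a", "b"], ["b", "c"]], catalog_size=6))
print(mean_novelty(["a", "b"], {"a": 0.5, "b": 0.25}))
print(label_entropy(["US", "US", "US", "US"]))
print(label_entropy(["US", "KE", "JP", "BR"]))
```

A feed drawn entirely from one region has an entropy of 0 bits, while an even mix of four regions has 2 bits. Reporting numbers like these by age band over time would give auditors a trend line to check against whatever standard an agreement sets.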

Policy design should also focus on the inputs we can still share across borders. Data governance groups advocate for trusted methods of international transfer. Even after a sale, a U.S. operator can use federated or de-identified data sets that enhance cultural diversity without compromising personal information. Consider structured "data spaces" that feature non-personal, public-interest video collections—global science explanations, art performances, language-learning clips—all curated for quality and licensed for training. Consider universities partnering to provide open educational resources in various languages, ensuring the model remains broad rather than narrow. For K-12 and higher education, this leads to a direct benefit: a more diverse content feed for U.S. learners while keeping personal data local. We have the concepts; now we need to put them into practice through contracts and oversight.

What Educators, Administrators, and Policymakers Should Do Next on Algorithmic Decoupling

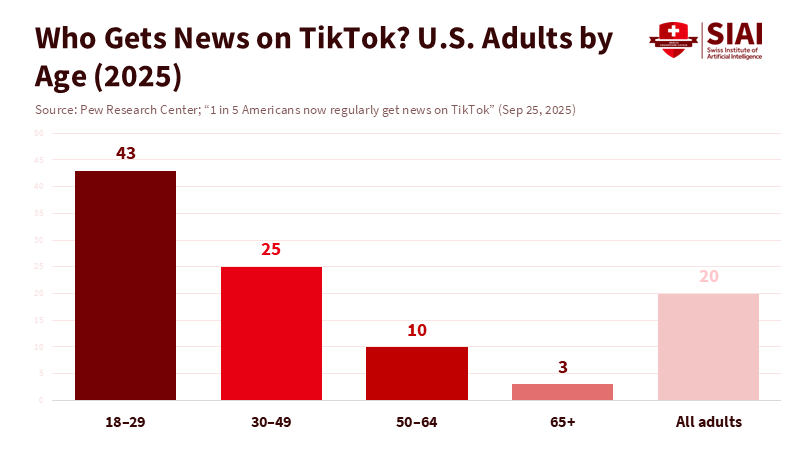

Education leaders cannot dictate foreign investment policy. However, they can influence student media environments. First, assume TikTok or any successor will remain a key venue for teens. Forecasts for 2024 to 2025 estimate U.S. usage at between 118 million and 170 million monthly active users. While the exact number varies, the scale is undeniable. The lesson is clear: do not prepare for a world without short-form feeds. Instead, plan for a world where feeds may be more U.S.-focused. This involves teaching skills for managing news consumption and sourcing information across cultures as essential media literacy, not as an add-on. It means assigning students to "break the bubble" by seeking non-U.S. accounts on relevant subjects and reflecting on how framing changes. It requires treating the feed as a text for analysis, not a weather system to endure.

Administrators should demand transparency from vendors and platforms regarding diversity metrics and age-appropriate defaults. If a platform relies primarily on domestic interactions, districts should ask how the model ensures exposure to external views and languages. They should also ask how it measures and mitigates toxicity, especially since audits of the 2024 election cycle indicated high engagement with harmful political content. Contracts can include requirements for annual third-party audits of the diversity of recommendations for student-linked accounts, similar to accessibility audits for websites. State education agencies can provide template clauses. Federal agencies can link grant eligibility to evidence of diversity-aware algorithm design. If we can measure graduation rates by subgroup, we can measure content diversity by topic. The technology is available; what's lacking is the intention to integrate this into regulations.

Policymakers should reject the false choice between national security and intellectual openness. The U.S. can demand domestic control while ensuring that the student experience does not reduce to a domestic monoculture. The Supreme Court's ruling confirmed the government's authority to compel a sale; it did not dictate how algorithms should be designed after that. Congress and regulators can establish guidelines to protect minors, publish metrics on diversity and toxicity, and support trusted datasets for training and evaluation across borders. They can also promote access for independent research. Methods such as sock-puppet audits, research APIs, and standardized auditing processes can assess how a feed changes after decoupling. Allow qualified researchers safe space to conduct those audits, report findings by age and region, and advise school systems on ways to adapt. Evidence, not slogans, should direct the next steps.
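To show how a sock-puppet audit might quantify a change after decoupling, the sketch below takes clips observed by test accounts before and after a cutover and reports the share of non-U.S. creators by age band. The tuple format, the "pre" and "post" period labels, and the age bands are hypothetical choices for this example. A real audit would also need careful sampling and controls for account behavior.

```python
from collections import defaultdict


def foreign_share_by_group(observations, home_region="US"):
    """Map (age_band, period) -> fraction of observed clips whose creator
    region differs from `home_region`.
    Each observation is a tuple (age_band, period, creator_region)."""
    totals = defaultdict(int)
    foreign = defaultdict(int)
    for age_band, period, region in observations:
        key = (age_band, period)
        totals[key] += 1
        if region != home_region:
            foreign[key] += 1
    return {key: foreign[key] / totals[key] for key in totals}


def change_after_decoupling(shares):
    """Per age band: post-period foreign share minus pre-period share.
    Bands missing either period are skipped."""
    bands = {band for band, _ in shares}
    return {
        band: shares[(band, "post")] - shares[(band, "pre")]
        for band in bands
        if (band, "pre") in shares and (band, "post") in shares
    }


# Made-up observations from hypothetical test accounts.
observations = [
    ("13-15", "pre", "US"), ("13-15", "pre", "KE"),
    ("13-15", "pre", "JP"), ("13-15", "pre", "US"),
    ("13-15", "post", "US"), ("13-15", "post", "US"),
    ("13-15", "post", "US"), ("13-15", "post", "KE"),
]

shares = foreign_share_by_group(observations)
print(change_after_decoupling(shares))
```

In this made-up sample the foreign share for the 13-15 band falls from 0.5 to 0.25, a change of -0.25. Reporting that difference by age and region is the kind of evidence school systems could use when adapting media literacy assignments.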

The content moderation aspect remains crucial. A new U.S. owner will still face the same engagement pressures. In practice, that means misinformation on health, cheating tactics, and political memes will continue to thrive. A domestic team may be quicker to remove content that targets protected groups, but it may also amplify local outrage and culture wars. Educators can advocate for "friction" tools that slow the spread of unverified claims across school-linked accounts. They can also push for a verified educator category that highlights authoritative classroom content in teen feeds during school hours. This approach does not concede control to platforms. Instead, it formalizes a public-interest commitment within them. It makes the feed feel more like a library, with clear selection and quality prioritized.

Finally, we need to address what a narrower short-form feed means for language and cultural exposure. If the U.S. model becomes more insular after divestment, exposure to foreign languages will decline unless we take steps to counter it. Districts can set targets for media literacy curricula: a minimum percentage of assignments that require non-U.S. sources, a rotation of creators from at least three regions, and regular reflection on how framing affects meaning. Colleges can collaborate with international universities to create short explainers in multiple languages and contribute them to open training repositories. These are not perfect solutions, but they are measures that push back against algorithmic decoupling. They recognize this shift as a structural issue and respond with structural solutions.

We began with two facts: heavy teen reliance on the app and a legal framework that necessitates divestment. The likely third fact—algorithmic decoupling—follows from these. If a domestically owned platform restricts its data, student feeds will lean towards internal voices, trends, and issues. This is not an argument for overturning the law. Instead, it advocates designing the next phase to ensure that American control does not lead to a narrow American vision. The U.S. can protect student data while also broadening student perspectives. It can mandate diversity-focused recommendation objectives, trusted cross-border training inputs, and independent audits that inform educators on changes. It can integrate teachers' roles into platform design rather than add them after a crisis. The alternative is passive drift. We can either keep the app and lose the world, or intentionally keep the world in the app. We should choose the latter and incorporate it into policy now.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

AP News. (2025, Jan.). Federal appeals court upholds law requiring sale or ban of TikTok in the U.S. Retrieved from AP News.

Business of Apps. (2025). TikTok revenue and usage statistics (2025). Retrieved from Business of Apps.

Feltman, R., Mwangi, F., & Sugiura, A. (2025). What TikTok's U.S. spin-off means for its algorithm and content moderation. Scientific American.

Harvard Kennedy School Misinformation Review. (2025, Aug.). Toxic politics and TikTok engagement in the 2024 U.S. election.

Holland & Knight. (2025, January 17). U.S. Supreme Court upholds TikTok sale-or-ban law. Client alert.

Lawfare. (2024, March 20). What happened to TikTok's Project Texas?

OECD. (2023). Moving forward on data free flow with trust (DFFT). Paris: OECD Publishing.

OECD. (n.d.). Cross-border data flows. Policy overview.

Pew Research Center. (2023, December 11). Teens, social media and technology 2023.

Reuters. (2025, September 27). ByteDance expected to play a big role in new U.S. TikTok, sources say.

Supreme Court of the United States. (2025, January 17). 24-656 TikTok Inc. v. Garland (per curiam order).

The Guardian. (2024, June 20). ByteDance alleges U.S.'s "singling out of TikTok" is unconstitutional.

Yuan, Y., et al. (2024). Enhancing recommendation diversity and novelty with bi-level optimization. Electronics, 13(19), 3841.

Zhao, Y., et al. (2025). Fairness and diversity in recommender systems: A survey. ACM Computing Surveys.