The Urgent Need to Address AI Security: Why AI Agents, Not LLMs, Are the Real Risk Surface

Published

Modified

The real risk isn’t the LLM’s words but the agent’s actions with your credentials Malicious images, pages, or files can hijack agents and trigger privileged workflows Treat agents as superusers: least privilege, gated tools, full logs, and human checks

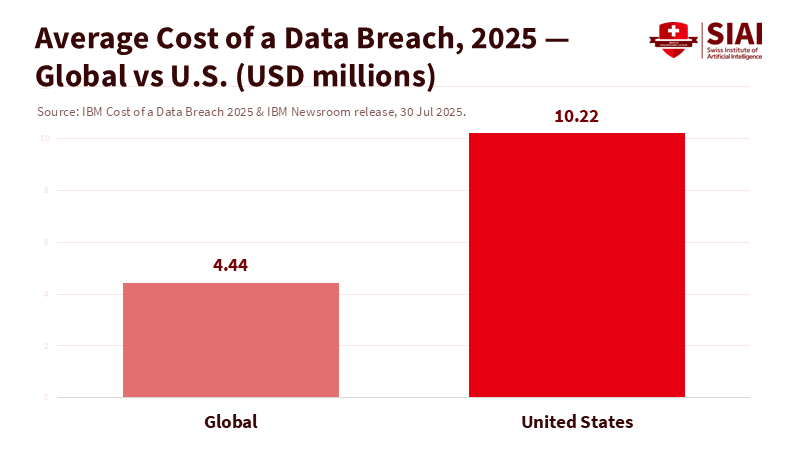

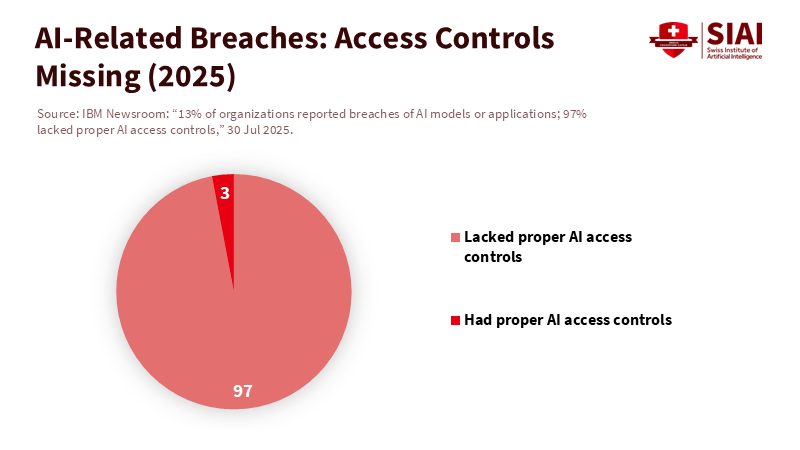

In 2025, the average cost of a U.S. data breach reached $10.22 million. Thirteen percent of surveyed organizations reported that an AI model or application was involved, yet 97% of them did not have proper AI access controls. This number should prompt us to rethink our threat model. We focus on the language model—its cleverness, its mistakes, its jailbreaks—while the real risk lies just one step away: the agent that holds keys, clicks buttons, opens files, fetches URLs, executes workflows, and impersonates us across business systems. When a seemingly "harmless" image, calendar invite, or web page provides instructions that an agent follows without question, the potential damage is not simply a bad paragraph; it is a chain of actions performed under your identity. Recent examples have demonstrated "silent hijacking" with minimal or no user interaction, involving mainstream assistants. If we continue to treat these incidents as model problems, we will continue to create superusers with inadequate controls. The solution starts by identifying the risk at its source: the LLM-based program that takes action.

From Chat to System Actor

The significant change is not about better or worse statistics, but about operations. An agent comprises prompt logic, tools and plugins, credentials, and policies. It can browse, run code, read emails, query CRMs, push tickets, edit spreadsheets, and make purchases. This combination brings traditional attack surfaces into a modern interface. Consider a support agent that reads PDFs, screenshots, and forms. A single compromised image or document can carry harmful instructions that the model unwittingly turns into actions—such as forwarding sensitive files, visiting attacker-controlled URLs, or stealing tokens. This is why image or pixel-level manipulations matter now: they don't just "trick" the model, but push a tool-enabled process to take action. Viewing this solely as a content moderation issue overlooks a larger systems problem: input handling, privilege boundaries, output filtering, and identity controls for software that takes action.

Security researchers and practitioners have started to recognize this shift. The OWASP Top 10 for LLM applications warns about prompt injection, insecure output handling, and vulnerabilities associated with plugins and third-party APIs. UK guidance on securely deploying machine-learning systems emphasizes the importance of the runtime environment, including credentials, storage, and monitoring. Attacks often combine methods and exploit areas where the software can act. Most agent incidents are not 'model failures' but failures related to identity and action: over-privileged tokens, unchecked toolchains, unrestricted browsing, and the absence of human oversight for high-risk activities. This necessitates a different solution: treating the agent like a privileged automation account with minimal privileges, outbound controls, and clear logs rather than as a chat interface with simple safeguards.

The description of credentials is particularly overlooked. Unit 42 points out that the theft or misuse of agent credentials puts every downstream system the agent can access at risk. Axios reported that "securing AI agent identities" was a significant theme at RSA this year. If your help desk agent can open HR tickets or your research agent can access lab drives and grant permissions, then agent impersonation represents a serious breach, not just a minor user interface annoyance. The central issue is simple: we connected a probabilistic planner to predictable tools and gave it the keys. The straightforward fix is to issue fewer keys, monitor the doors, and restrict the planner's access to certain areas.

What the Evidence Really Shows

Emerging evidence leads to one uncomfortable conclusion: AI agents are real targets today. In August, researchers revealed "silent hijacking" techniques that enable attackers to manipulate popular enterprise assistants with minimal interaction, thereby facilitating data theft and workflow changes. Shortly after, the trade press and professional organizations highlighted these findings: commonly used agents from major vendors can be hijacked, often by embedding malicious content in areas the agent already visits. The pattern is worryingly similar to web security—tainted inputs and over-trusted outputs—but the stakes are higher because the actor is different: software already authorized to operate within your company.

Governments and standards organizations have responded by extending traditional cyber guidelines into the agent era. A joint U.S. and international advisory on safely deploying AI systems emphasizes that defenses should cover the entire environment, not just the model interface. NIST's Generative AI Profile translates this approach into operational controls: define and test misuse scenarios, carefully restrict tool access by design, and monitor for unusual agent behavior like you would for any privileged service. These measures align with OWASP's focus on output handling and supply-chain risks—issues that arise when agents access code libraries, plugins, and external sites. None of this is groundbreaking; it's DevSecOps for a new type of automation that communicates.

The cost and prevalence of these issues underscore the urgency of addressing this issue for educators and public-sector administrators. According to IBM's 2025 study, the global average breach cost is around $4.44 million, with the U.S. average exceeding $10 million. 'Shadow AI' appeared in about 20% of breaches, adding hundreds of thousands of dollars to response costs. In a large university, if 20% of incidents now involve unauthorized or unmanaged AI tools, and even a small percentage of those incidents go through an over-privileged agent connected to student records or grant systems, the potential loss—financial, operational, and reputational—quickly adds up. A conservative estimate for a 25,000-student institution facing a breach with U.S.-typical costs suggests that the difference can mean a challenging year or a canceled program. We can debate specific numbers, but we cannot ignore the trend.

The demonstration area continues to expand. Trend Micro's research series catalogs specific weaknesses in agents, ranging from code execution misconfigurations to data theft risks concealed in external content, illustrating how these vulnerabilities can be exploited when agents fetch, execute, and write. Additionally, studies have reported that pixel-level tricks in images and seemingly harmless user interface elements can mislead agents that rely on visual models or screen readers. The message is clear: once an LLM takes on the role of a controller for tools, the relevant safeguards shift to securing those tools rather than just maintaining prompt integrity. Focusing only on jailbreak prompts misses the bigger picture; often, the most harmful attacks don't aim to outperform the model at all. Instead, they exploit the agent's permissions.

A Safer Pattern for Schools and Public Institutions

The education sector presents an ideal environment for agent risk: large data sets, numerous loosely managed SaaS tools, diverse user groups, and constant pressure to "do more with less." The same features that make agents appealing—automating admissions, drafting grant reports, monitoring procurement, or large-scale tutoring—also pose risks when guidelines are unclear. Here's a practical approach that aligns with established security norms: begin with identity, limit the keys, monitor actions, capture outputs, and involve a human in any processes that impact finances, grades, credentials, or health records. In practice, this means using agent-specific service accounts, temporary and limited tokens, clear tool allow-lists, and runtime policies that prevent file modifications, network access, or API calls beyond approved domains. When an agent needs temporary elevated access—for example, to submit a purchase order—they require a second factor or explicit human approval. This is not just "AI safety"; it's access management for a communicative RPA.

Implement that pattern with controls that educators and IT teams know well. Use NIST's GenAI profile as a checklist for procurement and deployment, map agent actions against your risk register, and develop scenarios for potential misuse, such as indirect prompt injection through student submissions, vendor documents, or public websites. Utilize the OWASP Top 10 for LLM apps to guide your testing: simulate prompt injection, ensure outputs do not activate unvetted tools, and test input variability. Follow the UK NCSC's deployment recommendations: safeguard sensitive data, run code in a secure environment, track all agent activities, and continually watch for unusual behaviors. Finally, treat agent credentials with utmost importance. If Unit 42 warns about agent impersonation, take it seriously—update keys, restrict tokens to single tools, and store them in a managed vault with real-time access. These are security practices already in place; the change lies in applying them to software that mimics user behavior.

Education leaders must also rethink their governance expectations. Shadow AI is not a moral issue; it's a procurement and enablement challenge. Staff members will adopt tools that work for them. If central IT does not provide authorized agents with clear features, people will use browser extensions and paste API keys into unapproved apps. The IBM data is crystal clear: unmanaged AI contributes to breaches and escalates costs. An effective response is to create a "campus agent catalog" of approved features, with levels of authorization: green (read-only), amber (able to write to internal systems with human oversight), and red (financial or identity actions requiring strict control). Combine this with a transparent audit process that tracks agent actions as you would for any other enterprise service. Encourage use by making the approved route the easiest option: set up pre-configured tokens, vetted toolchains, and one-click workspaces for departments. A culture of security will follow convenience.

Objections will arise. Some may argue that strict output filters and safer prompts suffice; others might insist that "our model never connects to the internet." However, documented incidents show that content-layer restrictions fail when the agent is trusted to act, and "offline" quickly becomes irrelevant once plugins and file access are included. A more thoughtful critique may center on costs and complexity. This is valid—segmented sandboxes, egress filtering, and human oversight can slow down teams. The key is focusing on impact: prioritize where agents can access finances or permissions, not where they merely suggest reading lists. The additional cost of limiting an agent that interacts with student finances is negligible compared to the expense of cleaning up after a credential-based breach. As with any modernization effort, we implement changes in stages and sequence risk reduction; we do not wait for a flawless control framework to ensure safer defaults.

Incidents in the U.S. cost double-digit millions, with a growing number tied to AI systems lacking essential access controls. The evidence has consistently pointed in one clear direction. The issue is not the sophistication of a model; it is the capability of software connected to your systems with your keys. Images, web pages, and files now serve as operational inputs that can prompt an agent to act. If we accept this perspective, the path forward is familiar. Treat agents as superusers deserving the same scrutiny we apply to service accounts and privileged automation: default to least privilege, explicitly restrict tool access, implement content origin and sanitization rules, maintain comprehensive logs, monitor for anomalies, and require human approval for high-impact actions. In education, where trust is essential and budgets are tight, this is not optional. It is necessary for unlocking significant productivity safely. Identify the risk where it exists, and we can mitigate it. If we keep viewing it as a "chatbot" issue, we will continue to pay for someone else's actions.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Axios. (2025, May 6). New cybersecurity risk: AI agents going rogue.

Cybersecurity and Infrastructure Security Agency (CISA) et al. (2024, Apr. 15). Deploying AI Systems Securely (Joint Cybersecurity Information).

Cybersecurity Dive (Jones, D.). (2025, Aug. 11). Research shows AI agents are highly vulnerable to hijacking attacks.

IBM. (2025). Cost of a Data Breach Report 2025.

IBM Newsroom. (2025, Jul. 30). 13% of organizations reported breaches of AI models or applications; 97% lacked proper AI access controls.

Infosecurity Magazine. (2025, Jun.). #Infosec2025: Concern grows over agentic AI security risks.

Kiplinger. (2025, Sept.). How AI puts company data at risk.

NCSC (UK). (n.d.). Machine learning principles: Secure deployment.

NCSC (UK). (2024, Feb. 13). AI and cyber security: What you need to know.

NIST. (2024). Artificial Intelligence Risk Management Framework: Generative AI Profile (NIST AI 600-1).

OWASP. (2023–2025). Top 10 for LLM Applications.

Palo Alto Networks Unit 42. (2025). AI Agents Are Here. So Are the Threats.

Scientific American (Béchard, D. E.). (2025, Sept.). The new frontier of AI hacking—Could online images hijack your computer?

Trend Micro. (2025, Apr. 22). Unveiling AI agent vulnerabilities—Part I: Introduction.

Trend Micro. (2025, Jul. 29). State of AI Security, 1H 2025.

Zenity Labs. (2025, May 1). RSAC 2025: Your Copilot Is My Insider.

Zenity Labs (PR Newswire). (2025, Aug. 6). AgentFlayer vulnerabilities allow silent hijacking of major enterprise.