Mind Captioning in Education: Pattern Recognition Needs Policy, Not Hype

Mind captioning maps visual brain patterns to text, but it’s pattern recognition, not mind reading.

In schools, keep it assistive only, with calibration, human-in-the-loop checks, and clear error budgets.

Adopt strict governance first: informed consent, mental-privacy protections, and audited model cards.

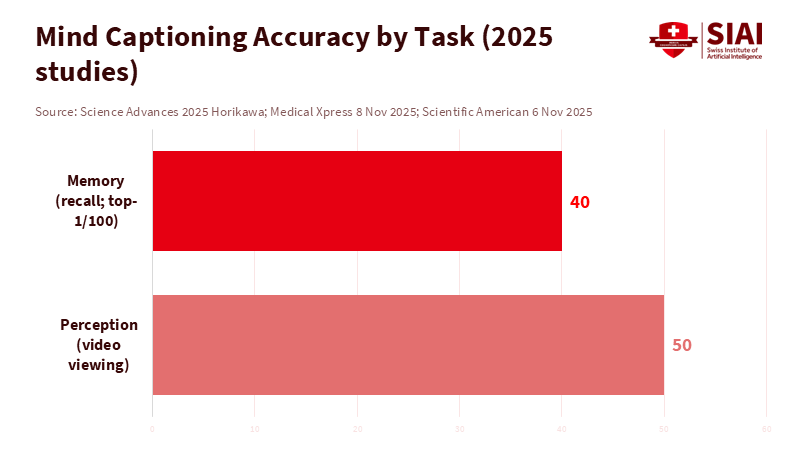

A machine that can guess your memories should make every educator think twice. In November 2025, researchers reported that a non-invasive "mind captioning" system could identify which of 100 silent video clips a person was recalling nearly 40% of the time for some individuals, without depending on the brain's language regions. Random guessing among 100 clips would succeed about 1% of the time, so this is far above chance. The model learned to connect patterns of visual brain activity to the meaning of scenes, then generated sentences that described the memories. It isn’t telepathy, and it isn’t perfect. But it clearly shows that semantic structure in the brain can be captured and translated into text. If this ability finds its way into school tools, ostensibly to help non-speaking students, assess comprehension, or personalize learning, it will come with power, risk, and the temptation to exaggerate its capabilities. Our systems excel at pattern recognition, but our policies fall short. Closing that gap will take close collaboration between engineers and neuroscientists.
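To make "above chance" concrete, here is a minimal sketch of the arithmetic. Only the 100 candidate clips and the roughly 40% hit rate come from the reporting above; the number of recall trials (`N_TRIALS`) is a hypothetical value chosen for illustration, and the binomial test is a generic check rather than the analysis the researchers ran.

```python
from math import comb


def chance_level(n_candidates: int) -> float:
    """Probability of naming the right clip by uniform random guessing."""
    return 1.0 / n_candidates


def binomial_tail(n_trials: int, k_hits: int, p: float) -> float:
    """P(X >= k_hits) when X ~ Binomial(n_trials, p)."""
    return sum(
        comb(n_trials, k) * p**k * (1 - p) ** (n_trials - k)
        for k in range(k_hits, n_trials + 1)
    )


N_CANDIDATES = 100        # from the article: 100 candidate clips
OBSERVED_ACCURACY = 0.40  # from the article: best individuals, approximate
N_TRIALS = 50             # ASSUMPTION: hypothetical trial count for illustration

p_chance = chance_level(N_CANDIDATES)
hits = round(OBSERVED_ACCURACY * N_TRIALS)
p_value = binomial_tail(N_TRIALS, hits, p_chance)

print(f"chance level: {p_chance:.2%}")
print(f"hits: {hits} of {N_TRIALS}")
print(f"probability of doing this well by guessing: {p_value:.3g}")
```

The same numbers cut both ways: a 40% hit rate is wildly unlikely under guessing, yet it still means the system names the wrong clip more often than the right one.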

Mind Captioning: Pattern Recognition, Not Mind Reading

The core idea of mind captioning is straightforward: the system maps visual representations in the brain to meaning and then to sentences. In lab settings, fMRI signals from a few participants watching or recalling short clips were turned into text that retained who did what to whom, rather than just a collection of words. This is important for classrooms because a tool that can retrieve relational meaning could, in theory, help students who cannot speak or who struggle to express what they saw. That potential is real, but it is narrow. The key term is "pattern recognition," not "mind reading." Models infer likely descriptions from brain activity, using semantic features learned from video captions. They do not pull private thoughts at will; they need consent, calibration, and context. Even the teams behind this research stress those limits and the ethical implications for mental privacy. We should adopt the same approach and draft our rules accordingly.

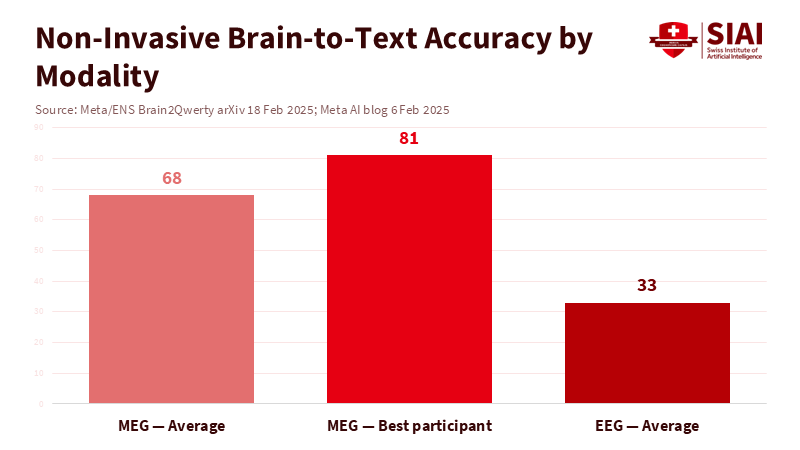

Performance demands similar attention. Related advances outline the progress and its limits. A 2023 study reconstructed the meaning of continuous language from non-invasive fMRI, showing that semantic content—rather than exact words—can be decoded when participants listen to stories. However, decoding what someone produces is more challenging. In 2025, a non-invasive “Brain2Qwerty” system translated brain activity while people typed memorized sentences, achieving an average character-level accuracy of around 68% with MEG and up to 81% for top participants; EEG was much less effective.

Invasive implants for inner speech, by comparison, have reported word or sentence accuracy in the 70% range in early trials, but they require surgery, which leaves gaps in portability and safety that still need to be addressed. None of these figures supports grading or monitoring. They argue for narrow, assistive use, open error budgets, and interdisciplinary oversight wherever mind captioning intersects with learning.

Mind Captioning in Education: Accuracy, Error Budgets, and Use Cases

If mind captioning enters schools, its first appropriate role is in assistive communication. Imagine a student with aphasia watching a short clip and having a system suggest a simple sentence, which the student can then confirm or edit. This process puts the student in control, not the model. It also aligns with existing science: mind captioning works best with seen or remembered visual content that has a clear structure, is presented under controlled timing, and is calibrated to the individual. The complex equipment, such as fMRI today and promising but not yet wearable MEG, keeps this technology in clinics and research labs for now. Claims that it can evaluate reading comprehension on the spot are premature. Even fast EEG setups that decode rapid visual events have limited accuracy, reminding us that “instant” in headlines often means processing that takes seconds to minutes with many training trials behind the scenes. No tool should be deployed where its measured error rate exceeds the margin that defines safe educational use.

Administrators should demand an explicit error budget for each application. If the goal is to generate a one-sentence summary of a video the class just watched, what rate of false descriptions is acceptable before trust is broken: 5%? 10%? For crucial decisions, the acceptable rate is zero, and because no probabilistic decoder can guarantee that, high-stakes applications are ruled out. Developers must disclose how long calibration takes per student, how long sessions last, and whether performance remains consistent across days without retraining. Results from fMRI may not apply to EEG in a school environment, and semantic decoders trained on cinematic clips may not work as well with hand-drawn diagrams. A reasonable standard is “human-in-the-loop or not at all.” The model suggests language; the learner approves it; the teacher oversees. Where timing or circumstances make that loop unfeasible, deployment should wait. Mind captioning should be treated as a tool for expression, not a silent judge of thought. This perspective safeguards students and keeps the technology focused on real needs.
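One way to turn an error budget into a checkable rule is sketched below. It assumes a pilot in which a human reviewer marks each suggested sentence as correct or false, and it compares the upper end of a 95% Wilson score interval with the budget, so that a small pilot cannot pass on a lucky streak. The function names, the pilot counts, and the choice of the Wilson interval are illustrative assumptions, not a procedure taken from any of the cited studies.

```python
from math import sqrt


def wilson_upper_bound(errors: int, trials: int, z: float = 1.96) -> float:
    """Upper end of the Wilson score interval for an error rate.

    z = 1.96 corresponds to a two-sided 95% interval.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= errors <= trials:
        raise ValueError("errors must be between 0 and trials")
    p_hat = errors / trials
    denom = 1 + z * z / trials
    centre = p_hat + z * z / (2 * trials)
    margin = z * sqrt(p_hat * (1 - p_hat) / trials + z * z / (4 * trials * trials))
    return (centre + margin) / denom


def within_error_budget(errors: int, trials: int, budget: float) -> bool:
    """True only if the plausible worst-case error rate fits the budget."""
    return wilson_upper_bound(errors, trials) <= budget


# Hypothetical pilot results: (false descriptions, reviewed suggestions, budget)
pilots = [(3, 200, 0.05), (3, 40, 0.10), (0, 1000, 0.0)]
for errors, trials, budget in pilots:
    upper = wilson_upper_bound(errors, trials)
    verdict = "pass" if within_error_budget(errors, trials, budget) else "fail"
    print(f"{errors}/{trials} errors, budget {budget:.0%}: "
          f"upper bound {upper:.1%} -> {verdict}")
```

Under this rule a budget of zero can never be met, even by a pilot with no observed errors, which is the statistical version of "no high-stakes applications."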

Governance for Mind Captioning and Neural Data in Schools

Governance has to keep pace with the technology. In 2024, Colorado passed the first U.S. law to protect consumer “neural data,” classifying it as sensitive information and requiring consent, which should be an essential part of any district procurement. In 2025, the MIND Act was introduced in the U.S. Senate; it would direct the Federal Trade Commission to study neural data governance and suggest a national framework. In November 2025, UNESCO adopted global standards for neurotechnology, emphasizing mental privacy and freedom of thought. The OECD’s 2025 Neurotechnology Toolkit pushes for flexible regulations that align with human rights. Together, these create a solid foundation: schools should treat neural signals like biometric health data, not as simple data trails. Contracts must prohibit secondary use, require default deletion, and draw a clear line between assistive communication and any form of behavior scoring or monitoring. Without that distinction, the social acceptance of mind captioning in education will vanish.

Districts can put this into practice. Require on-device or on-premise processing whenever possible; if cloud algorithms are necessary, mandate encryption during storage and transmission, strict purpose limitations, and independent audits. Insist on plain-language consent forms for students and guardians that teachers can easily explain. Request model cards disclosing training data types, subject counts, calibration needs, demographic performance differences, and failure modes, along with data sheets for each hardware sensor. Clearly define kill-switches and sunset clauses in contracts, with mandatory timelines for data deletion. Require that outputs used in learning be verifiable by students. Finally, implement red-team testing before any pilot: adversarial scenarios that test whether the system infers sensitive traits, leaks private associations, or produces inaccurate, confident sentences. Policy cannot remain just words. Procurement is policy in action; use it to establish a safe foundation for mind captioning.
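The disclosure list above can be expressed as a simple procurement checklist. The sketch below is illustrative only: the `ModelCard` class, its field names, and the example vendor values are hypothetical and do not follow any published model-card standard. It flags every disclosure a vendor has left empty.

```python
from dataclasses import dataclass, field, fields
from typing import Dict, List, Optional


@dataclass
class ModelCard:
    """Hypothetical disclosure record a district could request from a vendor."""
    training_data_types: List[str] = field(default_factory=list)
    subject_count: Optional[int] = None
    calibration_minutes_per_student: Optional[float] = None
    demographic_performance_gaps: Dict[str, float] = field(default_factory=dict)
    failure_modes: List[str] = field(default_factory=list)
    red_team_report_available: bool = False
    data_deletion_days: Optional[int] = None


def missing_disclosures(card: ModelCard) -> List[str]:
    """Return the names of fields that are unset, empty, or False."""
    missing = []
    for f in fields(card):
        value = getattr(card, f.name)
        if value is None or value is False or value == [] or value == {}:
            missing.append(f.name)
    return missing


# Made-up vendor submission, for illustration only.
vendor_card = ModelCard(
    training_data_types=["fMRI recordings", "video captions"],
    subject_count=6,
    calibration_minutes_per_student=90.0,
    failure_modes=["confident but wrong sentences"],
)

gaps = missing_disclosures(vendor_card)
print("Pilot blocked, missing:" if gaps else "All disclosures present.", gaps)
```

A district could treat a non-empty list as a hard stop: no pilot until every field is filled in and independently audited.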

Bridging Engineers and Neuroscience: A Curriculum for Evidence

The quickest way for mind captioning to fail in schools is to let engineers release a product without collaborating closely with neuroscientists or to let neuroscientists overlook classroom realities. We can address this by making co-production standard practice. Teacher-education programs should include a short, practical module on neural measurement: what fMRI, MEG, and EEG actually capture; why signal-to-noise ratios and subject variability matter; and how calibration affects performance. Recent guidance on brain-computer interface design is straightforward: neuroscience principles must inform engineering decisions and user protocols. This means setting clear hypotheses, pre-registering studies where possible, and focusing on how task design affects the decodable signal. The research frontier is moving: visual representation decoders report high top-5 accuracy in lab tasks, and semantic decoders now extract meaning from stories. Classroom tasks, however, are messier. A shared curriculum across education, neuroscience, and human-computer interaction can help maintain realistic expectations and humane experiments.

Critiques deserve responses. One critique suggests the vision is exaggerated: Meta-funded non-invasive decoders achieve only "alphabet-level" accuracy. That misinterprets the trend. The latest non-invasive text-production study using MEG achieves about 68% character accuracy on average and 81% for the top subjects; invasive systems reach similar accuracy for inner speech but at the cost of surgery. Another critique states that privacy risks overshadow benefits. Governance can help here: Colorado’s law, the proposed MIND Act, and UNESCO’s standards allow schools to establish clear boundaries. A third critique claims that decoding structure from the visual system does not equate to understanding. Agreed. That is why we restrict educational use to assistive tasks that require human confirmation and why we measure gains in agency rather than relying on mind-reading theatrics. Meanwhile, the evidence base is expanding: the mind captioning study shows solid mapping from visual meaning to text and, significantly, generalizes to memory without depending on language regions. Use that progress. Do not oversell it.

That "40% from 100" figure is our guiding principle and our cautionary tale. It shows that mind captioning can recover structured meaning from non-invasive brain data at rates well above chance. It also illustrates that the system is imperfect and probabilistic, not a peek into private thoughts. Schools should implement technology only where it is valuable and limited: for assistive purposes with consent, for tasks aligned with the science, and with a human involved. The remaining focus is governance. We should treat neural data as sensitive by default, enforce deletion and purpose limits, and expect public model cards and red-team results before any pilot enters a classroom. We should also support training that allows teachers, engineers, and neuroscientists to communicate effectively. Mind captioning will tempt us to rush the process. We must resist that. Pattern recognition is impressive. Educational policy must be even more careful, protective, and focused on dignity.

The views expressed in this article are those of the author(s) and do not necessarily reflect the official position of the Swiss Institute of Artificial Intelligence (SIAI) or its affiliates.

References

Congress of the United States. (2025). Management of Individuals’ Neural Data Act of 2025 (MIND Act). 119th Congress, S.2925. Retrieved from Congress.gov.

Ferrante, M., et al. (2024). Decoding visual brain representations from biomedical signals. Computer Methods and Programs in Biomedicine.

The Guardian. (2025, November 6). UNESCO adopts global standards on the ‘wild west’ field of neurotechnology.

Horikawa, T. (2025). Mind captioning: Evolving descriptive text of mental content from human brain activity. Science Advances.

Live Science. (2025). New brain implant can decode a person’s inner monologue.

Neuroscience News. (2025, November 7). Brain decoder translates visual thoughts into text.

OECD. (2025, July). Neurotechnology Toolkit. Paris: OECD.

Rehman, M., et al. (2024). Decoding brain signals from rapid-event EEG for visual stimuli. Frontiers in Neuroscience.

Reuters. (2024, April 18). First law protecting consumers’ brainwaves signed by Colorado governor.

Scientific American / Nature. (2025, November 6). AI Decodes Visual Brain Activity—and Writes Captions for It.

Tang, J., et al. (2023). Semantic reconstruction of continuous language from non-invasive brain recordings. Nature Neuroscience.

Yang, H., et al. (2025). Guiding principles and considerations for designing a well-grounded brain-computer interface. Frontiers in Neuroscience.

Zhang, A., et al. (2025). Brain-to-Text Decoding: A Non-invasive Approach via Typing (Brain2Qwerty).
